Real-time object detection has become a cornerstone of modern computer vision applications—from autonomous vehicles and robotics to industrial inspection and mobile AR. However, one persistent bottleneck for practitioners is the notoriously slow convergence of DETR (DEtection TRansformer)-based models. Traditional DETR variants often require hundreds of training epochs to reach competitive performance, consuming significant GPU time and delaying iteration cycles.

Enter DEIM (DETR with Improved Matching for Fast Convergence)—a streamlined training framework that slashes convergence time by ~50% while simultaneously boosting average precision (AP). Built specifically for real-time DETR architectures like RT-DETR and D-FINE, DEIM rethinks the core matching mechanism between predicted and ground-truth objects, delivering state-of-the-art results in just one day of training on a single NVIDIA 4090. For teams under tight deadlines or constrained by limited compute resources, DEIM offers a practical, drop-in solution that accelerates development without compromising accuracy.

How DEIM Solves the Slow Convergence Problem in DETR

The Challenge: Sparse Supervision in Standard O2O Matching

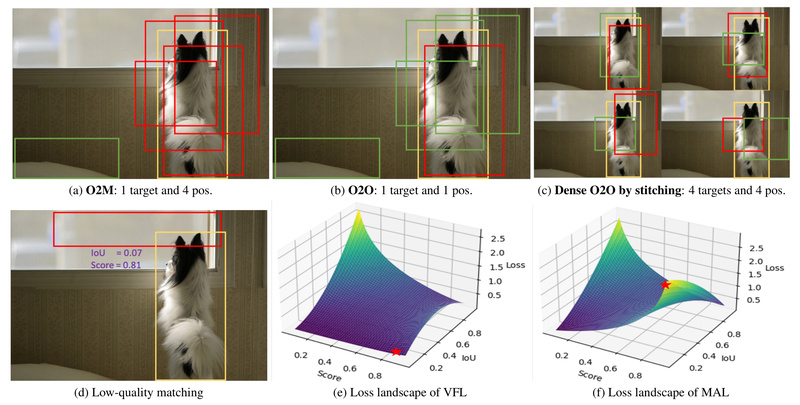

Standard DETR models use a one-to-one (O2O) bipartite matching strategy during training: each ground-truth object is matched to exactly one prediction. While elegant, this makes supervision extremely sparse, because the number of positive samples in an image equals the number of ground-truth objects, and most of the model's queries are treated as background. The effect is strongest in images with few objects. With only a handful of positive samples per image, gradients are weak and optimization becomes inefficient, requiring 300+ epochs for full convergence.
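To make the sparsity concrete, here is a minimal sketch of one-to-one matching. It is a simplification under stated assumptions: the matching cost is IoU only (real DETR-style matchers also use classification and box-regression terms), and the assignment is found by brute force over permutations rather than with the Hungarian algorithm. The function names `iou` and `one_to_one_match` are illustrative, not part of DEIM.

```python
from itertools import permutations

def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def one_to_one_match(pred_boxes, gt_boxes):
    """Return {gt_index: pred_index} maximizing total IoU.

    Brute force, so only suitable for tiny examples; assumes there are
    at least as many predictions as ground-truth boxes.
    """
    best, best_score = (), -1.0
    for perm in permutations(range(len(pred_boxes)), len(gt_boxes)):
        score = sum(iou(pred_boxes[p], gt_boxes[g]) for g, p in enumerate(perm))
        if score > best_score:
            best, best_score = perm, score
    return {g: p for g, p in enumerate(best)}

# Six predictions (queries), but only two ground-truth objects.
preds = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60),
         (48, 52, 58, 62), (90, 90, 95, 95), (20, 20, 30, 30)]
gts = [(0, 0, 10, 10), (50, 50, 60, 60)]

matches = one_to_one_match(preds, gts)
positives = len(matches)              # one positive per ground-truth object
negatives = len(preds) - positives    # every other query is background
```

Even though predictions 1 and 3 overlap the objects closely, only one prediction per object receives a positive label; the rest are supervised as background.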

DEIM’s Dual Innovations: Dense O2O + Matchability-Aware Loss

DEIM tackles this through two synergistic innovations:

Dense One-to-One Matching

Dense O2O keeps the one-to-one matching rule but increases the number of targets in each training image. It does so with standard augmentations that combine several images into one (e.g., mosaic and mixup), so each training sample contains more ground-truth objects and therefore yields more positive matches. This densifies the supervision signal per training step without introducing new data or complex pipelines.
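As an illustration of how augmentation can raise the number of targets per training image, the sketch below merges the box annotations of four images into a single 2x2 mosaic. It is a simplified assumption of how such an augmentation handles targets (equal-sized square tiles, no random crop or jitter, boxes only, no pixels); `mosaic_targets` is a hypothetical helper, not a function from the DEIM codebase.

```python
def mosaic_targets(per_image_boxes, tile_size):
    """Combine the boxes of four equally sized images into one 2x2 mosaic.

    per_image_boxes: four lists of (x1, y1, x2, y2) boxes, each in the pixel
    coordinates of its own tile_size x tile_size image.
    Returns boxes in the coordinates of the mosaic after it has been resized
    back down to tile_size x tile_size.
    """
    if len(per_image_boxes) != 4:
        raise ValueError("expected boxes for exactly four images")
    # Top-left, top-right, bottom-left, bottom-right tile offsets.
    offsets = [(0, 0), (tile_size, 0), (0, tile_size), (tile_size, tile_size)]
    merged = []
    for boxes, (dx, dy) in zip(per_image_boxes, offsets):
        for x1, y1, x2, y2 in boxes:
            # Shift into the 2x canvas, then halve to return to tile_size.
            merged.append(((x1 + dx) / 2, (y1 + dy) / 2,
                           (x2 + dx) / 2, (y2 + dy) / 2))
    return merged

# Four source images carrying 2, 1, 3, and 1 objects respectively.
boxes_per_image = [
    [(10, 10, 100, 100), (200, 200, 300, 320)],
    [(0, 0, 100, 100)],
    [(5, 5, 50, 50), (60, 60, 120, 120), (300, 300, 400, 400)],
    [(320, 320, 640, 640)],
]
dense_targets = mosaic_targets(boxes_per_image, tile_size=640)
```

A single training sample now carries seven targets instead of one to three, so one-to-one matching produces seven positive samples for that image.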

Matchability-Aware Loss (MAL)

However, denser matching also produces more low-quality or ambiguous matches, which could degrade performance. To counter this, DEIM introduces Matchability-Aware Loss (MAL), a loss function designed to optimize matches across different quality levels. MAL ties the classification target of each matched prediction to the quality of the match (how well the predicted box overlaps its ground-truth box), so that low-quality matches still provide a meaningful training signal rather than being ignored or misleading the model.
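One way to make a classification loss sensitive to match quality is to use a binary cross-entropy against a soft target derived from the IoU of the match. The sketch below follows that idea and is written under loud assumptions: the exact functional form, the `gamma` default, and the name `matchability_aware_loss` are illustrative and should be checked against the DEIM paper and code before use.

```python
import math

def matchability_aware_loss(p, q, is_positive, gamma=1.5):
    """Per-prediction loss for one class score (illustrative form).

    p: predicted class probability in (0, 1).
    q: IoU between the matched predicted box and its ground-truth box.
    is_positive: True if this prediction was matched to a ground-truth box.
    gamma: exponent shaping the soft target (assumed default).
    """
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)  # keep the logarithms finite
    if is_positive:
        # Soft target q**gamma: a poor match asks for low confidence,
        # a good match asks for high confidence.
        target = q ** gamma
        return -(target * math.log(p) + (1 - target) * math.log(1 - p))
    # Unmatched prediction: penalize confident false positives more.
    return -(p ** gamma) * math.log(1 - p)

# A low-quality match (IoU 0.1) predicted with high vs. low confidence.
overconfident = matchability_aware_loss(0.9, 0.1, True)
calibrated = matchability_aware_loss(0.1, 0.1, True)
```

With this form, a low-IoU match that is predicted with high confidence incurs a larger loss than the same match predicted with low confidence, so low-quality matches still shape the classifier instead of contributing almost nothing.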

Together, these components enable DEIM to converge in half the epochs while achieving higher AP across the board.

Performance That Speaks for Itself

DEIM’s efficiency gains are validated across multiple model families and hardware platforms:

- DEIM-D-FINE-X: Achieves 56.5% AP at 78 FPS on an NVIDIA T4 GPU—outperforming most real-time detectors without extra data.

- DEIM-RT-DETRv2 (M*): Reaches 53.2% AP in just 24 hours on a single RTX 4090.

- Ultra-light variants: The Atto model (0.49M parameters) delivers 23.8 AP at 320×320 resolution, making it ideal for mobile deployment.

These results suggest that DEIM is not just faster but also more accurate. Every model size from Atto to X is reported to gain +0.3 to +0.8 AP over its non-DEIM counterpart, indicating that the accelerated training does not come at the cost of accuracy.

When Should You Use DEIM?

DEIM is particularly valuable in the following scenarios:

- Rapid prototyping: Need to iterate quickly on detector design? DEIM roughly halves the training needed to reach a given accuracy, which shortens each experiment cycle.

- Limited GPU budget: Training on a single consumer-grade GPU (e.g., 4090)? DEIM makes high-accuracy real-time detection feasible.

- Edge or mobile deployment: With models like Atto, Pico, and Nano, DEIM supports resource-constrained environments without re-architecting your pipeline.

- No access to extra data: DEIM achieves its gains using only standard COCO data and augmentations—no external datasets or distillation tricks required.

Getting Started: A Practical Workflow

Using DEIM is straightforward, especially if you’re already familiar with COCO-style object detection setups.

1. Environment Setup

Create a Python 3.11 environment and install dependencies:

conda create -n deim python=3.11.9
conda activate deim
pip install -r requirements.txt

2. Dataset Preparation

Organize your data in COCO format—either using the official COCO2017 dataset or a custom dataset with instances_train.json and instances_val.json. For custom datasets, ensure remap_mscoco_category: False in your config.
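Before training on a custom dataset, it can help to sanity-check the annotation file. The sketch below validates the core structure of a COCO-style detection dictionary (`images`, `annotations`, `categories`, and `[x, y, width, height]` boxes). `check_coco` is a hypothetical helper written for this article, not a DEIM utility, and it covers only a few common mistakes.

```python
def check_coco(dataset):
    """Return a list of problems found in a COCO-style detection dict."""
    problems = []
    for key in ("images", "annotations", "categories"):
        if not isinstance(dataset.get(key), list):
            problems.append(f"missing or non-list key: {key}")
    if problems:
        return problems
    image_ids = {img["id"] for img in dataset["images"]}
    category_ids = {cat["id"] for cat in dataset["categories"]}
    for ann in dataset["annotations"]:
        if ann["image_id"] not in image_ids:
            problems.append(f"annotation {ann['id']}: unknown image_id")
        if ann["category_id"] not in category_ids:
            problems.append(f"annotation {ann['id']}: unknown category_id")
        x, y, w, h = ann["bbox"]  # COCO boxes are [x, y, width, height]
        if w <= 0 or h <= 0:
            problems.append(f"annotation {ann['id']}: non-positive box size")
    return problems

# Minimal example with one image, one category, and one box.
example = {
    "images": [{"id": 1, "file_name": "0001.jpg", "width": 640, "height": 480}],
    "categories": [{"id": 1, "name": "widget"}],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1,
         "bbox": [100, 120, 50, 40], "area": 2000, "iscrowd": 0},
    ],
}
problems = check_coco(example)
```

In practice you would load instances_train.json and instances_val.json with `json.load` and pass the resulting dictionaries to the same function.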

3. Training & Evaluation

Launch training with pre-configured YAML files:

torchrun --nproc_per_node=4 train.py -c configs/deim_dfine/deim_hgnetv2_l_coco.yml --use-amp

Testing, fine-tuning, and batch size adjustments are equally simple, with clear guidance provided for scaling learning rates and updating EMA settings.
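As a rough illustration of what scaling for a new batch size can look like, the sketch below applies the common linear learning-rate rule and a matching EMA-decay adjustment. Both rules are assumptions here, not a statement of DEIM's official guidance, and the base values in the example are made up; follow the repository's own instructions for real runs.

```python
def scale_for_batch_size(base_lr, base_ema_decay, base_batch, new_batch):
    """Scale learning rate and EMA decay for a new total batch size.

    Assumes the linear scaling heuristic: with k times the batch size,
    use k times the learning rate, and let the EMA forget k times as
    fast per step so it averages over a similar number of samples.
    """
    k = new_batch / base_batch
    new_lr = base_lr * k
    new_ema_decay = 1 - (1 - base_ema_decay) * k
    return new_lr, new_ema_decay

# Hypothetical base settings, doubled from batch size 32 to 64.
lr, ema_decay = scale_for_batch_size(
    base_lr=0.0004, base_ema_decay=0.9999, base_batch=32, new_batch=64
)
```

Because each step now sees twice as many samples, the learning rate doubles and the EMA decay moves from 0.9999 to roughly 0.9998.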

4. Customization

Need smaller inputs for edge devices? Just change the size in your transform config and eval_spatial_size—DEIM handles the rest.

Deployment and Evaluation Tooling

DEIM includes a full suite of production-ready tools:

- ONNX/TensorRT export: Convert models for deployment with one command.

- Multi-backend inference: Run inference via PyTorch, ONNX Runtime, or TensorRT on images or videos.

- Benchmarking: Measure FLOPs, parameters, and TensorRT latency out of the box.

- Visualization: Use FiftyOne to inspect predictions and debug performance.

This end-to-end tooling ensures you can move seamlessly from research to production.

Limitations and Scope

DEIM is not a general-purpose detection framework. It is specifically optimized for DETR-family real-time detectors like RT-DETR and D-FINE. It assumes COCO-style annotations and works best with the provided configurations. If you’re using YOLO, Faster R-CNN, or non-DETR architectures, DEIM won’t apply directly. Additionally, while custom datasets are supported, they must strictly follow the COCO JSON format.

Summary

DEIM redefines what’s possible in real-time object detection by solving DETR’s biggest pain point: slow convergence. Through its intelligent combination of Dense O2O matching and Matchability-Aware Loss, it delivers faster training, higher accuracy, and seamless deployment—all without extra data or architectural changes. For project leads, ML engineers, and researchers under time or resource constraints, DEIM offers a compelling, ready-to-use upgrade that accelerates development while pushing performance boundaries.

With official support for models ranging from massive (X) to mobile-optimized (Atto), and full tooling for export, benchmarking, and visualization, DEIM is not just a research idea—it’s a production-ready solution for the modern vision team.