If you’re evaluating object detection frameworks for a new computer vision project, you’ve likely encountered the rise of DETR (Detection Transformer), an approach that frames detection as end-to-end set prediction with a transformer and removes hand-crafted components such as anchor generation and non-maximum suppression. But while DETR and its many variants (Deformable DETR, DINO, MaskDINO, etc.) have shown impressive results, the ecosystem around them remains fragmented: inconsistent codebases, incompatible training setups, and missing baselines make fair comparison and rapid prototyping unnecessarily difficult.

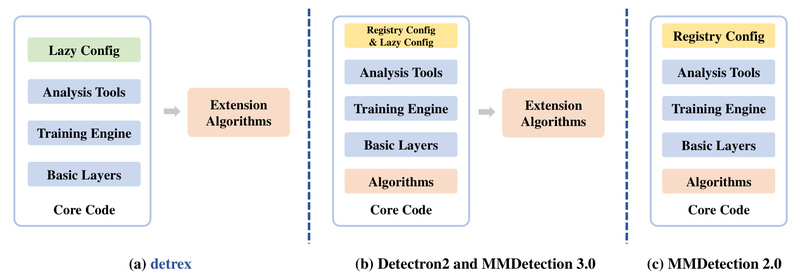

Enter detrex—an open-source, highly modular, and lightweight benchmarking codebase built specifically for DETR-based models. Developed by IDEA Research and built on Detectron2, detrex unifies over 20 state-of-the-art Detection Transformer algorithms under one roof, offering standardized implementations, strong baselines, and a flexible configuration system. Whether you’re a researcher benchmarking a new architecture, an engineer integrating a detection model into a production pipeline, or a student exploring modern vision transformers, detrex removes the friction that often slows down innovation.

Why detrex Matters for Technical Decision-Makers

Solving Fragmentation in DETR Research

Before detrex, adopting a DETR variant meant wrestling with codebases that differed in structure, dependencies, and training protocols—even for models published by the same team. This inconsistency made it hard to reproduce results, compare performance fairly, or build upon existing work confidently.

detrex directly addresses this by providing a unified codebase where every supported model—from the original DETR (ECCV 2020) to recent advances like DINO (ICLR 2023), Focus-DETR (ICCV 2023), and MaskDINO (CVPR 2023)—shares the same core infrastructure. This standardization ensures that performance gains truly reflect algorithmic improvements, not differences in training tricks or code quality.

Strong, Reproducible Baselines Out of the Box

One of detrex’s standout contributions is its optimized baselines. Through careful hyperparameter tuning (learning rate schedules, augmentation strategies, optimizer settings, etc.), the detrex team has boosted performance across most supported models by 0.2 to 1.1 AP over original implementations.

For example, if you’re evaluating DINO for a high-precision detection task, detrex doesn’t just give you a working version. It gives you a tuned baseline, validated on COCO and ready for transfer to your own data. That can save a substantial amount of hyperparameter search and lets you focus on your core problem.

Key Technical Strengths

Modular Design for Rapid Customization

detrex decomposes the DETR pipeline into well-defined, swappable components: backbones, transformer encoders/decoders, matcher modules, loss functions, and post-processors. This modularity means you can:

- Swap a ResNet backbone for EVA-01 or EVA-02 with a single config change

- Plug in a new query generation strategy (e.g., dynamic anchor boxes from DAB-DETR)

- Extend the one-to-one Hungarian matching to a group-wise assignment over several query groups (as in Group-DETR)

Because the architecture mirrors conceptual building blocks, even newcomers can understand and modify models without digging through monolithic code.
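To make the matcher component concrete, here is a minimal sketch of the one-to-one assignment that DETR-style models rely on: each ground-truth box is paired with a distinct query so that the total matching cost is minimal. This is a brute-force illustration for toy sizes, not detrex's matcher; the function name `match_one_to_one` and the cost values are made up for the example. Real implementations commonly solve the same problem with SciPy's `linear_sum_assignment`.

```python
from itertools import permutations


def match_one_to_one(cost):
    """Return (pairs, total_cost) for the cheapest one-to-one assignment.

    cost[q][g] is the cost of assigning query q to ground-truth box g.
    Assumes there are at least as many queries as ground-truth boxes.
    Brute force over all choices, so only suitable for tiny inputs.
    """
    num_queries = len(cost)
    num_targets = len(cost[0])
    best_pairs, best_total = None, float("inf")
    # Try every ordered choice of distinct queries, one per target.
    for queries in permutations(range(num_queries), num_targets):
        total = sum(cost[q][g] for g, q in enumerate(queries))
        if total < best_total:
            best_pairs = [(q, g) for g, q in enumerate(queries)]
            best_total = total
    return best_pairs, best_total


# Three queries, two ground-truth boxes (hypothetical costs).
cost = [
    [0.9, 0.2],
    [0.1, 0.8],
    [0.5, 0.6],
]
pairs, total = match_one_to_one(cost)
print(sorted(pairs), round(total, 2))
```

Here query 1 is matched to box 0 and query 0 to box 1, while query 2 stays unmatched and would be trained as "no object". A group-wise scheme would run the same assignment independently inside each group of queries.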

Lightweight and Developer-Friendly Tooling

Built on Detectron2’s LazyConfig system, detrex uses clean, Python-native configuration files—no more nested YAML or opaque registries. Configs are executable Python scripts, enabling dynamic logic, inheritance, and easy debugging.
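The idea behind a lazy, Python-native config can be sketched without any framework: a config records which object to build and with which arguments, and construction is deferred until after you have applied overrides. The helpers `lazy_call` and `build` below are hypothetical stand-ins written for this illustration, as are the `Backbone` and `Detector` classes; Detectron2 ships its own utilities for this pattern (`LazyCall` and `instantiate` in `detectron2.config`), whose behavior is richer than this toy version.

```python
def lazy_call(target, **kwargs):
    """Record a call as plain data instead of executing it (hypothetical helper)."""
    return {"_target_": target, **kwargs}


def build(node):
    """Recursively turn recorded calls into real objects."""
    if isinstance(node, dict) and "_target_" in node:
        kwargs = {k: build(v) for k, v in node.items() if k != "_target_"}
        return node["_target_"](**kwargs)
    return node


class Backbone:
    def __init__(self, depth):
        self.depth = depth


class Detector:
    def __init__(self, backbone, num_queries):
        self.backbone = backbone
        self.num_queries = num_queries


# The "config" is ordinary Python data: nothing is constructed yet.
model_cfg = lazy_call(
    Detector,
    backbone=lazy_call(Backbone, depth=50),
    num_queries=900,
)

# Overrides are plain assignments made before building.
model_cfg["backbone"]["depth"] = 101
model_cfg["num_queries"] = 300

model = build(model_cfg)
print(type(model).__name__, model.backbone.depth, model.num_queries)
```

Because the config is plain Python, a variant config can import a base config, change a couple of fields, and be inspected in a debugger like any other object.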

The training engine is streamlined for efficiency, with built-in support for:

- Mixed-precision training (FP16)

- Model Exponential Moving Average (EMA)

- Activation checkpointing to reduce memory

- ONNX export for deployment (community-contributed)
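Of the features listed above, model EMA is the easiest to show in a few lines. The sketch below keeps a running average of the weights using the standard update `ema = decay * ema + (1 - decay) * current`. It uses a plain dict of floats in place of real tensors, and `ema_update` is a hypothetical helper, not a detrex function.

```python
def ema_update(ema_params, model_params, decay=0.999):
    """Blend the current weights into the running average, in place."""
    for name, value in model_params.items():
        ema_params[name] = decay * ema_params[name] + (1.0 - decay) * value
    return ema_params


# Toy example: the "model" weight jumps to 0.0, the EMA follows slowly.
ema = {"w": 1.0}
for step_weights in ({"w": 0.0}, {"w": 0.0}):
    ema_update(ema, step_weights, decay=0.9)
print(ema)
```

The averaged weights are typically the ones used for evaluation, since they smooth out step-to-step noise in the trained weights.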

Additionally, utilities for weight conversion (from original DETR repos), COCO result visualization, and custom dataset training lower the barrier to real-world use.

Ideal Use Cases

1. Benchmarking and Model Selection

Need to choose between DINO, Deformable DETR, and Conditional DETR for your drone-based object detection system? detrex lets you train and evaluate all three under identical conditions (same data loader, same optimizer, same evaluation protocol), so differences in AP reflect the models rather than the setup.

2. Prototyping New DETR Variants

Building a novel query refinement module? detrex’s modular interface lets you implement your idea in a few dozen lines of code and plug it into any existing backbone or decoder. The unified loss and matcher interfaces ensure compatibility.

3. Deploying State-of-the-Art Detection in Products

Teams integrating detection into robotics, autonomous systems, or medical imaging can leverage detrex’s strong baselines and export tools (like ONNX support) to move from research to deployment faster. Models like MaskDINO even support joint detection and segmentation—ideal for applications requiring pixel-level understanding.

4. Academic Research and Education

For students and educators, detrex offers a consistent playground to learn how Detection Transformers work. Tutorials cover everything from config customization to result analysis, making it easier to grasp advanced concepts through hands-on experimentation.

Getting Started: Practical First Steps

- Installation: Requires PyTorch 1.10+ (1.12 recommended) and standard vision dependencies. Follow the official installation guide—typically a few pip commands and a git clone.

- Run Inference: Use pre-trained weights from the Model Zoo (e.g., DINO with EVA-02 backbone) and a provided config to run inference on your images in minutes.

- Train on Custom Data: Convert your dataset to COCO format, register it, point the config's dataloader at it, and launch training with the standard training script.

- Extend a Model: Copy an existing config, modify the transformer or matcher section, and train your variant without touching core code.
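For the custom-data step, the COCO detection format is a single JSON file with three top-level lists: `images`, `annotations`, and `categories`. Boxes are stored as `[x, y, width, height]` in pixels, with `(x, y)` the top-left corner. The converter below is a minimal sketch; the function `to_coco`, its input tuples, and the file name `frame_0001.jpg` are assumptions made for the example, so adapt them to however your labels are stored.

```python
import json


def to_coco(images, boxes, class_names):
    """Build a COCO-style detection dict.

    images: list of (file_name, width, height)
    boxes:  list of (image_index, class_index, x, y, w, h) in pixels,
            where (x, y) is the top-left corner of the box.
    """
    coco = {
        "images": [
            {"id": i, "file_name": name, "width": w, "height": h}
            for i, (name, w, h) in enumerate(images, start=1)
        ],
        "categories": [
            {"id": i, "name": name}
            for i, name in enumerate(class_names, start=1)
        ],
        "annotations": [],
    }
    for ann_id, (img_idx, cls_idx, x, y, w, h) in enumerate(boxes, start=1):
        coco["annotations"].append({
            "id": ann_id,
            "image_id": img_idx + 1,      # ids above start at 1
            "category_id": cls_idx + 1,
            "bbox": [x, y, w, h],
            "area": w * h,
            "iscrowd": 0,
        })
    return coco


coco = to_coco(
    images=[("frame_0001.jpg", 640, 480)],
    boxes=[(0, 0, 100, 120, 50, 80)],
    class_names=["drone"],
)
print(json.dumps(coco["annotations"][0]))
```

Once written to disk with `json.dump`, a file like this can be registered with Detectron2's `register_coco_instances` helper and referenced by name from the config.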

The codebase includes detailed tutorials on weight conversion, visualization, and performance analysis, so most common tasks have a documented starting point.

Limitations and Considerations

While powerful, detrex is not a general-purpose detection framework. It focuses exclusively on DETR-family models, so if your project relies on Faster R-CNN, YOLO, or other non-transformer detectors, you’ll need a different tool (like Detectron2 or MMDetection).

It also assumes familiarity with standard object detection concepts (e.g., AP metrics, COCO format) and PyTorch-based training workflows. Beginners may need to pair it with foundational tutorials on detection basics.
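As a quick refresher on the metric side, AP is built on intersection over union (IoU) between predicted and ground-truth boxes; the COCO protocol averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The helper below is a minimal sketch for axis-aligned boxes in `(x1, y1, x2, y2)` form; `box_iou` is written for this example and is not a detrex function.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Corners of the overlapping rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 10x10 boxes overlapping in a 5x10 strip: 50 / 150.
print(box_iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

A prediction counts as a true positive at a given threshold only if its IoU with an unmatched ground-truth box of the same class meets that threshold.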

Finally, detrex requires PyTorch 1.10 or higher, which may pose upgrade challenges in legacy environments. However, this ensures access to modern features like improved AMP and better CUDA support.

Summary

detrex solves a critical pain point in modern computer vision: the lack of a standardized, high-quality benchmark for DETR-based models. By unifying implementations, delivering strong baselines, and offering a modular, lightweight codebase, it empowers researchers and engineers to evaluate, prototype, and deploy transformer-based detectors with confidence and speed. If your work involves object detection, instance segmentation, or pose estimation with transformers, detrex is a solid foundation for reproducible, state-of-the-art work.