Generative Adversarial Networks (GANs) have long been at the forefront of realistic image synthesis—but using them effectively in research or product development has been fraught with challenges. Reproducing results is hard. Benchmarks are inconsistent. Evaluation metrics vary across papers. And implementing robust, modern GANs from scratch eats up precious engineering time.

Enter StudioGAN: an open-source PyTorch library that solves these problems by providing a unified, modular, and rigorously validated framework for training, evaluating, and benchmarking GANs. Built by researchers at POSTECH, StudioGAN doesn’t just offer implementations—it delivers a reproducible ecosystem where every model runs under the same training and evaluation protocol, enabling fair, apples-to-apples comparisons across architectures, conditioning strategies, losses, and regularization techniques.

For technical decision-makers, ML engineers, and research teams, StudioGAN eliminates the “reinvent-the-wheel” tax that slows down innovation in generative modeling. Whether you’re prototyping a new conditional GAN, validating a novel loss function, or comparing your method against StyleGAN3 or BigGAN on ImageNet, StudioGAN gives you a reliable foundation—with pre-trained checkpoints, standardized metrics, and support for high-resolution datasets out of the box.

Why StudioGAN Stands Out

A Modular Playground for Modern GANs

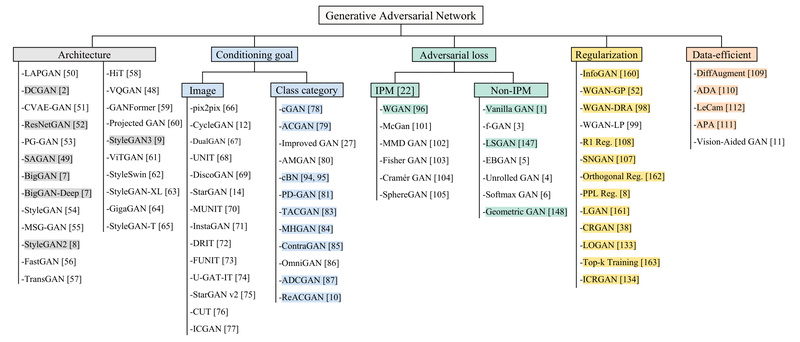

StudioGAN treats every component of a GAN as a plug-and-play module:

- 7 core architectures, including DCGAN, ResNetGAN, BigGAN, and StyleGAN2/3

- 9 conditioning methods, from projection discriminators (PD) to conditional BatchNorm (cBN) and cAdaIN

- 4 adversarial losses: Vanilla, Least Squares, Hinge, and Wasserstein

- 13 regularization techniques, including LeCam, CR, and spectral normalization

- 3 differentiable augmentations, such as DiffAugment and APA
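Of the components listed above, the four adversarial losses are compact enough to sketch in plain Python. The version below is a minimal illustration using the textbook formulations on raw discriminator outputs (logits); the function names and the `kind` strings are assumptions made for this example and are not taken from StudioGAN's code, and the least-squares scaling follows one common convention.

```python
import math


def _mean(values):
    return sum(values) / len(values)


def _softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def d_loss(kind, real_logits, fake_logits):
    """Discriminator loss on raw outputs for a real and a fake batch."""
    if kind == "vanilla":
        # -log(sigmoid(real)) - log(1 - sigmoid(fake))
        return (_mean([_softplus(-r) for r in real_logits])
                + _mean([_softplus(f) for f in fake_logits]))
    if kind == "least_squares":
        # Push real outputs toward 1 and fake outputs toward 0.
        return 0.5 * (_mean([(r - 1.0) ** 2 for r in real_logits])
                      + _mean([f ** 2 for f in fake_logits]))
    if kind == "hinge":
        return (_mean([max(0.0, 1.0 - r) for r in real_logits])
                + _mean([max(0.0, 1.0 + f) for f in fake_logits]))
    if kind == "wasserstein":
        # Critic maximizes real - fake, so it minimizes fake - real.
        return _mean(fake_logits) - _mean(real_logits)
    raise ValueError(f"unknown loss: {kind}")


def g_loss(kind, fake_logits):
    """Generator loss on the discriminator's outputs for generated samples."""
    if kind == "vanilla":
        # Non-saturating form: -log(sigmoid(fake)).
        return _mean([_softplus(-f) for f in fake_logits])
    if kind == "least_squares":
        return 0.5 * _mean([(f - 1.0) ** 2 for f in fake_logits])
    if kind in ("hinge", "wasserstein"):
        return -_mean(fake_logits)
    raise ValueError(f"unknown loss: {kind}")
```

Because all four variants share the same two-function interface, swapping one for another is a one-word change, which is the kind of substitution a modular framework makes cheap.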

This modularity is managed via simple YAML configuration files, allowing users to mix and match components without rewriting code. Want to test BigGAN with a contrastive loss and DiffAugment? Just adjust the config—no code changes needed.
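To make the mix-and-match idea concrete, here is a minimal sketch of how a base configuration and a small set of overrides might be combined once the YAML has been loaded into dictionaries. The keys and values are hypothetical placeholders chosen for illustration; they are not StudioGAN's actual config schema, and `merge_config` is not a StudioGAN function.

```python
import copy


def merge_config(base, overrides):
    """Return a new dict equal to `base` with `overrides` applied recursively."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Descend into nested sections instead of replacing them wholesale.
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical keys, for illustration only.
base = {
    "model": {"architecture": "biggan", "conditioning": "projection"},
    "loss": {"adversarial": "hinge"},
    "augmentation": {"name": None},
}

# "BigGAN with a contrastive loss and DiffAugment" expressed as overrides.
experiment = merge_config(
    base,
    {
        "model": {"conditioning": "contrastive"},
        "augmentation": {"name": "diffaugment"},
    },
)
```

The base dictionary is left untouched, so several experiment variants can be derived from the same starting point without interfering with one another.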

Unified Training and Evaluation Protocol

Unlike ad-hoc GAN implementations scattered across GitHub, StudioGAN trains all models—from DCGAN to StyleGAN3-r—using a single, consistent pipeline. This eliminates hidden “tricks” or environmental differences that often skew benchmark results.

Even more critically, StudioGAN evaluates models using 8 metrics (IS, FID, Precision, Recall, Density, Coverage, Intra-class FID, and CAS) across 5 backbones: InceptionV3, ResNet50, SwAV, DINO, and Swin Transformer. It also supports both “clean” and “architecture-friendly” evaluation modes, addressing recent critiques that standard metrics are biased toward certain model families.
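For reference, FID is the Fréchet distance between two Gaussians fitted to backbone features of real and generated images. The symbols below follow the standard definition of the metric and are not names from StudioGAN's code:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

Here $\mu_r, \Sigma_r$ are the mean and covariance of the features extracted from real images, and $\mu_g, \Sigma_g$ are the same statistics for generated images; lower is better. Because the features come from the chosen backbone, a score computed with one backbone is not comparable to a score computed with another, which is why fixing the backbone matters for fair comparison.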

Large-Scale, Multi-Model Benchmark

StudioGAN’s benchmark isn’t limited to GANs. It also includes scores for diffusion models (e.g., ADM) and autoregressive transformers (e.g., MaskGIT, RQ-Transformer), making it one of the few frameworks that enables cross-paradigm comparison.

The benchmark covers standard datasets at multiple scales:

- CIFAR10 (32×32)

- Baby/Papa/Grandpa ImageNet & ImageNet (64×64 to 128×128)

- AFHQv2 (512×512)

- FFHQ (1024×1024)

All pre-trained models and evaluation results are publicly available on Hugging Face Hub, so you can reproduce or extend findings immediately.

Who Should Use StudioGAN—and When

StudioGAN is ideal for:

- Research labs that need trustworthy baselines for new GAN algorithms

- Startups and product teams building generative tools (e.g., avatar creation, synthetic data generation) who want to avoid months of debugging legacy code

- ML engineers tasked with evaluating whether a GAN-based solution meets quality thresholds for deployment

- Graduate students beginning work in generative modeling who need a clean, well-documented codebase

Use cases include:

- Rapidly prototyping conditional image generators for domain-specific applications

- Running ablation studies on loss functions or regularization methods

- Validating that a new architecture improves both fidelity and diversity (via FID, Density, Coverage)

- Comparing GANs against non-GAN generative models on the same metric and dataset

Getting Started: Flexible, Scalable, and Reproducible

StudioGAN is designed for ease of use without sacrificing flexibility.

Configuration and Training

Experiments are controlled via YAML files. To train a BigGAN on CIFAR10 on a single GPU with mixed precision (the -mpc flag):

CUDA_VISIBLE_DEVICES=0 python3 src/main.py -t -cfg configs/CIFAR10/BigGAN.yaml -data ./data -save ./results -mpc

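For high-resolution datasets like FFHQ, multi-GPU DistributedDataParallel (DDP) training is supported, here combined with synchronized batch norm (-sync_bn) and mixed precision (-mpc):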

export MASTER_ADDR="localhost"; export MASTER_PORT=8888; CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python3 src/main.py -t -DDP -sync_bn -mpc -cfg configs/FFHQ/StyleGAN3-r.yaml ...
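When runs are launched from a script rather than typed by hand, the two commands above can be assembled programmatically. The sketch below is a hypothetical helper, not part of StudioGAN; it uses only the flags and environment variables that appear in the commands above and leaves out any arguments the DDP example elides.

```python
import shlex


def build_train_command(config, data_dir=None, save_dir=None, *,
                        ddp=False, sync_bn=False, mixed_precision=False):
    """Build the argv list for a training run (-t) of src/main.py."""
    argv = ["python3", "src/main.py", "-t"]
    if ddp:
        argv.append("-DDP")
    if sync_bn:
        argv.append("-sync_bn")
    if mixed_precision:
        argv.append("-mpc")
    argv += ["-cfg", config]
    if data_dir is not None:
        argv += ["-data", data_dir]
    if save_dir is not None:
        argv += ["-save", save_dir]
    return argv


def build_env(gpu_ids, master_addr=None, master_port=None):
    """Build the extra environment variables for a run."""
    env = {"CUDA_VISIBLE_DEVICES": ",".join(str(i) for i in gpu_ids)}
    if master_addr is not None:
        env["MASTER_ADDR"] = master_addr
    if master_port is not None:
        env["MASTER_PORT"] = str(master_port)
    return env


# Single-GPU CIFAR10 run with mixed precision.
single = build_train_command(
    "configs/CIFAR10/BigGAN.yaml", "./data", "./results", mixed_precision=True
)
single_env = build_env([0])

# Eight-GPU FFHQ run; remaining arguments are omitted, as in the example above.
multi = build_train_command(
    "configs/FFHQ/StyleGAN3-r.yaml", ddp=True, sync_bn=True, mixed_precision=True
)
multi_env = build_env(range(8), master_addr="localhost", master_port=8888)

print(shlex.join(single))
print(shlex.join(multi))
```

To actually launch a run, the list could be passed to `subprocess.run(argv, env={**os.environ, **env}, check=True)`. Keeping the command as a list rather than a single string avoids shell quoting problems when paths contain spaces.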

Evaluation and Analysis

Beyond standard metrics, StudioGAN includes rich analysis tools:

- K-nearest neighbor visualization to check whether generated images are near-copies of training samples

- Linear interpolation for latent space smoothness

- Frequency analysis to detect high-frequency artifacts

- Semantic factorization (SeFa) for disentangled editing in BigGAN
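Of the tools above, linear interpolation is the simplest to illustrate. The sketch below shows only the latent-space part under simple assumptions: latents are plain lists of floats, and the function names are made up for this example rather than taken from StudioGAN. In practice each interpolated latent would be fed to a trained generator and the resulting images laid out side by side.

```python
def lerp(z_start, z_end, t):
    """Linearly interpolate between two latent vectors at position t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)]


def interpolation_path(z_start, z_end, steps):
    """Return `steps` evenly spaced latents from z_start to z_end, inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2 to include both endpoints")
    if len(z_start) != len(z_end):
        raise ValueError("latent vectors must have the same dimension")
    return [lerp(z_start, z_end, i / (steps - 1)) for i in range(steps)]


# Toy 2-D latents; real latent vectors are much higher-dimensional.
path = interpolation_path([0.0, 2.0], [1.0, 0.0], steps=5)
```

If the images decoded along such a path change gradually rather than jumping between unrelated outputs, that is usually read as a sign of a smooth latent space.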

You can also evaluate external image folders—useful when comparing your model against StudioGAN’s benchmark without retraining.

Reproducibility First

The team verified that most implemented GANs match or closely approximate original paper results. Preprocessing uses high-quality resizers (e.g., PIL.LANCZOS), and evaluation avoids common pitfalls like incorrect Inception network variants.

Limitations and Practical Notes

While StudioGAN sets a new standard for GAN reproducibility, users should be aware of a few constraints:

- Not all models are perfectly reproduced: PD-GAN, ACGAN, LOGAN, SAGAN, and BigGAN-Deep show some divergence from original reports—likely due to undocumented training nuances.

- Licensing: StyleGAN2/3 components are under NVIDIA’s license, not MIT. Ensure compliance if redistributing.

- Hardware demands: Training at FFHQ resolution (1024×1024) is expensive. Expect to need a multi-GPU node (the DDP example above uses 8 GPUs) with substantial VRAM per device.

- Learning curve: While modular, understanding the interplay between conditioning, loss, and architecture still requires GAN fundamentals.

These are not flaws but realistic boundaries that help set expectations.

Summary

StudioGAN solves the fragmentation problem that has long plagued the GAN ecosystem. By offering a standardized, modular, and thoroughly benchmarked platform, it empowers teams to focus on innovation rather than infrastructure. Whether you’re validating a hypothesis, shipping a generative feature, or teaching advanced ML, StudioGAN provides the rigor, flexibility, and scale needed to move fast—without compromising reproducibility.

With its support for modern architectures, multi-backbone evaluation, and cross-model benchmarks, StudioGAN isn’t just another GAN repo—it’s the foundation for the next generation of fair, reliable generative research and development.