Imagine asking a computer vision system to “segment the object that makes the woman stand higher” or “show me the camera lens best suited for close-up shots.” Traditional segmentation models would fail—they’re trained to recognize predefined categories like “person,” “car,” or “chair,” not to interpret implicit intent or apply real-world knowledge.

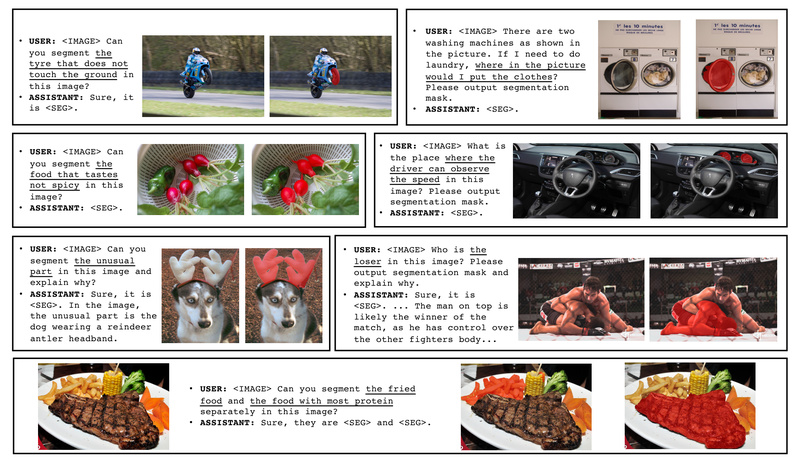

LISA (Large Language Instructed Segmentation Assistant) changes that. Built on the foundation of multimodal large language models (LLMs) and the Segment Anything Model (SAM), LISA introduces a new paradigm: reasoning segmentation. Instead of just labeling pixels, LISA understands complex, context-rich instructions, reasons about them using world knowledge, and outputs both a precise segmentation mask and a natural-language explanation.

This capability bridges a critical gap between human-like visual reasoning and pixel-level understanding—making LISA uniquely suited for applications where user intent is nuanced, ambiguous, or deeply contextual.

Why Reasoning Segmentation Matters

Conventional segmentation tools operate in a closed-world setting: they only recognize objects they’ve been explicitly trained to detect. This limits their usefulness in real-world scenarios where users don’t say “segment the ladder”—they say “segment what helps her reach the shelf.”

LISA redefines the task. Given an image and an open-ended, implicit query, it:

- Interprets the underlying intent,

- Applies commonsense or factual knowledge (e.g., “Jack Ma is the founder of Alibaba”),

- Locates the correct region in the image,

- Generates a binary segmentation mask, and

- Explains its reasoning in plain English.

This transforms segmentation from a passive labeling task into an active, interactive reasoning process—closer to how humans perceive and communicate about visual scenes.

Core Capabilities That Solve Real Problems

Handles Complex Reasoning and World Knowledge

LISA can answer questions like “Who was the president of the US in this image?” even if the person isn’t labeled as “Obama” or “Trump” in any training dataset. It leverages its language model’s vast knowledge base to connect textual concepts with visual evidence.

Delivers Explanatory Outputs

Unlike black-box models, LISA doesn’t just return a mask—it tells you why. For example:

“Sure, [SEG]. The unusual part is the dog wearing a reindeer antler headband, which is atypical for real dogs.”

This transparency builds trust and enables debugging—critical for enterprise and assistive applications.

Supports Multi-Turn Visual Conversations

LISA isn’t limited to one-off queries. It can maintain context across dialogue turns, allowing users to refine requests interactively—ideal for dynamic interfaces or accessibility tools.

Strong Zero-Shot Performance

Remarkably, even when trained only on standard (non-reasoning) segmentation and VQA datasets, LISA demonstrates robust zero-shot reasoning ability. Fine-tuning on just 239 reasoning examples further boosts performance, showing high data efficiency.

Practical Use Cases for Builders and Decision-Makers

- Intelligent User Interfaces: Enable apps that respond to vague or contextual requests (e.g., “highlight what I should click next” in a tutorial).

- Accessibility Tools: Help visually impaired users understand complex scenes by answering questions like “Where can I wash my hands in this restroom?”

- Enterprise Visual QA Systems: Integrate into customer support or internal tools to analyze diagrams, product images, or facility layouts using natural language.

- Rapid Prototyping for Research: Test hypotheses involving semantic reasoning without building custom pipelines from scratch.

Getting Started Without a PhD

You don’t need to be a researcher to use LISA. The team provides ready-to-use models on Hugging Face, including LISA-13B-llama2-v1 and its explanatory variant.

Quick Inference

Using the provided chat.py script, you can run LISA locally with just a few commands:

CUDA_VISIBLE_DEVICES=0 python chat.py --version='xinlai/LISA-13B-llama2-v1' --load_in_4bit

Then provide a prompt and an image path:

- Prompt: “Where can the driver see the car speed in this image? Please output segmentation mask.”

- Image:

example.jpg

LISA responds with a mask token [SEG] and a textual explanation.

Hardware-Friendly Deployment

Thanks to quantization support:

- 4-bit mode runs on a single GPU with 12GB VRAM (e.g., RTX 3060),

- 8-bit mode needs ~16GB,

- Full 16-bit precision requires ~30GB.

A lightweight demo (app.py) is also included for local web-based testing.

Limitations and Practical Considerations

While powerful, LISA isn’t a universal replacement for all segmentation tasks:

- Not for high-precision domains: In medical imaging or industrial inspection, where pixel-perfect boundaries of known classes are required, specialized models may outperform LISA.

- Prompt and image sensitivity: Performance depends on clear images and well-phrased instructions. Ambiguous prompts or low-quality visuals can lead to errors.

- Limited reasoning training data: The core model was fine-tuned on only 239 reasoning examples. For domain-specific tasks (e.g., legal document analysis), additional fine-tuning may be necessary.

Still, for open-world, intent-driven segmentation, LISA sets a new standard.

Summary

LISA represents a significant leap in multimodal AI—combining the world knowledge and reasoning of LLMs with precise visual segmentation. It solves a real pain point: the inability of traditional systems to understand what users actually mean, not just what they literally say.

For technical decision-makers, developers, and researchers building next-generation visual interfaces, assistive technologies, or intelligent agents, LISA offers a uniquely flexible and explainable solution. With accessible deployment options and strong zero-shot capabilities, it lowers the barrier to integrating advanced reasoning into real-world applications.

If your project involves interpreting implicit visual requests, LISA isn’t just an option—it’s a breakthrough worth exploring.