In today’s AI landscape, large language models (LLMs) excel at solving complex tasks—but only when carefully guided by humans. This dependency creates bottlenecks: crafting precise prompts, managing multi-step workflows, and coordinating actions across systems often require significant manual effort. What if AI agents could collaborate autonomously, maintain context over time, and evolve through interaction—just like human teams?

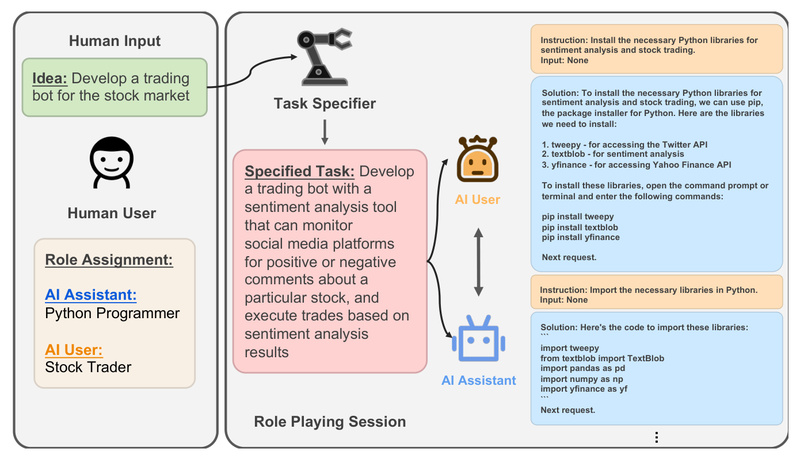

Enter CAMEL (Communicative Agents for "Mind" Exploration of Large Language Model Society), an open-source framework that grew out of research presented at NeurIPS 2023 and is designed to enable scalable, self-coordinating societies of AI agents. Rather than relying on a single agent that can stall on ambiguous, multi-step tasks, CAMEL lets teams of role-playing agents work together on tasks ranging from code generation to customer support, with minimal human intervention.

For technical decision-makers, product leads, and AI researchers, CAMEL offers a practical path to automate intricate workflows, generate high-quality synthetic data, and simulate collaborative intelligence—all grounded in reproducible, code-first design principles.

Why CAMEL Stands Out: Core Design Principles

CAMEL isn’t just another agent library—it’s built on four foundational principles that directly address real-world pain points in AI system development.

Evolvability Through Interaction and Learning

CAMEL agents don’t just execute static instructions. As they interact with environments and generate conversational data, they produce trajectories that can be checked against verifiable outcomes and then used for reinforcement learning or supervised fine-tuning. This makes CAMEL well suited to iterative improvement, whether you’re refining a trading bot or optimizing a research assistant team.
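To make "verifiable trajectories" concrete, here is a minimal sketch in plain Python (not CAMEL’s API) of filtering agent episodes with a verifiable reward and turning the survivors into fine-tuning records. The `Trajectory` class, the exact-match reward, and the record format are all hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass
class Trajectory:
    """One hypothetical agent episode: the task, the agent's reasoning, and its final answer."""
    task: str
    reasoning: str
    final_answer: str
    expected: str  # ground truth that makes the trajectory verifiable


def verifiable_reward(traj: Trajectory) -> float:
    """Binary reward: 1.0 if the final answer matches the ground truth, else 0.0."""
    return 1.0 if traj.final_answer.strip() == traj.expected.strip() else 0.0


def to_sft_records(trajectories: list[Trajectory]) -> list[dict]:
    """Keep only trajectories that pass verification and format them for fine-tuning."""
    records = []
    for traj in trajectories:
        if verifiable_reward(traj) == 1.0:
            records.append({
                "instruction": traj.task,
                "response": f"{traj.reasoning}\nAnswer: {traj.final_answer}",
            })
    return records


trajectories = [
    Trajectory("What is 12 * 7?", "12 * 7 = 84.", "84", "84"),
    Trajectory("What is 15 + 27?", "15 + 27 = 41.", "41", "42"),  # wrong answer, filtered out
]
records = to_sft_records(trajectories)
print(len(records))  # → 1
```

For reinforcement learning, the same `verifiable_reward` value could be used directly as the training signal instead of as a filter.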

Massive Scalability: Up to 1 Million Agents

Many multi-agent demos involve only two or three participants. CAMEL is engineered for scale: its architecture supports simulations with up to 1 million agents, enabling researchers to study emergent behaviors and uncover scaling laws in complex, dynamic environments. That kind of study is critical for understanding a system’s long-term reliability and capabilities.

Stateful Memory for Multi-Step Reasoning

Unlike stateless chatbots that treat each request in isolation, CAMEL agents maintain memory across interactions. This allows them to carry context forward, track task progress, and execute sophisticated, multi-phase workflows (such as drafting a technical report, validating sources, and summarizing findings) without losing coherence.
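One common way to keep a long workflow coherent is to pin the task while keeping only a sliding window of recent turns. The sketch below is a hypothetical, dependency-free illustration of that idea; CAMEL ships its own memory modules, and `TaskMemory` is not their implementation.

```python
from collections import deque


class TaskMemory:
    """Hypothetical memory: pins the task and keeps a sliding window of recent turns."""

    def __init__(self, task: str, window_size: int) -> None:
        self.task = task
        # A deque with maxlen silently drops the oldest turn once the window is full
        self._recent: deque = deque(maxlen=window_size)

    def write(self, role: str, content: str) -> None:
        self._recent.append({"role": role, "content": content})

    def get_context(self) -> list[dict]:
        """Build the context for the next model call: the pinned task plus recent turns."""
        pinned = {"role": "system", "content": f"Task: {self.task}"}
        return [pinned, *self._recent]


memory = TaskMemory("Draft a technical report, validate sources, summarize findings", window_size=2)
memory.write("assistant", "Report drafted with three sections.")
memory.write("assistant", "Sources in section 2 validated.")
memory.write("assistant", "Summary written.")
for message in memory.get_context():
    print(message["role"], "|", message["content"])
```

The oldest turn falls out of the window, but the task itself never does, so the agent always knows what it is working toward.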

Code-as-Prompt: Clarity, Control, and Reproducibility

Every function, comment, and configuration in CAMEL serves as a prompt. This “code-as-prompt” philosophy ensures that agent behavior is both human-readable and machine-interpretable. The result? Greater control over agent alignment and easier debugging, testing, and sharing across teams.
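A concrete form of code-as-prompt is turning a well-documented function into a tool description an LLM can read: the docstring becomes the tool’s description and the type hints become its parameter schema. CAMEL’s tool wrappers build on a similar idea, but `function_to_tool_schema` and `search_papers` below are hypothetical, simplified sketches, not CAMEL’s implementation.

```python
import inspect


def search_papers(query: str, max_results: int = 5) -> list:
    """Search an academic index for papers matching a query.

    Args:
        query: Keywords describing the research topic.
        max_results: Maximum number of papers to return.
    """
    return []  # stub: a real tool would call a search service here


def function_to_tool_schema(func) -> dict:
    """Derive a JSON-schema-style tool description from a function's signature and docstring."""
    type_names = {str: "string", int: "integer", float: "number", bool: "boolean", list: "array"}
    doc = inspect.getdoc(func) or ""
    summary, _, rest = doc.partition("\n\n")  # first paragraph describes the tool itself

    # Collect "name: description" lines from the Args section
    arg_docs = {}
    in_args = False
    for line in rest.splitlines():
        stripped = line.strip()
        if stripped == "Args:":
            in_args = True
        elif in_args and ":" in stripped:
            name, text = stripped.split(":", 1)
            arg_docs[name.strip()] = text.strip()

    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {
            "type": type_names.get(param.annotation, "string"),
            "description": arg_docs.get(name, ""),
        }
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults must be supplied

    return {
        "name": func.__name__,
        "description": summary,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


schema = function_to_tool_schema(search_papers)
print(schema["parameters"]["required"])  # → ['query']
```

Because the schema is derived from the code, a clearer docstring directly produces a clearer prompt for the agent.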

Real Problems CAMEL Solves

CAMEL turns these principles into practical tools. Here’s how it addresses concrete challenges across industries:

Automate Repetitive, Multi-Step Workflows

Need to analyze financial reports, scrape competitor websites, and generate executive summaries? Instead of chaining brittle scripts, deploy a CAMEL agent society: one agent researches, another validates data, and a third drafts the output—coordinating dynamically via natural language.
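The research, validate, and draft hand-off can be sketched with stub agents that pass natural-language messages to one another. This is a hypothetical illustration: the agent functions stand in for LLM-backed agents, the figures are placeholders, and the fixed order is a simplification of the dynamic coordination CAMEL performs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    sender: str
    content: str


# Hypothetical stand-ins for LLM-backed agents. Each one reads the previous
# message and replies in natural language; a real agent would call a model here.
def researcher(msg: Message) -> Message:
    return Message("researcher", f"Findings on {msg.content}: [placeholder figures]")


def validator(msg: Message) -> Message:
    # Toy check: accept the research only if it actually reports findings
    verdict = "VALID" if msg.content.startswith("Findings") else "NEEDS_REVIEW"
    return Message("validator", f"{verdict} | {msg.content}")


def writer(msg: Message) -> Message:
    verdict, _, body = msg.content.partition(" | ")
    if verdict == "VALID":
        return Message("writer", f"Executive summary. {body}")
    return Message("writer", "Summary withheld pending review.")


def run_pipeline(task: str, agents: list[Callable[[Message], Message]]) -> list[Message]:
    """Pass each agent's reply to the next agent and record the full transcript."""
    transcript = [Message("user", task)]
    for agent in agents:
        transcript.append(agent(transcript[-1]))
    return transcript


transcript = run_pipeline("the Q3 financial report", [researcher, validator, writer])
for m in transcript:
    print(f"{m.sender}: {m.content}")
```

Keeping the full transcript makes it easy to audit which agent introduced an error.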

Generate High-Quality Training Data at Scale

Fine-tuning LLMs requires massive, structured datasets. CAMEL automates this by simulating conversations between role-playing agents (e.g., “Python developer” and “product manager”) to produce realistic instruction-following dialogues. These datasets—available on Hugging Face—cover code, math, science, and more.
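A simple way to get broad coverage in synthetic dialogues is to enumerate combinations of roles and tasks, then hand each combination to a pair of role-playing agents. The sketch below shows only the enumeration step; the role lists, task templates, and spec format are invented for illustration.

```python
import itertools
import json

# Hypothetical role and task lists; a real run would use many more of each
assistant_roles = ["Python developer", "Data scientist"]
user_roles = ["Product manager", "Stock trader"]
task_templates = [
    "Help the {user} build a tool for their daily work",
    "Explain to the {user} how to automate a weekly report",
]


def build_session_specs(assistant_roles, user_roles, task_templates):
    """Enumerate every (assistant role, user role, task) combination as a session spec."""
    specs = []
    for assistant, user, template in itertools.product(assistant_roles, user_roles, task_templates):
        specs.append({
            "assistant_role": assistant,
            "user_role": user,
            "task": template.format(user=user.lower()),
        })
    return specs


specs = build_session_specs(assistant_roles, user_roles, task_templates)
print(len(specs))  # → 8 (2 x 2 x 2)

# One JSON object per line, a common format for fine-tuning pipelines
jsonl = "\n".join(json.dumps(spec) for spec in specs)
print(jsonl.splitlines()[0])
```

Each spec would then seed one role-playing session whose conversation becomes a training example.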

Build Smarter Customer Support with Agentic RAG

Traditional RAG pipelines retrieve documents and generate a response in a single pass, with no dedicated step that checks the answer. CAMEL extends this with agentic RAG: a dedicated retrieval agent fetches relevant documents, a reasoning agent synthesizes answers, and a critic agent validates accuracy. Splitting the work this way can make enterprise chatbots noticeably more reliable.
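The three-role split can be sketched without any dependencies. This is a toy illustration, not CAMEL’s API: word-overlap scoring stands in for embedding search, the "reasoning agent" simply quotes the top passage where a real system would call an LLM, and the knowledge base is made up.

```python
import re

# Hypothetical knowledge base for a support bot
DOCUMENTS = {
    "refunds": "Refunds are issued within 14 days of a return being received.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "Hardware is covered by a one-year limited warranty.",
}


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieval_agent(question: str, docs: dict, k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(docs.values(), key=lambda text: len(q & tokenize(text)), reverse=True)
    return ranked[:k]


def reasoning_agent(question: str, passages: list[str]) -> str:
    """Stand-in for an LLM: answer by quoting the best passage."""
    return passages[0] if passages else "I don't know."


def critic_agent(answer: str, passages: list[str], threshold: float = 0.8) -> bool:
    """Accept the answer only if most of its words are supported by the retrieved passages."""
    answer_tokens = tokenize(answer)
    support = set().union(*(tokenize(p) for p in passages))
    return bool(answer_tokens) and len(answer_tokens & support) / len(answer_tokens) >= threshold


def answer_question(question: str, docs: dict) -> str:
    passages = retrieval_agent(question, docs)
    draft = reasoning_agent(question, passages)
    # Only answers the critic accepts reach the customer
    return draft if critic_agent(draft, passages) else "Escalating to a human agent."


print(answer_question("How long do refunds take?", DOCUMENTS))
```

The critic is what distinguishes this from single-pass RAG: an unsupported draft is escalated instead of being sent.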

Simulate Teams for Research and Development

Want to test how a software team would build a trading algorithm? CAMEL lets you instantiate agents as a “stock trader” and a “Python programmer” who collaborate in real time. This simulation capability accelerates prototyping and reveals gaps in task decomposition before human engineers get involved.
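The core of a two-agent role-play is a turn loop: the user-role agent issues an instruction, the assistant-role agent answers, and the loop stops on a termination marker or a turn limit. In the sketch below, scripted replies stand in for LLM calls, and the `<CAMEL_TASK_DONE>` marker is modeled on the termination token described in the CAMEL paper; treat the exact string and all function names as assumptions.

```python
TASK_DONE = "<CAMEL_TASK_DONE>"  # assumed termination marker, used here for illustration


def scripted_agent(lines):
    """Hypothetical stand-in for an LLM agent: returns canned replies, then signals completion."""
    replies = iter(lines)

    def reply(transcript):
        return next(replies, TASK_DONE)

    return reply


def role_play(user_agent, assistant_agent, task, max_turns=10):
    """Alternate instruction and solution turns until the user agent ends the task."""
    transcript = [("system", f"Task: {task}")]
    for _ in range(max_turns):
        instruction = user_agent(transcript)
        transcript.append(("user", instruction))
        if TASK_DONE in instruction:
            break
        transcript.append(("assistant", assistant_agent(transcript)))
    return transcript


trader = scripted_agent([
    "Instruction: Fetch daily closing prices for a stock.",
    "Instruction: Compute a 20-day moving average.",
])
programmer = scripted_agent([
    "Solution: Download the prices with a market-data API.",
    "Solution: Apply a 20-day rolling mean to the closing prices.",
])
transcript = role_play(trader, programmer, "Develop a trading bot for the stock market")
for role, text in transcript:
    print(f"{role}: {text}")
```

The turn limit matters in practice: without it, two agents that never agree the task is done would keep consuming API calls.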

Who Should Use CAMEL—and When

CAMEL shines in scenarios requiring collaboration, context retention, and autonomy. Ideal users include:

- AI Researchers studying emergent behaviors in multi-agent systems or developing benchmarks for agent cooperation.

- Product Teams building autonomous workflows—like managing Airbnb listings, analyzing PowerPoint decks, or monitoring social media through simulated agent societies.

- ML Engineers who need structured, role-based conversational data for fine-tuning or alignment training.

- Technical Leaders seeking to reduce dependency on manual prompting in complex AI pipelines.

If your use case involves single-turn queries or purely deterministic automation, CAMEL may be overkill. But for any task requiring multi-step reasoning, tool use, or inter-agent coordination, it’s a compelling fit.

Getting Started Is Surprisingly Simple

Despite its advanced capabilities, CAMEL prioritizes accessibility. You can run your first agent in under five minutes:

- Install via PyPI:

```bash
pip install camel-ai
```

- Add tool support (e.g., web search):

```bash
pip install 'camel-ai[web_tools]'
```

- Set the `OPENAI_API_KEY` environment variable to your OpenAI API key, then run:

```python
from camel.agents import ChatAgent
from camel.models import ModelFactory
from camel.toolkits import SearchToolkit
from camel.types import ModelPlatformType, ModelType

# Create a GPT-4o model backend; temperature 0.0 makes outputs more deterministic
model = ModelFactory.create(
    model_platform=ModelPlatformType.OPENAI,
    model_type=ModelType.GPT_4O,
    model_config_dict={"temperature": 0.0},
)

# Expose DuckDuckGo search as a tool the agent can call
search_tool = SearchToolkit().search_duckduckgo

agent = ChatAgent(model=model, tools=[search_tool])

# Send one user message and print the agent's reply
response = agent.step("What is CAMEL-AI?")
print(response.msgs[0].content)
```

From there, CAMEL’s extensive Cookbooks guide you through building agent societies, integrating RAG, generating Chain-of-Thought data, and deploying local models—all with clear, executable examples.

Limitations and Practical Considerations

CAMEL isn’t a magic bullet. Key considerations include:

- LLM Dependency: Core reasoning relies on external models (e.g., OpenAI, Anthropic, or open-source LLMs). This introduces latency, cost, and potential rate-limiting at scale.

- Prompt & Role Design Matters: Poorly defined roles or vague instructions lead to misaligned agent behavior. Success hinges on thoughtful task decomposition and inception prompting (as introduced in the original paper).

- Infrastructure Overhead: Simulating thousands of agents demands compute resources and efficient orchestration—though CAMEL’s modular design helps manage this.
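Since so much depends on role and prompt design, it helps to generate inception prompts from a template and reject blank fields before any agent runs. The template wording below is loosely modeled on the style of the paper’s prompts but is illustrative, not the original text; `build_inception_prompts` is a hypothetical helper.

```python
# Illustrative templates: each fixes one agent's role, its partner's role, and the shared task
ASSISTANT_TEMPLATE = (
    "Never forget you are a {assistant_role} and I am a {user_role}. Never flip roles! "
    "We are collaborating to complete this task: {task}. "
    "I will give you instructions; answer each one with a specific solution "
    "that starts with 'Solution:'."
)
USER_TEMPLATE = (
    "Never forget you are a {user_role} and I am a {assistant_role}. Never flip roles! "
    "You will instruct me to complete this task: {task}. "
    "Give one instruction at a time, starting with 'Instruction:'. "
    "When the task is complete, reply only with <CAMEL_TASK_DONE>."
)


def build_inception_prompts(assistant_role: str, user_role: str, task: str) -> dict:
    """Return system prompts for both agents, refusing to build them from blank fields."""
    fields = {"assistant_role": assistant_role, "user_role": user_role, "task": task}
    blank = [name for name, value in fields.items() if not value.strip()]
    if blank:
        # Vague or missing roles are a common cause of misaligned agents
        raise ValueError(f"Missing prompt fields: {', '.join(blank)}")
    return {
        "assistant": ASSISTANT_TEMPLATE.format(**fields),
        "user": USER_TEMPLATE.format(**fields),
    }


prompts = build_inception_prompts(
    "Python programmer", "Stock trader", "Develop a trading bot for the stock market"
)
print(prompts["assistant"])
```

Validating inputs this early is cheap compared with discovering a misaligned role halfway through a long, billed conversation.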

That said, these constraints are common across advanced agent frameworks. CAMEL mitigates them through tool integration, memory management, and community support (via Discord and GitHub).

Summary

CAMEL offers a practical foundation for autonomous AI collaboration. By combining scalable multi-agent simulation, stateful memory, and a code-first philosophy, it enables teams to build systems that reason, adapt, and cooperate without constant human babysitting. Whether you’re generating training data, automating business workflows, or probing the frontiers of agent-based AI, CAMEL provides the scaffolding to move fast, responsibly and reproducibly.