In the landscape of spoken language processing, accurately identifying who is speaking—across recordings, meetings, or voice-based interfaces—remains a critical yet challenging task. Enter 3D-Speaker, an open-source toolkit developed by Alibaba’s DAMO Academy that brings state-of-the-art speaker verification, speaker recognition, and speaker diarization capabilities within reach of developers, researchers, and product teams—without requiring deep expertise in speech modeling.

Built around novel architectures like ERes2Net and CAM++, and backed by extensive benchmarking on public datasets (VoxCeleb, CNCeleb) as well as the project’s own 3D-Speaker dataset, this toolkit delivers high accuracy while supporting both supervised and self-supervised learning paradigms. Whether you’re authenticating users via voice, analyzing multi-person conversations, or building multilingual voice assistants, 3D-Speaker offers production-ready models, clear recipes, and multimodal extensions out of the box.

Why 3D-Speaker Stands Out

Research-Backed Architectures with Proven Performance

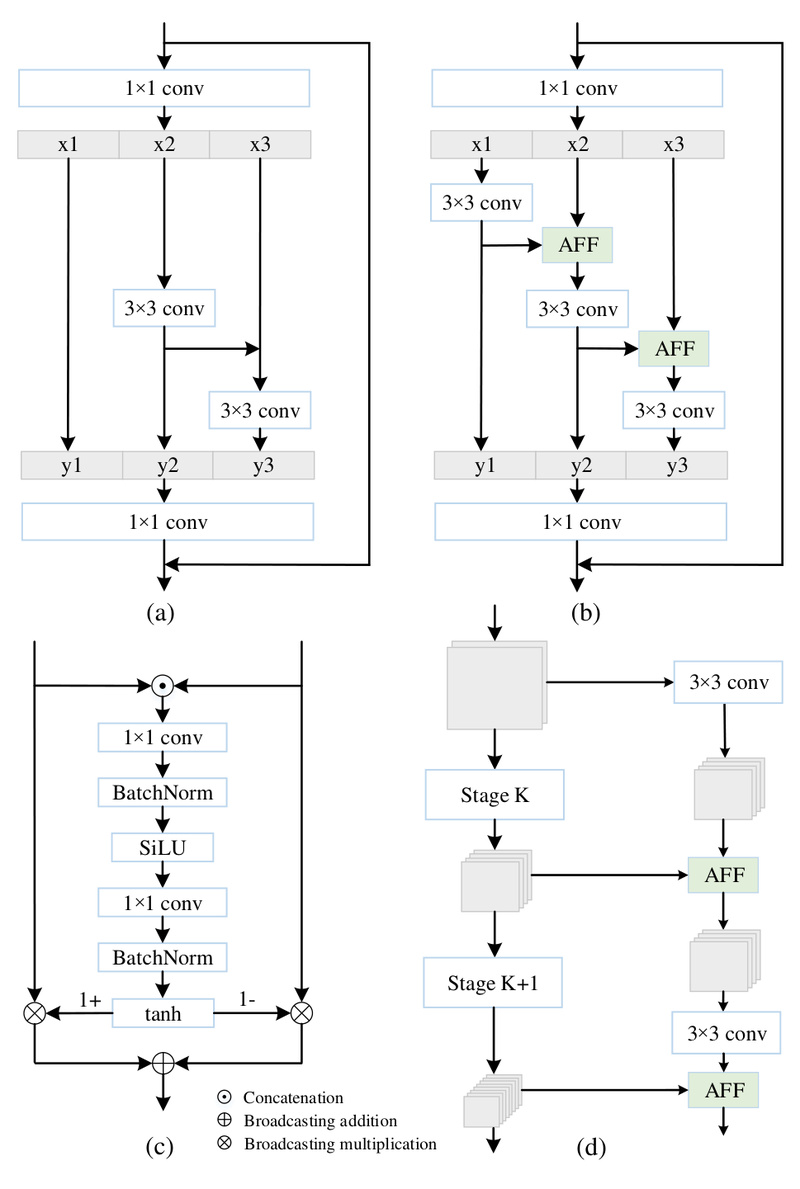

At the core of 3D-Speaker lies ERes2Net (Enhanced Res2Net), introduced in the paper “An Enhanced Res2Net with Local and Global Feature Fusion for Speaker Verification.” Unlike traditional approaches that fuse multi-scale features through simple summation or concatenation, ERes2Net employs Local Feature Fusion (LFF) and Global Feature Fusion (GFF)—both enhanced with attention mechanisms—to better capture speaker-discriminative cues across time and frequency.

The results speak for themselves:

- ERes2Net-large achieves 0.52% EER on VoxCeleb1-O, outperforming established baselines like ECAPA-TDNN (0.86%) and ResNet34 (1.05%).

- On the Chinese-centric CNCeleb benchmark, ERes2NetV2 hits 6.14% EER, the best among all listed models.

- For speaker diarization, 3D-Speaker’s system achieves 10.30% DER on Aishell-4—beating pyannote.audio (12.2%) and DiariZen_WavLM (11.7%).

These aren’t just academic numbers—they translate to fewer false accepts in security systems and cleaner speaker labels in meeting transcripts.

Full-Spectrum Speaker Analysis Toolkit

3D-Speaker isn’t limited to verification. It supports a complete pipeline for speaker-centric tasks:

- Supervised speaker verification (CAM++, ERes2Net, ECAPA-TDNN)

- Self-supervised verification via SDPN and RDINO, useful when labeled speaker data is scarce

- Audio- and video-based speaker diarization, including optional overlap detection

- Language identification, enhanced with phonetic-aware training for higher accuracy

This breadth means teams can reuse the same codebase and infrastructure across multiple product features—reducing integration overhead and maintenance costs.

Real-World Use Cases for Technical Decision-Makers

Secure Voice Authentication

Financial services, smart devices, and enterprise access systems increasingly rely on voice biometrics. With 3D-Speaker, you can deploy sub-1% EER models like ERes2NetV2 or CAM++ with just a few lines of inference code—ideal for low-friction, high-security user verification.

Meeting Intelligence and Call Analytics

For platforms recording multi-party conversations (e.g., Zoom, Teams, or internal corporate systems), speaker diarization is essential to turn raw audio into structured, speaker-attributed transcripts. 3D-Speaker’s diarization pipeline—featuring VAD, segmentation, embedding extraction, and clustering—delivers competitive DER across diverse datasets, including real-world Chinese meeting corpora.

Multilingual Voice Assistants and Content Moderation

The included language identification module helps route utterances to the correct downstream NLP model or flag non-compliant content. Trained on diverse speech from the 3D-Speaker dataset, it supports both Mandarin and English with enhanced phonetic modeling.

Research on Disentangled Speech Representations

The release of the 3D-Speaker-Dataset—a large-scale corpus designed for speech representation disentanglement—enables researchers to explore how speaker, content, and language factors can be separated in learned embeddings, a key challenge in generalizable speech AI.

Getting Started Is Effortless

3D-Speaker prioritizes usability without sacrificing depth. Here’s how to go from zero to inference in minutes:

Installation

git clone https://github.com/modelscope/3D-Speaker.git && cd 3D-Speaker conda create -n 3D-Speaker python=3.8 conda activate 3D-Speaker pip install -r requirements.txt

Run Preconfigured Experiments

The egs/ directory includes ready-to-run scripts for every major task:

sv-eres2netv2/→ supervised speaker verificationspeaker-diarization/→ audio or video diarizationlanguage-identification/→ detect spoken language

Just run bash run.sh in the relevant folder.

Use Pretrained Models via ModelScope

No training needed? Use official models directly:

pip install modelscope model_id=iic/speech_eres2netv2_sv_zh-cn_16k-common python speakerlab/bin/infer_sv.py --model_id $model_id

Batch inference, ONNX runtime support, and multimodal (audio+video) diarization are all supported—making deployment in production environments straightforward.

Limitations and Practical Considerations

While powerful, 3D-Speaker has constraints worth noting:

- Language Bias: Many high-performing models (e.g., ERes2Net-base) are trained primarily on Mandarin data. Performance on non-Chinese languages may vary unless using models like SDPN trained on VoxCeleb (English).

- Overlap Detection Dependency: The optional overlap-aware diarization requires a Hugging Face access token, adding an external dependency.

- Resource Requirements: Larger models like ERes2Net-large (22.46M params) demand significant GPU memory—consider ERes2NetV2 for a better speed/accuracy trade-off.

Always validate model performance on your target domain before full integration.

Summary

3D-Speaker bridges the gap between cutting-edge speaker modeling research and real-world engineering needs. With its high-accuracy architectures, comprehensive task support, easy-to-use recipes, and open pretrained models, it empowers technical teams to rapidly prototype and deploy robust speaker-aware systems—whether for security, analytics, or research. Backed by rigorous benchmarks and actively maintained by DAMO Academy, it’s a compelling choice for anyone working with spoken audio in multilingual or multi-speaker scenarios.