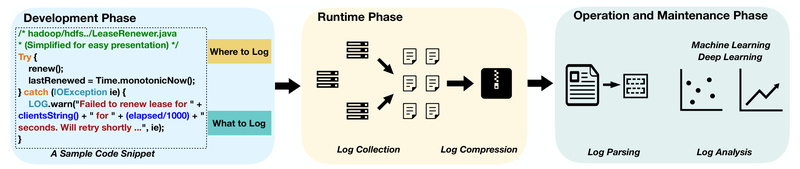

In the world of software systems—whether they’re cloud-native applications, distributed infrastructures, or legacy enterprise platforms—logs are the lifeblood of observability. They capture runtime behaviors, errors, performance metrics, and user interactions in real time. Yet, building intelligent log analysis tools—such as parsers, anomaly detectors, or root cause analyzers—has historically been bottlenecked by a critical problem: the lack of large-scale, diverse, and realistic public log datasets.

Enter Loghub: a curated, open-access repository of 19 real-world system log datasets spanning distributed systems, supercomputers, operating systems, mobile platforms, server applications, and standalone software. Developed by researchers in the LogPAI lab, Loghub directly addresses the data scarcity challenge that has hindered progress in AI-driven log analytics. By providing raw, unmodified logs from production and lab environments, Loghub enables researchers and engineers to develop, benchmark, and validate their log analysis techniques on data that mirrors real operational complexity—not synthetic toy examples.

With over 450 organizations in academia and industry already using Loghub, and datasets collectively downloaded nearly 90,000 times, it has become a de facto standard for reproducible research in log intelligence.

Why Loghub Matters for AI-Driven Log Analysis

The Data Gap in Log Intelligence Research

Many promising AI/ML approaches for log parsing, anomaly detection, or failure prediction never make it beyond academic papers. A primary reason? Without access to standardized, realistic log data, it’s nearly impossible to fairly compare methods or replicate results. Prior to Loghub, researchers often relied on private datasets or heavily sanitized logs that lacked the noise, volume, and structural diversity of real systems.

Loghub fills this gap by offering authentic, minimally processed logs—often in their original form—so models can be trained and tested under conditions that reflect actual deployment environments.

Breadth, Scale, and Realism in One Repository

Loghub’s strength lies in its diversity:

- 19 datasets across 6 major system categories, including Hadoop, Spark, ZooKeeper, Windows, Android, Apache, and supercomputer logs like Blue Gene/L and Thunderbird.

- Massive scale: The Thunderbird dataset alone contains over 211 million log lines, while Windows logs span 114 million lines over 226 days.

- Minimal preprocessing: Wherever possible, logs are not anonymized, sanitized, or altered, preserving the raw structure and semantic richness essential for robust model training.

- Metadata transparency: Each dataset includes clear information on time span, line count, and raw size, helping users assess fit-for-purpose quickly.

This combination makes Loghub uniquely valuable for tasks requiring generalization across system types—something most proprietary datasets cannot support.

Practical Use Cases for Engineers and Researchers

1. Developing and Evaluating Log Parsers

Log parsing, the extraction of structured templates and parameters from semi-structured log messages, is foundational to downstream analytics. Loghub provides the variance and volume needed to test parser robustness against real syntax drift, varied timestamp formats, and inconsistent formatting. The recent Loghub-2.0 benchmark study (ISSTA 2024) used these datasets to evaluate over a dozen state-of-the-art parsers, revealing significant performance gaps across domains.
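To make "templates and parameters" concrete, here is a minimal Python sketch. It is not how any of the benchmarked parsers work. It only masks tokens that look variable (IP addresses, HDFS-style block IDs, numbers) with `<*>` and counts the resulting templates. The masking rules, the `to_template` name, and the sample messages are illustrative assumptions, not content taken from Loghub.

```python
import re
from collections import Counter

# Hypothetical masking rules, applied in order. Each one replaces a
# variable-looking token with the wildcard "<*>".
MASKS = [
    re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}(?::\d+)?\b"),  # IPv4, optional port
    re.compile(r"\bblk_-?\d+\b"),                          # HDFS-style block IDs
    re.compile(r"\b0x[0-9a-fA-F]+\b"),                     # hex values
    re.compile(r"\b\d+\b"),                                # plain integers
]


def to_template(message: str) -> str:
    """Replace variable-looking tokens so similar messages collapse together."""
    for pattern in MASKS:
        message = pattern.sub("<*>", message)
    return message


def count_templates(messages) -> Counter:
    """Count how many messages map to each template."""
    return Counter(to_template(m) for m in messages)


# Made-up sample messages, for illustration only.
sample = [
    "Received block blk_123 of size 67108864 from 10.0.0.1",
    "Received block blk_-456 of size 1024 from 10.0.0.2",
    "Connection closed by 192.168.1.5:22",
]
for template, count in count_templates(sample).most_common():
    print(count, template)
```

A regex mask like this breaks down quickly on real data (free-text parameters, paths, mixed delimiters), which is exactly the kind of weakness a diverse benchmark exposes.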

2. Training Anomaly and Failure Detection Models

Logs encode signals of system health. With Loghub, teams can build models to detect abnormal patterns, such as sudden error spikes, failed job retries, or security-relevant SSH anomalies, using data that reflects real operational dynamics. Because most datasets are unlabeled, unsupervised, self-supervised, or weakly supervised strategies over the raw event sequences are usually the practical starting point; supervised training is an option only for the few datasets that include labels.
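As a rough illustration of unsupervised detection, the sketch below flags "error spikes" by counting error-level events per fixed time window and marking windows whose count has a high z-score. The z-score rule, the `error_spikes` name, the level names, and the default window and threshold are all assumptions chosen for simplicity. They are not a method prescribed by Loghub, and the input is assumed to be already parsed into `(unix_timestamp, level)` pairs.

```python
from collections import Counter
from statistics import mean, pstdev


def error_spikes(events, window_seconds=60, threshold=3.0):
    """Return start times of windows with an unusually high error count.

    events: iterable of (unix_timestamp, level) pairs.
    A window is flagged when its error count lies more than `threshold`
    population standard deviations above the mean over all observed windows.
    """
    counts = Counter()
    for timestamp, level in events:
        window = int(timestamp) // window_seconds * window_seconds
        # Register every observed window, even those with zero errors.
        counts[window] += 1 if level in ("ERROR", "FATAL") else 0

    if not counts:
        return []

    values = list(counts.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # every window looks the same, nothing to flag

    return sorted(w for w, c in counts.items() if (c - mu) / sigma > threshold)
```

Note that windows with no log lines at all never appear in `counts`, and that a single large spike inflates the mean and standard deviation it is compared against. A median-based rule would be more robust; the z-score is used here only because it is short.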

3. Benchmarking and Reproducing Research

Reproducibility is a cornerstone of scientific progress. By standardizing on Loghub datasets, research teams can compare new algorithms against published baselines under identical conditions. This accelerates innovation and prevents “benchmark inflation” caused by cherry-picked or non-public data.
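Comparing methods under identical conditions also means scoring them with the same metric. As one example, the sketch below computes grouping accuracy, a metric commonly reported in log parsing benchmarks: the fraction of messages whose predicted group contains exactly the same messages as their ground-truth group. The function name and the input layout (two parallel lists of group IDs) are assumptions made for this illustration.

```python
from collections import defaultdict


def grouping_accuracy(predicted, truth):
    """Fraction of messages placed in a group identical to their true group.

    predicted, truth: parallel sequences of group IDs, one entry per message.
    """
    if len(predicted) != len(truth):
        raise ValueError("predicted and truth must have the same length")
    if not truth:
        return 0.0

    pred_groups = defaultdict(set)
    true_groups = defaultdict(set)
    for index, (p, t) in enumerate(zip(predicted, truth)):
        pred_groups[p].add(index)
        true_groups[t].add(index)

    # A predicted group counts only if its member set matches a true group.
    true_sets = {frozenset(members) for members in true_groups.values()}
    correct = sum(
        len(members)
        for members in pred_groups.values()
        if frozenset(members) in true_sets
    )
    return correct / len(truth)
```

For instance, with predictions `["a", "a", "b", "c"]` against truth `["x", "x", "y", "y"]`, only the first two messages are grouped correctly, so the score is 0.5. Group names do not need to match, only the membership.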

4. Teaching and Learning System Observability

For educators and students, Loghub serves as a hands-on resource to explore real system behaviors—how distributed frameworks log task failures, how mobile apps report crashes, or how OS kernels record hardware events—without needing access to production infrastructure.

Getting Started with Loghub

Accessing Loghub is straightforward:

- Visit the official GitHub repository: https://github.com/logpai/loghub

- Browse datasets by category (e.g., “Distributed systems” or “Mobile systems”)

- Review metadata—such as number of lines, time span, and file size—to select suitable datasets

- Download raw logs directly via the provided links

Most datasets are available as plain text files, ready for ingestion into data pipelines, Jupyter notebooks, or custom parsers. While labeling is limited (only a few datasets, like Hadoop, include partial labels—see GitHub issue #56), the raw logs are sufficient for unsupervised and template-based approaches.
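A first ingestion step might look like the sketch below, which streams a plain text log line by line and splits each line into fields. The header layout in `LINE_PATTERN` is hypothetical: each dataset has its own format, so the regular expression needs to be adapted per dataset. The function names and the file path in the usage note are placeholders as well.

```python
import re
from typing import Iterable, Iterator

# Hypothetical layout: "<date> <time> <LEVEL> <component>: <message>".
# Adjust this pattern to the dataset you actually download.
LINE_PATTERN = re.compile(
    r"^(?P<date>\S+)\s+(?P<time>\S+)\s+(?P<level>[A-Z]+)\s+"
    r"(?P<component>[^:\s]+):\s*(?P<message>.*)$"
)


def parse_lines(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per non-empty line; unmatched lines keep only raw text."""
    for raw in lines:
        raw = raw.rstrip("\r\n")
        if not raw:
            continue
        match = LINE_PATTERN.match(raw)
        yield match.groupdict() if match else {"message": raw}


def load(path: str) -> Iterator[dict]:
    """Stream a log file without reading it into memory all at once."""
    # errors="replace" keeps the stream going if a raw log has invalid bytes.
    with open(path, encoding="utf-8", errors="replace") as handle:
        yield from parse_lines(handle)
```

Usage would be along the lines of `for record in load("some_dataset.log"): ...`, where the path is whatever file you downloaded. Streaming matters here because the larger datasets run to hundreds of millions of lines.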

Limitations and Important Considerations

While Loghub is a powerful resource, users should be aware of key constraints:

- No guaranteed sanitization: Since logs are often unmodified, they may contain sensitive or identifying information. Loghub is intended for research and academic use; apply proper data governance before feeding any of this data into production systems.

- Limited ground truth: Most datasets are unlabeled, which limits direct supervised learning. Users must apply labeling strategies (e.g., heuristic tagging, manual annotation) if needed.

- Usage scope: The license permits non-commercial research and education only. Commercial applications require separate arrangements and careful legal review.
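The heuristic tagging mentioned in the list above can be as simple as a keyword rule. The sketch below assigns a weak label of 1 to messages containing suspicious keywords and 0 otherwise. The keyword list and the `weak_label` name are assumptions for illustration; labels produced this way are noisy and should be treated as a starting point for weak supervision, not as ground truth.

```python
import re

# Hypothetical keyword list; tune it for each dataset.
SUSPICIOUS = re.compile(
    r"\b(error|exception|fail(?:ed|ure)?|fatal|timeout|denied)\b",
    re.IGNORECASE,
)


def weak_label(message: str) -> int:
    """Return 1 if the message matches a suspicious keyword, else 0."""
    return int(bool(SUSPICIOUS.search(message)))


def label_all(messages):
    """Pair each message with its weak label."""
    return [(message, weak_label(message)) for message in messages]
```

Because the pattern uses word boundaries, a token such as `IOException` is not matched by `exception`. Whether that is desirable depends on the dataset, which is one reason such rules need manual spot checks.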

These limitations don’t diminish Loghub’s value—they simply define its appropriate context: accelerating research, education, and prototyping in AI-driven log analytics.

Summary

Loghub solves a foundational problem in log intelligence: the absence of realistic, diverse, and publicly available log data. By aggregating 19 real-world datasets from systems ranging from supercomputers to mobile apps—and keeping them raw and unfiltered—it empowers researchers and engineers to build, test, and benchmark AI models that actually generalize. Whether you’re developing a next-generation log parser, teaching system reliability, or exploring anomaly detection in distributed environments, Loghub provides the data backbone you need to move from theory to validated practice.

Start exploring Loghub today to ground your log analytics work in the reality of operational systems—not idealized simulations.