In a world where AI assistants increasingly mediate our interactions with apps, services, and even other people, a critical problem persists: you’re constantly repeating yourself. Whether it’s re-entering your preferences, re-explaining your context, or re-authenticating your identity across platforms, the cognitive friction is real—and it’s unnecessary.

Enter Second-Me: an open-source, locally hosted “AI self” designed to act as your persistent, intelligent memory extension. Built on the concept of AI-native memory, Second-Me doesn’t just store your data—it understands it, organizes it, and uses it autonomously to represent you in digital interactions. Unlike cloud-based AI agents that centralize control and risk privacy, Second-Me runs entirely on your machine, ensuring your personal context stays under your control while still enabling seamless, intelligent engagement with the outside world.

For technical decision-makers—developers, researchers, product architects—Second-Me offers a novel infrastructure for building truly personalized, privacy-preserving AI experiences. It’s not just another chatbot; it’s your digital identity interface, trained on your memories, aligned to your values, and deployable wherever you choose.

Core Capabilities That Solve Real Problems

Train an AI That Truly Reflects You

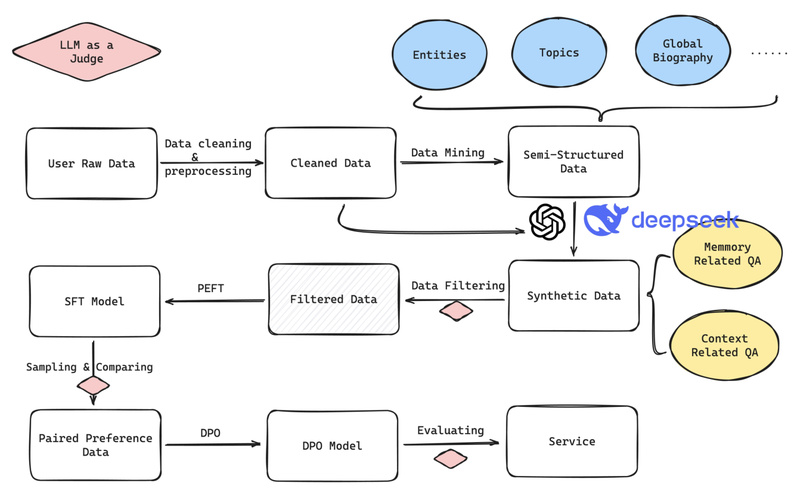

Second-Me leverages AI-Native Memory 2.0, a framework detailed in its research paper, to transform raw personal data—conversations, notes, preferences—into a structured, reasoning-capable memory system. Using techniques like Hierarchical Memory Modeling (HMM) and the Me-Alignment Algorithm, your AI self learns to capture your identity and generate responses that are consistent with your voice, values, and history.

This solves a key limitation of generic LLMs: they lack persistent personal context. Second-Me fills that gap by acting as a personal knowledge base that evolves with you.

Roleplay Across Contexts Without Losing Consistency

Need your AI to act as a professional negotiator in a business simulation, then switch to a travel-savvy companion for vacation planning? Second-Me supports context-aware roleplay, allowing your AI self to adapt its persona while maintaining core identity anchors. This ensures that even when representing you in diverse scenarios—from customer support mockups to social icebreakers—it never contradicts your true preferences or history.
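
To make the idea of a switchable persona with fixed identity anchors concrete, here is a minimal sketch. It is purely illustrative and is not how Second-Me implements roleplay; `Identity`, `anchors`, and `build_system_prompt` are hypothetical names, and the approach shown (prepending fixed facts to a role-specific prompt) is an assumption chosen for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    """Facts that should hold in every persona (hypothetical structure)."""
    name: str
    anchors: tuple[str, ...]


def build_system_prompt(identity: Identity, role: str) -> str:
    """Combine a scenario-specific role with the fixed identity anchors."""
    lines = [
        f"You are speaking on behalf of {identity.name}.",
        f"Role for this conversation: {role}.",
        "Never contradict these facts about the user:",
    ]
    # The anchors are appended unchanged, whatever the role is.
    lines += [f"- {anchor}" for anchor in identity.anchors]
    return "\n".join(lines)
```

Calling `build_system_prompt` twice with different `role` values yields two different prompts that share the same anchor lines, which is the consistency property the paragraph above describes.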

Collaborate in a Decentralized Network of AI Selves

Second-Me isn’t just isolated—it’s networked. With user permission, your AI self can connect to other Second-Me instances in a decentralized ecosystem. Imagine brainstorming a 15-day European itinerary not with a generic planner, but with another user’s AI that knows their travel style, then synthesizing insights that respect both of your preferences. This “AI Space” model enables collaborative intelligence without surrendering data to a central server.

100% Local, 100% Private

Privacy isn’t an afterthought—it’s foundational. Second-Me is designed for local training and inference, using efficient backends like llama.cpp and supporting Apple’s MLX acceleration on M-series Macs. Your memories never leave your device unless you explicitly choose to share them. This stands in stark contrast to mainstream AI platforms that monetize user data or enforce opaque usage policies.

Practical Use Cases That Deliver Immediate Value

Second-Me shines where personal context matters and repetition drains productivity:

- Automated Personal Responses: In roleplay apps like “Felix AMA,” your AI self answers questions as you would—drawing on your real opinions and experiences—freeing you from manual replies.

- Personalized Planning: When generating complex outputs like itineraries or recommendations, Second-Me incorporates your dietary restrictions, budget sensitivities, or past trip feedback—no need to re-specify them every time.

- Digital Representation in Social or Professional Simulations: In speed-dating or networking apps, your AI can serve as an authentic proxy, initiating conversations based on your interests and communication style.

These aren’t futuristic abstractions—they’re working examples already demonstrated by the community.

Getting Started: Simple, Flexible, and Developer-Friendly

Deploying Second-Me with Docker takes three commands:

    git clone https://github.com/mindverse/Second-Me.git
    cd Second-Me
    make docker-up

Then open http://localhost:3000 in your browser. That’s it.
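
The containers can take a while to start, so the page may not load immediately. The sketch below polls the address until it answers; it uses only the Python standard library, and `wait_until_up` is a hypothetical helper rather than part of Second-Me. The URL is the one quoted above, and the timeout values are arbitrary assumptions.

```python
import time
import urllib.error
import urllib.request


def wait_until_up(url: str, timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll `url` until it returns any HTTP response or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server answered, just not with a 2xx status; it is up.
            return True
        except (urllib.error.URLError, OSError):
            # Connection refused or timed out: the service is probably still starting.
            time.sleep(interval)
    return False
```

For example, `wait_until_up("http://localhost:3000")` returns `True` once the web UI responds and `False` if nothing answers within a minute; adjust the URL if you changed the port mapping.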

For those preferring integrated (non-Docker) setups or optimizing for specific hardware, detailed guides cover model selection, memory tuning, and platform nuances. Notably:

- Model size scales with your RAM: On a 16GB machine, you can run ~1.5B-parameter models via Docker (Windows/Linux); Mac users can leverage MLX for larger models via CLI.

- Models under 0.5B parameters may underperform on complex tasks, so choose wisely based on your use case and hardware.

- Cross-platform support is actively improving—community feedback via GitHub issues helps accelerate compatibility.
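
The sizing guidance above can be turned into a quick sanity check before you pick a model. This is a rough sketch, not an official tool: `review_model_choice` is a hypothetical helper, and it encodes only the two figures quoted in this article (about 1.5B parameters on a 16GB machine via Docker on Windows/Linux, and the 0.5B lower bound). It makes no claim about other RAM sizes or about MLX on Mac.

```python
# Figures quoted in the article; everything else here is a hypothetical illustration.
DOCKER_REFERENCE_RAM_GB = 16.0    # "On a 16GB machine ..."
DOCKER_REFERENCE_PARAMS_B = 1.5   # "... ~1.5B-parameter models via Docker"
MIN_USEFUL_PARAMS_B = 0.5         # "Models under 0.5B parameters may underperform"


def review_model_choice(params_b: float, ram_gb: float) -> list[str]:
    """Return warnings for a model of `params_b` billion parameters
    on a Windows/Linux Docker deployment with `ram_gb` GB of RAM."""
    warnings = []
    if params_b < MIN_USEFUL_PARAMS_B:
        warnings.append("under 0.5B parameters: may underperform on complex tasks")
    if ram_gb <= DOCKER_REFERENCE_RAM_GB and params_b > DOCKER_REFERENCE_PARAMS_B:
        warnings.append("larger than the ~1.5B cited for a 16GB machine")
    return warnings
```

An empty list means the choice does not conflict with either figure; it does not guarantee the model will fit or perform well on your hardware.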

The project is built on the Qwen2.5 model series and integrates llama.cpp for efficient inference, making it accessible even on consumer-grade hardware.

Limitations and What’s Coming Next

Second-Me is a rapidly evolving prototype, and its current constraints are worth stating plainly:

- Hardware dependency: Performance is tightly coupled to local resources. Users with limited RAM may need to compromise on model size or task complexity.

- Platform disparities: Mac deployments currently support smaller models than Windows/Linux in Docker mode, though MLX offers a path forward for Apple silicon users.

- Advanced features are in progress: As of early 2025, continuous training pipelines, memory version control, and cloud-assisted deployment are listed on the May 2025 roadmap, so the current release is usable but not yet feature-complete.

This is open-source software in the truest sense: built by the community, for the community.

Summary

Second-Me represents a bold reimagining of how humans interact with AI: not as passive consumers of a “Super AI,” but as active curators of their own AI-native identity. By offloading repetitive context-sharing to a locally hosted, memory-aware AI self, it reduces cognitive load, enhances personalization, and restores user sovereignty over digital intelligence.

For technical evaluators building the next generation of AI-augmented workflows, Second-Me offers more than a tool—it provides an open infrastructure for AI identity that prioritizes privacy, personalization, and interoperability. If you’re exploring ways to embed persistent, user-aligned intelligence into your projects without compromising control, Second-Me is worth investigating today.