Time series forecasting remains a core challenge across industries—from retail and energy to finance and logistics. While deep learning has unlocked significant advances in prediction accuracy, many existing implementations are either too complex to use, lack robust defaults, or fail to outperform classical statistical models in practice. NeuralForecast was built to change that.

Developed by the team at Nixtla and backed by peer-reviewed research like Hierarchically Coherent Multivariate Mixture Networks, NeuralForecast delivers over 30 state-of-the-art neural forecasting models—including NBEATS, NHITS, TimesNet, TFT, PatchTST, and iTransformer—through a clean, consistent, and practitioner-friendly API. It empowers data scientists and engineers to achieve high-accuracy forecasts without wrestling with framework complexities or sacrificing computational efficiency.

Whether you’re forecasting product demand across thousands of SKUs, predicting electricity load with weather covariates, or working with sparse hierarchical data, NeuralForecast provides a unified, scalable, and production-ready solution.

Why NeuralForecast Stands Out

Designed for Practitioners, Not Just Researchers

Unlike many academic codebases that prioritize novelty over usability, NeuralForecast is built with real-world deployment in mind. It adopts a familiar scikit-learn-style interface: .fit() to train and .predict() to generate forecasts. This drastically lowers the learning curve and integrates seamlessly into existing ML pipelines.

Proven Performance with SOTA Models

NeuralForecast includes official implementations of models that have demonstrated top-tier results in forecasting benchmarks:

- NHITS: Neural Hierarchical Interpolation for Time Series, an architecture designed for long-horizon forecasting and published at AAAI 2023.

- NBEATSx: An enhanced version of N-BEATS that incorporates exogenous variables and achieves strong results on real-world datasets.

- TiDE, TFT, PatchTST, and iTransformer: Cutting-edge architectures adapted for scalability and accuracy in multivariate and long-horizon settings.

In the evaluations reported for Hierarchically Coherent Multivariate Mixture Networks, the coherent mixture approach improved accuracy on hierarchical datasets by 13.2% on average over prior baselines, without sacrificing speed.

Built-In Support for Real-World Forecasting Needs

Time series in practice rarely exist in isolation. NeuralForecast natively supports:

- Exogenous (external) variables: Incorporate temporal features like price, temperature, or promotions.

- Static covariates: Account for fixed attributes such as store location or product category.

- Probabilistic forecasting: Generate prediction intervals using quantile regression or parametric distributions—essential for risk-aware decision making.

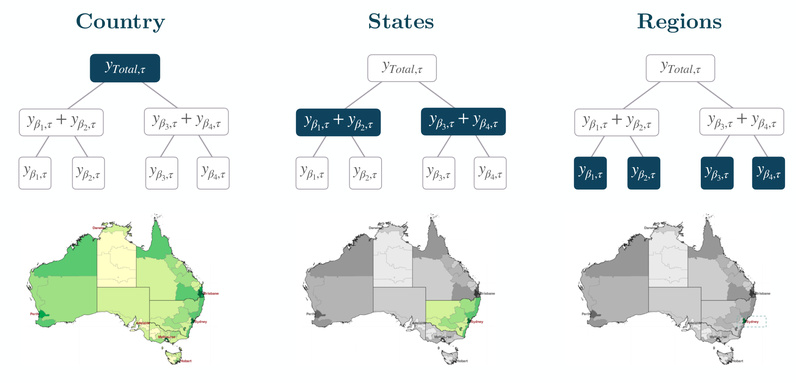

- Hierarchical coherence: Ensure forecasts are consistent across aggregation levels (e.g., total sales vs. regional breakdowns), thanks to its foundation in coherent mixture modeling.
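
To make the probabilistic forecasting item above concrete, the sketch below shows the pinball (quantile) loss that quantile regression minimizes, plus a coverage check for the resulting interval. It is a stand-alone NumPy illustration, not NeuralForecast's own loss code; the function name `pinball_loss` and all numbers are made up for the example.

```python
import numpy as np


def pinball_loss(y_true, y_pred, q):
    """Average pinball (quantile) loss for a quantile level q in (0, 1)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    diff = y_true - y_pred
    # Under-prediction (diff > 0) costs q per unit,
    # over-prediction (diff < 0) costs (1 - q) per unit.
    return float(np.mean(np.maximum(q * diff, (q - 1) * diff)))


# Observed values and hypothetical 10th / 90th percentile forecasts.
y = np.array([100.0, 120.0, 90.0])
lower = np.array([90.0, 105.0, 80.0])
upper = np.array([115.0, 130.0, 105.0])

loss_lo = pinball_loss(y, lower, q=0.1)
loss_hi = pinball_loss(y, upper, q=0.9)

# Share of observations that fall inside the nominal 80% interval.
coverage = float(np.mean((y >= lower) & (y <= upper)))

print(f"pinball@0.1={loss_lo:.4f}  pinball@0.9={loss_hi:.4f}  coverage={coverage:.2f}")
```

Training one output per quantile level against this kind of loss is what lets a model emit a lower and an upper bound rather than a single point. The library provides its own loss classes for this purpose, so the function here is only for building intuition.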

Seamless Ecosystem Integration

NeuralForecast isn’t a siloed tool—it’s part of the broader Nixtla forecasting ecosystem, which includes:

- StatsForecast: For fast statistical baselines (e.g., ARIMA, ETS).

- MLForecast: For gradient-boosted tree-based forecasting.

- HierarchicalForecast: For post-processing and reconciliation.

All share the same long-format input/output DataFrames (often named Y_df, with unique_id, ds, and y columns) and a common syntax, enabling hybrid workflows and easy model comparison.

Automated Tuning and Scalability

Hyperparameter tuning is often a bottleneck. NeuralForecast integrates with Ray and Optuna to enable distributed, automatic hyperparameter optimization via its Auto model wrappers (e.g., AutoNHITS). This allows users to scale tuning across clusters with minimal code changes.

Additionally, the library leverages PyTorch for GPU acceleration—optional for small datasets but invaluable for large-scale training.
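
As a rough sketch of what an Auto wrapper consumes, the snippet below defines a search space as a function of an Optuna trial and passes it to `AutoNHITS`. It assumes a recent NeuralForecast release in which `AutoNHITS` accepts `config`, `backend="optuna"`, and `num_samples`; the function names and hyperparameter ranges are illustrative choices, not recommended defaults. The library import is deferred inside `build_auto_nhits` so the search space can be inspected without installing anything.

```python
def nhits_search_space(trial):
    """Hypothetical search space: maps an Optuna trial to NHITS keyword arguments."""
    return {
        "input_size": trial.suggest_categorical("input_size", [12, 24, 36]),
        "learning_rate": trial.suggest_float("learning_rate", 1e-4, 1e-2, log=True),
        "max_steps": 100,  # fixed and kept small so each trial stays cheap
    }


def build_auto_nhits(horizon=12, num_samples=5):
    """Build the tuned model wrapper.

    Assumes neuralforecast and optuna are installed; the import is lazy so
    that defining the search space above has no dependencies.
    """
    from neuralforecast.auto import AutoNHITS

    return AutoNHITS(
        h=horizon,                  # forecast horizon
        config=nhits_search_space,  # sampled once per trial
        backend="optuna",           # assumption: Optuna backend is available
        num_samples=num_samples,    # number of configurations to try
    )
```

The object returned by `build_auto_nhits()` would then go into `NeuralForecast(models=[...], freq=...)` in place of a plain model, and `.fit()` would run the search before the final forecast.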

Ideal Use Cases

NeuralForecast excels in scenarios where accuracy, scalability, and ease of use intersect:

- Retail & E-commerce: Forecast demand for thousands of products across stores, with promotions and holiday indicators as exogenous inputs.

- Energy Forecasting: Predict hourly load or renewable generation using weather forecasts and historical patterns.

- Finance & Anomaly Monitoring: Generate reliable baseline forecasts for transaction volumes or market indicators (note: it does not perform anomaly detection directly).

- Sparse or Hierarchical Series: Leverage transfer learning to forecast new products with little history, or ensure coherence between national, regional, and store-level forecasts.

It handles both univariate and multivariate time series and scales from single-series experiments to enterprise-level deployments.
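
The coherence requirement mentioned for hierarchical series can be stated with a summing matrix: a set of forecasts is coherent when each aggregate equals the sum of its children. The sketch below checks that condition and applies bottom-up aggregation, which is the simplest way to restore coherence and is not the coherent mixture method the library's research describes. The hierarchy, function names, and numbers are hypothetical.

```python
import numpy as np

# Hypothetical two-level hierarchy: total = north + south.
# Rows are all series [total, north, south]; columns are the
# bottom-level series [north, south].
S = np.array([
    [1, 1],  # total
    [1, 0],  # north
    [0, 1],  # south
])


def is_coherent(forecasts, summing_matrix, atol=1e-8):
    """True when every row equals the aggregation of the bottom-level rows."""
    forecasts = np.asarray(forecasts, dtype=float)
    n_bottom = summing_matrix.shape[1]
    bottom = forecasts[-n_bottom:]  # bottom-level rows are stored last
    return bool(np.allclose(forecasts, summing_matrix @ bottom, atol=atol))


def bottom_up(bottom_forecasts, summing_matrix):
    """Bottom-up reconciliation: rebuild every level from the bottom level."""
    return summing_matrix @ np.asarray(bottom_forecasts, dtype=float)


# Independently produced forecasts over a 2-step horizon (made-up numbers).
# The total row is 5 units higher than north + south at both steps.
independent = np.array([
    [105.0, 110.0],  # total
    [60.0, 62.0],    # north
    [40.0, 43.0],    # south
])

reconciled = bottom_up(independent[1:], S)

print("coherent before:", is_coherent(independent, S))
print("coherent after: ", is_coherent(reconciled, S))
```

Bottom-up discards whatever information the top-level forecast carried, which is one reason more elaborate reconciliation and coherent-by-construction models exist.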

Getting Started in Under 5 Minutes

Installation is straightforward:

    pip install neuralforecast

Then, run a minimal forecasting workflow:

    from neuralforecast import NeuralForecast
    from neuralforecast.models import NBEATS
    from neuralforecast.utils import AirPassengersDF

    # Initialize model and forecasting pipeline
    nf = NeuralForecast(
        models=[NBEATS(input_size=24, h=12, max_steps=100)],
        freq='ME'  # month-end alias (pandas >= 2.2; older versions use 'M')
    )

    # Fit on historical data (DataFrame with 'ds', 'y', 'unique_id')
    nf.fit(df=AirPassengersDF)

    # Generate forecasts
    forecasts = nf.predict()

The input DataFrame follows a standard long format: unique_id (series identifier, required even when there is only one series), ds (datetime), and y (target value). Exogenous variables can be added as additional columns.
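
A minimal sketch of that layout, built with pandas: two series stacked in long format with one extra column. The series names, the values, and the `price` column are hypothetical.

```python
import numpy as np
import pandas as pd

# Five daily timestamps shared by both series.
dates = pd.date_range("2024-01-01", periods=5, freq="D")

frames = []
for series_id, base in [("store_1", 100.0), ("store_2", 50.0)]:
    frames.append(
        pd.DataFrame(
            {
                "unique_id": series_id,                  # which series the row belongs to
                "ds": dates,                             # timestamp
                "y": base + np.arange(5, dtype=float),   # synthetic target values
                "price": 9.99,                           # hypothetical exogenous column
            }
        )
    )

# Long format: one row per (series, timestamp) pair.
df = pd.concat(frames, ignore_index=True)
print(df.head())
```

A frame shaped like this is what `nf.fit(df=...)` expects. For a model to actually use `price`, the column would also need to be named in that model's exogenous arguments (for example `futr_exog_list` or `hist_exog_list`), with future values supplied at prediction time when the variable is known in advance.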

This simplicity—combined with powerful defaults—means you can go from zero to production-ready forecasts faster than with most alternatives.

Limitations and Considerations

While NeuralForecast is highly capable, it’s important to understand its scope:

- Data Requirements: Some deep models (e.g., Transformers) benefit from longer historical windows. Very short series may perform better with statistical methods from StatsForecast.

- Not a General-Purpose Time Series Toolkit: It focuses exclusively on point and probabilistic forecasting, not anomaly detection, clustering, or causal inference.

- Compute Considerations: GPU acceleration is optional but recommended for large models or datasets. Training on CPU is supported but slower.

- Deployment Footprint: While efficient, it’s not optimized for ultra-low-latency or embedded environments (e.g., microcontrollers).

That said, for cloud-based or server-side forecasting at scale, it strikes an excellent balance between performance, flexibility, and usability.

Summary

NeuralForecast bridges the gap between cutting-edge neural forecasting research and practical, real-world application. By unifying over 30 high-performance models under a simple, consistent API—and integrating tightly with the broader Nixtla ecosystem—it enables practitioners to deploy accurate, probabilistic, and scalable forecasts with minimal friction.

If you’re evaluating time series libraries for your next project, NeuralForecast deserves serious consideration—especially if you value both state-of-the-art accuracy and developer productivity.