If you’ve worked on computer vision tasks like object detection or instance segmentation, you’ve likely encountered the challenge of modeling long-range dependencies across an image. Traditional convolutional networks are inherently local—they struggle to capture global relationships between distant pixels. Non-Local Networks (NLNets) attempted to solve this by computing query-specific global context, but they came with a steep computational cost and surprising redundancy: in practice, the global context they computed barely varied across query positions.

Enter GCNet (Global Context Network)—a smarter, lighter alternative that rethinks global modeling from the ground up. By recognizing that query-specific computation is often unnecessary, GCNet replaces it with a query-independent mechanism that delivers nearly identical performance at a fraction of the cost. Published in 2019 and later extended in TPAMI, GCNet has proven itself across multiple benchmarks, consistently outperforming both NLNet and Squeeze-and-Excitation (SE) networks while remaining simple enough to plug into existing vision pipelines.

For technical decision-makers evaluating architectural enhancements for production or research systems, GCNet offers a rare combination: higher accuracy, lower latency, and minimal implementation overhead.

Why GCNet Works: Simplicity Meets Effectiveness

The Key Insight Behind GCNet

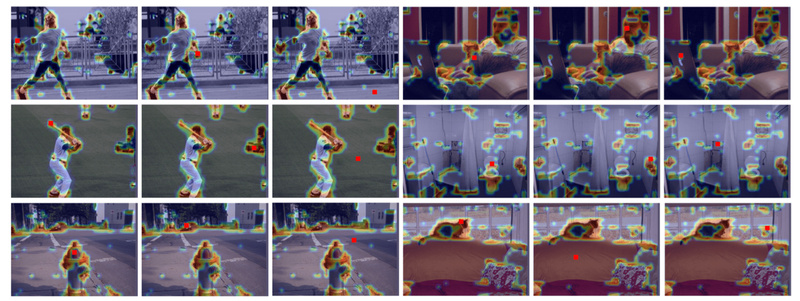

The original paper revealed a critical observation: despite NLNet’s design to generate unique global context for each pixel query, the resulting context vectors were nearly identical across all positions in real-world images. This redundancy meant the heavy attention-style computation was largely wasted.

GCNet capitalizes on this by decoupling global context modeling from per-query computation. Instead of recomputing attention for every location, it:

- Aggregates global features once (via global average pooling),

- Transforms them through a lightweight bottleneck (inspired by SE blocks),

- Broadcasts the resulting context signal back to all spatial positions.

This design merges the best of both worlds: the global receptive field of Non-Local Networks and the parameter efficiency of Squeeze-and-Excitation modules.

Core Advantages

- Computational Efficiency: GCNet reduces FLOPs significantly compared to NLNet by eliminating pairwise similarity computations.

- Consistent Gains: Experiments on COCO show steady improvements in both box AP (+1.2 to +2.3 points) and mask AP (+1.0 to +2.5 points) across ResNet-50, ResNet-100, and ResNeXt-101 backbones.

- Plug-and-Play Integration: The GC block can be inserted into multiple stages (e.g., c3–c5) of standard backbones without architectural overhauls.

- Scalable Design: The bottleneck ratio (e.g., r=4 or r=16) allows trade-offs between model size and performance.

Ideal Use Cases

GCNet shines in scenarios where global semantic understanding directly impacts task performance:

- Object Detection: Helps disambiguate overlapping or partially occluded objects by enriching feature maps with scene-level context.

- Instance Segmentation: Improves mask quality by ensuring consistent object boundaries through global reasoning.

- Resource-Constrained Environments: When deploying vision models on edge devices or under latency budgets, GCNet’s lightweight design offers a high return on added computation.

It’s particularly valuable when you’re already using standard CNN backbones (like ResNet-FPN) and want a drop-in upgrade that doesn’t require redesigning your entire pipeline.

How to Use GCNet in Your Projects

The official implementation is built on mmdetection, one of the most widely used object detection frameworks. Here’s how to get started:

Setup Requirements

- Linux (Ubuntu 16.04+ tested)

- Python 3.6+

- PyTorch 1.1.0

- CUDA 9.0+

- NVIDIA GPUs (training tested on V100)

Installation Steps

- Clone the repository:

git clone https://github.com/xvjiarui/GCNet.git

- Install dependencies, including apex for SyncBN support.

- Compile CUDA extensions:

cd GCNet ./compile.sh

- Install the custom mmdetection version:

pip install -e .

Integration Tips

- GC blocks are inserted after the 1×1 convolution in backbone stages (e.g., c3, c4, c5).

- Predefined configs are available in

configs/gcnet/for ResNet and ResNeXt backbones. - For training, use the standard mmdetection distributed launcher:

./tools/dist_train.sh configs/gcnet/mask_rcnn_r50_fpn_syncbn-backbone_r4_1x_coco.py 8

- Pre-trained models are provided for quick evaluation or fine-tuning.

Limitations and Practical Notes

While GCNet is highly effective, consider these real-world constraints:

- Training Stability: The authors note that both PyTorch’s official SyncBN and Apex SyncBN can exhibit instability—occasionally causing mAP to drop to zero in late epochs before recovering. Monitoring training curves is advised.

- Memory Overhead: Adding GC blocks increases GPU memory usage (e.g., +0.6–1.2 GB for R50-FPN), which may affect large-batch training.

- Dependency Requirements: The setup requires older PyTorch (1.1.0) and CUDA 9.0, which may conflict with newer environments. Containerization (e.g., Docker) can mitigate this.

Summary

GCNet redefines global context modeling by proving that simplicity often outperforms complexity. By replacing redundant query-specific computations with a unified, lightweight mechanism, it delivers measurable gains in accuracy without sacrificing speed. For teams working on object detection, instance segmentation, or any vision task requiring scene-level understanding, GCNet offers a low-friction, high-impact upgrade path—backed by strong empirical results and seamless integration with industry-standard tooling. If you’re looking to boost performance without overhauling your architecture, GCNet deserves a spot in your evaluation pipeline.