NVIDIA NeMo is a cloud-native, open-source framework designed for developers, research engineers, and technical decision-makers who need to build, customize, and deploy high-performance AI models at scale. Unlike experimental or academic toolkits, NeMo is purpose-built for real-world applications—offering optimized implementations for Automatic Speech Recognition (ASR), Text-to-Speech (TTS), Large Language Models (LLMs), Vision-Language Models (VLMs), and even world foundation models for robotics and simulation.

If your team is evaluating frameworks for enterprise-grade AI deployment, NeMo stands out by combining NVIDIA’s hardware-aware optimizations with modular, PyTorch-native design. It eliminates much of the boilerplate and infrastructure overhead typically required to train or fine-tune state-of-the-art models—especially in speech and generative AI domains.

Why NeMo Solves Real Engineering Challenges

1. Dramatically Faster Inference Without Sacrificing Accuracy

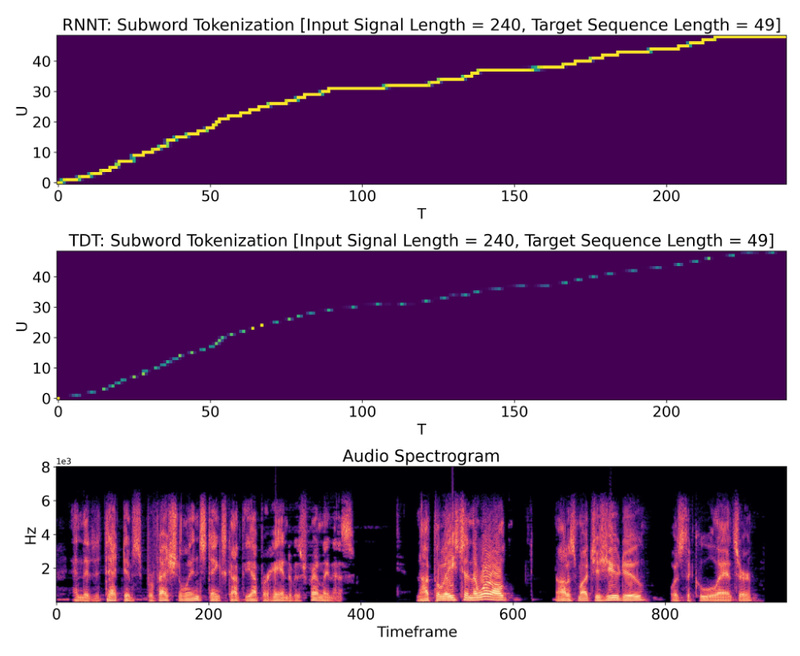

One of NeMo’s standout innovations is the Token-and-Duration Transducer (TDT) architecture, introduced for sequence transduction tasks like speech recognition. Unlike traditional RNN-T models that process every input frame sequentially, TDT jointly predicts both the output token and its duration (i.e., how many input frames it spans). This enables the model to skip irrelevant frames during inference—yielding up to 2.82× faster ASR inference while improving accuracy. In production environments where latency and throughput are critical (e.g., real-time call centers or live captioning), this is a game-changer.

Recent optimizations have pushed NeMo’s ASR models to achieve over 6,000× real-time factor (RTFx)—meaning a 1-hour audio file can be transcribed in under a second on supported hardware.

2. Seamless Scalability from Prototype to Thousands of GPUs

NeMo is built on PyTorch Lightning and integrates tightly with NVIDIA’s ecosystem, including Megatron Core and Transformer Engine. This allows models to scale effortlessly across multi-node, multi-GPU clusters—up to 11,616 H100 GPUs in MLPerf benchmarks—while maintaining near-linear scaling efficiency.

Features like Tensor Parallelism (TP), Pipeline Parallelism (PP), Fully Sharded Data Parallelism (FSDP), and Mixture-of-Experts (MoE) are natively supported. Combined with FP8 and BFloat16 mixed-precision training, NeMo reduces memory footprint and accelerates training without compromising model quality—critical for LLMs and VLMs with billions of parameters.

3. Production-Ready Model Zoo and Fine-Tuning Flexibility

NeMo ships with a curated collection of state-of-the-art, pre-trained models that work out of the box:

- Parakeet-TDT: A high-accuracy ASR model with 64% faster inference than its RNN-T predecessor.

- Canary: A multilingual ASR and speech translation model supporting English, Spanish, German, and French—with punctuation, capitalization, and bidirectional translation.

- Llama 3.1, Nemotron, Qwen2-VL, Gemma3: Full support for leading open LLMs and VLMs via NeMo AutoModel, which enables instant integration of Hugging Face models with NVIDIA-optimized training and inference.

Moreover, NeMo supports modern parameter-efficient fine-tuning (PEFT) methods like LoRA, P-Tuning, Adapters, and IA3, allowing teams to adapt massive models to domain-specific tasks with minimal compute and data. For alignment, it includes DPO (Direct Preference Optimization), SteerLM, and RLHF pipelines.

Where NeMo Delivers Immediate Value

Speech AI at Enterprise Scale

For teams building voice assistants, transcription services, or voice analytics platforms, NeMo’s ASR and TTS models—combined with NVIDIA Riva for deployment—offer a complete, GPU-accelerated pipeline from training to real-time inference. The TDT architecture is particularly valuable for reducing cloud inference costs while maintaining or improving accuracy.

Custom Generative AI with Minimal Overhead

If you’re fine-tuning LLMs for legal, financial, or healthcare applications, NeMo’s AutoModel and NeMo-Run simplify the process: load a Hugging Face model, apply LoRA adapters, and scale training across a Slurm cluster or Kubernetes (EKS/GKE) with just a few configuration changes. The shift to Python-based configs in NeMo 2.0 (instead of YAML) also makes experimentation more programmable and maintainable.

Physical AI and Synthetic Data Generation

Through integration with NVIDIA Cosmos, NeMo enables training of world foundation models that generate realistic synthetic videos from text prompts. This is especially useful for robotics simulation, autonomous driving, or any domain where real-world data is scarce or expensive to collect. The NeMo Curator library further accelerates video processing by up to 89× compared to CPU pipelines.

Getting Started: A Practical Workflow

- Installation: Choose between lightweight

pip install "nemo_toolkit[asr]"for speech tasks or use the NGC NeMo container (nvcr.io/nvidia/nemo:25.02) for full performance and feature support. - Model Loading: Load pre-trained models directly from Hugging Face Hub or NVIDIA NGC—no custom code needed.

- Fine-Tuning: Apply PEFT methods or full fine-tuning using NeMo’s trainer, which automatically handles distributed strategies.

- Scaling: Use NeMo-Run to orchestrate large-scale jobs on Slurm or cloud Kubernetes.

- Deployment: Export models to NeMo Microservices (for LLMs/VLMs) or Riva (for ASR/TTS) for low-latency, production-grade serving.

This workflow is especially accessible to PyTorch-native teams—NeMo avoids abstraction layers that obscure model behavior, giving developers full control when needed.

Limitations and Strategic Considerations

While NeMo is powerful, it’s important to understand its current scope:

- NeMo 2.0 is pivoting: As of late 2025, the main NeMo repository will focus exclusively on speech models (ASR/TTS). LLM and VLM capabilities are migrating to NeMo Megatron-Bridge and NeMo AutoModel under the broader NeMo Framework organization. Plan accordingly if building generative AI systems.

- Platform support is Linux-centric: Windows and macOS are either unsupported or deprecated for training. Linux (x86_64 or ARM64) is the primary target.

- NeMo Framework Launcher no longer supports speech: For ASR/TTS, use direct pip/container installs; the Launcher is now LLM/VLM-focused (and being phased out in favor of NeMo-Run).

- Deprecation timeline: NeMo 2.0’s LLM/VLM components will be deprecated by November 2025. New projects should adopt the NeMo Framework’s modular repos from the start.

Summary

NVIDIA NeMo is not just another research framework—it’s a production-engineered toolkit that solves tangible bottlenecks in AI development: slow inference, scaling complexity, and the high cost of custom model training. Whether you’re deploying real-time speech systems, fine-tuning domain-specific LLMs, or generating synthetic environments for robotics, NeMo provides optimized, open-source building blocks backed by NVIDIA’s hardware and software stack.

For technical leads evaluating AI frameworks, NeMo offers a rare combination: cutting-edge performance, enterprise readiness, and active community support—all under an Apache 2.0 license. If your project involves speech, language, or multimodal AI on NVIDIA GPUs, NeMo deserves serious consideration.