In the rapidly evolving landscape of multimodal artificial intelligence, developers and technical decision-makers need models that go beyond basic image captioning—they need systems that can read text in images, localize objects through natural language, reason across multiple visuals, and do all this reliably without stitching together external OCR engines or detection pipelines. Enter Qwen-VL, a versatile open-source vision-language model (VLM) developed by Alibaba Cloud that delivers state-of-the-art performance across a wide range of real-world multimodal tasks—while remaining accessible, commercially usable, and easy to deploy.

Unlike many generalist models that struggle with fine-grained visual details or require post-processing tools for text extraction, Qwen-VL natively integrates high-resolution image understanding, multilingual text recognition, and visual grounding into a single, unified architecture. Whether you’re building a document-processing assistant, a multilingual visual chatbot, or an AI-powered retail analyst, Qwen-VL offers a compelling alternative to closed, expensive, or fragmented solutions.

Why Qwen-VL Stands Out Among Open-Source VLMs

End-to-End Text Understanding Without External OCR

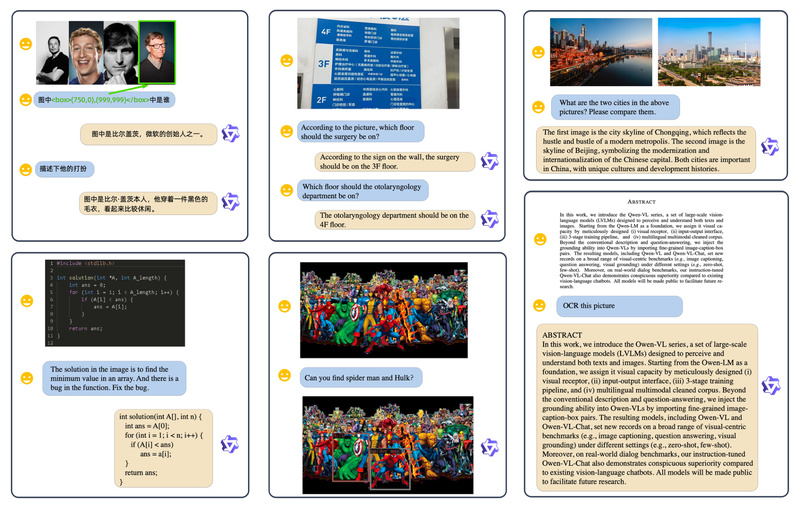

One of Qwen-VL’s most practical advantages is its ability to read and reason about text inside images without relying on external optical character recognition (OCR) tools. Most open-source vision-language models either ignore text or treat it as generic visual patterns. Qwen-VL, by contrast, is explicitly trained on image-caption-box triplets, enabling it to recognize, extract, and answer questions about text in documents, charts, signs, and forms—all through pure pixel input.

This capability is validated on benchmarks like DocVQA, TextVQA, and ChartQA, where Qwen-VL consistently outperforms other open-source models of comparable scale. For instance, the base Qwen-VL model achieves 65.1% on DocVQA and 75.7% on OCR-VQA, surpassing models like BLIP-2 and even competing with specialized architectures.

High-Resolution Visual Perception

While many open-source VLMs process images at 224×224 resolution—too coarse for reading small text or detecting fine details—Qwen-VL operates at 448×448 resolution by default. This higher fidelity enables accurate text recognition and precise bounding box localization, critical for tasks like form parsing or diagram interpretation.

Even more impressively, the newer Qwen-VL-Plus and Qwen-VL-Max variants support ultra-high-resolution inputs of over one million pixels and extreme aspect ratios (e.g., long receipts or panoramic screenshots), making them suitable for enterprise-grade document and image analysis.

Native Multilingual Support with Strong Chinese Capability

Qwen-VL isn’t just another English-centric model. It natively supports both English and Chinese in vision-language tasks, including bilingual text recognition in images and grounded object detection via Chinese prompts. Notably, Qwen-VL-Max outperforms GPT-4V and Gemini Ultra on Chinese multimodal benchmarks like MM-Bench-CN, a rare feat for an open model.

This makes Qwen-VL especially valuable for global applications requiring multilingual visual assistants, cross-lingual content moderation, or international customer support systems that process invoices, IDs, or product labels in multiple languages.

Built-In Visual Grounding and Bounding Box Output

Qwen-VL is the first generalist open-source model to support visual grounding in Chinese, but it also excels in English. You can ask it to “highlight the woman playing with the dog” and receive a response like <ref>Woman</ref><box>(451,379),(731,806)</box>, which can be rendered directly onto the image.

This grounding capability is evaluated on standard datasets like RefCOCO, RefCOCO+, and GRIT, where Qwen-VL achieves state-of-the-art results among generalist models, even without Chinese grounding data during training—thanks to its strong cross-lingual transfer from English grounding and Chinese captioning.

Multi-Image Interleaved Conversations

Unlike models limited to single-image inputs, Qwen-VL allows multiple images to be interleaved within a single conversation. You can upload two product photos and ask, “Which laptop has a better screen-to-body ratio?” or compare satellite images of urban development over time. This enables rich applications in e-commerce, remote sensing, medical imaging, and educational tools.

Real-World Applications That Benefit from Qwen-VL

Qwen-VL shines in scenarios where text, vision, and reasoning intersect:

- Automated Document QA: Extract answers from invoices, contracts, or government forms without OCR pipelines.

- Chart and Diagram Understanding: Interpret financial reports, scientific figures, or engineering schematics.

- E-Commerce Visual Search: Enable users to ask questions like “Show me the price tag in this photo” or “Compare these two smartphones.”

- Content Moderation with Localization: Detect and box prohibited items or sensitive content described in natural language.

- Multilingual Visual Assistants: Power customer service bots that understand screenshots of error messages or product labels in Chinese or English.

- Educational AI Tools: Solve math problems from handwritten worksheets or explain biological diagrams using grounded references.

Because Qwen-VL handles text reading and visual reasoning in one pass, it eliminates the need for complex, error-prone multimodal pipelines—reducing latency, cost, and maintenance overhead.

Getting Started: Simple, Flexible, and Developer-Friendly

Qwen-VL is designed for rapid adoption. You can run inference in just a few lines of code using Hugging Face Transformers or ModelScope:

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen-VL-Chat", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-VL-Chat", device_map="cuda", trust_remote_code=True).eval()

query = tokenizer.from_list_format([{'image': 'https://example.com/photo.jpg'},{'text': 'What does the sign say?'}

])

response, _ = model.chat(tokenizer, query=query, history=None)

print(response)

For low-resource environments, the Qwen-VL-Chat-Int4 quantized version cuts GPU memory usage by over 50% (from ~22GB to ~11GB) with minimal performance loss—ideal for edge deployment or cost-sensitive cloud workloads.

Beyond code, Qwen-VL is also accessible via web demo, mobile app, API, and Discord, enabling non-developers to test its capabilities instantly.

Fine-Tuning and Customization

Need to adapt Qwen-VL to your domain? The project supports full-parameter fine-tuning, LoRA, and Q-LoRA, with scripts provided for DeepSpeed and FSDP. Training data is formatted as JSON conversations with special tokens like <img>, <ref>, and <box>, making it straightforward to inject custom grounding or text-reading logic.

For example, you can teach the model to recognize your company’s invoice layout or localize specific UI elements in mobile app screenshots—extending its out-of-the-box capabilities without architectural changes.

Limitations and Practical Considerations

While powerful, Qwen-VL has a few constraints to consider:

- Hardware Requirements: The base model (~7B parameters) benefits from a GPU with ≥24GB VRAM (though Int4 reduces this to ~12GB).

- Language Coverage: Although strong in English and Chinese, support for other languages (e.g., Arabic or Cyrillic scripts) is limited.

- Reasoning Depth: On extremely complex multimodal reasoning (e.g., advanced physics diagrams), proprietary models like GPT-4V may still hold an edge—though Qwen-VL-Max closes this gap significantly.

- Zero-Shot Grounding in Non-English Languages: Chinese grounding works surprisingly well without direct training, but performance may vary for other languages.

Summary

Qwen-VL redefines what’s possible with open-source vision-language models. By delivering high-resolution text reading, precise visual grounding, multilingual support, and multi-image reasoning in a single, commercially usable package, it removes major barriers to building real-world multimodal applications.

For technical decision-makers, Qwen-VL offers a future-proof, vendor-lock-in-free foundation that combines state-of-the-art performance, flexible deployment options, and end-to-end multimodal understanding—all without hidden costs or external dependencies. Whether you’re prototyping a research idea or scaling an enterprise AI product, Qwen-VL is a smart, capable, and accessible choice.