Stereo matching, the task of estimating per-pixel disparity (and therefore depth) from a pair of rectified images, is foundational in applications like autonomous driving, robotics, and 3D scene reconstruction. However, many state-of-the-art deep learning approaches rely heavily on 3D convolutions, which bring cubic computational complexity and high memory demands. These characteristics make them impractical for real-time or resource-constrained environments.
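To make the memory argument concrete, the sketch below compares a 4D concatenation-style cost volume (channels × disparities × height × width), the kind processed by 3D convolutions, with a 3D correlation-style volume (disparities × height × width) that 2D operations can handle. The resolution (1/4 of a 384×1248 input), the 192-pixel disparity range, and the 64 channels are illustrative assumptions, not figures from the AANet paper.

```python
# Rough memory estimate for cost volumes stored as float32 (4 bytes per value).
# All sizes are illustrative assumptions, not figures from the AANet paper.

BYTES_PER_FLOAT32 = 4


def volume_mib(*dims: int) -> float:
    """Size in MiB of a dense float32 tensor with the given dimensions."""
    count = 1
    for d in dims:
        count *= d
    return count * BYTES_PER_FLOAT32 / 2**20


# Assumed setup: volume built at 1/4 of a 384x1248 input,
# 192-pixel disparity range, 64 channels (32 left + 32 right features).
height, width = 384 // 4, 1248 // 4
max_disp = 192 // 4
channels = 64

concat_4d = volume_mib(channels, max_disp, height, width)  # C x D x H x W, fed to 3D convs
corr_3d = volume_mib(max_disp, height, width)              # D x H x W, usable by 2D ops

print(f"4D concatenation volume: {concat_4d:.1f} MiB")
print(f"3D correlation volume:   {corr_3d:.1f} MiB")
print(f"ratio: {concat_4d / corr_3d:.0f}x")
```

Under these assumptions the input volume alone is 351 MiB versus about 5.5 MiB, a factor equal to the channel count. A stack of 3D convolutions also keeps several such activations alive during training, so peak usage is a multiple of the first figure.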

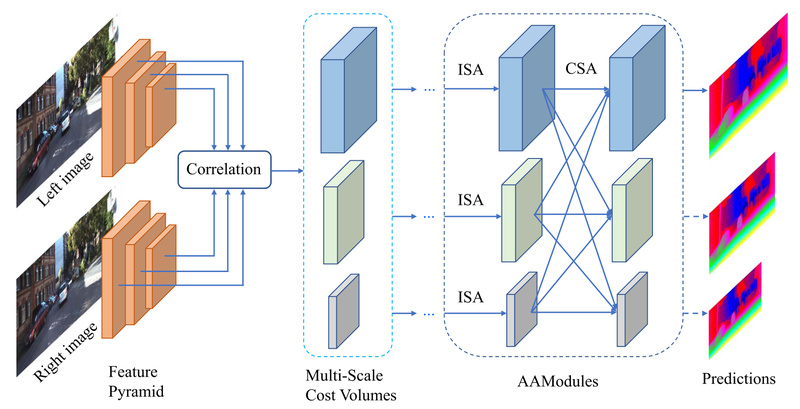

Enter AANet (Adaptive Aggregation Network for Efficient Stereo Matching). Introduced at CVPR 2020, AANet rethinks cost aggregation, the core step in stereo matching, by eliminating 3D convolutions entirely. Instead, it uses two lightweight, complementary modules: a sparse-points-based intra-scale cost aggregation (ISA) that preserves sharp disparity edges, and a learned cross-scale cost aggregation (CSA) that handles large textureless regions. The result? A stereo matching model that runs at about 62 ms per KITTI-resolution image pair (384×1248) while delivering accuracy competitive with much slower 3D-convolution-based methods.
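The intra-scale idea can be illustrated in one dimension: instead of averaging the matching cost over a fixed window, each pixel samples the cost at a few sparse positions, each shifted by a learned offset, and combines the samples with weights and per-sample modulation factors. The sketch below is a simplified, hypothetical restatement on a single disparity slice of one image row. The hand-picked offsets, weights, and modulation values stand in for what a network would predict, and the function names are illustrative; the actual module works in 2D and is built on deformable convolution.

```python
import math
from typing import Sequence


def sample_linear(row: Sequence[float], x: float) -> float:
    """Linearly interpolate `row` at fractional position x; positions outside the row read as 0."""
    x0 = math.floor(x)
    frac = x - x0

    def at(i: int) -> float:
        return row[i] if 0 <= i < len(row) else 0.0

    return (1.0 - frac) * at(x0) + frac * at(x0 + 1)


def sparse_aggregate(
    cost_row: Sequence[float],
    p: int,
    base_offsets: Sequence[int],
    learned_offsets: Sequence[float],
    weights: Sequence[float],
    modulation: Sequence[float],
) -> float:
    """Aggregated cost at pixel p: sum_k w_k * C(p + p_k + dp_k) * m_k.

    cost_row        -- matching cost for one disparity along one image row
    base_offsets    -- regular sampling pattern p_k, e.g. (-1, 0, 1)
    learned_offsets -- per-point shifts dp_k (a network would predict these)
    weights         -- aggregation weights w_k
    modulation      -- per-point factors m_k in [0, 1] that can mute a sample
    """
    return sum(
        w * sample_linear(cost_row, p + pk + dpk) * m
        for pk, dpk, w, m in zip(base_offsets, learned_offsets, weights, modulation)
    )


row = [0.0, 1.0, 2.0, 3.0, 4.0]
# No offsets, uniform weights: a plain 3-tap average around p = 2.
print(sparse_aggregate(row, 2, [-1, 0, 1], [0.0, 0.0, 0.0], [1 / 3] * 3, [1.0] * 3))
# Shift the centre sample half a pixel right and mute the left sample,
# e.g. because it falls across a depth edge.
print(sparse_aggregate(row, 2, [-1, 0, 1], [0.0, 0.5, 0.0], [1 / 3] * 3, [0.0, 1.0, 1.0]))
```

With zero offsets and full modulation this reduces to ordinary window averaging; the offsets and modulation are what let aggregation avoid mixing costs across object boundaries.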

For practitioners who need to deploy stereo vision in latency-sensitive or memory-limited systems, AANet offers a rare combination: high speed, strong accuracy, and a modular, easily modified architecture, all without the computational overhead of 3D convolutions.

Why AANet Solves a Real-World Problem

Traditional stereo matching pipelines, even when powered by deep learning, often bottleneck on processing a 4D cost volume with 3D convolutions. Models like PSMNet or GA-Net achieve high accuracy, but their inference times range from hundreds of milliseconds to several seconds per image pair. That is too slow for real-time applications.

AANet directly addresses this gap. By replacing 3D convolutions with efficient 2D operations and adaptive aggregation strategies, it achieves:

- 41× speedup over GC-Net

- 4× speedup over PSMNet

- 38× speedup over GA-Net
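Read together with AANet's roughly 62 ms runtime, these speedup factors imply approximate baseline runtimes. The sketch below only performs that multiplication; it assumes all the numbers were measured under the same setup and is not a substitute for the paper's own timing table.

```python
AANET_MS = 62.0  # AANet runtime per KITTI-resolution pair, as reported in this article

# Speedup factors of AANet over each baseline, as listed above.
speedups = {"GC-Net": 41, "PSMNet": 4, "GA-Net": 38}

# If AANet is N times faster, the baseline takes roughly N times as long.
implied_ms = {name: AANET_MS * factor for name, factor in speedups.items()}

for name, ms in implied_ms.items():
    print(f"{name:>7}: ~{ms:.0f} ms per pair (~{1000 / ms:.1f} FPS)")
print(f"  AANet: ~{AANET_MS:.0f} ms per pair (~{1000 / AANET_MS:.1f} FPS)")
```

The implied figures span roughly 0.25 s per pair for PSMNet to about 2.5 s for GC-Net, versus about 16 frames per second for AANet.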

Speed does not come at a large cost in accuracy. In fact, when integrated into fast baselines like StereoNet, AANet's aggregation improves their accuracy. On standard benchmarks such as Scene Flow and KITTI, AANet delivers results that rival top models while running at about 62 ms per image pair on a single GPU.

This efficiency makes AANet particularly valuable in edge deployments, such as automotive perception stacks, drone navigation, or mobile robotics, where every millisecond and megabyte counts.

Modular Design for Customization and Experimentation

One of AANet’s most underappreciated strengths is its modular architecture. The stereo matching pipeline is explicitly decomposed into five interchangeable components:

- Feature extraction

- Cost volume construction

- Cost aggregation (where AANet’s ISA and CSA shine)

- Disparity computation

- Disparity refinement

This design allows researchers and engineers to mix and match modules—swap in a new feature extractor, test alternative refinement heads, or plug AANet’s aggregation into an existing pipeline. The codebase supports this flexibility: adding a new component often requires only creating a Python file and registering it in the main model definition.
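The sketch below mirrors that mix-and-match idea with a small registry; it is hypothetical and is not AANet's code. `STAGES`, `register`, `run_pipeline`, and the toy stage functions are illustrative names, and the stages operate on single rows of scalar values so the example runs without PyTorch. The cost stage uses a simple absolute difference rather than the feature correlation AANet uses, aggregation is a pass-through placeholder, and disparity is regressed with a soft argmin (a differentiable expected disparity, as popularized by GC-Net).

```python
import math
from typing import Callable, Dict, List

Row = List[float]

# Hypothetical registry: stage name -> {variant name -> function}.
STAGES: Dict[str, Dict[str, Callable]] = {}


def register(stage: str, name: str) -> Callable[[Callable], Callable]:
    """Decorator that files a function under a pipeline stage and a variant name."""
    def wrap(fn: Callable) -> Callable:
        STAGES.setdefault(stage, {})[name] = fn
        return fn
    return wrap


@register("features", "identity")
def identity_features(row: Row) -> Row:
    return list(row)  # a real model would run a CNN here


@register("cost_volume", "abs_diff")
def abs_diff_volume(left: Row, right: Row, max_disp: int) -> List[Row]:
    """cost[d][x] = |left[x] - right[x - d]|; off-image candidates get a large cost."""
    off_image = 1e3
    return [
        [abs(left[x] - right[x - d]) if x - d >= 0 else off_image for x in range(len(left))]
        for d in range(max_disp)
    ]


@register("aggregation", "none")
def no_aggregation(cost: List[Row]) -> List[Row]:
    return cost  # ISA/CSA-style modules would plug in here


@register("disparity", "soft_argmin")
def soft_argmin(cost: List[Row], temperature: float = 0.1) -> Row:
    """Per-pixel expected disparity under softmax(-cost / temperature)."""
    disparities = []
    for x in range(len(cost[0])):
        scores = [-cost[d][x] / temperature for d in range(len(cost))]
        peak = max(scores)  # subtract the max for numerical stability
        probs = [math.exp(s - peak) for s in scores]
        disparities.append(sum(d * p for d, p in enumerate(probs)) / sum(probs))
    return disparities


@register("refinement", "none")
def no_refinement(disp: Row) -> Row:
    return disp


def run_pipeline(left: Row, right: Row, max_disp: int, config: Dict[str, str]) -> Row:
    """Run the five stages, looking each one up by the variant named in config."""
    extract = STAGES["features"][config["features"]]
    cost = STAGES["cost_volume"][config["cost_volume"]](extract(left), extract(right), max_disp)
    cost = STAGES["aggregation"][config["aggregation"]](cost)
    disp = STAGES["disparity"][config["disparity"]](cost)
    return STAGES["refinement"][config["refinement"]](disp)


# Toy rows: the left row is the right row shifted two pixels to the right.
right = [0.0, 5.0, 1.0, 8.0, 3.0, 9.0, 2.0, 7.0, 4.0, 6.0]
left = [0.0, 0.0] + right[:-2]
config = {"features": "identity", "cost_volume": "abs_diff",
          "aggregation": "none", "disparity": "soft_argmin", "refinement": "none"}
print([round(d, 2) for d in run_pipeline(left, right, 4, config)])
```

Swapping a stage, for example replacing `no_aggregation` with a learned aggregation, means registering another function and changing one entry in `config`; the rest of the pipeline stays untouched.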

Moreover, the repository includes both the original AANet and the enhanced AANet+, which offers slightly better accuracy with marginally improved speed—giving users an immediate upgrade path.

Getting Started Is Straightforward

AANet’s GitHub repository is unusually complete for an academic project. It provides end-to-end tooling:

- Installation: A single `environment.yml` file sets up dependencies via Conda (PyTorch 1.2, CUDA 10.0, Python 3.7). A simple shell script compiles the required deformable convolution layer.

- Data handling: Clear instructions for organizing KITTI 2012/2015 and Scene Flow datasets. Support for pseudo ground truth labels is included to boost training quality.

- Inference & Prediction: Run pre-trained models on official test sets or your own rectified stereo pairs with provided shell scripts (`aanet_inference.sh`, `aanet_predict.sh`).

- Training & Evaluation: Full training scripts for multi-GPU setups, TensorBoard integration, and on-the-fly validation. The code even guides you on how to train on custom datasets.

This “batteries-included” approach lowers the barrier to entry, letting practitioners go from cloning the repository to a first depth map in minutes rather than days.

Ideal Use Cases

AANet excels in scenarios where efficiency, reliability, and deployment readiness matter more than marginal accuracy gains:

- Autonomous vehicles: Real-time disparity estimation for obstacle detection and free-space segmentation.

- Embedded vision systems: Stereo depth on drones, robots, or AR/VR headsets with limited compute.

- Industrial inspection: Fast 3D profiling of objects on conveyor belts using calibrated stereo cameras.

- Academic prototyping: A high-performance, easy-to-modify baseline for stereo matching research.

Crucially, AANet assumes rectified stereo image pairs as input, which is standard in most calibrated setups. It is not designed for uncalibrated images, monocular depth, or non-stereo modalities.
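Because rectified input is a hard requirement, a cheap sanity check before inference is to confirm that matched points in the two images lie on (nearly) the same row; once a disparity is available, depth follows from Z = f·B/d. The sketch below assumes you already have keypoint matches from some external matcher. The function names, the 1-pixel tolerance, and the camera values in the usage lines are illustrative assumptions.

```python
from statistics import median
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixels
Match = Tuple[Point, Point]  # (left point, right point)


def looks_rectified(matches: List[Match], max_row_error_px: float = 1.0) -> bool:
    """True if matched points lie on (nearly) the same row.

    Uses the median vertical offset so a few bad matches do not fail the check.
    """
    row_errors = [abs(left[1] - right[1]) for left, right in matches]
    return median(row_errors) <= max_row_error_px


def disparity_to_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in metres for a rectified pair: Z = f * B / d, with d > 0."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical matches from an external keypoint matcher: ((x_l, y_l), (x_r, y_r)).
matches = [((100.0, 50.2), (80.0, 50.0)), ((300.0, 120.0), (290.0, 120.4)),
           ((410.0, 200.0), (395.0, 231.0))]  # the last one is an outlier
print(looks_rectified(matches))
# Roughly KITTI-like camera (assumed): f = 720 px, baseline = 0.54 m.
print(disparity_to_depth(20.0, focal_px=720.0, baseline_m=0.54))
```

Depth comes out in the same unit as the baseline, and it grows quickly as disparity shrinks, which is why small disparity errors matter most for distant objects.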

Limitations and Practical Notes

While AANet is highly efficient, it’s important to understand its boundaries:

- Input constraint: Only works with rectified stereo pairs. Uncalibrated or single-image inputs are unsupported.

- Hardware dependency: Designed for GPU execution. CPU inference is possible but significantly slower.

- Software stack: Originally built for PyTorch 1.2 and CUDA 10.0. Newer environments may require minor adaptation (though the architecture itself is framework-agnostic).

- Accuracy trade-off: While competitive, AANet may not match the absolute peak accuracy of slower 3D-convolution models in extremely challenging textureless or reflective regions. However, for most practical applications, it offers a very favorable speed-accuracy balance.

Also note: the authors have since released GMStereo, a more advanced successor that unifies stereo, flow, and depth estimation. But for those seeking a lightweight, well-documented, and standalone stereo matcher, AANet remains a robust and practical choice.

Summary

AANet redefines what’s possible in efficient stereo matching. By eliminating 3D convolutions and introducing adaptive, lightweight aggregation modules, it delivers real-time performance with little loss in accuracy. Its modular design, full tooling suite, and strong results on standard benchmarks make it an excellent choice for engineers and researchers building depth-aware systems under real-world constraints. If your project demands fast, deployable stereo matching, AANet deserves a place in your toolkit.