As autonomous AI agents become central to real-world applications—from customer service bots to robotic process automation—the demand for Large Action Models (LAMs) that reliably execute complex, multi-step tasks is surging. Yet practitioners face significant bottlenecks: agent data comes in wildly inconsistent formats, training pipelines are slow and hard to scale, and tool-calling behavior often lacks robustness.

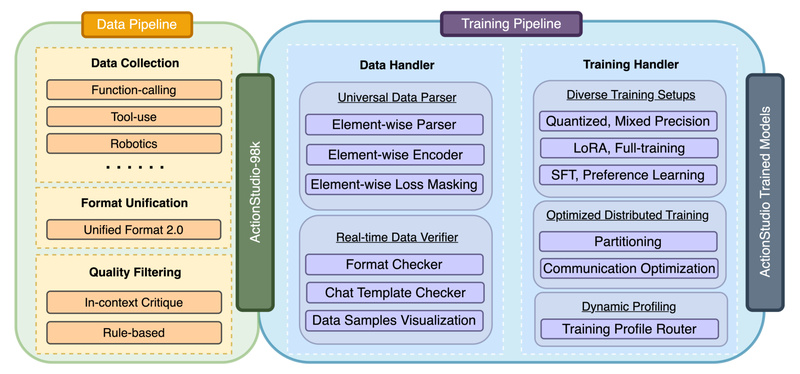

Enter ActionStudio, a lightweight, open-source framework from Salesforce AI Research designed specifically to streamline the data processing, training, and deployment of Large Action Models. Built to address the fragmentation and inefficiency plaguing agentic AI development, ActionStudio offers a unified infrastructure that delivers up to 9x higher training throughput than existing frameworks, while keeping data quality high and staying compatible with standard inference stacks such as vLLM and Hugging Face Transformers.

For technical decision-makers, research teams, and developers building next-generation autonomous agents, ActionStudio isn’t just another toolkit—it’s a foundational layer for scalable, reproducible, and high-performance agent training.

Why ActionStudio Solves Real Pain Points

Traditional workflows for training action-capable models often involve stitching together ad-hoc scripts to convert agent trajectories from web navigation logs, API call sequences, or simulated environments into a format suitable for fine-tuning. This leads to:

- Data silos: Each environment (e.g., WebShop, ToolBench, custom APIs) produces trajectories with different structures.

- Training instability: Without standardized preprocessing, models overfit to specific data sources or fail to generalize across tasks.

- Low throughput: Distributed training setups are poorly optimized for agentic workloads, wasting GPU resources.

ActionStudio directly tackles these issues through a purpose-built architecture centered on three pillars: unification, efficiency, and verification.

Core Features That Set ActionStudio Apart

Unified Format 2.0: One Standard for All Agent Trajectories

At the heart of ActionStudio is Unified Format 2.0, a schema that normalizes diverse agent trajectories—whether from GUI interactions, function-calling dialogues, or multi-turn planning sequences—into a consistent, structured representation. This eliminates the need for custom data loaders per environment and ensures balanced exposure during training.

By converting raw logs into this canonical format, teams can mix data from public benchmarks (e.g., BFCL, τ-bench) with proprietary agent traces without format conflicts. The result? Models that generalize across tasks and tools.
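To make the idea concrete, here is a minimal sketch of normalizing two differently shaped logs into one shared message schema. The input layouts (a web log with `instruction`/`steps`, a dialogue with `turns`) and the output fields (`source`, `tools`, `messages` with `role`/`tool_calls`) are hypothetical stand-ins chosen for illustration; they are not the actual Unified Format 2.0 schema, which is defined in the ActionStudio repository.

```python
import json
from typing import Any

# NOTE: every field name below is hypothetical; this is NOT the real Unified Format 2.0 schema.


def from_web_log(log: dict[str, Any]) -> dict[str, Any]:
    """Convert a web-navigation log (instruction + steps) into the shared schema."""
    messages: list[dict[str, Any]] = [{"role": "user", "content": log["instruction"]}]
    for step in log["steps"]:
        # Each browser action becomes an assistant tool call...
        messages.append({
            "role": "assistant",
            "tool_calls": [{"name": step["action"], "arguments": step.get("args", {})}],
        })
        # ...and the page observation that follows becomes a tool message.
        messages.append({"role": "tool", "content": step["observation"]})
    return {"source": "web", "tools": [], "messages": messages}


def from_fc_dialogue(dialogue: dict[str, Any]) -> dict[str, Any]:
    """Convert a function-calling dialogue (speaker turns + tool specs) into the shared schema."""
    messages: list[dict[str, Any]] = []
    for turn in dialogue["turns"]:
        if turn["speaker"] == "human":
            messages.append({"role": "user", "content": turn["text"]})
        elif "call" in turn:
            messages.append({"role": "assistant", "tool_calls": [turn["call"]]})
        elif "result" in turn:
            # Tool results are serialized to text so every source stores them the same way.
            messages.append({"role": "tool", "content": json.dumps(turn["result"])})
        else:
            messages.append({"role": "assistant", "content": turn["text"]})
    return {"source": "fc", "tools": dialogue.get("tools", []), "messages": messages}
```

Once both sources share one shape, a single loader and a single verifier can handle them, which is the practical payoff of a canonical format.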

Optimized Multi-Node Training with 9x Throughput Gain

ActionStudio’s training pipeline is engineered for speed and scalability. Leveraging DeepSpeed and multi-node distributed strategies, it achieves up to 9x higher throughput than competing agentic training frameworks.

Key optimizations include:

- Dynamic batch construction from unified trajectories

- Device-independent random sampling to preserve training fairness

- Automatic checkpoint merging and training-step calculation

These features significantly reduce time-to-model, enabling rapid iteration on agent capabilities.
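Two of the bookkeeping ideas in that list can be illustrated with a short, generic sketch: shuffle sample indices with a seed that depends only on the run seed and epoch (never on the number of GPUs), then let each rank take a strided slice; and derive the optimizer step count from the dataset size and the global batch size. The function names, the strided sharding scheme, and the "partial last batch counts as a step" convention are assumptions for illustration, not ActionStudio's implementation.

```python
import random


def global_order(num_samples: int, seed: int, epoch: int) -> list[int]:
    """Shuffle all sample indices with an RNG keyed only on (seed, epoch).

    World size and rank never enter the seed, so the global order is identical
    whether the job runs on 1, 8, or 64 GPUs.
    """
    order = list(range(num_samples))
    # random.Random converts a str seed to an int deterministically,
    # so this is reproducible across processes (unaffected by PYTHONHASHSEED).
    random.Random(f"{seed}:{epoch}").shuffle(order)
    return order


def shard_for_rank(order: list[int], rank: int, world_size: int) -> list[int]:
    """Strided slice of the shared order for one rank (shard sizes differ by at most one)."""
    return order[rank::world_size]


def steps_per_epoch(num_samples: int, per_device_batch: int, world_size: int, grad_accum: int) -> int:
    """Optimizer steps per epoch, assuming a final partial global batch still counts as a step."""
    global_batch = per_device_batch * world_size * grad_accum
    # Integer ceiling division avoids float rounding for very large datasets.
    return (num_samples + global_batch - 1) // global_batch
```

For example, 98,000 trajectories at a per-device batch of 4 on 8 GPUs with 2 accumulation steps gives a global batch of 64 and ceil(98000 / 64) = 1,532 steps per epoch.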

Built-In Data Preprocessing and Real-Time Verification

Garbage in, garbage out—especially in agentic systems where a single malformed tool call can derail an entire task. ActionStudio includes integrated preprocessing modules and a real-time data verifier that flags inconsistencies (e.g., mismatched tool arguments, invalid state transitions) before they reach the training loop.

This ensures that only high-quality, executable trajectories make it into the final dataset—such as the included actionstudio-98k, a curated collection of 98,000 verified agent trajectories released to support community research.
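A verifier of this kind can be approximated with a few structural checks. The sketch below validates each tool call in a trajectory against JSON-Schema-style tool definitions (unknown tool, missing required argument, unexpected argument, wrong primitive type) and flags a tool call that is not immediately followed by a tool result. It assumes a hypothetical message layout in which assistant turns carry a `tool_calls` list of `{"name", "arguments"}` objects; ActionStudio's own verifier and its rules may differ.

```python
from typing import Any

# JSON-Schema primitive type names mapped to Python types for a shallow check.
JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def verify_trajectory(tools: list[dict[str, Any]], messages: list[dict[str, Any]]) -> list[str]:
    """Return human-readable issues; an empty list means the trajectory passed."""
    specs = {t["name"]: t.get("parameters", {}) for t in tools}
    issues: list[str] = []
    for i, msg in enumerate(messages):
        calls = msg.get("tool_calls", [])
        for call in calls:
            name, args = call.get("name"), call.get("arguments", {})
            if name not in specs:
                issues.append(f"msg {i}: unknown tool {name!r}")
                continue
            props = specs[name].get("properties", {})
            for req in specs[name].get("required", []):
                if req not in args:
                    issues.append(f"msg {i}: {name} missing required argument {req!r}")
            for key, value in args.items():
                if key not in props:
                    issues.append(f"msg {i}: {name} got unexpected argument {key!r}")
                    continue
                type_name = props[key].get("type")
                expected = JSON_TYPES.get(type_name)
                wrong = expected is not None and not isinstance(value, expected)
                # bool subclasses int in Python, so True must not pass as an integer/number.
                if isinstance(value, bool) and type_name in ("integer", "number"):
                    wrong = True
                if wrong:
                    issues.append(f"msg {i}: {name}.{key} should be {type_name}")
        # State-transition check: every tool call needs a tool result right after it.
        if calls and (i + 1 == len(messages) or messages[i + 1].get("role") != "tool"):
            issues.append(f"msg {i}: tool call not followed by a tool result")
    return issues
```

Trajectories that return a non-empty list would be dropped or repaired before they reach the training set.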

Seamless Deployment with vLLM and Transformers

Trained models are only useful if they can be deployed reliably. ActionStudio-trained xLAM models (e.g., the xLAM-2-fc series) are fully compatible with:

- Hugging Face Transformers for simple inference

- vLLM (v0.6.5+) for high-throughput serving with native tool-calling support via a dedicated parser plugin

This interoperability means teams can move smoothly from research to production without rewriting inference logic.
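Conceptually, a tool-call parser turns the model's raw text into the structured `tool_calls` field that OpenAI-compatible clients expect. The sketch assumes the model emits a JSON list of `{"name": ..., "arguments": {...}}` objects (or a single such object), optionally wrapped in a Markdown code fence; that output format is an assumption, and the actual `xlam_tool_call_parser.py` plugin for vLLM may handle more cases or a different format.

```python
import json
import uuid
from typing import Any

# Markdown code-fence marker, built indirectly so this snippet stays fence-safe.
FENCE = "`" * 3


def parse_tool_calls(raw: str) -> dict[str, Any]:
    """Convert raw model text into an OpenAI-style assistant message.

    Assumed (hypothetical) output format: a JSON list of {"name", "arguments"}
    objects, or a single such object, optionally fenced. Anything else is
    returned unchanged as plain-text content.
    """
    text = raw.strip()
    if text.startswith(FENCE) and text.endswith(FENCE):
        # Drop the opening fence (plus an optional "json" tag) and the closing fence.
        text = text[len(FENCE):-len(FENCE)].strip()
        text = text.removeprefix("json").strip()
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError:
        return {"role": "assistant", "content": raw, "tool_calls": []}
    if isinstance(parsed, dict):
        parsed = [parsed]
    if not (isinstance(parsed, list) and parsed
            and all(isinstance(c, dict) and "name" in c for c in parsed)):
        return {"role": "assistant", "content": raw, "tool_calls": []}
    tool_calls = [
        {
            "id": f"call_{uuid.uuid4().hex[:8]}",
            "type": "function",
            # OpenAI-compatible APIs carry arguments as a JSON-encoded string.
            "function": {"name": c["name"], "arguments": json.dumps(c.get("arguments", {}))},
        }
        for c in parsed
    ]
    return {"role": "assistant", "content": None, "tool_calls": tool_calls}
```

Falling back to plain content when parsing fails keeps ordinary chat replies intact instead of raising an error.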

Ideal Use Cases for ActionStudio

ActionStudio shines in scenarios requiring robust, tool-aware agents:

- Function-calling assistants: Train models to invoke APIs accurately across domains (e.g., weather, finance, CRM).

- Multi-turn agent-human simulations: Leverage datasets like APIGen-MT-5k to fine-tune models on realistic interaction flows.

- Web or GUI automation agents: Standardize navigation traces from environments like WebShop into trainable sequences.

- Agentic dataset curation: Use ActionStudio’s conversion and verification tools to build high-quality internal datasets.

Whether you’re a startup prototyping an AI copilot or a research lab benchmarking next-gen agents, ActionStudio provides the scaffolding to go from raw logs to deployable models faster and more reliably.

Getting Started: From Installation to Inference

ActionStudio is designed for ease of adoption:

- Install in editable mode:

```bash
conda create --name actionstudio python=3.10
conda activate actionstudio
bash requirements.sh
pip install -e .
```

- Explore data: The `datasets/` directory includes open-sourced unified trajectories such as actionstudio-98k.

- Configure training: Use the YAML files in `examples/data_configs/` to define data mixtures, along with DeepSpeed configs for distributed runs.

- Fine-tune: Launch training with the provided bash scripts (`examples/trainings/`), which automatically handle checkpointing and step calculation.

- Deploy: Load trained models with Transformers, or serve them with vLLM using the `xlam_tool_call_parser.py` plugin for accurate function calling.
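The data-mixture step can be pictured as splitting a sample budget across sources by weight. In this sketch the config is a plain dict standing in for a parsed YAML file, and its keys (`total_samples`, `datasets`, `name`, `weight`) are hypothetical, not the format of the files in `examples/data_configs/`. Counts use largest-remainder rounding so they always sum to the budget, and a source smaller than its share is upsampled with replacement.

```python
import random
from typing import Any

# Hypothetical mixture config, shaped like what a YAML data config might parse into.
# Key names are illustrative, not ActionStudio's actual schema.
CONFIG: dict[str, Any] = {
    "total_samples": 10,
    "datasets": [
        {"name": "actionstudio-98k", "weight": 0.7},
        {"name": "apigen-mt-5k", "weight": 0.3},
    ],
}


def mixture_counts(config: dict[str, Any]) -> dict[str, int]:
    """Split total_samples across sources in proportion to weight (largest-remainder rounding)."""
    total = config["total_samples"]
    weights = {d["name"]: d["weight"] for d in config["datasets"]}
    norm = sum(weights.values())
    exact = {n: total * w / norm for n, w in weights.items()}
    counts = {n: int(x) for n, x in exact.items()}
    leftover = total - sum(counts.values())
    # Give the remaining samples to the sources with the largest fractional parts.
    for n in sorted(exact, key=lambda n: exact[n] - counts[n], reverse=True)[:leftover]:
        counts[n] += 1
    return counts


def build_mixture(config: dict[str, Any], pools: dict[str, list[Any]], seed: int = 0) -> list[Any]:
    """Draw each source's share with a seeded RNG, then shuffle the combined list."""
    rng = random.Random(seed)
    mixed: list[Any] = []
    for name, k in mixture_counts(config).items():
        pool = pools[name]
        # Sample without replacement when possible; upsample with replacement otherwise.
        mixed.extend(rng.sample(pool, k) if k <= len(pool) else rng.choices(pool, k=k))
    rng.shuffle(mixed)
    return mixed
```

Fixing the seed makes the mixture reproducible, so a change in results can be traced to the weights rather than to sampling noise.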

For inference, Transformers works with standard chat templates and tool definitions, while vLLM exposes an OpenAI-compatible API, which makes trained models easy to plug into agent orchestration frameworks.
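On the vLLM side, a client talks to the server through the OpenAI chat-completions request shape. The sketch below builds such a request with one tool definition and a temperature of 0 for steadier tool calls, and can POST it using only the standard library. It assumes a vLLM server is already running at `http://localhost:8000/v1` with tool calling enabled; the model name and the `get_weather` tool are illustrative placeholders.

```python
import json
import urllib.request
from typing import Any

# Assumptions: an OpenAI-compatible vLLM server is already running at BASE_URL with
# tool calling enabled, and MODEL matches the name it was launched with.
BASE_URL = "http://localhost:8000/v1"
MODEL = "Salesforce/xLAM-2-1b-fc-r"  # illustrative; substitute your own checkpoint

WEATHER_TOOL: dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_weather",  # hypothetical tool for illustration
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(user_text: str, tools: list[dict[str, Any]], temperature: float = 0.0) -> dict[str, Any]:
    """Build an OpenAI-style /chat/completions body; a low temperature favors stable tool calls."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_text}],
        "tools": tools,
        "tool_choice": "auto",
        "temperature": temperature,
    }


def send(body: dict[str, Any]) -> dict[str, Any]:
    """POST the body to the server and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    body = build_request("What's the weather in Paris?", [WEATHER_TOOL])
    print(json.dumps(body, indent=2))
    # reply = send(body)  # uncomment once a server is running
    # print(reply["choices"][0]["message"].get("tool_calls"))
```

Because the request follows the OpenAI shape, the same body works with any OpenAI-compatible client library as well as with raw HTTP.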

Key Limitations and Practical Considerations

While powerful, ActionStudio comes with important boundaries:

- Research-oriented licensing: The code is released under Apache 2.0, but the data is licensed under CC-BY-NC-4.0 (non-commercial), and the associated xLAM models are intended for research use.

- Partial data availability: Due to internal policies, not all xLAM training data is public—though key datasets like actionstudio-98k and APIGen-MT are fully open.

- Prompt and temperature sensitivity: Tool-calling output can vary with prompt wording and sampling settings; for more consistent tool calls, use a low temperature and explicit formatting instructions.

- Hardware requirements: Larger models (e.g., 70B) require multi-GPU setups (e.g., 4×80GB GPUs) for training or inference.

Teams should plan accordingly and validate model behavior on their specific tool schemas.
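A quick back-of-envelope calculation shows why a 70B model does not fit on a single 80GB GPU. Storing weights in bf16 or fp16 takes 2 bytes per parameter, so the weights alone need about 70 × 10^9 × 2 bytes = 140 GB, i.e. at least two 80GB GPUs before counting the KV cache, activations, or (for training) gradients and optimizer state. The helper below is a rough estimate under those assumptions (decimal GB, weights only), not a sizing tool.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights alone in decimal GB (bf16/fp16 = 2 bytes per parameter)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, gpu_gb: float, bytes_per_param: int = 2) -> int:
    """Lower bound on GPU count just to hold the weights.

    KV cache, activations, and (for training) gradients and optimizer state
    all add to this, which is why real deployments use more GPUs than this bound.
    """
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_gb)


print(weight_memory_gb(70e9))          # 140.0
print(min_gpus_for_weights(70e9, 80))  # 2
```

The gap between this two-GPU lower bound and the suggested 4×80GB setup is the headroom needed for everything besides the weights.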

Summary

ActionStudio redefines how we build Large Action Models by replacing fragmented, inefficient workflows with a unified, high-performance framework. From standardizing heterogeneous agent data to delivering up to 9x higher training throughput and straightforward deployment, it directly addresses the core challenges facing practitioners who build autonomous agents.

For those evaluating infrastructure to support agentic AI—whether for research, prototyping, or production—ActionStudio offers a compelling, open-source foundation that accelerates progress without sacrificing rigor or scalability.