In modern machine learning workflows, teams often face a tough trade-off: spend days or weeks manually tuning architectures and hyperparameters, or settle for suboptimal performance from off-the-shelf models. AdaNet addresses this dilemma head-on by offering a lightweight, TensorFlow-native AutoML framework that automatically constructs high-performing ensembles of models—with minimal human intervention.

Built on the theoretical foundations of the AdaNet algorithm introduced at ICML 2017, this open-source library seamlessly integrates with TensorFlow’s ecosystem to deliver fast, flexible, and theoretically grounded ensemble learning. Whether you’re prototyping on a new dataset, scaling across hardware, or looking to boost accuracy without deep architecture expertise, AdaNet provides a practical path forward—especially for structured data tasks where rapid iteration and reliability matter more than bleeding-edge complexity.

Why AdaNet? Solving Real Pain Points in Model Development

Many teams struggle with the “expert bottleneck”: the need for highly specialized knowledge to design, combine, and tune neural architectures. AdaNet eliminates much of this friction by automatically learning both model structure and ensemble composition in a single training call.

Instead of manually testing whether a linear model, a shallow network, or a deep architecture works best—or worse, averaging their predictions post-hoc—AdaNet evaluates candidate subnetworks on the fly, freezes promising ones, and incrementally builds a diverse, high-quality ensemble. This adaptive process is guided by an objective function with learning guarantees, meaning you get performance improvements backed by theory, not just heuristics.

For organizations lacking dedicated ML researchers but still needing robust models, AdaNet offers a rare combination: automation plus interpretability, speed plus rigor, and simplicity plus extensibility.

Key Features That Deliver Practical Value

Unified AutoML and Ensemble Learning

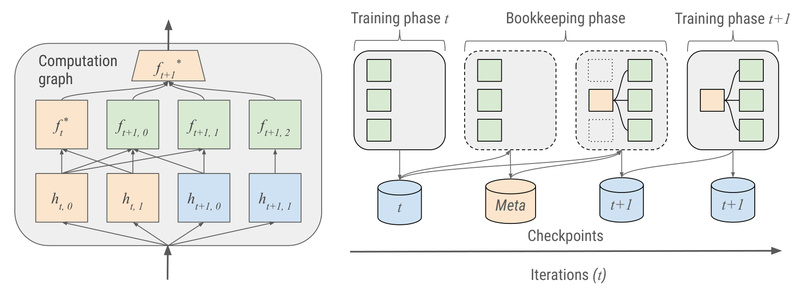

AdaNet doesn’t just select the best model—it learns how to combine models. Its core innovation lies in treating ensemble construction as part of the learning process. At each iteration, it trains new candidate subnetworks while reusing (and freezing) previously selected ones, dynamically growing an ensemble that balances diversity and accuracy.

Familiar TensorFlow Integration

AdaNet speaks the language of TensorFlow practitioners. It offers:

- A

tf.estimator.Estimator-based API (adanet.AutoEnsembleEstimator) - Compatibility with Keras-style layer definitions via

adanet.subnetwork - Full support for regression, binary classification, multi-class classification, and even multi-head tasks

This means you can plug AdaNet into existing pipelines without rewriting your data input functions or evaluation logic.

Hardware and Scale Ready

Whether you’re running on a laptop CPU or a TPU pod, AdaNet scales gracefully. It supports distributed training across multiple servers and leverages TensorFlow’s native acceleration for GPUs and TPUs—critical for teams that need to iterate quickly on large datasets.

Built-in Observability

With native TensorBoard integration, you can track ensemble growth, subnetwork losses, and final metrics in real time. This transparency helps you understand why AdaNet chose certain models—making it easier to debug, explain, or refine results.

Getting Started: A Minimal, Real-World Example

Using AdaNet is intentionally straightforward. Here’s how you’d combine a linear model and a deep neural network for a 10-class classification task:

import adanet

import tensorflow as tf

# Define your task head

head = tf.estimator.MultiClassHead(n_classes=10)

# Assume feature_columns is already defined

feature_columns = ...

# Provide candidate models as a pool

estimator = adanet.AutoEnsembleEstimator(head=head,candidate_pool={"linear": tf.estimator.LinearEstimator(head=head,feature_columns=feature_columns,optimizer=tf.keras.optimizers.Ftrl()),"dnn": tf.estimator.DNNEstimator(head=head,feature_columns=feature_columns,optimizer=tf.keras.optimizers.Adagrad(),hidden_units=[1000, 500, 100])},max_iteration_steps=50

)

# Train, evaluate, and predict—just like any TensorFlow Estimator

estimator.train(input_fn=train_input_fn, steps=100)

metrics = estimator.evaluate(input_fn=eval_input_fn)

predictions = estimator.predict(input_fn=predict_input_fn)

In this example, AdaNet automatically decides—based on validation performance—whether to keep the linear model, the DNN, both, or even variants of them across iterations. No manual blending, no cross-validation scripts, no architecture search scripts: just one train() call.

Ideal Use Cases: Where AdaNet Excels

AdaNet shines in scenarios where:

- Speed-to-prototype matters: You need a strong baseline model on a new tabular or semi-structured dataset within hours, not weeks.

- Expertise is limited: Your team has data engineering skills but lacks deep ML architecture experience.

- Diversity improves robustness: You suspect that combining simple and complex models (e.g., linear + neural) will yield better generalization—common in financial, healthcare, or operational forecasting tasks.

- Hardware flexibility is required: You train on CPUs during development but switch to GPUs or TPUs in production, and want a framework that handles both seamlessly.

It’s particularly well-suited for structured data problems—think customer churn, fraud detection, inventory forecasting—where interpretability, speed, and ensemble diversity often outweigh the need for massive vision or language transformers.

Limitations and Practical Considerations

While powerful, AdaNet isn’t a universal solution:

- TensorFlow 2.1+ dependency: You must use TensorFlow 2.1 or newer; older versions aren’t supported.

- Not actively maintained: The project notes, “This is not an official Google product,” and development has slowed in recent years. It’s stable but unlikely to receive frequent updates.

- Not designed for cutting-edge modalities: AdaNet focuses on general-purpose subnetworks (via

tf.layers) and works best with tabular or moderately complex inputs. It’s not optimized for vision transformers, diffusion models, or large language architectures.

That said, for its intended domain—automated, guaranteed ensemble learning in TensorFlow—AdaNet remains a rare blend of theoretical soundness and engineering pragmatism.

Summary

AdaNet empowers teams to automate high-quality model ensembling without sacrificing control, speed, or theoretical grounding. By unifying neural architecture search and ensemble learning into a single, TensorFlow-native workflow, it reduces reliance on manual tuning while delivering robust, explainable results. If your project involves structured data, limited ML expertise, or a need for rapid, reliable prototyping, AdaNet offers a compelling—and still relevant—AutoML solution that’s worth evaluating today.