Overview

Imagine an AI agent that can sit at your computer, look at the screen, understand what it sees, and complete complex tasks—just like you would. Whether it’s booking a flight, organizing files, or testing software across operating systems, Agent-S makes this possible. As an open-source framework for computer use agents (CUAs), Agent-S enables autonomous, human-like interaction with graphical user interfaces (GUIs) on real machines running Linux, macOS, Windows, and even Android.

Unlike traditional automation tools that rely on brittle script-based rules or single-model AI systems that struggle with long tasks and imprecise actions, Agent-S introduces a compositional generalist-specialist architecture that combines strategic reasoning with pixel-perfect grounding. The result? Reliable, scalable, and generalizable automation for real-world digital workflows.

Why Existing Computer Use Agents Fall Short

Most current AI agents that interact with GUIs suffer from three critical limitations:

- Imprecise grounding: They misidentify where to click or type because their visual understanding is coarse or inconsistent.

- Poor long-horizon planning: They get stuck or lose context on tasks requiring more than a few steps—like “fill out this form, save the PDF, and email it to your manager.”

- One-model-fits-all bottlenecks: A single large language model (LLM) tries to handle everything—reasoning, vision, action generation—leading to suboptimal performance across diverse tasks.

These issues cause frequent failures in practice: wrong buttons clicked, workflows aborted mid-task, or agents that work only in narrow, controlled environments.

Agent-S, particularly in its latest Agent S2 and Agent S3 iterations, directly addresses these pain points through architectural innovation and task-aware specialization.

Core Innovations That Make Agent-S Stand Out

Mixture-of-Grounding for Pixel-Accurate GUI Interaction

Agent-S decouples what to do from where to do it. Instead of relying solely on the main reasoning model to guess coordinates, it employs a dedicated grounding model (e.g., UI-TARS-1.5-7B) fine-tuned for GUI element detection. The system uses a Mixture-of-Grounding technique that fuses multiple visual cues to pinpoint interactive elements with high fidelity—ensuring clicks land exactly where intended, even on cluttered screens.

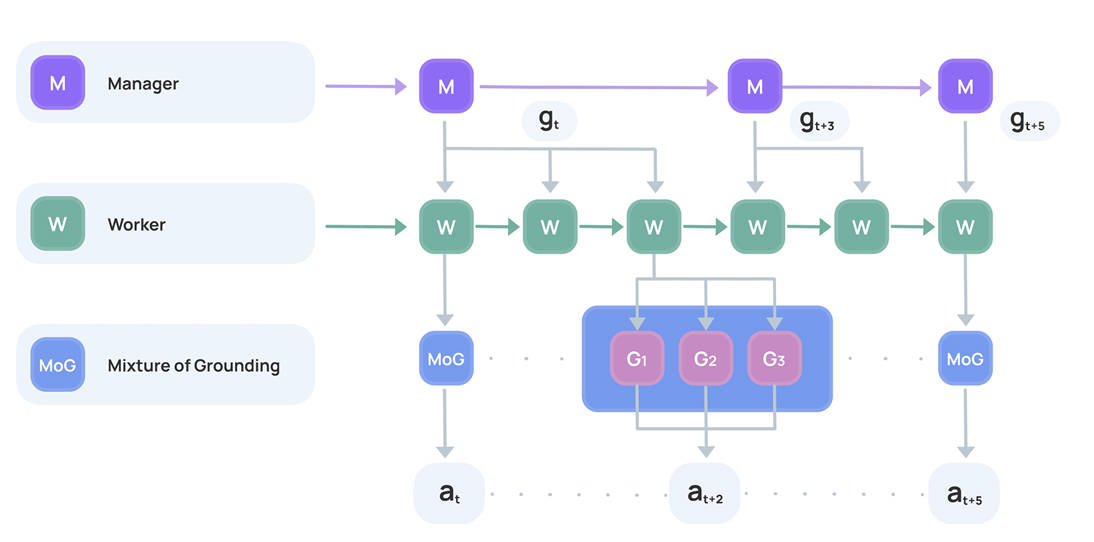

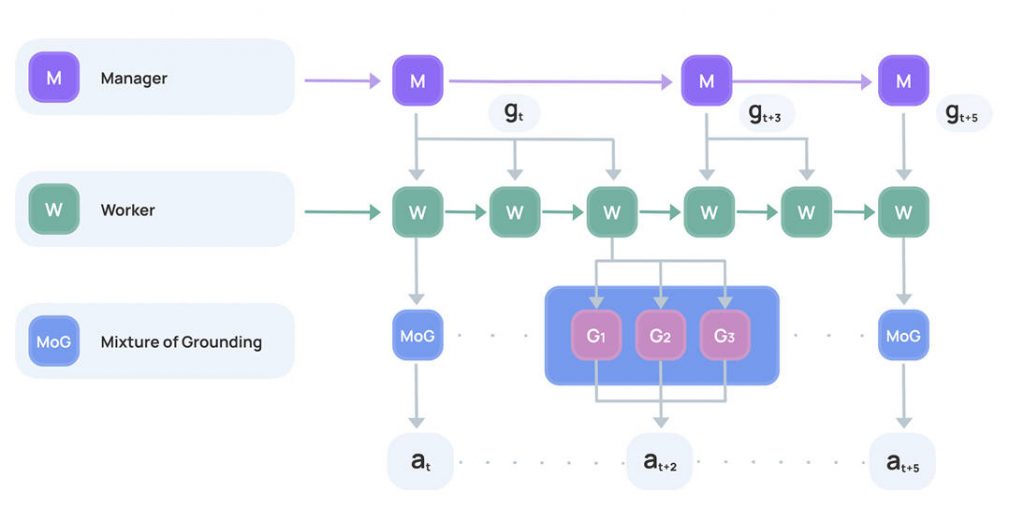
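The split between deciding what to do and deciding where to do it can be sketched in a few lines. This is only an illustration under stated assumptions: `AbstractAction`, `GroundedAction`, `ground`, and `fake_locate` are hypothetical names, not part of the Agent-S codebase, and a lookup table stands in for the grounding model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

Point = Tuple[int, int]


@dataclass
class AbstractAction:
    """What to do: the target is described in words, not pixels."""
    verb: str    # e.g. "click"
    target: str  # e.g. "the Save button"


@dataclass
class GroundedAction:
    """Where to do it: the same action with concrete screen coordinates."""
    verb: str
    x: int
    y: int


def ground(action: AbstractAction, locate: Callable[[str], Point]) -> GroundedAction:
    """Resolve a described target to coordinates using a separate locator."""
    x, y = locate(action.target)
    return GroundedAction(action.verb, x, y)


# Hypothetical stand-in for a grounding model: a fixed lookup table.
FAKE_SCREEN: Dict[str, Point] = {"the Save button": (1710, 64)}


def fake_locate(description: str) -> Point:
    return FAKE_SCREEN[description]


grounded = ground(AbstractAction("click", "the Save button"), fake_locate)
print(grounded)
```

Because the reasoning model never emits coordinates in this arrangement, the locator can be swapped for a different grounding model without touching the planning side.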

Proactive Hierarchical Planning for Long, Complex Tasks

Agent-S doesn’t just react; it plans. Its Proactive Hierarchical Planning mechanism creates multi-level action plans (e.g., high-level goals like “submit expense report” and low-level steps like “click ‘Attach Receipt’”) and dynamically refines them as the environment changes. This allows the agent to recover from unexpected UI shifts, handle branching logic, and complete tasks spanning dozens of steps.
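A toy version of plan-then-refine might look like the following. It is a sketch under assumptions, not the Agent-S planner: `run_plan`, `toy_plan`, and `toy_execute` are hypothetical, the planner is a fixed list rather than an LLM, and a simulated failure stands in for an unexpected UI shift.

```python
from typing import Callable, List

Planner = Callable[[str, List[str]], List[str]]
Executor = Callable[[str], bool]


def run_plan(goal: str, plan: Planner, execute: Executor, max_steps: int = 20) -> List[str]:
    """Work through subgoals, re-planning after every attempt."""
    done: List[str] = []
    remaining = plan(goal, done)
    steps = 0
    while remaining and steps < max_steps:
        steps += 1
        subgoal = remaining[0]
        if execute(subgoal):
            done.append(subgoal)
        # Ask the planner again so the remaining steps reflect the current state.
        remaining = plan(goal, done)
    return done


# Hypothetical low-level steps for the high-level goal "submit expense report".
STEPS = ["open expense form", "click 'Attach Receipt'", "press Submit"]


def toy_plan(goal: str, done: List[str]) -> List[str]:
    return [step for step in STEPS if step not in done]


attempts: List[str] = []


def toy_execute(subgoal: str) -> bool:
    attempts.append(subgoal)
    # Simulate a UI shift: the first attempt to attach the receipt fails.
    first_try = attempts.count(subgoal) == 1
    return not (subgoal == "click 'Attach Receipt'" and first_try)


completed = run_plan("submit expense report", toy_plan, toy_execute)
print(completed, len(attempts))
```

The failed step is retried only because the planner is consulted again after each attempt; a plan computed once up front would have no way to notice the failure.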

Compositional Generalist-Specialist Design

Rather than forcing one model to do everything, Agent-S assigns roles:

- A generalist LLM (e.g., GPT-5) handles high-level reasoning, instruction parsing, and strategic decision-making.

- A specialist grounding model focuses exclusively on visual localization.

- An optional local coding environment executes Python or Bash for file operations, data processing, or system automation—bypassing GUI interaction when code is faster and safer.

This modular approach boosts both accuracy and efficiency.
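One way to picture the role assignment is a small dispatcher that sends each step either to a GUI handler or to a code runner. The sketch below is an assumption-laden illustration: the step dictionary format, `dispatch`, and `run_code_step` are hypothetical and do not mirror how Agent-S routes work internally.

```python
import subprocess
import sys
from typing import Callable, Dict


def run_code_step(source: str, timeout_s: float = 10.0) -> str:
    """Run a Python snippet in a child interpreter and return its stdout.

    The snippet runs with the caller's user permissions.
    """
    result = subprocess.run(
        [sys.executable, "-c", source],
        capture_output=True,
        text=True,
        timeout=timeout_s,
        check=True,  # raise if the snippet exits with a non-zero status
    )
    return result.stdout.strip()


def dispatch(step: Dict[str, str], gui_handler: Callable[[str], str]) -> str:
    """Route a step to the code runner or to the GUI path."""
    if step["kind"] == "code":
        return run_code_step(step["source"])
    return gui_handler(step["target"])


# Hypothetical GUI handler; a real one would ground the target and click it.
def fake_gui(target: str) -> str:
    return f"clicked {target}"


code_result = dispatch({"kind": "code", "source": "print(2 + 3)"}, fake_gui)
gui_result = dispatch({"kind": "gui", "target": "the Save button"}, fake_gui)
print(code_result, "|", gui_result)
```

The timeout and `check=True` are deliberate: a code step that hangs or fails should surface as an error rather than silently stall the workflow.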

Real-World Use Cases Where Agent-S Delivers Value

Agent-S excels in scenarios that demand visual understanding, sequential reasoning, and cross-platform adaptability:

- Cross-platform desktop automation: Perform identical workflows on macOS, Linux, and Windows without rewriting scripts.

- End-to-end software testing: Simulate user journeys through applications, validating UI behavior across OS versions.

- Data entry and file management: Extract data from emails, populate spreadsheets, rename files in bulk, or organize folders based on content.

- Research in human-like AI: Study how agents perceive, plan, and act in real operating system environments—bridging the gap between simulation and reality.

Crucially, Agent-S operates on real machines, not simulated environments, making its capabilities directly transferable to production settings.

Getting Started: Simple Setup, Powerful Results

Agent-S is designed for developers and researchers who want to integrate computer-use automation quickly. Installation is straightforward:

    pip install gui-agents
    brew install tesseract  # Required for OCR support

You’ll need API access to:

- A reasoning model (e.g., OpenAI’s gpt-5-2025-08-07)

- A grounding model (e.g., UI-TARS-1.5-7B hosted via Hugging Face Inference Endpoints)

Then, run via CLI:

    agent_s \
        --provider openai \
        --model gpt-5-2025-08-07 \
        --ground_provider huggingface \
        --ground_url http://localhost:8080 \
        --ground_model ui-tars-1.5-7b \
        --grounding_width 1920 \
        --grounding_height 1080

For advanced use, the gui_agents Python SDK lets you embed Agent-S into custom applications, with full control over observation inputs and action execution.
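The shape of an embedding loop, with control over observation inputs and action execution, can be sketched without the SDK itself. Everything named here is an assumption made for illustration: `StubAgent`, its `predict` method, the `"DONE"` sentinel, and the observation dictionary are hypothetical and should not be read as the `gui_agents` API, whose actual classes and signatures are documented in the project repository.

```python
from typing import Callable, Dict, List


class StubAgent:
    """Hypothetical stand-in for an SDK agent; NOT the gui_agents API."""

    def __init__(self, script: List[str]) -> None:
        self._script = list(script)

    def predict(self, instruction: str, observation: Dict[str, bytes]) -> str:
        # A real agent would reason over the screenshot; this one replays a script.
        return self._script.pop(0) if self._script else "DONE"


def run_episode(
    agent: StubAgent,
    instruction: str,
    capture: Callable[[], bytes],
    act: Callable[[str], None],
    max_steps: int = 15,
) -> List[str]:
    """Observe, predict, act; stop on DONE or when the step budget runs out."""
    history: List[str] = []
    for _ in range(max_steps):
        observation = {"screenshot": capture()}  # caller controls the input
        action = agent.predict(instruction, observation)
        if action == "DONE":
            break
        act(action)  # caller controls how actions are executed
        history.append(action)
    return history


executed: List[str] = []
history = run_episode(
    StubAgent(["click Save", "type report.pdf"]),
    "save the document",
    capture=lambda: b"fake-png-bytes",
    act=executed.append,
)
print(history)
```

Passing `capture` and `act` in as callables keeps the loop testable: in production they would wrap a screenshot library and an input driver, while in tests they can be plain stubs like the ones above.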

Security Note: The optional --enable_local_env flag allows code execution (Python/Bash). Use it only in trusted environments, because the code runs with your user permissions.

Performance That Proves Real-World Readiness

Agent-S isn’t just innovative—it’s state-of-the-art:

- 69.9% success rate on OSWorld (100-step tasks), approaching 72% human performance.

- 52.8% relative improvement over prior methods on WindowsAgentArena.

- 16.5% relative gain on AndroidWorld, demonstrating strong zero-shot generalization.

- 18.9% and 32.7% relative improvements on the OSWorld 15-step and 50-step evaluations, respectively, over leading baselines such as Claude Computer Use and UI-TARS.

These benchmarks confirm Agent-S works reliably across operating systems, applications, and task lengths—without retraining.
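The figures above mix absolute success rates with relative improvements, and the two are easy to confuse. The short sketch below shows the arithmetic; the 69.9% and 72% values come from the list above, while the baseline and new scores passed to `relative_improvement` are invented purely to illustrate the formula and are not results from any benchmark.

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Percentage gain of `new` over `baseline`, relative to the baseline."""
    return (new - baseline) / baseline * 100.0


# Absolute gap to human performance on OSWorld, in percentage points.
human_gap = round(72.0 - 69.9, 1)

# Made-up scores: moving from 20.0% to 30.0% success is a 50% relative
# improvement but only a 10-point absolute one.
example_relative = relative_improvement(30.0, 20.0)
example_absolute = 30.0 - 20.0

print(human_gap, example_relative, example_absolute)
```

A relative improvement says nothing about the absolute success rate on its own, so a figure such as 52.8% needs the baseline score alongside it to be interpreted.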

Important Considerations Before Adoption

While powerful, Agent-S has practical constraints:

- Single-monitor setup only: Multi-display environments aren’t supported.

- Requires external model APIs: You must provide access to both a reasoning and grounding model.

- Grounding resolution must match: For UI-TARS-1.5-7B, use 1920x1080; for UI-TARS-72B, use 1000x1000.

- Local code execution is risky: Only enable --enable_local_env in secure, controlled settings.
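The resolution constraint makes more sense with the coordinate arithmetic in view. The sketch below assumes the grounding model reports points in its own width-by-height coordinate space, which then has to be mapped onto the physical screen; `to_screen` is a hypothetical helper written for this illustration, not a function from Agent-S.

```python
from typing import Tuple


def to_screen(
    x: float, y: float,
    ground_w: int, ground_h: int,
    screen_w: int, screen_h: int,
) -> Tuple[int, int]:
    """Map a point from the grounding model's space to screen pixels."""
    sx = round(x * screen_w / ground_w)
    sy = round(y * screen_h / ground_h)
    # Clamp so a point on the far edge still lands inside the screen.
    return min(max(sx, 0), screen_w - 1), min(max(sy, 0), screen_h - 1)


# A model that answers in a 1000x1000 space, driving a 1920x1080 screen.
centre = to_screen(500, 500, 1000, 1000, 1920, 1080)

# Same model output, but wrongly interpreted as already being in screen space.
mismatched = to_screen(500, 500, 1920, 1080, 1920, 1080)

print(centre, mismatched)
```

With the wrong width and height, the click meant for the centre of the screen lands well up and to the left of it, which is the kind of failure a mismatched grounding resolution produces.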

Summary

Agent-S redefines what’s possible for AI-driven computer automation. By combining precise GUI grounding, hierarchical planning, and a flexible generalist-specialist architecture, it overcomes the core limitations that have held back previous agents. With state-of-the-art results on OSWorld, WindowsAgentArena, and AndroidWorld, plus an open-source, developer-friendly design, Agent-S is a strong foundation for building real-world, human-like computer use agents.

Whether you’re automating repetitive tasks, testing software at scale, or researching agentic AI, Agent-S gives you the tools to act—not just think—like a human on a real computer.