AgentCPM-GUI is an open-source, on-device large language model (LLM) agent designed to understand smartphone screenshots and autonomously perform user-specified tasks in both English and Chinese mobile applications. Built on the MiniCPM-V architecture with 8 billion parameters, it runs directly on the device without requiring cloud connectivity, which makes it well suited to privacy-sensitive, low-latency, or offline mobile automation.

Unlike many GUI agents that focus exclusively on English interfaces, AgentCPM-GUI is the first open-source agent explicitly fine-tuned for Chinese apps, supporting popular platforms like Amap, Dianping, Bilibili, and Xiaohongshu. This bilingual capability addresses a critical gap in the open-source ecosystem, where non-English mobile automation tools are scarce.

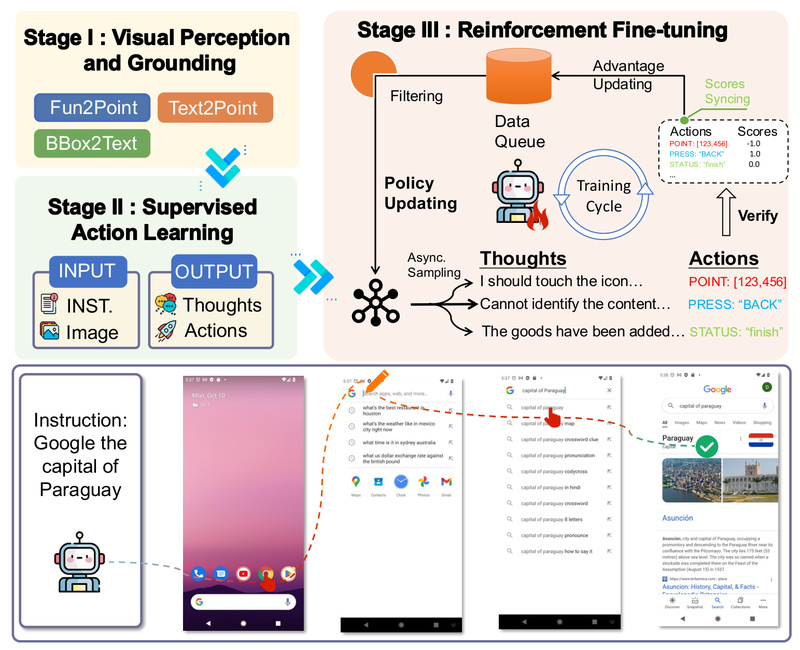

The system combines high-fidelity visual grounding, structured action output, and reinforcement fine-tuning (RFT) to deliver robust performance across diverse and complex mobile UIs. It achieves state-of-the-art results on five public benchmarks and on CAGUI, a newly introduced Chinese GUI benchmark.

Key Strengths That Solve Real Problems

High-Quality GUI Grounding Through Bilingual Pre-Training

AgentCPM-GUI is pre-trained on a large-scale dataset of Android screenshots spanning both English and Chinese applications. This grounding-aware pre-training enables the model to accurately locate and interpret common GUI elements—such as buttons, input fields, labels, and icons—even in visually dense or language-specific layouts. This directly tackles the “noisy and semantically shallow” data problem that plagues many imitation-only training pipelines.

Native Support for Chinese Mobile Apps

Most existing open-source GUI agents assume English-centric interfaces. AgentCPM-GUI breaks this barrier by including high-quality supervised fine-tuning (SFT) trajectories from over 30 Chinese apps. This makes it uniquely suited for developers targeting the Chinese mobile ecosystem—a rapidly growing market with distinct UI/UX patterns and linguistic nuances.

Reinforcement Fine-Tuning for Smarter Decision-Making

To move beyond rote imitation, AgentCPM-GUI employs GRPO-based reinforcement fine-tuning. This enables the agent to “think before acting,” generating a reasoning trace (the `thought` field) before outputting a concrete action. As a result, it handles multi-step or ambiguous tasks more reliably than purely supervised models, especially in out-of-distribution scenarios.
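GRPO (Group Relative Policy Optimization) samples a group of responses for the same prompt and scores each one against the group's own statistics rather than a learned value function. The sketch below shows only that advantage step, assuming a scalar reward per sampled action (for example, 1 when the action matches the reference and 0 otherwise). It is not the project's training code; the function name, the reward design, and the `eps` constant are illustrative assumptions.

```python
from statistics import fmean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each reward against the mean and std of its sampling group.

    In GRPO, several candidate responses are sampled for one prompt
    (here: one screenshot plus instruction), and each response's advantage
    is its reward relative to the group, so no separate critic is needed.
    """
    if not rewards:
        return []
    mean = fmean(rewards)
    std = pstdev(rewards)  # population std over the group
    # eps avoids division by zero when every sample got the same reward
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Hypothetical rewards for four sampled actions on one GUI step:
    # two matched the reference action, two did not.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

When every sample in a group receives the same reward, all advantages are zero and that group contributes no learning signal, which is why reward granularity and group size matter in this kind of setup.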

Compact Action Space for On-Device Efficiency

Every action is encoded in a minimal JSON format with an average length of just 9.7 tokens. The action space includes only seven primitives: Click, Long Press, Swipe, Press key, Type text, Wait, and STATUS. This design reduces token generation overhead and accelerates inference—critical for real-time performance on resource-constrained mobile devices.
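Because each action is a small JSON object, an executor can decide which primitive to perform just by checking which keys are present. The sketch below assumes hypothetical key names (`PRESS`, `TYPE`, `STATUS`, `to`, `duration`) alongside `POINT`; only `thought` and `POINT` appear in the example output in this article, so verify the other keys against the official schema in the repository before relying on them.

```python
import json


# Hypothetical key layout: only "thought" and "POINT" are confirmed by the
# example output; the other keys are assumptions to check against the
# official AgentCPM-GUI action schema.
def classify_action(action: dict) -> str:
    """Map a decoded action object to one of the seven primitives."""
    if "STATUS" in action:
        return "STATUS"
    if "TYPE" in action:
        return "Type text"
    if "PRESS" in action:
        return "Press key"
    if "POINT" in action:
        if "to" in action:
            return "Swipe"  # start point plus a direction or end point
        if "duration" in action:
            return "Long Press"  # touch held for a duration
        return "Click"
    if "duration" in action:
        return "Wait"  # a duration with no touch point means "pause"
    raise ValueError(f"unrecognized action: {action!r}")


if __name__ == "__main__":
    raw = '{"thought":"Open the member page","POINT":[729,69]}'
    print(classify_action(json.loads(raw)))  # Click
```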

Ideal Use Cases for Practitioners

AgentCPM-GUI excels in several practical contexts:

- Mobile App Testing Automation: Automate end-to-end user flows across bilingual apps without maintaining brittle script-based test suites.

- On-Device Assistive Technologies: Build accessibility tools that help users navigate apps via voice or high-level intent, all processed locally for privacy.

- Multilingual Research Platforms: Enable reproducible research on GUI agents in non-English contexts, thanks to the open-sourced CAGUI benchmark and training data.

- Edge AI Deployments: Deploy intelligent automation in environments with limited or no internet connectivity, leveraging the model’s on-device inference capability.

Because it operates purely on screenshots (no accessibility API required), AgentCPM-GUI is also compatible with emulators, real devices, and recorded screen sessions—offering flexibility in deployment.

How to Get Started Quickly

Setting up AgentCPM-GUI is straightforward for developers familiar with Hugging Face Transformers or vLLM:


Install Dependencies

Clone the repository and create a Python 3.11 environment with the provided `requirements.txt`.

Download the Model

Obtain the model checkpoint from Hugging Face and place it in the `model/AgentCPM-GUI` directory.

Prepare Inputs

Provide a natural language instruction (e.g., a Chinese instruction meaning “Please tap the ‘Member’ button on the screen”) and a screenshot. The image should be resized so its longer side is at most 1120 pixels to balance quality and efficiency.
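The resizing rule can be computed before touching any image library. The helper below is a sketch; its name and rounding choice are our own. It scales a screenshot down so the longer side is at most 1120 pixels while preserving the aspect ratio, and it leaves images that already fit untouched.

```python
def target_size(width: int, height: int, max_side: int = 1120) -> tuple[int, int]:
    """Return (width, height) scaled so the longer side is at most max_side.

    Aspect ratio is preserved; images already within the limit are returned
    unchanged (the rule only shrinks, it never upscales).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    longer = max(width, height)
    if longer <= max_side:
        return width, height
    # Multiply before dividing so the longer side comes out exactly max_side.
    new_w = max(1, round(width * max_side / longer))
    new_h = max(1, round(height * max_side / longer))
    return new_w, new_h


if __name__ == "__main__":
    print(target_size(1080, 2400))  # (504, 1120)
```

With Pillow installed, the result can be applied as `img.resize(target_size(*img.size), Image.Resampling.LANCZOS)`; `Image.Resampling` requires Pillow 9.1 or newer.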

Run Inference

Using either the Hugging Face Transformers or vLLM interface, pass the instruction and image to the model along with a system prompt that enforces the required JSON action schema. The model returns a compact JSON object such as `{"thought":"...","POINT":[729,69]}`. Coordinates are normalized to a 0–1000 scale relative to the image dimensions, enabling resolution-agnostic execution.
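Model output is worth treating as untrusted text before acting on it. The sketch below, with a hypothetical function name, decodes the JSON object, tolerates surrounding text by slicing from the first `{` to the last `}`, and checks that any `POINT` lies on the 0–1000 grid. The brace-slicing heuristic is an assumption about what malformed outputs might look like, not documented model behavior.

```python
import json


def parse_action(raw: str) -> dict:
    """Decode one action object from raw model text and validate POINT."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    # json.JSONDecodeError is a subclass of ValueError
    action = json.loads(raw[start : end + 1])
    if not isinstance(action, dict):
        raise ValueError("action must be a JSON object")
    point = action.get("POINT")
    if point is not None:
        # Expect exactly two numbers on the 0-1000 normalized scale.
        if (
            not isinstance(point, list)
            or len(point) != 2
            or not all(isinstance(v, (int, float)) and 0 <= v <= 1000 for v in point)
        ):
            raise ValueError(f"POINT malformed or outside 0-1000: {point!r}")
    return action


if __name__ == "__main__":
    out = 'Action: {"thought":"Tap the member button","POINT":[729,69]}'
    print(parse_action(out)["POINT"])  # [729, 69]
```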


Map Output to Device Actions

Convert normalized coordinates back to absolute screen positions using the original image width and height, then simulate the corresponding touch or input event.
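The conversion is a linear rescale: absolute x = x / 1000 × width, and likewise for y with the height. The sketch below applies it and, as one possible executor, builds an `adb shell input tap` command for an Android device or emulator. Using adb is our assumption, since the article does not prescribe an execution backend, and the function names are hypothetical. The command is only constructed, not run, so it can be inspected or handed to `subprocess.run`.

```python
def to_absolute(point: list[int], width: int, height: int) -> tuple[int, int]:
    """Convert a 0-1000 normalized (x, y) pair to pixel coordinates."""
    x, y = point
    # Clamp to the last valid pixel so a value of 1000 stays on screen.
    abs_x = min(width - 1, round(x / 1000 * width))
    abs_y = min(height - 1, round(y / 1000 * height))
    return abs_x, abs_y


def tap_command(point: list[int], width: int, height: int) -> list[str]:
    """Build an adb command that taps the given normalized point."""
    abs_x, abs_y = to_absolute(point, width, height)
    return ["adb", "shell", "input", "tap", str(abs_x), str(abs_y)]


if __name__ == "__main__":
    # 1080x2400 is a hypothetical screen size; use the real screenshot size.
    print(tap_command([729, 69], 1080, 2400))
    # ['adb', 'shell', 'input', 'tap', '787', '166']
```

Other primitives map onto the same tool in a similar way (for example, `adb shell input swipe x1 y1 x2 y2 duration_ms` for swipes and long presses, or `adb shell input keyevent KEYCODE_BACK` for key presses), but how the model encodes those actions should be checked against the official schema.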

Limitations and Considerations

While AgentCPM-GUI offers strong capabilities, users should be aware of its current constraints:

- Visual-Only Perception: The agent relies solely on pixel input. It cannot access underlying UI metadata (e.g., element IDs or accessibility trees), which may reduce robustness when widgets look alike but behave differently.

- Preprocessing Required: Screenshots must be properly resized and formatted. Real-world deployment may require additional image normalization or error handling.

- Fixed Action Primitives: The agent cannot invent new interaction types beyond the seven predefined actions. Complex gestures (e.g., pinch-to-zoom) are unsupported.

- Layout Sensitivity: Performance may degrade on highly dynamic or animated interfaces where widget positions shift rapidly between frames.

These limitations are common among vision-based GUI agents, but the project’s open-source nature allows practitioners to adapt or extend the pipeline for specialized needs.

Summary

AgentCPM-GUI stands out as a practical, efficient, and multilingual on-device GUI agent that bridges the gap between research and real-world mobile automation. By combining bilingual grounding, reinforcement-enhanced reasoning, and a lightweight action format, it delivers high accuracy and low latency—particularly in the under-served Chinese app ecosystem. With all code, models, and evaluation data publicly released, it lowers the barrier for developers, researchers, and product teams to explore, deploy, and innovate with intelligent mobile agents.