Organizations and researchers increasingly need intelligent systems that do more than respond to commands: systems that plan, collaborate, remember, and learn from experience. Building such autonomous agents has traditionally required deep expertise in large language models (LLMs), complex orchestration logic, and custom infrastructure. Agents is an open-source framework designed to make the development of autonomous language agents accessible to both practitioners and researchers. With its modular structure and the symbolic learning capability introduced in version 2.0, Agents lowers the barrier to entry while still supporting sophisticated agent behaviors.

Why Agents Matters: Solving Real-World Automation Challenges

Modern workflows—from customer service to scientific research—demand automation that understands nuance, adapts over time, and coordinates across systems. Traditional scripted bots fall short because they lack reasoning, memory, or the ability to use external tools dynamically. Agents directly addresses these pain points by offering a unified framework where language-powered agents can:

- Plan multi-step actions

- Remember past interactions via built-in memory

- Use tools like APIs, databases, or calculators

- Communicate with other agents or humans

- Self-improve through experience (in Agents 2.0)

This eliminates the need for developers to stitch together disparate components or reinvent agent logic from scratch. For technical decision-makers, that means faster prototyping, lower development costs, and quicker iteration cycles.

Key Features That Set Agents Apart

Agents isn’t just another LLM wrapper—it’s a purpose-built environment for autonomous agent development, engineered with both usability and extensibility in mind.

Planning, Memory, and Tool Usage Out of the Box

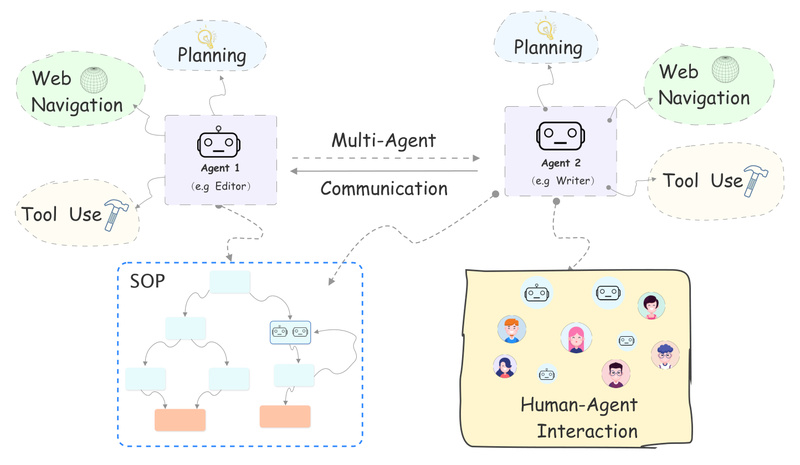

The framework natively supports core agent capabilities: decomposing complex goals into subtasks (planning), retaining and retrieving relevant context (memory), and invoking external functions (tool usage). These aren’t add-ons—they’re integrated into the agent’s execution pipeline.
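
To make the three capabilities concrete, here is a minimal, framework-independent sketch of one agent loop. Every name in it (`MiniAgent`, `plan`, `calculator`) is a hypothetical illustration and not part of the Agents API, and the plan is hard-coded where a real agent would ask an LLM to decompose the goal.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical names throughout; this is not the Agents API.
Tool = Callable[[str], str]


@dataclass
class MiniAgent:
    tools: Dict[str, Tool]
    memory: List[str] = field(default_factory=list)

    def plan(self, goal: str) -> List[Tuple[str, str]]:
        # Planning: a real agent would ask an LLM to decompose the goal.
        # The plan is hard-coded here so the example runs offline.
        return [("calculator", "2 + 3"), ("echo", goal)]

    def run(self, goal: str) -> List[str]:
        results = []
        for tool_name, tool_input in self.plan(goal):
            # Tool usage: look up the tool by name and invoke it.
            output = self.tools[tool_name](tool_input)
            # Memory: record what was done so later steps can retrieve it.
            self.memory.append(f"{tool_name}({tool_input!r}) -> {output}")
            results.append(output)
        return results


def calculator(expr: str) -> str:
    # Only handles "a + b"; deliberately avoids eval().
    left, right = expr.split("+")
    return str(int(left) + int(right))


agent = MiniAgent(tools={"calculator": calculator, "echo": lambda s: s})
outputs = agent.run("summarize ticket")
```

After the run, `agent.memory` holds one entry per executed step, which is the kind of context a later planning call could draw on.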

Multi-Agent Communication

Agents can be configured to work in teams. Whether simulating a customer support squad or modeling collaborative research agents, the framework handles message passing, role assignment, and synchronized execution.
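
As a rough illustration of message passing between roles, the sketch below routes messages through a queue until one is addressed to a recipient with no registered handler (here, the human user). The `Message` type, the role functions, and `run_team` are assumptions made for this example, not the framework's own classes.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List, Optional


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


# Hypothetical: each role maps an incoming message to an optional reply.
Role = Callable[[Message], Optional[Message]]


def triage(msg: Message) -> Optional[Message]:
    return Message("triage", "billing", f"billing issue: {msg.content}")


def billing(msg: Message) -> Optional[Message]:
    return Message("billing", "user", f"resolved ({msg.content})")


def run_team(roles: Dict[str, Role], first: Message, max_steps: int = 10) -> List[Message]:
    queue: Deque[Message] = deque([first])
    transcript: List[Message] = []
    while queue and len(transcript) < max_steps:
        msg = queue.popleft()
        transcript.append(msg)
        handler = roles.get(msg.recipient)
        if handler is None:
            continue  # addressed to a human or an unknown role; nothing to route
        reply = handler(msg)
        if reply is not None:
            queue.append(reply)
    return transcript


log = run_team(
    {"triage": triage, "billing": billing},
    Message("user", "triage", "charged twice"),
)
```

The `max_steps` cap is a simple guard against two roles replying to each other indefinitely.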

Fine-Grained Symbolic Control

Unlike black-box agent systems, Agents exposes every component—from prompts to tool selections—as symbolic, editable elements. This transparency enables precise debugging and customization, even for non-specialists.
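
One way to picture "symbolic, editable elements" is to treat a node's prompt and tool list as plain data that can be copied, edited, and diffed. The `NodeConfig` structure below is a hypothetical stand-in for illustration; the framework's actual configuration schema may look different.

```python
from dataclasses import dataclass, fields, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class NodeConfig:
    # Hypothetical schema, not the Agents configuration format.
    name: str
    prompt: str
    tools: Tuple[str, ...]


def diff(a: NodeConfig, b: NodeConfig) -> Dict[str, tuple]:
    # Report every field whose value differs between two configs.
    return {
        f.name: (getattr(a, f.name), getattr(b, f.name))
        for f in fields(a)
        if getattr(a, f.name) != getattr(b, f.name)
    }


original = NodeConfig(
    name="refund_handler",
    prompt="Answer the customer politely.",
    tools=("lookup_order",),
)

# Editing produces a new config; the original stays available for comparison.
edited = replace(
    original,
    prompt=original.prompt + " Always confirm the order ID first.",
    tools=original.tools + ("issue_refund",),
)

changed = diff(original, edited)
```

Because each change is an explicit difference between two values, it can be reviewed or reverted like any other edit to data.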

User-Friendly for Rapid Prototyping

You don’t need to write hundreds of lines of code to get started. With high-level APIs and pre-built templates, you can define, test, and deploy a functional agent with minimal coding—ideal for product teams, educators, or solo developers.

Modular and Research-Friendly Architecture

For researchers, Agents’ clean separation of components (planner, memory, executor, etc.) makes it easy to swap in new algorithms, test novel coordination strategies, or extend the framework for domain-specific tasks.
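
The benefit of separated components can be sketched with a small interface: any object that satisfies it can be swapped in without touching the rest of the pipeline. The `Memory` protocol and both implementations below are assumptions for illustration, not interfaces defined by Agents.

```python
from typing import List, Protocol


class Memory(Protocol):
    # Hypothetical interface; any class with these two methods qualifies.
    def add(self, item: str) -> None: ...
    def recall(self) -> List[str]: ...


class FullMemory:
    """Keeps every item ever added."""

    def __init__(self) -> None:
        self.items: List[str] = []

    def add(self, item: str) -> None:
        self.items.append(item)

    def recall(self) -> List[str]:
        return list(self.items)


class WindowMemory:
    """Keeps only the most recent `size` items."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.items: List[str] = []

    def add(self, item: str) -> None:
        self.items.append(item)
        self.items = self.items[-self.size:]

    def recall(self) -> List[str]:
        return list(self.items)


def run_episode(memory: Memory, events: List[str]) -> List[str]:
    # The caller depends only on the interface, so implementations are interchangeable.
    for event in events:
        memory.add(event)
    return memory.recall()


full = run_episode(FullMemory(), ["a", "b", "c"])
windowed = run_episode(WindowMemory(2), ["a", "b", "c"])
```

Comparing `full` and `windowed` on the same events is the kind of controlled experiment a modular design makes cheap.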

From Execution to Self-Improvement: The Power of Symbolic Learning in Agents 2.0

The most exciting leap in Agents 2.0 is symbolic learning—a paradigm that lets agents learn from their own experiences, much like neural networks learn from data, but using language instead of gradients.

Here’s how it works:

- Forward Pass: The agent executes a task, and every decision—prompts used, tools called, outputs generated—is recorded as a “trajectory.”

- Language Loss: A prompt-based evaluator assesses the final outcome, producing a textual “loss” that describes what went wrong.

- Backpropagation in Language: This loss is propagated backward through the trajectory, generating “language gradients”—natural language reflections on how each step could improve.

- Symbolic Update: Using another set of prompts, the agent refines its internal prompts, tool selections, and even workflow structure based on those reflections.

The result? Agents that self-evolve without manual reprogramming. A support agent that initially fails to resolve a billing inquiry can analyze its missteps and adjust its strategy for next time. This capability is especially powerful in multi-agent settings, where entire teams can co-adapt their coordination protocols.
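
The four steps described above can be sketched as a small loop. This is a simplified illustration under stated assumptions, not the Agents 2.0 implementation: the function names, the prompt wording, and the `stub_llm` stand-in (which returns numbered placeholder replies so the code runs offline) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical: any function mapping a prompt to text


@dataclass
class Step:
    node: str
    prompt: str
    output: str


def forward(prompts: Dict[str, str], task: str, llm: LLM) -> List[Step]:
    """Forward pass: run each node in order and record the trajectory."""
    trajectory: List[Step] = []
    context = task
    for node, prompt in prompts.items():
        output = llm(f"{prompt}\nInput: {context}")
        trajectory.append(Step(node, prompt, output))
        context = output
    return trajectory


def language_loss(trajectory: List[Step], task: str, llm: LLM) -> str:
    """Language loss: a textual critique of the final outcome."""
    final = trajectory[-1].output
    return llm(f"Task: {task}\nResult: {final}\nDescribe what went wrong.")


def backward(trajectory: List[Step], loss: str, llm: LLM) -> Dict[str, str]:
    """Backpropagation in language: walk the trajectory in reverse,
    turning downstream feedback into a reflection for each node."""
    gradients: Dict[str, str] = {}
    feedback = loss
    for step in reversed(trajectory):
        feedback = llm(
            f"Node: {step.node}\nPrompt: {step.prompt}\nOutput: {step.output}\n"
            f"Downstream feedback: {feedback}\nHow should this node improve?"
        )
        gradients[step.node] = feedback
    return gradients


def update(prompts: Dict[str, str], gradients: Dict[str, str], llm: LLM) -> Dict[str, str]:
    """Symbolic update: rewrite each prompt using its reflection."""
    return {
        node: llm(f"Rewrite the prompt.\nPrompt: {prompt}\nFeedback: {gradients[node]}")
        for node, prompt in prompts.items()
    }


calls: List[str] = []


def stub_llm(text: str) -> str:
    # Deterministic stand-in for a real model call.
    calls.append(text)
    return f"reply-{len(calls)}"


prompts = {"classify": "Classify the request.", "respond": "Draft a reply."}
trajectory = forward(prompts, "billing inquiry", stub_llm)
loss = language_loss(trajectory, "billing inquiry", stub_llm)
gradients = backward(trajectory, loss, stub_llm)
new_prompts = update(prompts, gradients, stub_llm)
```

This sketch updates prompts only; the text above notes that the framework can also revise tool selections and workflow structure. Replacing `stub_llm` with a real model client would be the first step toward trying the loop on an actual task.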

Practical Use Cases: Where and How Agents Delivers Value

Agents shines in scenarios that require adaptive, language-driven automation:

- Task Automation: Deploy agents that handle multi-turn workflows (e.g., scheduling meetings, processing refund requests) and improve over time via symbolic learning.

- Collaborative Simulations: Model team dynamics with multiple specialized agents—like a product manager, engineer, and tester—working together to debug a feature.

- Research Prototyping: Test hypotheses about agent architectures, memory mechanisms, or communication protocols with minimal boilerplate.

- Education & Demonstration: Teach concepts of autonomy, planning, and LLM limitations through interactive, modifiable agent examples.

The framework does not replace human oversight, but it reduces the effort needed to build agents that plan, use tools, and adapt within structured environments.

Getting Started Is Easy—Here’s How

Agents can be installed with pip. Choose one of two methods:

Install directly from GitHub:

pip install git+https://github.com/aiwaves-cn/agents@master

Or clone for local development:

git clone -b master https://github.com/aiwaves-cn/agents
cd agents
pip install -e .

Once installed, you can define agents using simple configuration files or Python scripts. The framework handles the heavy lifting—orchestrating steps, managing memory, and invoking tools—while letting you focus on defining goals and evaluation criteria.

Limitations and Considerations Before Adoption

While powerful, Agents isn’t a magic bullet. Keep these considerations in mind:

- LLM Dependency: Agent performance hinges on the underlying language model. Costs, latency, and reliability are tied to your LLM provider.

- Prompt-Based Learning: Symbolic learning relies on the quality of reflection and update prompts. It may not generalize as robustly as gradient-based neural training.

- Tool and Environment Design: Real-world deployment requires careful design of available tools, safety constraints, and evaluation metrics. Agents provides the framework—but you define the boundaries.

These are trade-offs rather than flaws. For teams ready to invest in thoughtful agent design, Agents combines accessibility with depth.
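
As one example of defining boundaries, the sketch below wraps tool calls in an allowlist and a call budget. `ToolPolicy` and `ToolPolicyError` are hypothetical names for illustration; Agents may offer its own mechanisms, and a production policy would likely need more than these two checks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet


class ToolPolicyError(Exception):
    """Raised when a tool call violates the policy."""


@dataclass
class ToolPolicy:
    # Hypothetical guard, not part of the Agents API.
    allowed: FrozenSet[str]
    max_calls: int
    calls_made: int = 0

    def call(self, tools: Dict[str, Callable[[str], str]], name: str, arg: str) -> str:
        if name not in self.allowed:
            raise ToolPolicyError(f"tool {name!r} is not on the allowlist")
        if self.calls_made >= self.max_calls:
            raise ToolPolicyError("call budget exhausted")
        self.calls_made += 1
        return tools[name](arg)


tools = {
    "lookup_order": lambda order_id: f"order {order_id}: paid",
    "delete_account": lambda user: f"deleted {user}",
}
policy = ToolPolicy(allowed=frozenset({"lookup_order"}), max_calls=2)

first = policy.call(tools, "lookup_order", "A17")

try:
    policy.call(tools, "delete_account", "alice")
    blocked = False
except ToolPolicyError:
    blocked = True
```

Keeping the policy outside the agent means the same agent definition can run under stricter limits in production than in testing.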

Summary

Agents bridges the gap between cutting-edge autonomous agent research and practical, real-world application. By combining intuitive design with advanced features like symbolic learning and multi-agent coordination, it empowers developers, researchers, and technical leaders to build, test, and evolve language agents faster than ever. If you’re evaluating frameworks for LLM-powered automation, Agents deserves a serious look.