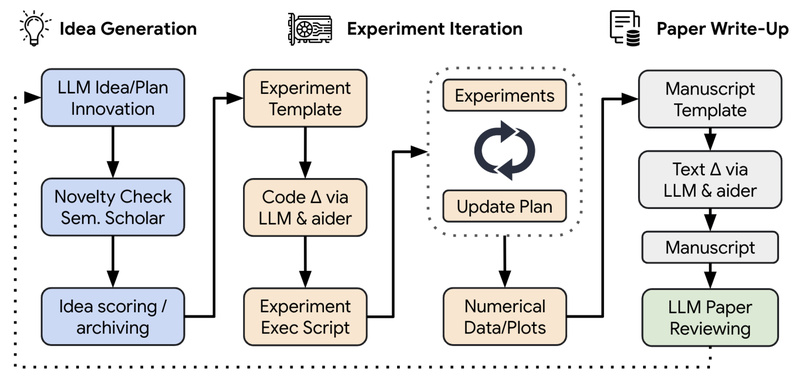

Imagine a system that doesn’t just assist scientists—but acts as one. It generates novel research hypotheses, writes executable code, runs experiments, visualizes results, drafts a full scientific paper in LaTeX, and even simulates peer review to evaluate its own work. That’s precisely what AI-Scientist delivers: the first open-source framework for fully automated, open-ended scientific discovery in machine learning.

Built by Sakana AI and introduced in the paper “The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery,” this system leverages frontier large language models (LLMs) to close the entire research loop—without human intervention. While existing AI tools help with isolated tasks like brainstorming or coding, AI-Scientist is designed to operate autonomously across the full scientific workflow, making it a powerful asset for technical teams seeking rapid exploration, low-cost prototyping, or scalable research ideation.

Why AI-Scientist Matters for Technical Decision-Makers

In fast-moving fields like machine learning, the bottleneck isn’t just compute or data; it’s the time and expertise required to iterate through ideas. AI-Scientist addresses this by turning high-level research directions into executed, documented experiments at a fraction of the usual cost.

Each full research cycle—including code execution, result analysis, paper writing, and simulated review—costs under $15 when using models like Claude 3.5 Sonnet. This affordability democratizes access to high-quality research prototyping, enabling small teams, startups, or individual practitioners to explore ideas that would otherwise require weeks of engineering and writing effort.
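To sanity-check that budget against your own provider, the arithmetic is simple: token counts times per-million-token prices. The helpers below are hypothetical and not part of AI-Scientist. The token counts and prices in the demo are placeholders, so substitute your provider’s current rates and the usage your runs actually log.

```python
import math


def run_cost_usd(input_tokens: int, output_tokens: int,
                 usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Estimate the API cost of one research run from its token usage.

    Prices are per million tokens, the unit most LLM providers quote.
    """
    # Multiply before dividing so round-number inputs stay exact.
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


def ideas_within_budget(budget_usd: float, cost_per_idea_usd: float) -> int:
    """Number of complete idea-to-paper runs that fit in a fixed budget."""
    if cost_per_idea_usd <= 0:
        raise ValueError("cost per idea must be positive")
    return math.floor(budget_usd / cost_per_idea_usd)


if __name__ == "__main__":
    # Placeholder usage: 1M input and 200k output tokens at $3 / $15 per million.
    per_run = run_cost_usd(1_000_000, 200_000, 3.0, 15.0)
    print(f"estimated cost per run: ${per_run:.2f}")
    print("runs in a $100 budget at $15 each:", ideas_within_budget(100.0, 15.0))
```

Treating the per-idea cap as a fixed number, rather than a live token count, is the simpler way to plan a batch of runs; the token-based estimate is useful once you have real usage logs.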

Moreover, because the system is open-source and built on modular templates, it can be adapted to new domains beyond the three included in the original study: diffusion modeling, transformer-based language modeling, and grokking dynamics.

Key Capabilities That Enable True Autonomy

AI-Scientist stands out not just for what it does, but for how much of the research workflow it covers. Here are its core features:

1. End-to-End Automated Paper Generation

Starting from a research template and its seed ideas, the system produces a full scientific paper in PDF format, with abstract, methodology, results, figures, and references. The output follows the structure of submissions to top-tier ML conferences.
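Conceptually, each run is a chain of stages in which one stage’s output feeds the next. The sketch below shows only that data flow. The stage functions are hypothetical stubs standing in for the LLM calls, code execution, and LaTeX compilation the real `launch_scientist.py` performs, and none of the names here are the project’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Idea:
    name: str
    description: str


@dataclass
class RunRecord:
    idea: Idea
    results: dict = field(default_factory=dict)
    paper_path: Optional[str] = None
    decision: Optional[str] = None


def run_pipeline(
    generate_ideas: Callable[[int], list[Idea]],
    run_experiments: Callable[[Idea], dict],
    write_paper: Callable[[Idea, dict], str],
    review_paper: Callable[[str], str],
    num_ideas: int,
) -> list[RunRecord]:
    """Chain idea -> experiments -> paper -> review for each generated idea."""
    records = []
    for idea in generate_ideas(num_ideas):
        record = RunRecord(idea=idea)
        try:
            record.results = run_experiments(idea)
        except RuntimeError:
            # Not every idea yields working experiments: keep the record, skip the writeup.
            records.append(record)
            continue
        record.paper_path = write_paper(idea, record.results)
        record.decision = review_paper(record.paper_path)
        records.append(record)
    return records


if __name__ == "__main__":
    # Hypothetical stubs so the flow can be run end to end without any LLM.
    def stub_ideas(n: int) -> list[Idea]:
        return [Idea(f"idea_{i}", "stub idea") for i in range(n)]

    for rec in run_pipeline(stub_ideas, lambda idea: {"val_loss": 1.0},
                            lambda idea, res: f"{idea.name}.pdf",
                            lambda path: "Reject", num_ideas=2):
        print(rec.idea.name, rec.paper_path, rec.decision)
```

Recording failed ideas instead of raising mirrors the point made later in this article: not every idea leads to a complete paper.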

2. Safe(ish) Execution of LLM-Written Code

The framework executes code generated by the LLM within user-controlled environments. While this introduces real security considerations (discussed below), it’s essential for validating ideas empirically—moving beyond speculation to actual experimental evidence.
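One common way to keep that execution bounded is to run each generated experiment in a child process with a hard timeout and captured output, so a hung or crashing script cannot stall the whole loop. The sketch below assumes the template convention shown in the setup steps (`python experiment.py --out_dir run_N`); the helper name and the two-hour default are illustrative choices, and a timeout on its own is not a security boundary.

```python
import subprocess
import sys
from pathlib import Path


def run_experiment(template_dir: str, run_idx: int,
                   timeout_s: float = 7200.0) -> tuple[bool, str]:
    """Run experiment.py for one run and return (succeeded, combined output).

    The timeout limits wall-clock time only; it does not restrict file,
    network, or process access.
    """
    cmd = [sys.executable, "experiment.py", "--out_dir", f"run_{run_idx}"]
    try:
        proc = subprocess.run(
            cmd,
            cwd=Path(template_dir),
            capture_output=True,
            text=True,
            timeout=timeout_s,  # child is killed if it exceeds this
        )
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout_s} s"
    # Return stdout and stderr so the caller (or an LLM) can inspect failures.
    return proc.returncode == 0, proc.stdout + proc.stderr
```

Returning the error text rather than raising lets an outer loop feed the traceback back to the model for another attempt.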

3. Built-In Automated Peer Review

AI-Scientist includes an automated reviewer module, modeled on NeurIPS review guidelines, that scores generated papers on criteria like novelty, soundness, and clarity. Benchmarked against human reviewers’ decisions on real conference submissions, it reaches near-human accuracy and can classify papers as “Accept” or “Reject” relative to conference standards.
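A reviewer verdict can be thought of as an aggregation over several independent LLM reviews. The sketch below is a simplified stand-in, not the project’s reviewer: it averages hypothetical 1-to-10 “overall” scores from an ensemble of reviews and applies a fixed accept threshold. The score scales and the threshold of 6 are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Review:
    novelty: int    # hypothetical 1-4 sub-score
    soundness: int  # hypothetical 1-4 sub-score
    clarity: int    # hypothetical 1-4 sub-score
    overall: int    # hypothetical 1-10 overall score


def criterion_means(reviews: list[Review]) -> dict[str, float]:
    """Average each criterion across the review ensemble."""
    names = ("novelty", "soundness", "clarity", "overall")
    return {n: mean(getattr(r, n) for r in reviews) for n in names}


def aggregate_decision(reviews: list[Review],
                       accept_threshold: float = 6.0) -> tuple[float, str]:
    """Map the ensemble's mean overall score to Accept/Reject."""
    if not reviews:
        raise ValueError("need at least one review")
    avg = mean(r.overall for r in reviews)
    return avg, ("Accept" if avg >= accept_threshold else "Reject")
```

Averaging several reviews before thresholding reduces the variance of any single LLM review, at the cost of extra API calls per paper.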

4. Multi-Model and Multi-API Support

The system supports a wide range of frontier models:

- OpenAI: GPT-4o, GPT-4o-mini, o1 series

- Anthropic: Claude 3.5 Sonnet (via API, Bedrock, or Vertex AI)

- DeepSeek, Gemini, and OpenRouter models

This flexibility allows teams to choose cost-performance tradeoffs or comply with internal model policies.
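In practice the provider is usually inferred from the model string. The helper below is a hypothetical illustration of that routing and of checking credentials before a run. The prefixes (including `bedrock/` and `vertex_ai/`) and the environment-variable names beyond `OPENAI_API_KEY` and `ANTHROPIC_API_KEY` are assumptions, so check the repository README for the exact identifiers and variables each backend expects.

```python
import os
from collections.abc import Mapping
from typing import Optional

# Hypothetical routing table: (model-name prefix, provider, required env vars).
# Cloud backends authenticate through their own credential chains, so no key is listed.
_ROUTES = [
    ("bedrock/", "bedrock", ()),        # assumed: AWS credentials
    ("vertex_ai/", "vertex_ai", ()),    # assumed: Google Cloud credentials
    ("openrouter/", "openrouter", ("OPENROUTER_API_KEY",)),
    ("claude-", "anthropic", ("ANTHROPIC_API_KEY",)),
    ("gpt-", "openai", ("OPENAI_API_KEY",)),
    ("o1", "openai", ("OPENAI_API_KEY",)),
    ("deepseek", "deepseek", ("DEEPSEEK_API_KEY",)),
    ("gemini", "gemini", ("GEMINI_API_KEY",)),
]


def route_model(model: str) -> tuple[str, tuple[str, ...]]:
    """Return (provider, required env vars) for a model name."""
    for prefix, provider, env_vars in _ROUTES:
        if model.startswith(prefix):
            return provider, env_vars
    raise ValueError(f"unknown model name: {model!r}")


def missing_credentials(model: str,
                        env: Optional[Mapping[str, str]] = None) -> list[str]:
    """List required environment variables that are unset or empty."""
    env = os.environ if env is None else env
    _, env_vars = route_model(model)
    return [v for v in env_vars if not env.get(v)]
```

Failing fast on a missing key is cheaper than discovering it after a baseline run or halfway through idea generation.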

5. Extensible Template Architecture

Research domains are encapsulated in templates, each containing:

- experiment.py: Runs the core scientific test
- plot.py: Generates visual results
- prompt.json and seed_ideas.json: Guide idea generation
- latex/template.tex: Formats the final paper
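As an illustration of the two JSON files, the sketch below scaffolds them for a new template. The field names (`system` and `task_description` in prompt.json; `Name`, `Title`, `Experiment`, and the `Interestingness`/`Feasibility`/`Novelty` scores in each seed idea) reflect our reading of the shipped templates and should be treated as assumptions; copying an existing template’s files is the authoritative route. The function name and the example idea are hypothetical.

```python
import json
from pathlib import Path

# Assumed seed-idea fields, based on the shipped templates.
REQUIRED_IDEA_FIELDS = {"Name", "Title", "Experiment",
                        "Interestingness", "Feasibility", "Novelty"}


def scaffold_prompt_files(template_dir: str, system: str, task_description: str,
                          seed_ideas: list[dict]) -> None:
    """Write prompt.json and seed_ideas.json into a (new) template directory."""
    for idea in seed_ideas:
        missing = REQUIRED_IDEA_FIELDS - set(idea)
        if missing:
            raise ValueError(f"seed idea {idea.get('Name')!r} is missing {sorted(missing)}")
    root = Path(template_dir)
    root.mkdir(parents=True, exist_ok=True)
    prompt = {"system": system, "task_description": task_description}
    (root / "prompt.json").write_text(json.dumps(prompt, indent=4))
    (root / "seed_ideas.json").write_text(json.dumps(seed_ideas, indent=4))


if __name__ == "__main__":
    scaffold_prompt_files(
        "templates/my_template",  # hypothetical template name
        system="You are an ambitious ML researcher.",
        task_description="Improve the baseline in experiment.py.",
        seed_ideas=[{
            "Name": "lr_warmup",
            "Title": "Does Learning-Rate Warmup Help Small Models?",
            "Experiment": "Add linear warmup to the training loop and compare losses.",
            "Interestingness": 4, "Feasibility": 9, "Novelty": 3,
        }],
    )
```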

The project ships with three validated templates (NanoGPT, 2D Diffusion, Grokking), and the community has already contributed extensions for MobileNetV3, earthquake prediction, quantum chemistry, and more.
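A new template’s experiment.py only has to honor a small contract: accept an output directory and leave machine-readable metrics there for the plotting and writeup stages. The skeleton below assumes that contract is an `--out_dir` flag (as in the setup commands) plus a `final_info.json` file of mean metrics. The file name and JSON layout are assumptions based on the shipped templates, and `train_and_evaluate` and `my_dataset` are hypothetical placeholders for your actual experiment.

```python
import argparse
import json
import os
import random


def train_and_evaluate(seed: int) -> dict:
    """Hypothetical placeholder: replace with the real experiment."""
    rng = random.Random(seed)
    return {"val_loss": 1.0 + rng.random() * 0.1}


def main(argv=None) -> dict:
    parser = argparse.ArgumentParser()
    parser.add_argument("--out_dir", type=str, default="run_0")
    args = parser.parse_args(argv)
    os.makedirs(args.out_dir, exist_ok=True)

    # Average over a few seeds so later runs can be compared with the baseline.
    runs = [train_and_evaluate(seed) for seed in range(3)]
    means = {k: sum(r[k] for r in runs) / len(runs) for k in runs[0]}
    final_info = {"my_dataset": {"means": means}}

    with open(os.path.join(args.out_dir, "final_info.json"), "w") as f:
        json.dump(final_info, f, indent=2)
    return final_info


if __name__ == "__main__":
    main()
```

Keeping metrics in one small JSON file makes it easy for a plotting script, and for the LLM writing the paper, to compare each run against `run_0`.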

Practical Use Cases for Engineers and Researchers

AI-Scientist isn’t meant to replace human scientists—but to amplify their capacity. Here’s how technical leaders can leverage it:

- Rapid Baseline Exploration: Test dozens of small modifications to a diffusion model or transformer architecture in parallel, identifying promising directions before committing engineering resources.

- Internal R&D Kickstarts: Generate draft papers as starting points for team discussion, reducing the “blank page” problem in early-stage research.

- Training and Education: Simulate the full research process for students or new hires to learn scientific writing, experimental design, and critical evaluation.

- Conference-Quality Benchmarking: Run the automated reviewer on a human-written draft to get a quick conference-style assessment, and compare its scores with those of AI-generated papers.
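Running many variants at once mostly comes down to pinning each job to a device. The sketch below is a generic pattern, not AI-Scientist’s own scheduler: it assigns hypothetical idea names to GPUs round-robin and builds a per-job environment using `CUDA_VISIBLE_DEVICES`, the standard CUDA variable that restricts which GPUs a process can see.

```python
import os


def assign_round_robin(ideas: list[str], gpu_ids: list[int]) -> dict[int, list[str]]:
    """Spread ideas evenly over the available GPUs."""
    if not gpu_ids:
        raise ValueError("need at least one GPU id")
    plan: dict[int, list[str]] = {g: [] for g in gpu_ids}
    for i, idea in enumerate(ideas):
        plan[gpu_ids[i % len(gpu_ids)]].append(idea)
    return plan


def env_for_gpu(gpu_id: int) -> dict[str, str]:
    """Copy of the current environment that exposes a single GPU to a child process."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    return env
```

Each GPU’s list can then be processed sequentially by one worker, for example by passing `env=env_for_gpu(g)` to `subprocess.run`; one worker per GPU avoids two experiments competing for the same device memory.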

Crucially, AI-Scientist works best in code-executable domains—areas where hypotheses can be tested through runnable experiments. It is not suited for purely theoretical, philosophical, or non-computational research.

Getting Started Without the Headache

Despite its sophistication, AI-Scientist is designed for straightforward adoption:

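- Set up the environment (the `apt-get` step assumes Debian or Ubuntu):

  ```bash
  conda create -n ai_scientist python=3.11
  conda activate ai_scientist
  sudo apt-get install texlive-full  # for LaTeX/PDF generation
  pip install -r requirements.txt
  ```

- Configure the API key for your chosen LLM provider as an environment variable (e.g., `OPENAI_API_KEY`, `ANTHROPIC_API_KEY`).

- Initialize a template by preparing its data and running a baseline. For example, for the NanoGPT template:

  ```bash
  python data/enwik8/prepare.py
  python data/shakespeare_char/prepare.py
  python data/text8/prepare.py
  cd templates/nanoGPT
  python experiment.py --out_dir run_0  # establishes baseline
  ```

- Launch autonomous research from the repository root. The baseline must exist in the same template you pass to `--experiment`; the command below uses the lighter `nanoGPT_lite` template, whose baseline is created the same way inside `templates/nanoGPT_lite`:

  ```bash
  python launch_scientist.py --model "claude-3-5-sonnet-20241022" --experiment nanoGPT_lite --num-ideas 2
  ```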
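Because generated runs are compared against the baseline, it is worth confirming that the baseline exists in the template you pass to `--experiment` before spending API credits. The check below is a hypothetical preflight script. It assumes the baseline lives in `templates/<experiment>/run_0/` and wrote a `final_info.json`, which matches the shipped templates as far as we know but is not a documented interface.

```python
import json
from pathlib import Path


def check_baseline(repo_root: str, experiment: str) -> list[str]:
    """Return a list of problems; an empty list means the baseline looks ready."""
    template = Path(repo_root) / "templates" / experiment
    if not template.is_dir():
        return [f"template directory not found: {template}"]
    info = template / "run_0" / "final_info.json"
    if not info.is_file():
        return [f"no baseline results at {info}; run experiment.py --out_dir run_0 first"]
    try:
        json.loads(info.read_text())
    except json.JSONDecodeError as exc:
        return [f"baseline results are not valid JSON: {exc}"]
    return []


if __name__ == "__main__":
    for problem in check_baseline(".", "nanoGPT_lite"):
        print("preflight:", problem)
```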

You don’t need deep expertise in the template’s subfield to get a first run going. Beyond running the setup scripts, your main responsibilities are sandboxing the generated code and critically reading the resulting papers and reviews.

Important Limitations and Safety Notes

AI-Scientist is powerful, but it comes with clear boundaries and responsibilities:

- Model Capability Requirement: Only frontier models (roughly GPT-4 level or better) produce reliable results. Weaker models often generate non-functional code or incoherent papers.

- Variable Success Rates: Not every idea leads to a complete paper. Success depends on model choice, template complexity, and prompt quality. In the authors’ experiments, Claude 3.5 Sonnet was the most reliable model.

- Security Risk from Code Execution: The system runs LLM-generated code, which may include unsafe operations, external network calls, or unintended subprocesses. Always run in a containerized environment with restricted permissions and no internet access unless absolutely necessary.

- Domain Constraint: Research questions must be expressible as executable code with quantifiable outcomes. The system cannot handle open-ended theoretical proofs or qualitative social science inquiries.

The project maintainers explicitly warn: “This codebase will execute LLM-written code. […] Use at your own discretion.” Responsible deployment is non-negotiable.
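One way to follow that advice is to run each experiment inside a throwaway container. The helper below only assembles a `docker run` command line. The image name is a placeholder for an image you would build yourself, and the limits (no network, all capabilities dropped, memory and process caps) are illustrative defaults, not the project’s recommended configuration.

```python
from pathlib import Path


def sandboxed_command(
    work_dir: str,
    image: str = "ai-scientist-sandbox:latest",  # placeholder image name
    command: tuple[str, ...] = ("python", "experiment.py", "--out_dir", "run_1"),
    use_gpus: bool = False,
    memory: str = "16g",
    pids_limit: int = 512,
) -> list[str]:
    """Assemble a `docker run` argv that isolates one experiment run."""
    host_dir = str(Path(work_dir).resolve())
    argv = [
        "docker", "run", "--rm",
        "--network", "none",                    # no internet access
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation
        "--memory", memory,
        "--pids-limit", str(pids_limit),        # bound runaway process spawning
        "-v", f"{host_dir}:/work",              # only the template dir is visible
        "-w", "/work",
    ]
    if use_gpus:
        argv += ["--gpus", "all"]               # requires the NVIDIA container toolkit
    return argv + [image, *command]
```

Passing the returned list to `subprocess.run` (with a timeout) combines container isolation with the wall-clock limit a plain subprocess call provides; keeping `--network none` as the default means internet access has to be an explicit, deliberate exception.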

Summary

AI-Scientist is a significant step toward autonomous scientific discovery in machine learning. By integrating idea generation, experimentation, writing, and evaluation into a single pipeline, it gives technical teams a tool for scalable, low-cost research exploration. It is not a silver bullet and requires careful attention to safety and scope, but it lets small teams prototype, iterate on, and validate ideas at a pace that would otherwise require many more researchers.

For those working in experimental ML subfields, adopting AI-Scientist could mean turning weeks of effort into hours—and unlocking a new mode of AI-augmented innovation.