Imagine deploying an autonomous robot in a warehouse that shifts from bright daylight to dim artificial lighting—or a drone navigating from open skies into shadowed urban canyons. Traditional visual SLAM (Simultaneous Localization and Mapping) systems often fail under such illumination shifts because they rely heavily on texture and intensity-consistent features. AirSLAM directly addresses this critical limitation.

Accepted to IEEE Transactions on Robotics (TRO) in 2025, AirSLAM is an open-source, high-performance visual SLAM system engineered for resilience against both short-term (e.g., glare, shadows) and long-term (e.g., day-night cycles) lighting variations. It achieves this by fusing deep learning with classical geometric optimization in a novel point-line framework, delivering not only robustness but also real-time efficiency—up to 73 Hz on a standard PC and 40 Hz on embedded platforms like NVIDIA Jetson.

Built as a dual-mode system supporting both V-SLAM (vision-only) and VI-SLAM (visual-inertial), AirSLAM is designed from the ground up for real-world robotics applications where reliability under dynamic lighting is non-negotiable.

Why Illumination Robustness Matters in Visual SLAM

Most visual SLAM systems, whether built on hand-crafted features such as ORB and SIFT or on some learned features, implicitly assume that a scene looks roughly the same from one frame to the next. In practice, this assumption breaks down frequently: sunlight moves across a room, headlights flash at night, or indoor lighting flickers. Under such changes, detectors miss features they found a moment earlier and matchers lose correspondences, leading to tracking failure or map drift.
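To make the intensity-consistency assumption concrete, the sketch below compares two patch similarity measures under a simulated brightness change. It is a toy illustration, not AirSLAM code: the function names `ssd` and `zncc`, the patch values, and the gain and offset are all made up for this example. It shows only why raw intensity comparisons break under lighting shifts; learned features such as those in AirSLAM obtain their robustness through training rather than through this normalization.

```python
import math

def ssd(a, b):
    """Sum of squared differences between two equally sized intensity patches."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def zncc(a, b):
    """Zero-mean normalized cross-correlation; unchanged by positive gain and offset."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom > 0 else 0.0

# Hypothetical 6-pixel patch and the same patch under brighter lighting
# (illustrative gain 1.8 and offset 25).
patch = [10.0, 40.0, 80.0, 20.0, 60.0, 30.0]
brighter = [1.8 * v + 25.0 for v in patch]

print("SSD, same lighting:     ", ssd(patch, patch))
print("SSD, changed lighting:  ", ssd(patch, brighter))
print("ZNCC, changed lighting: ", round(zncc(patch, brighter), 6))
```

The raw SSD score jumps from zero to a large value even though the underlying structure is identical, while the normalized score still reports a near-perfect match.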

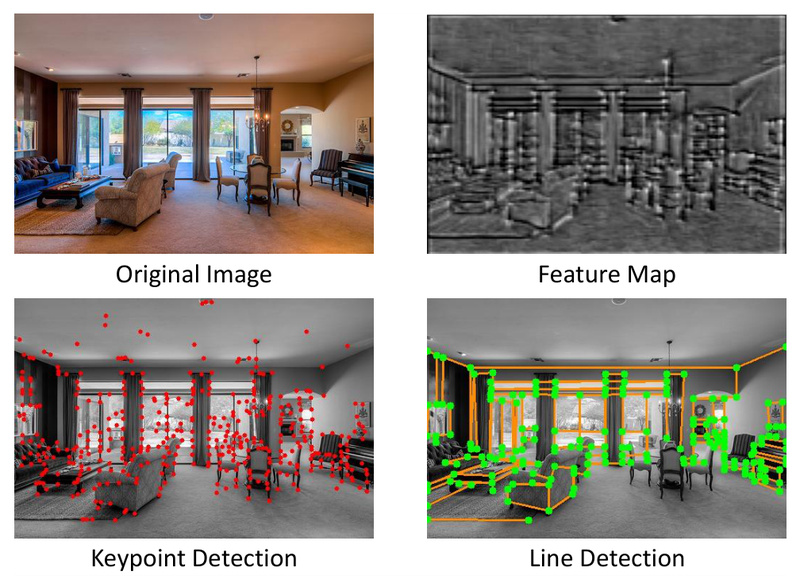

AirSLAM tackles this by moving beyond points alone. Natural and built environments contain abundant structural lines—edges of walls, door frames, road markings—that remain geometrically stable even when appearance changes drastically. By jointly leveraging keypoints and lines, AirSLAM maintains tracking continuity where point-only methods falter.

Core Innovations Behind AirSLAM’s Performance

Hybrid Architecture: Best of Deep Learning and Classical Optimization

AirSLAM adopts a hybrid architecture: a lightweight convolutional neural network (CNN) handles front-end perception, while traditional backend optimizers (Ceres, g2o) manage bundle adjustment and pose graph refinement. This combination ensures both robustness (from deep features) and accuracy/efficiency (from well-tuned geometric solvers).

Unified Point-Line Feature Extraction with PLNet

At the heart of AirSLAM is PLNet, a single CNN that simultaneously detects keypoints and structural lines in a unified representation. This avoids the inefficiency of running separate detectors and enables coupled matching and triangulation, improving consistency between point and line constraints during optimization.

The network is trained for robustness to illumination changes, which makes it more reliable under lighting conditions that confuse classical detectors such as ORB or FAST.
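The idea of combining point and line constraints in one optimization can be illustrated with reprojection residuals. The sketch below uses a formulation common in point-line SLAM: a point contributes a 2D pixel error, and a 3D line segment contributes the signed distances of its two projected endpoints to the observed image line. This is an assumption for illustration, not necessarily the exact residual AirSLAM uses; the pinhole intrinsics, function names, and sample coordinates are hypothetical.

```python
import math

def project(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3D point (camera frame) to pixel coordinates."""
    x, y, z = point_cam
    return (fx * x / z + cx, fy * y / z + cy)

def line_through(p, q):
    """Homogeneous image line (a, b, c) through pixels p and q, scaled so a^2 + b^2 = 1."""
    a = p[1] - q[1]
    b = q[0] - p[0]
    c = p[0] * q[1] - q[0] * p[1]
    norm = math.hypot(a, b)
    return (a / norm, b / norm, c / norm)

def point_residual(observed_px, point_cam, intrinsics):
    """Pixel error between an observed keypoint and the projected 3D point."""
    u, v = project(point_cam, *intrinsics)
    return (u - observed_px[0], v - observed_px[1])

def line_residual(observed_line, endpoints_cam, intrinsics):
    """Signed pixel distances of both projected 3D endpoints to the observed line."""
    a, b, c = observed_line
    projected = [project(p, *intrinsics) for p in endpoints_cam]
    return tuple(a * u + b * v + c for u, v in projected)

# Hypothetical intrinsics (fx, fy, cx, cy) and observations.
K = (500.0, 500.0, 320.0, 240.0)
observed = line_through((100.0, 240.0), (500.0, 240.0))  # horizontal line v = 240
segment = [(-1.0, 0.0, 5.0), (1.0, 0.05, 5.0)]           # 3D endpoints, camera frame

print(point_residual((220.0, 240.0), segment[0], K))
print(line_residual(observed, segment, K))
```

Because only the distance to the line is penalized, the endpoints of a detected segment may slide along the line between frames without adding error, which is one reason line constraints tolerate unstable endpoint detection.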

Real-Time Deployment via TensorRT and C++

To bridge the gap between research and deployment, AirSLAM converts PLNet into an NVIDIA TensorRT engine and integrates it into a C++ ROS pipeline. This enables high-speed inference without Python overhead, which is critical for resource-constrained robots. The system reaches 40 Hz on embedded Jetson platforms, which supports its suitability for fielded systems.
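The reported frame rates translate directly into a per-frame time budget, which is often the more useful number when sizing a pipeline. The short calculation below uses only the two rates quoted in this article (up to 73 Hz on a PC, 40 Hz on Jetson); the helper name `frame_budget_ms` is made up for this example.

```python
def frame_budget_ms(rate_hz):
    """Time available per frame, in milliseconds, at a given processing rate."""
    return 1000.0 / rate_hz

for label, hz in (("PC", 73.0), ("embedded Jetson", 40.0)):
    print(f"{label}: {hz:.0f} Hz -> {frame_budget_ms(hz):.1f} ms per frame")
# PC: 73 Hz -> 13.7 ms per frame
# embedded Jetson: 40 Hz -> 25.0 ms per frame
```

Feature extraction, matching, and pose optimization together must fit inside that budget for the system to keep up with the camera.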

Lightweight Relocalization Using Reusable Maps

When tracking is lost (e.g., due to motion blur or occlusion), AirSLAM doesn’t require map reconstruction. Its lightweight relocalization pipeline matches a new query frame against a pre-built map using keypoints, lines, and a structural graph, enabling fast recovery with minimal computation.
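As a rough illustration of the keypoint part of this step, the sketch below matches query descriptors against map descriptors using mutual nearest neighbors with a distance threshold. This is a generic technique chosen for illustration, not AirSLAM's actual matcher, and it ignores lines and the structural graph entirely. The 3-dimensional descriptors and the threshold are hypothetical toy values.

```python
import math

def l2(a, b):
    """Euclidean distance between two descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest(query, candidates):
    """Index of the candidate descriptor closest to the query."""
    return min(range(len(candidates)), key=lambda i: l2(query, candidates[i]))

def mutual_matches(query_desc, map_desc, max_dist):
    """Keep (query_idx, map_idx) pairs that are each other's nearest neighbor
    and lie within max_dist."""
    matches = []
    for qi, q in enumerate(query_desc):
        mi = nearest(q, map_desc)
        if nearest(map_desc[mi], query_desc) == qi and l2(q, map_desc[mi]) <= max_dist:
            matches.append((qi, mi))
    return matches

# Toy descriptors: two query features resemble map features, one is ambiguous.
map_desc = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
query_desc = [(0.1, 0.9, 0.0), (0.9, 0.1, 0.0), (0.5, 0.5, 0.5)]

print(mutual_matches(query_desc, map_desc, max_dist=0.3))
# [(0, 1), (1, 0)]
```

In a full relocalization pipeline, 2D-3D correspondences like these would typically feed a robust pose solver, with the ambiguous feature rejected before it can corrupt the estimate.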

Ideal Use Cases

AirSLAM excels in scenarios where lighting is unpredictable but geometric structure is rich:

- Autonomous drones flying from outdoor to indoor environments

- Mobile robots in warehouses with windows or mixed lighting

- Inspection systems in tunnels, factories, or underground facilities

- Delivery robots operating across daytime and nighttime cycles

Its dual-mode support means you can deploy it with just a monocular or stereo camera (V-SLAM) or enhance it with an IMU for faster initialization and better motion handling (VI-SLAM).

Getting Started: Practical Integration

AirSLAM follows the widely used Autonomous Systems Lab (ASL) dataset format, making it compatible with standard benchmarks like EuRoC. The workflow is ROS-based and divided into three stages:

- Mapping: Build a point-line map from synchronized image (and optional IMU) sequences.

- Map Optimization: Refine the map offline using global bundle adjustment.

- Relocalization: Reuse the optimized map to localize new monocular sequences without prior poses.
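For readers preparing their own data, the ASL layout mentioned above stores each camera's image list in a `data.csv` file with a `#timestamp [ns],filename` header, as in the EuRoC sequences. The sketch below parses such an index from an in-memory sample. It is a standalone illustration rather than AirSLAM's loader; the function name `read_image_index` and the two sample rows are illustrative.

```python
import csv
import io

# Illustrative excerpt in the style of an ASL/EuRoC mav0/cam0/data.csv file.
SAMPLE_CAM_CSV = """#timestamp [ns],filename
1403636579763555584,1403636579763555584.png
1403636579813555456,1403636579813555456.png
"""

def read_image_index(stream):
    """Return a list of (timestamp_ns, filename) pairs, skipping '#' header rows."""
    entries = []
    for row in csv.reader(stream):
        if not row or row[0].startswith("#"):
            continue
        entries.append((int(row[0]), row[1].strip()))
    return entries

index = read_image_index(io.StringIO(SAMPLE_CAM_CSV))
frame_gap_s = (index[1][0] - index[0][0]) / 1e9

print(index[0])
print(f"frame gap: {frame_gap_s:.3f} s")
```

Timestamps are integer nanoseconds, so keeping them as integers until the last moment avoids floating-point rounding when synchronizing images with IMU samples.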

For rapid setup, AirSLAM provides a Docker image with all dependencies (OpenCV, Eigen, Ceres, TensorRT 8.6.1.6, CUDA 12.1, ROS Noetic). This eliminates environment conflicts and accelerates onboarding.

While Jetson deployment is supported, it requires careful handling of ROS and TensorRT compatibility—detailed guidance is available in the repository’s documentation.

Current Limitations and Practical Notes

Despite its strengths, AirSLAM has a few constraints to consider:

- ROS Noetic dependency: Not compatible with ROS 2 or older ROS versions.

- Hardware specificity: Requires NVIDIA GPUs with CUDA 12.1 and TensorRT 8.6.1.6.

- Calibration format: Cameras must be calibrated in ASL format; custom datasets need proper configuration files.

- Matcher limitation: Currently uses a custom matcher; integration with SuperGlue is planned but not yet implemented.

- Relocalization requirement: Relocalization needs a map that was built and optimized beforehand; it cannot be used without a prior mapping pass.

These are typical trade-offs for a system optimized for both performance and robustness, and the active development roadmap suggests ongoing improvements.

Summary

AirSLAM stands out as a practical, illumination-robust, and real-time visual SLAM solution for robotics practitioners facing real-world lighting challenges. By unifying point and line features through a deployable deep learning pipeline and classical optimization backend, it delivers reliability where many alternatives fail. Its open-source nature, Docker support, and TRO-level validation make it a compelling choice for researchers and engineers building vision-based autonomous systems that must operate beyond controlled lab conditions.

If your project involves navigation in dynamic lighting—and you need a system that won’t break when the sun goes down or the lights flicker—AirSLAM is worth serious consideration.