aiXcoder-7B is a 7-billion-parameter open-source large language model (LLM) purpose-built for code processing. Unlike larger models that trade inference speed for marginal accuracy gains, aiXcoder-7B delivers state-of-the-art code completion performance—outperforming not only peers of similar size but also significantly larger models such as CodeLlama-34B and StarCoder2-15B—while remaining lightweight enough for real-time use in integrated development environments (IDEs).

This balance of accuracy, efficiency, and open accessibility makes aiXcoder-7B uniquely valuable for engineering teams, researchers, and tool developers who need reliable, low-latency code intelligence without the computational overhead of 15B+ parameter models. Whether you’re building an AI-powered coding assistant, enhancing an internal developer tool, or researching efficient code LLMs, aiXcoder-7B offers a compelling foundation.

Key Technical Innovations Behind Its Performance

aiXcoder-7B’s superior performance stems from three carefully engineered components that directly address real-world coding challenges:

Structured Fill-In-the-Middle (SFIM) Training

Traditional Fill-In-the-Middle (FIM) tasks treat code as plain text, often splitting it at arbitrary points. aiXcoder-7B introduces Structured FIM (SFIM), which leverages abstract syntax trees (ASTs) to identify complete syntactic nodes—such as entire if-blocks, loops, or function bodies—and constructs FIM tasks around them. This ensures the model learns to generate code that is not only contextually relevant but also structurally sound, reducing syntax errors and hallucinated fragments.

For example, when the masked span is a loop body, the training target is the complete body rather than an arbitrary slice of it. The model therefore learns to produce completions that fit the surrounding control-flow structure, which makes them more reliable in real editing scenarios.
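To make the idea concrete, here is a minimal sketch of how a structure-aware FIM sample could be built for Python source using the standard ast module. It is not aiXcoder's actual pipeline: the sentinel strings, the function names, and the choice of the first matching node are all assumptions made for illustration.

```python
import ast

# Hypothetical sentinel strings; the model's real special tokens may differ.
PREFIX_TOK, SUFFIX_TOK, MIDDLE_TOK = "<PRE>", "<SUF>", "<MID>"

SAMPLE = (
    "def total(xs):\n"
    "    s = 0\n"
    "    for x in xs:\n"
    "        s += x\n"
    "    return s\n"
)


def structured_fim_split(source, node_types=(ast.If, ast.For, ast.While)):
    """Split source into (prefix, middle, suffix) around one whole AST node.

    Returns None when no node of the requested types exists.
    """
    # ast column offsets count UTF-8 bytes, so slice the encoded source.
    data = source.encode("utf-8")
    line_starts, total = [], 0
    for line in data.splitlines(keepends=True):
        line_starts.append(total)
        total += len(line)

    # ast.walk visits nodes breadth-first; take the first match.
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, node_types):
            begin = line_starts[node.lineno - 1] + node.col_offset
            end = line_starts[node.end_lineno - 1] + node.end_col_offset
            return (
                data[:begin].decode("utf-8"),
                data[begin:end].decode("utf-8"),
                data[end:].decode("utf-8"),
            )
    return None


def make_fim_example(source):
    """Build a prefix-suffix-middle training pair from one syntactic node."""
    parts = structured_fim_split(source)
    if parts is None:
        return None
    prefix, middle, suffix = parts
    prompt = f"{PREFIX_TOK}{prefix}{SUFFIX_TOK}{suffix}{MIDDLE_TOK}"
    return {"prompt": prompt, "target": middle}
```

Calling make_fim_example(SAMPLE) masks the entire for loop, so the target is a syntactically complete statement instead of a fragment cut at a random character position.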

Cross-File Contextual Awareness via Smart Data Sampling

Real software projects span multiple interdependent files. To capture this, aiXcoder-7B’s training pipeline incorporates cross-file data sampling strategies that preserve relationships between files (e.g., via call graphs, file path similarity, and TF-IDF clustering). This enables the model to better understand project-level context—such as which utility functions are imported or how classes interact—resulting in more accurate completions that respect the broader codebase architecture.
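One of the signals mentioned above, TF-IDF similarity, can be sketched in a few lines of plain Python. This is an illustrative approximation only: the tokenizer, the smoothed IDF formula, and the sample file contents are assumptions, and the real pipeline likely combines several signals in ways not described here.

```python
import math
import re
from collections import Counter

# Hypothetical mini-project used only for illustration.
FILES = {
    "db/load.py": "def load_user(db): return db.query(user)",
    "db/save.py": "def save_user(db, user): db.insert(user)",
    "tools/sqrt.py": "import math\nprint(math.sqrt(2))",
}


def tokenize(code):
    """Extract identifier-like tokens from source text."""
    return re.findall(r"[A-Za-z_]\w*", code)


def tfidf_vectors(files):
    """Map each path to a sparse TF-IDF vector (token -> weight)."""
    counts = {path: Counter(tokenize(text)) for path, text in files.items()}
    doc_freq = Counter()
    for counter in counts.values():
        doc_freq.update(counter.keys())
    n_docs = len(files)
    vectors = {}
    for path, counter in counts.items():
        total = sum(counter.values()) or 1
        vectors[path] = {
            token: (count / total)
            * (math.log((1 + n_docs) / (1 + doc_freq[token])) + 1.0)
            for token, count in counter.items()
        }
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * v.get(token, 0.0) for token, weight in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(
        sum(w * w for w in v.values())
    )
    return dot / norm if norm else 0.0


def most_similar(path, vectors):
    """Return the other file whose vector is closest to the given file."""
    others = [p for p in vectors if p != path]
    return max(others, key=lambda p: cosine(vectors[path], vectors[p]))
```

With the sample project, most_similar("db/load.py", tfidf_vectors(FILES)) picks db/save.py, because the two files share identifiers such as db and user. Placing such neighbors next to each other in a training sequence is one plausible way to expose the model to project-level context.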

Massive, High-Quality, and Rigorously Filtered Training Data

The model is trained on 1.2 trillion unique tokens drawn from a curated mix of 100+ programming languages and natural language sources (including Stack Overflow, technical blogs, and documentation). Crucially, the dataset undergoes a 7-stage cleaning pipeline:

- License-compliant project filtering

- Deduplication via MinHash

- Removal of auto-generated and commented-out code

- Syntax validation across top 50 languages

- Static analysis to eliminate 163 bug patterns and 197 vulnerability types

- Sensitive information scrubbing

- Project-level quality scoring (based on stars, commits, test coverage)

This ensures aiXcoder-7B learns from clean, secure, and representative code—minimizing noise and maximizing signal.
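The MinHash deduplication step can be illustrated with a small standard-library sketch. The shingle size, number of hash functions, and similarity threshold below are assumed values chosen for the example, not the settings used to build the training set.

```python
import hashlib


def shingles(text, k=5):
    """Return the set of k-token shingles of a whitespace-tokenized text."""
    tokens = text.split()
    if len(tokens) < k:
        return {" ".join(tokens)}
    return {" ".join(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def minhash_signature(text, num_hashes=64, k=5):
    """One minimum per seeded hash function over all shingles."""
    items = shingles(text, k)
    signature = []
    for seed in range(num_hashes):
        signature.append(
            min(
                int.from_bytes(
                    hashlib.sha1(f"{seed}:{item}".encode("utf-8")).digest()[:8],
                    "big",
                )
                for item in items
            )
        )
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching positions approximates Jaccard similarity."""
    matches = sum(1 for a, b in zip(sig_a, sig_b) if a == b)
    return matches / len(sig_a)


def deduplicate(docs, threshold=0.8):
    """Keep a document only if it is not a near-duplicate of a kept one."""
    kept, signatures = [], []
    for doc in docs:
        sig = minhash_signature(doc)
        if all(estimated_jaccard(sig, other) < threshold for other in signatures):
            kept.append(doc)
            signatures.append(sig)
    return kept
```

This version compares every new document against all kept signatures, which is quadratic. At the scale of a real corpus, MinHash is normally paired with locality-sensitive hashing so that only likely duplicates are compared.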

Ideal Use Cases for Developers and Technical Teams

aiXcoder-7B excels in practical, everyday development workflows:

- Real-Time Code Completion in IDEs: Its 7B footprint enables fast inference on a single consumer-grade GPU (or even CPU with quantization), making it ideal for embedding into VS Code or JetBrains plugins.

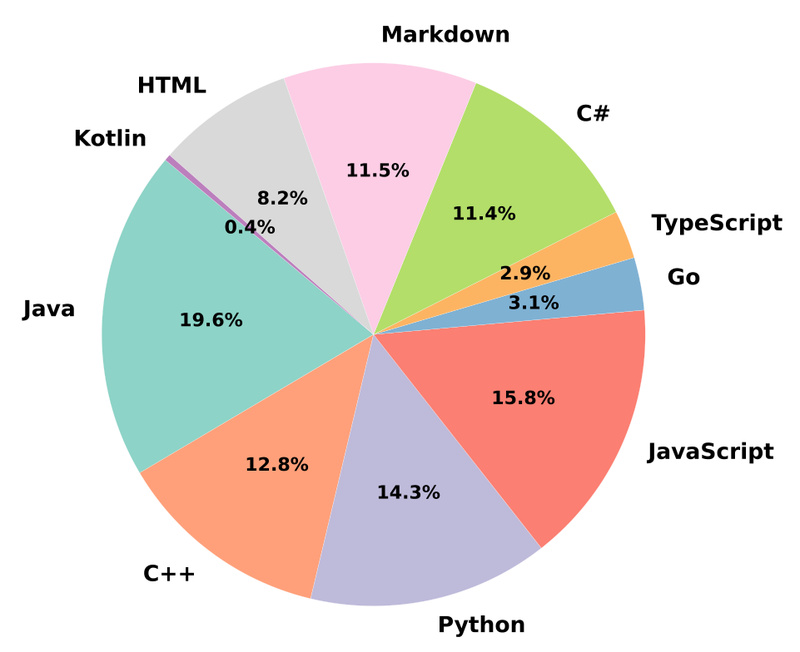

- Multilingual Code Generation: Strong performance across Python, Java, C++, JavaScript, and more allows teams with polyglot codebases to use a single model.

- Context-Aware Snippet Prediction: Whether you need a full function from a docstring, a missing loop body, or a conditional block between existing code, aiXcoder-7B leverages both prefix and suffix context via FIM for precise generation.

- Research and Tooling Foundation: As an open base model (with weights available on Hugging Face), it serves as a high-quality starting point for fine-tuning or building specialized coding agents.

Notably, the model is optimized for code completion and generation, not general-purpose chat or high-level tasks like test case synthesis or debugging—areas where instruction-tuned variants (planned for future release) will be more appropriate.

Getting Started: Practical Deployment Options

aiXcoder-7B is designed for easy integration. Here’s how to begin:

Environment Setup

Minimum requirements:

- Python 3.8+

- PyTorch 2.1+

- transformers ≥ 4.34.1 and sentencepiece ≥ 0.2.0

For best performance, install FlashAttention to accelerate attention computation on NVIDIA GPUs. Alternatively, use the provided Docker image for a reproducible environment.
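Before loading the model, it can help to confirm that the installed packages meet the minimums listed above. The following is a small sketch that uses only the standard library; the PyPI distribution names (torch, transformers, sentencepiece) and the simplified version parsing are assumptions, and pre-release suffixes are ignored.

```python
import re
import sys
from importlib import metadata

# Minimum versions taken from the requirements listed above.
MINIMUMS = {"torch": "2.1.0", "transformers": "4.34.1", "sentencepiece": "0.2.0"}


def parse_version(version):
    """Reduce a version string to a (major, minor, patch) tuple."""
    numbers = [int(n) for n in re.findall(r"\d+", version.split("+")[0])[:3]]
    return tuple(numbers + [0] * (3 - len(numbers)))


def check_environment(minimums=None):
    """Return a status string per package: ok, missing, or too old."""
    minimums = MINIMUMS if minimums is None else minimums
    report = {}
    for package, minimum in minimums.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            report[package] = "missing"
            continue
        if parse_version(installed) >= parse_version(minimum):
            report[package] = "ok"
        else:
            report[package] = f"too old ({installed} < {minimum})"
    return report


def python_is_supported():
    """Python 3.8 or newer is required."""
    return sys.version_info >= (3, 8)
```

Printing check_environment() gives a quick overview of what still needs to be installed or upgraded.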

Inference Methods

You can run aiXcoder-7B in multiple ways:

- Via Hugging Face Transformers: Load the model directly from the Hub (aiXcoder/aixcoder-7b-base) and use standard generate() calls.

- Command Line: Use the provided sess_megatron.py or sess_huggingface.py scripts for quick testing.

- Quantized Inference: With bitsandbytes, run 4-bit or 8-bit quantized versions to reduce memory usage. 4-bit inference requires under 6 GB of GPU memory, enabling deployment on modest hardware.
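As a rough illustration of the Transformers and quantized paths, the sketch below loads the model and completes a plain left-to-right prompt. The helper names load_model and complete are hypothetical, device_map="auto" assumes the accelerate package is installed, and the 4-bit branch assumes bitsandbytes and a CUDA GPU. The model-specific FIM prompt format is not shown here; consult the repository for it.

```python
MODEL_ID = "aiXcoder/aixcoder-7b-base"


def load_model(model_id=MODEL_ID, four_bit=False):
    """Load tokenizer and model; optionally quantize weights to 4 bits."""
    # Imported lazily so this file can be read without the heavy dependencies.
    import torch
    from transformers import (
        AutoModelForCausalLM,
        AutoTokenizer,
        BitsAndBytesConfig,
    )

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    kwargs = {"torch_dtype": torch.bfloat16, "device_map": "auto"}
    if four_bit:
        # Assumed quantization settings; tune them for your hardware.
        kwargs["quantization_config"] = BitsAndBytesConfig(
            load_in_4bit=True,
            bnb_4bit_compute_dtype=torch.bfloat16,
        )
    model = AutoModelForCausalLM.from_pretrained(model_id, **kwargs)
    return tokenizer, model


def complete(tokenizer, model, prompt, max_new_tokens=64):
    """Return only the newly generated text for a left-to-right prompt."""
    inputs = tokenizer(
        prompt, return_tensors="pt", return_token_type_ids=False
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example (downloads the weights on first use):
# tokenizer, model = load_model(four_bit=True)
# print(complete(tokenizer, model, "# quicksort in Python\ndef quicksort(arr):"))
```

Slicing off the prompt tokens before decoding keeps the return value limited to the completion, which is usually what an editor plugin wants to insert.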

Fine-Tuning for Domain-Specific Code

While the base model performs strongly out-of-the-box, you can adapt it to proprietary codebases using PEFT (Parameter-Efficient Fine-Tuning). The repository includes a finetune.py script that supports FIM-style training on custom datasets (e.g., internal repositories), allowing you to specialize the model without full retraining.
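For orientation, this is roughly what attaching LoRA adapters with the peft library looks like. It does not reproduce the repository's finetune.py: the hyperparameters are placeholder values, and the target module names assume LLaMA-style attention projections, which should be verified against model.named_modules() before training.

```python
# Placeholder hyperparameters, not values recommended by the aiXcoder authors.
LORA_DEFAULTS = {
    "r": 8,
    "lora_alpha": 16,
    "lora_dropout": 0.05,
    # Assumed layer names; check them against the loaded model.
    "target_modules": ["q_proj", "k_proj", "v_proj", "o_proj"],
    "task_type": "CAUSAL_LM",
}


def add_lora_adapters(model, **overrides):
    """Wrap a causal LM so that only small LoRA matrices are trained."""
    # Imported lazily so the sketch can be read without peft installed.
    from peft import LoraConfig, get_peft_model

    settings = {**LORA_DEFAULTS, **overrides}
    peft_model = get_peft_model(model, LoraConfig(**settings))
    # Reports how few parameters are trainable compared with the full model.
    peft_model.print_trainable_parameters()
    return peft_model
```

The wrapped model can then be trained on FIM-formatted samples from an internal repository while the original 7B weights stay frozen.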

Current Limitations and Considerations

To set realistic expectations:

- No Instruction Tuning: The current base model is not optimized for interactive or task-oriented prompts (e.g., “Write a unit test for this function”). It excels at completion-style tasks but may underperform on higher-level reasoning without fine-tuning.

- Commercial Use Requires Licensing: While free for academic research, commercial deployment requires a license from aiXcoder.

- Language Coverage Imbalance: Performance is strongest in major languages (Python, Java, etc.); less common languages may see reduced accuracy.

- Not a Chatbot: aiXcoder-7B is a code-focused model—not suitable as a general assistant for non-programming queries.

Summary

aiXcoder-7B shows that a 7B model can be highly competitive on code completion. By combining structured training objectives, cross-file context awareness, and 1.2 trillion tokens of filtered training data, it reports higher completion accuracy than models more than twice its size, such as StarCoder2-15B and CodeLlama-34B, while remaining efficient enough for real-time developer use.

Its open availability, flexible deployment options (including 4-bit quantization), and strong multilingual support make it an excellent choice for engineering teams building intelligent coding tools, researchers exploring efficient LLM architectures, or developers seeking a fast, accurate local code assistant. If you need high-performance code intelligence without infrastructure bloat, aiXcoder-7B deserves serious consideration.