As AI systems grow more capable across diverse data types—text, images, audio, and video—the challenge of aligning them with human intent becomes increasingly complex. Traditional alignment methods like Reinforcement Learning from Human Feedback (RLHF) have excelled in the text-only domain, but they fall short when applied to models that must understand and generate across multiple modalities. Enter Align Anything, the first open-source framework explicitly designed to align any-to-any multimodal models using human preference data spanning all major modalities. Developed by the PKU-Alignment team, Align Anything tackles the core problem of cross-modality alignment head-on by introducing a unified, modular, and scalable approach that works across vision, language, audio, video, and even action spaces.

Whether you’re fine-tuning a vision-language assistant, training a robot to follow multimodal instructions, or building a research model that generates audio from text prompts, Align Anything provides the tools, datasets, and algorithms needed to ensure your model behaves as intended—safely, reliably, and in line with human values.

Why Cross-Modality Alignment Is Hard—and Why It Matters

Most existing alignment frameworks assume a text-only world. But real-world applications rarely fit that mold. Consider a household robot that must interpret a spoken command (“find the red cup”) while processing live video feeds—or a creative assistant that generates a short video in response to a written prompt. These systems require joint understanding and coherent generation across modalities, and aligning their behavior demands feedback that reflects the nuances of each modality.

Yet until now, the community lacked three critical ingredients:

- Large-scale human preference data covering all modalities,

- Algorithms that can learn from rich, non-binary feedback across modalities, and

- A standardized evaluation framework to measure progress.

Align Anything delivers all three.

Core Capabilities That Make Align Anything Unique

Unified Language Feedback Instead of Binary Choices

Rather than relying solely on “preferred vs. rejected” pairs (binary feedback), Align Anything introduces language feedback—natural language explanations of why one output is better than another. This approach captures far richer human intent, especially in complex multimodal scenarios where a simple thumbs-up/thumbs-down is insufficient. For example, feedback might say, “The generated image matches the prompt but misplaces the object,” enabling the model to learn fine-grained corrections.

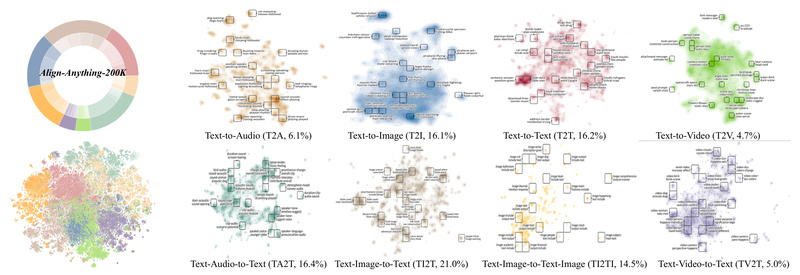

200K+ Multimodal Human Preference Annotations

The project includes Align-Anything-Instruction-100K (for text) and an expanded 200K+ all-modality human preference dataset, covering combinations like text+image, text+audio, text+video, and even vision-language-action (VLA). All data is meticulously curated and available for research use—filling a critical gap in open multimodal alignment resources.

Support for Any-to-Any Modality Alignment

Align Anything isn’t limited to “text + image → text.” It supports a full spectrum of input-output combinations:

- Text → Image / Audio / Video

- Text + Image → Text + Image

- Text + Video → Action (for robotics)

- And more

This flexibility makes it ideal for next-generation multimodal AI systems that must both perceive and act across sensory domains.

Modular Algorithms for Every Alignment Strategy

The framework includes plug-and-play implementations of:

- Supervised Fine-Tuning (SFT)

- Direct Preference Optimization (DPO)

- Proximal Policy Optimization (PPO) with optional vLLM acceleration (cutting PPO training time from ~150 to ~22 minutes)

- GRPO (as used in DeepSeek-R1)

- Rule-based RL and O1-like reasoning training

You can switch between methods without rewriting your pipeline—ideal for experimentation or production deployment.

Built-In Evaluation with eval-anything

To measure whether alignment actually improves model behavior, the team spun off eval-anything, a dedicated evaluation suite for any-to-any models. It assesses capabilities like modality synergy, instruction fidelity, and safety—ensuring your aligned model isn’t just smarter, but also more trustworthy.

Practical Use Cases Where Align Anything Excels

Training Vision-Language-Action (VLA) Agents for Robotics

Align Anything includes a VLA Trainer for embodied AI. By aligning models on tasks like “navigate to a basketball” using human feedback, it significantly improves policy safety and instruction compliance—critical for real-world deployment.

Fine-Tuning Multimodal Assistants

Need a model that generates high-quality images from prompts and explains its choices? Or one that converts lecture audio into summarized text with key visuals? Align Anything supports models like LLaVA, MiniCPM-o, Janus-Series, and Emu3, with ready-to-run scripts for SFT, DPO, and PPO across modalities.

Academic Research and Coursework

Already adopted as the official homework platform for Peking University’s Large Language Models Basics and Alignment course, Align Anything runs on both NVIDIA GPU and Huawei Ascend NPU—making it accessible in varied institutional environments.

Getting Started Is Easier Than You Think

You don’t need deep RL expertise to use Align Anything. The project provides:

- Cookbooks (in English and Chinese) for SFT and DPO training on text-image and text-text models

- Pre-configured shell scripts (e.g.,

llava_dpo.sh,vla/spoc_sft.sh) that auto-download models and datasets - Slurm cluster support for large-scale training

- Docker images for hassle-free Ascend NPU setup

- Optional vLLM integration for lightning-fast PPO rollout

Installation takes minutes:

git clone https://github.com/PKU-Alignment/align-anything.git cd align-anything conda create -n align-anything python=3.11 conda activate align-anything pip install -e .

And within hours, you can be aligning your own multimodal model.

Current Limitations and Practical Considerations

While powerful, Align Anything does come with caveats:

- Compute demands: Multimodal training (especially with video or action outputs) requires significant GPU/NPU resources.

- Hugging Face dependency: Models and datasets are fetched from Hugging Face, which may be inaccessible in some regions (though mirrors like

HF_ENDPOINT=https://hf-mirror.comhelp). - Rapid evolution: Support for video and action modalities is still expanding—check the GitHub repo for the latest compatibility matrix.

- Hardware specificity: Ascend NPU support is tested only on specific ARM-based configurations (e.g., Ascend-SNT9B); custom setups may require debugging.

Nonetheless, the project’s modular design ensures you can adopt only the components you need—without being locked into an all-or-nothing architecture.

Summary

Align Anything solves a fundamental problem in modern AI: how to align models that operate across any combination of modalities with human intent. By providing the first open, unified framework for all-modality alignment—complete with language-based feedback, 200K+ preference examples, modular algorithms, and rigorous evaluation—it empowers researchers and engineers to build safer, more capable multimodal systems. Whether you’re prototyping a new assistant, training a robot, or pushing the boundaries of cross-modal understanding, Align Anything gives you the foundation to do it right.