Graph-based machine learning has become essential across domains—from social network analysis and fraud detection to drug discovery and recommendation systems. Yet, building high-performing models for graph-structured data remains a significant challenge. Designing the right combination of feature engineering, graph neural network (GNN) architecture, hyperparameters, and ensembling strategies often demands deep expertise, extensive experimentation, and considerable time.

Enter AutoGL, the first open-source library specifically built for Automated Machine Learning (AutoML) on graphs. Developed by researchers at THUMNLab, AutoGL abstracts away the complexity of crafting graph learning pipelines by automatically selecting and optimizing every component—from input features to final predictions—tailored to your dataset and task. Whether you’re working on node classification, link prediction, or graph-level tasks, AutoGL enables you to achieve competitive results with minimal manual intervention.

Why Manual Graph Learning Pipelines Fall Short

Traditional graph learning requires practitioners to:

- Choose or design a GNN architecture (e.g., GCN, GAT, GraphSAGE)

- Engineer or select relevant node/graph features

- Tune hyperparameters like learning rate, layer depth, and hidden dimensions

- Decide on training strategies and post-processing ensembles

This process is not only time-consuming but also error-prone and heavily dependent on domain knowledge. For teams without dedicated GNN experts—or for researchers exploring new graph datasets—this bottleneck can delay progress or lead to suboptimal models.
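To make the cost of these choices concrete, the sketch below counts the configurations in a small, purely illustrative search space. The candidate values and the ten-minute training time are assumptions chosen for the arithmetic, not figures from AutoGL.

```python
from itertools import product

# Hypothetical candidate values for each manual decision listed above.
search_space = {
    "architecture": ["gcn", "gat", "graphsage"],
    "learning_rate": [1e-1, 1e-2, 1e-3, 1e-4],
    "num_layers": [2, 3, 4],
    "hidden_dim": [16, 32, 64, 128],
}

# Enumerate every combination (an exhaustive grid).
configurations = [
    dict(zip(search_space, values))
    for values in product(*search_space.values())
]

total_runs = len(configurations)   # 3 * 4 * 3 * 4 = 144
minutes_per_run = 10               # assumed cost of one training run
total_hours = total_runs * minutes_per_run / 60

print(f"{total_runs} configurations, about {total_hours:.0f} hours of training")
```

Even this small grid implies roughly a day of compute before feature engineering or ensembling is considered, which is why automated search strategies are attractive.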

AutoGL addresses this pain point with an automated, end-to-end pipeline that makes each of these decisions through search and optimization rather than manual trial and error.

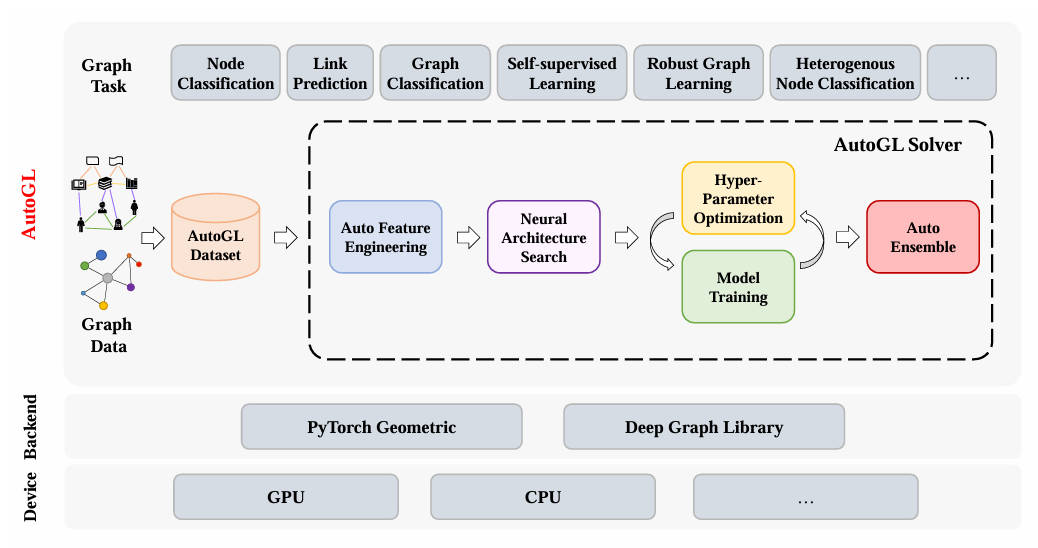

A Modular Architecture Built for Flexibility and Automation

AutoGL’s strength lies in its clean, three-layer design:

- Backend Layer: Supports both PyTorch Geometric (PyG) and Deep Graph Library (DGL), giving users flexibility in their preferred graph framework.

- AutoML Pipeline Layer: Integrates five core functional modules:

  - Auto Feature Engineering: Automatically generates or selects features using methods like Graphlets, EigenGNN, NetLSD, or GBDT-based selectors.

  - Neural Architecture Search (NAS): Explores architectures via algorithms like Random, RL, Evolution, GASSO, or AutoAttend within configurable search spaces.

  - Hyperparameter Optimization (HPO): Supports Bayesian optimization, TPE, simulated annealing, and more to fine-tune model and training parameters.

  - Model Training: Handles training with robust defaults and supports decoupled encoder-decoder designs for multi-task reuse.

  - Auto Ensemble: Combines predictions using voting or stacking to boost final performance.

- Application Layer: Provides ready-to-use solvers for standard tasks—homogeneous and heterogeneous node classification, link prediction, and graph classification.

This modular structure ensures that users can either run everything out-of-the-box or customize individual components as needed.
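As an illustration of what the ensemble stage does conceptually, here is a minimal soft-voting sketch in plain Python. It is not AutoGL's implementation: the function names and the toy probabilities are hypothetical, and AutoGL's own voting module may weight models differently.

```python
def soft_vote(model_probabilities, weights=None):
    """Average per-class probabilities across models.

    model_probabilities[m][i][c] is the probability that model m
    assigns to class c for sample i.
    """
    num_models = len(model_probabilities)
    if weights is None:
        weights = [1.0] * num_models
    total = sum(weights)
    weights = [w / total for w in weights]  # normalise so weights sum to 1

    num_samples = len(model_probabilities[0])
    num_classes = len(model_probabilities[0][0])
    combined = [[0.0] * num_classes for _ in range(num_samples)]
    for probs, weight in zip(model_probabilities, weights):
        for i, row in enumerate(probs):
            for c, p in enumerate(row):
                combined[i][c] += weight * p
    return combined


def predict_labels(combined):
    """Pick the class with the highest combined probability per sample."""
    return [max(range(len(row)), key=row.__getitem__) for row in combined]


# Toy outputs of two hypothetical models on two nodes and two classes.
gcn_probs = [[0.9, 0.1], [0.4, 0.6]]
gat_probs = [[0.6, 0.4], [0.7, 0.3]]

equal = predict_labels(soft_vote([gcn_probs, gat_probs]))
weighted = predict_labels(soft_vote([gcn_probs, gat_probs], weights=[3, 1]))
print(equal, weighted)
```

With equal weights the second node follows the more confident model; giving the first model three times the weight flips that prediction. Stacking replaces the fixed weights with a learned meta-model.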

Real-World Use Cases Where AutoGL Delivers Value

AutoGL shines in practical scenarios where speed, robustness, and performance matter:

- Social Network Analysis: Automatically classify user roles or detect communities without hand-crafting graph features.

- Recommendation Systems: Improve link prediction for user-item interaction graphs with minimal engineering.

- Fraud Detection: Rapidly deploy GNN models on transaction graphs to flag suspicious patterns.

- Molecular Property Prediction: Accelerate drug discovery by auto-optimizing GNNs on molecular graphs.

Recent updates also add support for self-supervised learning and for graph robustness (e.g., defending against adversarial attacks), extending AutoGL’s applicability to current research problems.

Getting Started Is Simple

AutoGL is designed for ease of adoption:

- Install via pip: `pip install autogl`

- Load your graph dataset (compatible with PyG or DGL formats)

- Instantiate a task-specific solver (e.g., `AutoNodeClassifier`)

- Call `solver.fit(dataset)`; AutoGL handles the rest
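The steps above can be sketched roughly as follows. This is modelled on the quick-start pattern in the project's README as best recalled; the module strings (`"deepgl"`, `"anneal"`, `"voting"`), the dataset name, and the time limit are assumptions, and argument names may differ between AutoGL versions, so check them against the documentation for your installed release.

```python
def run_quickstart(time_limit_seconds=3600):
    """Fit an AutoGL node classifier on Cora and return predicted probabilities.

    Imports are local so that defining this function does not require
    AutoGL or PyTorch to be installed.
    """
    import torch
    from autogl.datasets import build_dataset_from_name
    from autogl.solver import AutoNodeClassifier

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Load a built-in benchmark dataset (assumed name: "cora").
    dataset = build_dataset_from_name("cora")

    # Configure each pipeline stage by name; all values here are illustrative.
    solver = AutoNodeClassifier(
        feature_module="deepgl",       # auto feature engineering
        graph_models=["gcn", "gat"],   # candidate GNN architectures
        hpo_module="anneal",           # hyperparameter optimization strategy
        ensemble_module="voting",      # how trained models are combined
        device=device,
    )

    # Search and train within the given time budget.
    solver.fit(dataset, time_limit=time_limit_seconds)

    # Show how each candidate model performed, then predict on the test split.
    solver.get_leaderboard().show()
    return solver.predict_proba()
```

Calling `run_quickstart()` requires AutoGL and one of its backends to be installed, and it downloads the dataset on first use.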

Extensive tutorials cover common tasks, including handling heterogeneous graphs and leveraging NAS-Bench-Graph, a benchmark for fast architecture evaluation. For advanced users, AutoGL-light offers a streamlined version to build custom AutoML pipelines without the full framework overhead.

Limitations and Practical Considerations

While AutoGL dramatically lowers the barrier to entry, users should note:

- Python ≥3.6, PyTorch ≥1.6, and either PyG ≥1.7 or DGL ≥0.7 are required.

- Basic familiarity with graph data structures (nodes, edges, attributes) and task types is still necessary to prepare data and interpret results.

- Full customization (e.g., adding a new NAS algorithm) requires subclassing provided base classes—but the API is designed for extensibility.
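Before installing, it can help to check the version floors listed above. The following is a minimal sketch using only the standard library; it assumes the PyPI distribution names `torch`, `torch-geometric`, and `dgl`, and it needs Python 3.8 or newer for `importlib.metadata`, which already exceeds the stated Python minimum.

```python
import re
from importlib import metadata

# Minimum versions taken from the requirements listed above.
MINIMUMS = {"torch": (1, 6), "torch-geometric": (1, 7), "dgl": (0, 7)}


def parse_version(text):
    """Return the leading numeric components, e.g. '1.13.1+cu117' -> (1, 13, 1)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        return ()
    return tuple(int(part) for part in match.group(0).split("."))


def installed_version(package):
    """Return the parsed version of an installed package, or None if absent."""
    try:
        return parse_version(metadata.version(package))
    except metadata.PackageNotFoundError:
        return None


def meets_minimum(package):
    version = installed_version(package)
    return version is not None and version >= MINIMUMS[package]


def check_environment():
    """Return a list of human-readable problems; an empty list means all good."""
    problems = []
    if not meets_minimum("torch"):
        problems.append("PyTorch >= 1.6 is required")
    # Only one graph backend is needed: PyG or DGL.
    if not (meets_minimum("torch-geometric") or meets_minimum("dgl")):
        problems.append("Either PyG >= 1.7 or DGL >= 0.7 is required")
    return problems


for problem in check_environment():
    print(problem)
```

An empty result only confirms the version floors; it does not guarantee that the PyTorch build and the chosen backend are compatible with each other.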

Summary

AutoGL is a practical tool for researchers, engineers, and data scientists who need to apply graph learning quickly without months of manual architecture search and tuning. By automating feature engineering, model selection, hyperparameter optimization, and ensembling, it aims to deliver competitive performance while reducing reliance on deep GNN expertise. Whether you’re prototyping in academia or deploying in production, AutoGL shortens the path from idea to result.