Feature upsampling is a critical but often overlooked component in modern computer vision pipelines. Whether you’re building an object detector, a semantic segmentation model, or an image inpainting system, how you reconstruct high-resolution feature maps from coarse representations can dramatically impact performance. Traditional approaches—like bilinear interpolation or transposed convolutions—fall short because they either ignore contextual information or apply rigid, content-agnostic operations across all inputs.

Enter CARAFE (Content-Aware ReAssembly of FEatures), a smart, efficient, and plug-and-play upsampling operator designed specifically for dense prediction tasks. Introduced in 2019 and now integrated into the widely used MMDetection toolbox, CARAFE rethinks upsampling by making it adaptive, context-sensitive, and computationally lean.

This article explains what CARAFE is, why it outperforms conventional methods, where it delivers the most value, and how you can start using it today—without heavy engineering or model redesign.

Why Traditional Upsampling Falls Short

Before diving into CARAFE, it helps to understand the limitations of standard upsampling techniques:

- Bilinear interpolation is fast and parameter-free but only considers immediate neighboring pixels. It lacks awareness of broader semantic context, leading to blurry or misaligned feature reconstructions.

- Transposed convolutions (deconvolutions) introduce learnable parameters, but they use fixed kernels across all spatial locations and input samples. This one-size-fits-all approach fails to account for the varying content in different regions of an image—e.g., edges vs. textures vs. smooth backgrounds.
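To make the contrast concrete, here is a minimal PyTorch sketch of both baselines. PyTorch is assumed because MMDetection builds on it, and the tensor sizes are arbitrary. `F.interpolate` has no parameters at all. `nn.ConvTranspose2d` learns a single set of weights and then applies them identically at every position and for every input.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Toy feature map: batch 1, 8 channels, 16x16 spatial grid (arbitrary sizes).
x = torch.randn(1, 8, 16, 16)

# Bilinear interpolation: no parameters; each output value is a fixed
# weighted average of the 2x2 nearest input positions.
up_bilinear = F.interpolate(x, scale_factor=2, mode="bilinear", align_corners=False)

# Transposed convolution: learnable, but the same 4x4 kernel weights are
# reused at every spatial position and for every input sample.
deconv = nn.ConvTranspose2d(8, 8, kernel_size=4, stride=2, padding=1)
up_deconv = deconv(x)

print(up_bilinear.shape)  # torch.Size([1, 8, 32, 32])
print(up_deconv.shape)    # torch.Size([1, 8, 32, 32])
print(sum(p.numel() for p in deconv.parameters()))  # 1032 (8*8*4*4 weights + 8 biases)
```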

These shortcomings become especially problematic in dense prediction tasks, where pixel-level accuracy matters.

How CARAFE Solves These Problems

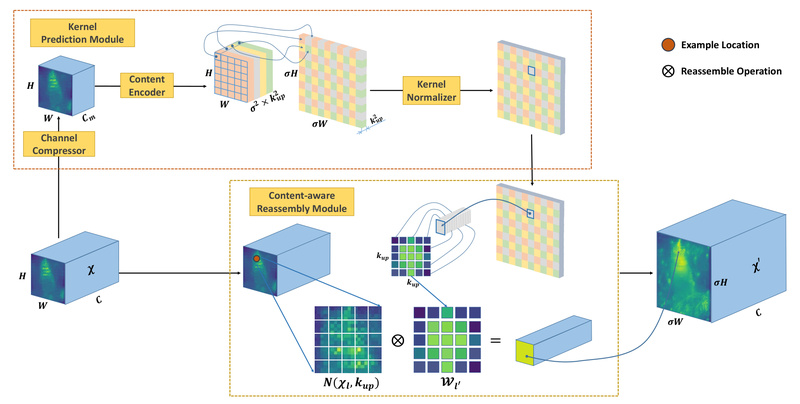

CARAFE introduces a content-aware, dynamic upsampling mechanism that addresses both issues simultaneously:

- Large Receptive Field: Instead of relying on a tiny sub-pixel neighborhood (bilinear interpolation uses only the 2×2 nearest inputs), CARAFE aggregates information from a larger local window around each location (5×5 in the commonly used default configuration), enabling richer contextual reasoning during upsampling.

- Content-Aware Kernels: For each output location, CARAFE predicts a custom reassembly kernel from the input features themselves and normalizes it with a softmax. Because the kernel depends on the content, the operator adapts to each input and each location. It can treat edges differently from smooth regions instead of applying one fixed filter everywhere.

- Minimal Overhead: Despite its sophistication, CARAFE is lightweight. Its kernel predictor runs on a channel-compressed version of the input, so it adds only a small amount of latency and memory, making it practical for real-world deployment.
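The mechanism can be sketched in plain PyTorch. The class below is a hypothetical, unoptimized illustration, not MMCV's CUDA implementation. It assumes the two-stage structure described in the CARAFE paper. First comes kernel prediction: a 1×1 channel compressor, a content encoder that predicts σ²·k_up² weights per input pixel, a pixel shuffle, and a softmax. Second comes content-aware reassembly, a weighted sum over each k_up×k_up neighborhood. The defaults (`k_up=5`, `k_enc=3`, `mid_channels=64`) are assumptions chosen to match commonly cited settings.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CARAFESketch(nn.Module):
    """Hypothetical, minimal CARAFE-style upsampler (illustration only)."""

    def __init__(self, channels, scale=2, k_up=5, k_enc=3, mid_channels=64):
        super().__init__()
        self.scale, self.k_up = scale, k_up
        # Kernel prediction, step 1: compress channels with a 1x1 conv.
        self.compress = nn.Conv2d(channels, mid_channels, kernel_size=1)
        # Step 2: content encoder predicts scale^2 * k_up^2 weights per input pixel.
        self.encode = nn.Conv2d(mid_channels, scale * scale * k_up * k_up,
                                kernel_size=k_enc, padding=k_enc // 2)

    def forward(self, x):
        b, c, h, w = x.shape
        s, k = self.scale, self.k_up
        # (B, s^2*k^2, H, W) -> (B, k^2, sH, sW): one k*k kernel per output pixel.
        kernels = F.pixel_shuffle(self.encode(self.compress(x)), s)
        # Normalize each location's kernel so its k*k weights sum to 1.
        kernels = F.softmax(kernels, dim=1)
        # Gather the k x k neighborhood around every input pixel.
        patches = F.unfold(x, kernel_size=k, padding=k // 2)      # (B, C*k^2, H*W)
        patches = patches.reshape(b, c * k * k, h, w)
        # Each output pixel reuses the neighborhood of its source input pixel.
        patches = F.interpolate(patches, scale_factor=s, mode="nearest")
        patches = patches.reshape(b, c, k * k, h * s, w * s)
        # Reassembly: per-location weighted sum over the k*k neighborhood.
        return torch.einsum("bckhw,bkhw->bchw", patches, kernels)


torch.manual_seed(0)
upsampler = CARAFESketch(channels=16, scale=2)
x = torch.randn(2, 16, 8, 8)
print(upsampler(x).shape)  # torch.Size([2, 16, 16, 16])
```

This version materializes every neighborhood with `F.unfold`, so its memory grows with C·k_up²·σ²·H·W. Production implementations use a dedicated CUDA operator to avoid that cost.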

In the original paper’s benchmarks, CARAFE improved object detection by 1.2% AP, instance segmentation by 1.3% AP, semantic segmentation by 1.8% mIoU, and image inpainting by 1.1 dB PSNR, with negligible computational overhead.
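A rough cost model shows where the low overhead comes from. The sketch below counts multiply-accumulates (MACs) per input pixel for 2× upsampling. It assumes C = 256 channels (a typical FPN width), a compressed width of 64, a 3×3 content encoder, a 5×5 reassembly kernel, and, for comparison, a hypothetical 4×4 transposed convolution. It ignores biases, the softmax, and memory traffic, so treat the numbers as estimates, not measured latency.

```python
def carafe_macs(c, c_mid=64, k_enc=3, k_up=5, scale=2):
    """Rough MACs per input pixel for a CARAFE-style upsampler."""
    compressor = c * c_mid                                 # 1x1 conv: C -> C_mid
    encoder = k_enc ** 2 * c_mid * scale ** 2 * k_up ** 2  # k_enc x k_enc conv: C_mid -> s^2 * k_up^2
    reassembly = scale ** 2 * k_up ** 2 * c                # s^2 outputs, each a k_up^2-tap sum per channel
    return compressor + encoder + reassembly


def deconv_macs(c_in, c_out, kernel=4):
    """MACs per input pixel for a transposed conv: each input scatters a kernel x kernel patch."""
    return c_in * c_out * kernel ** 2


def bilinear_macs(c, scale=2):
    """Bilinear: about 4 weighted taps per output value, scale^2 outputs per input pixel."""
    return scale ** 2 * 4 * c


c = 256
print(carafe_macs(c))                                # 99584
print(deconv_macs(c, c))                             # 1048576
print(bilinear_macs(c))                              # 4096
print(round(deconv_macs(c, c) / carafe_macs(c), 1))  # 10.5
```

Under these assumptions, CARAFE costs roughly a tenth of a 4×4 transposed convolution but about 24× more than bilinear interpolation. It is cheap relative to learned upsamplers, yet not free.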

Ideal Use Cases

CARAFE excels in any scenario that requires high-fidelity reconstruction of spatial feature maps. It’s particularly valuable for:

- Object detection (e.g., in FPN-based detectors like Faster R-CNN or RetinaNet)

- Instance and semantic segmentation (e.g., Mask R-CNN, SOLO, or Mask2Former)

- Image inpainting or restoration tasks where structural coherence matters

If your model uses a feature pyramid or multi-scale fusion neck (like PAFPN), swapping in CARAFE can be a simple yet powerful upgrade.

Getting Started with CARAFE

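The good news? You don’t need to implement CARAFE from scratch. It’s already available in MMDetection, a leading open-source object detection framework built on PyTorch, and the underlying operator is implemented in MMCV, MMDetection’s companion library of computer-vision ops (`mmcv.ops.CARAFEPack`).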

To use it:

- Configure your model’s neck component (e.g., an FPN variant) to use CARAFE as the upsampling method. MMDetection provides an `FPN_CARAFE` neck for this purpose.

- No special dependencies are required beyond a standard MMDetection installation (PyTorch 1.8+).

- Pre-trained models and configs with CARAFE are included in the model zoo, allowing immediate evaluation.

Integration is straightforward. The change is usually confined to the neck section of the config file (switching the neck type and its upsampling settings), and it is compatible with most backbones and heads.
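A config for Faster R-CNN with a CARAFE-based FPN might look roughly like the following. It is modeled on the configs MMDetection ships under `configs/carafe/`, but the base-config path and field names can differ between MMDetection versions, so treat it as a sketch and check it against the files in your installation. The neck `type` becomes `FPN_CARAFE`, and `upsample_cfg` selects the `carafe` operator and its kernel sizes.

```python
# Sketch of an MMDetection-style config (MMDetection 3.x naming assumed).
_base_ = '../faster_rcnn/faster-rcnn_r50_fpn_1x_coco.py'  # base config path: assumption, verify locally

model = dict(
    neck=dict(
        type='FPN_CARAFE',                   # FPN variant using CARAFE for top-down upsampling
        in_channels=[256, 512, 1024, 2048],  # ResNet-50 stage widths
        out_channels=256,
        num_outs=5,
        start_level=0,
        end_level=-1,
        norm_cfg=None,
        act_cfg=None,
        order=('conv', 'norm', 'act'),
        upsample_cfg=dict(
            type='carafe',
            up_kernel=5,             # reassembly kernel size (k_up)
            up_group=1,
            encoder_kernel=3,        # content-encoder kernel size (k_enc)
            encoder_dilation=1,
            compressed_channels=64,  # channel-compressor width
        ),
    ),
)
```

The config can then be passed to MMDetection’s usual training and evaluation scripts like any other.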

Limitations and When to Avoid CARAFE

While powerful, CARAFE isn’t a universal solution:

- It’s designed specifically for dense prediction. For tasks like image classification—where upsampling isn’t needed—it offers no benefit.

- Though lightweight, it still introduces some overhead compared to bilinear interpolation. In ultra-low-latency systems (e.g., real-time embedded vision with strict FPS requirements), the simplest method may still be preferable.

- CARAFE’s kernel predictor has learnable weights that are trained together with the rest of the model, so it operates on feature maps inside a neural network rather than serving as a standalone image-resizing tool.

Summary

CARAFE redefines feature upsampling by making it intelligent, adaptive, and efficient. By dynamically reassembling features based on local content and leveraging a wide receptive field, it consistently boosts accuracy in dense prediction tasks while adding only a small computational cost. With native support in MMDetection and proven gains across multiple benchmarks, CARAFE is a low-risk, high-reward upgrade for practitioners working on object detection, segmentation, or related vision problems. If your project involves reconstructing detailed spatial representations from coarse features, CARAFE is worth a try.