Chinese-BERT-wwm is a family of pre-trained language models specifically engineered to overcome a key limitation of the original BERT when processing Chinese text: the mismatch between subword tokenization and linguistic word boundaries. While standard Chinese BERT treats each character as an independent unit—ignoring how real Chinese words are formed—Chinese-BERT-wwm introduces Whole Word Masking (WWM) during pre-training. This seemingly small change leads to significant improvements in downstream NLP tasks that require deeper word-level semantic understanding.

Developed by researchers at the Harbin Institute of Technology and iFLYTEK, Chinese-BERT-wwm builds directly on Google’s official BERT codebase but rethinks the masking strategy for Chinese. The project offers multiple model variants—including BERT-wwm, BERT-wwm-ext, RoBERTa-wwm-ext, and their large counterparts—trained on up to 5.4 billion tokens of diverse Chinese text. All models are openly available and fully compatible with Hugging Face Transformers and PaddleHub, making them accessible to researchers and developers worldwide.

Whether you’re building a question-answering system for legal documents, analyzing sentiment in news articles, or fine-tuning a classifier for formal Chinese text, Chinese-BERT-wwm provides a robust, well-evaluated foundation backed by extensive benchmarking across 10+ standard Chinese NLP datasets.

What Makes Chinese-BERT-wwm Different?

Whole Word Masking Tailored for Chinese

In standard BERT, Chinese text is tokenized character-by-character because Chinese lacks explicit whitespace between words. During masked language modeling (MLM), individual characters may be randomly masked—even if they belong to the same multi-character word. This can confuse the model, as it never learns to reconstruct complete words as coherent units.

Chinese-BERT-wwm solves this by applying Whole Word Masking (WWM): whenever any character from a word is selected for masking, all characters in that word are masked together. For example, if the word “模型” (model) appears in a sentence, both “模” and “型” are masked simultaneously. This forces the model to learn contextual representations at the word level rather than the character level—a critical alignment with how Chinese is actually used and understood.

This strategy, originally proposed by Google for English, is adapted here using word segmentation tools (like LTP) to identify word boundaries in Chinese. The result? Richer semantic representations that better capture real-world language structure.
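The masking rule described above can be sketched in a few lines. This is a minimal illustration, not the project's pre-training code. It assumes the sentence has already been segmented into words (supplied by hand here, where the project would use a segmenter such as LTP). The function name `whole_word_mask`, the 15% default rate, and the per-word random selection are choices made for the example. It also skips BERT's usual mix of `[MASK]`, random, and unchanged replacements and always writes `[MASK]`.

```python
import random
from typing import List, Optional


def whole_word_mask(words: List[str], mask_rate: float = 0.15,
                    rng: Optional[random.Random] = None) -> List[str]:
    """Return character-level tokens where each selected word is fully masked.

    `words` is a pre-segmented sentence. Every character becomes one token,
    mirroring character-level tokenization of Chinese text.
    """
    rng = rng or random.Random()
    tokens: List[str] = []
    for word in words:
        chars = list(word)
        # Simplification: each word is selected independently with
        # probability `mask_rate`.
        if rng.random() < mask_rate:
            # Mask every character of the chosen word together.
            tokens.extend(["[MASK]"] * len(chars))
        else:
            tokens.extend(chars)
    return tokens


# Hand-segmented example: "use / language / model / to / predict"
segmented = ["使用", "语言", "模型", "来", "预测"]
print(whole_word_mask(segmented, mask_rate=0.5, rng=random.Random(0)))
```

Whatever the random draw, a word such as “模型” appears in the output either as two intact characters or as two `[MASK]` tokens, never as a mix of the two.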

A Full Suite of Optimized Models

The project doesn’t stop at one model. It provides a comprehensive suite:

- BERT-wwm: Trained on Chinese Wikipedia (0.4B tokens), using WWM.

- BERT-wwm-ext: Same architecture but trained on an extended corpus (5.4B tokens) including encyclopedia entries, news, and Q&A forums.

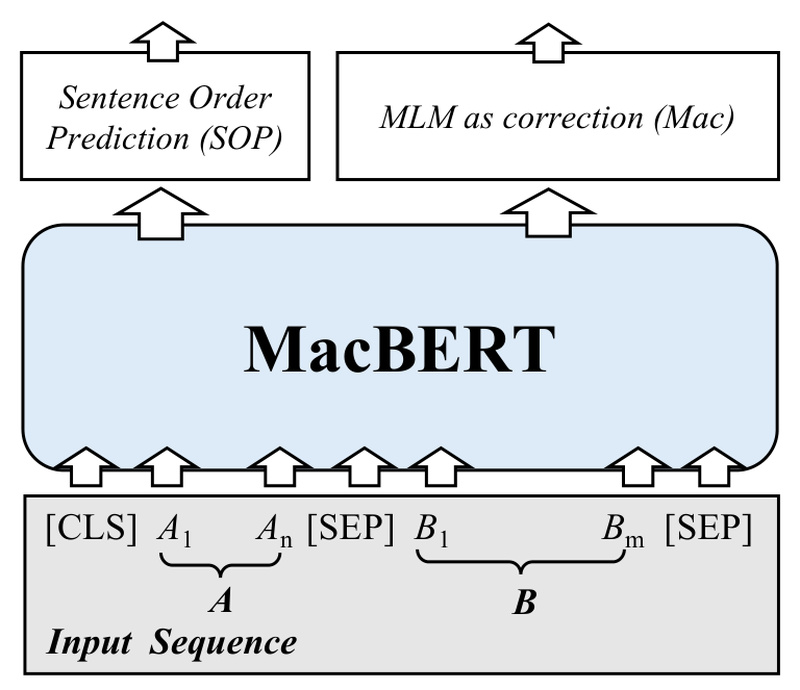

- RoBERTa-wwm-ext: Removes the Next Sentence Prediction (NSP) task, trains for more steps, and trains directly at a maximum sequence length of 512 rather than starting at 128, all while retaining WWM.

- RoBERTa-wwm-ext-large: A 24-layer, 330M-parameter variant offering state-of-the-art performance on demanding tasks.

- RBT3 / RBTL3: Lightweight 3-layer models for resource-constrained environments, derived from base and large architectures respectively.

This variety lets you choose the right balance of performance, speed, and memory footprint for your use case.
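One way to make that trade-off explicit in code is a small lookup table. The sketch below is illustrative only. The `pick_model` helper and its layer-budget rule are hypothetical, and the Hugging Face identifiers other than `hfl/chinese-bert-wwm` are assumed to follow the same `hfl/` naming, so verify them on the hub before relying on them. Layer counts are the ones given in the text.

```python
from typing import Dict, NamedTuple


class Variant(NamedTuple):
    hub_id: str  # Hugging Face identifier (assumed naming, verify on the hub)
    layers: int  # Transformer layer count as described in the text


VARIANTS: Dict[str, Variant] = {
    "BERT-wwm": Variant("hfl/chinese-bert-wwm", 12),
    "BERT-wwm-ext": Variant("hfl/chinese-bert-wwm-ext", 12),
    "RoBERTa-wwm-ext": Variant("hfl/chinese-roberta-wwm-ext", 12),
    "RoBERTa-wwm-ext-large": Variant("hfl/chinese-roberta-wwm-ext-large", 24),
    "RBT3": Variant("hfl/rbt3", 3),
    "RBTL3": Variant("hfl/rbtl3", 3),
}


def pick_model(max_layers: int) -> str:
    """Hypothetical helper: hub id of the deepest variant within a layer budget."""
    eligible = [v for v in VARIANTS.values() if v.layers <= max_layers]
    if not eligible:
        raise ValueError(f"no variant has <= {max_layers} layers")
    # max() keeps the first of equally deep variants, so table order breaks ties.
    return max(eligible, key=lambda v: v.layers).hub_id


print(pick_model(3))
print(pick_model(24))
```

A layer count is a crude proxy for speed and memory. A real selection would also weigh the training corpus and the target task.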

Where Chinese-BERT-wwm Excels: Ideal Use Cases

Chinese-BERT-wwm shines in tasks involving formal, structured, or long-form Chinese text, especially where word-level semantics matter. Based on published results across 10+ benchmarks, the models consistently outperform standard Chinese BERT and often rival or exceed ERNIE on key metrics.

Reading Comprehension & QA

On datasets like CMRC 2018 (Simplified Chinese) and DRCD (Traditional Chinese), RoBERTa-wwm-ext-large achieves F1 scores of 90.6 and 94.5, respectively—significantly higher than baseline BERT. This makes it ideal for building document-based QA systems in legal, educational, or enterprise settings.

Sentiment and Text Classification

For ChnSentiCorp (sentiment analysis), the large model reaches 95.8% accuracy. On THUCNews (10-class news categorization), it maintains top-tier performance (>97% accuracy), proving its strength in formal media content.

Natural Language Inference & Semantic Similarity

On XNLI, RoBERTa-wwm-ext-large hits 81.2% accuracy, demonstrating robust reasoning. For sentence-pair tasks like LCQMC and BQ Corpus, it delivers reliable performance in detecting semantic equivalence—useful for chatbot intent matching or duplicate question detection.

Crucially, these results are reported with 10 independent runs, including both average and peak scores, ensuring reliability and reproducibility.
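Reporting both numbers is straightforward once per-run scores are collected. The sketch below assumes a list of accuracy values from ten independent fine-tuning runs. The values are placeholders invented for illustration, not results from the project, and `summarize_runs` is a hypothetical helper.

```python
from statistics import mean
from typing import Dict, Sequence


def summarize_runs(scores: Sequence[float]) -> Dict[str, float]:
    """Return the average and the best score over independent runs."""
    if not scores:
        raise ValueError("need at least one run")
    return {"average": round(mean(scores), 2), "peak": max(scores)}


# Placeholder accuracies (%) for ten runs with different random seeds.
placeholder_scores = [94.0, 95.0, 94.5, 95.5, 94.0, 95.0, 94.5, 95.0, 94.5, 95.0]
summary = summarize_runs(placeholder_scores)
print(f"average={summary['average']:.2f}, peak={summary['peak']:.2f}")
```

The average shows what a typical run delivers, while the peak shows the best case, so the gap between them gives a rough sense of run-to-run variance.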

Getting Started: Simple Integration for English-Speaking Developers

You don’t need to be a Chinese NLP expert to use these models. Thanks to Hugging Face integration, loading a model takes only a few lines:

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained("hfl/chinese-bert-wwm")

model = BertModel.from_pretrained("hfl/chinese-bert-wwm")

Important: Even for RoBERTa-wwm-ext variants, always use BertTokenizer and BertModel—not RobertaTokenizer. These are BERT-style models with RoBERTa-inspired training, not true RoBERTa architectures.

For PaddlePaddle users, PaddleHub offers one-liner loading:

import paddlehub as hub

module = hub.Module(name="chinese-bert-wwm")

Both TensorFlow and PyTorch checkpoints are available via Hugging Face. Model cards include full configuration files (bert_config.json) and vocabularies (vocab.txt), identical to Google’s original Chinese BERT—ensuring drop-in compatibility.

Limitations and Practical Considerations

While powerful, Chinese-BERT-wwm isn’t a universal solution. Keep these points in mind:

- No built-in MLM head weights: The released checkpoints exclude the masked language modeling head. If you need to perform MLM (e.g., for fill-in-the-blank tasks), you’ll need to retrain the head on your data—just like with standard BERT.

- Optimized for formal text: Trained primarily on Wikipedia and news, these models may underperform on informal social media text (e.g., Weibo) compared to models like ERNIE, which incorporates web forum data.

- Base-sized by default: Except for the “large” variant, most models are 12-layer (base) architectures. For highly complex reasoning tasks, the large model may be necessary—but it demands more GPU memory.

- Traditional Chinese support: Models work well on Traditional Chinese (e.g., DRCD), but avoid ERNIE for such texts—its vocabulary lacks Traditional characters.

Also note: learning rate matters. The authors provide task-specific recommendations (e.g., 2e-5 for sentiment analysis, 3e-5 for reading comprehension)—deviating significantly may hurt performance.
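A small lookup keeps those recommendations in one place. In this sketch only the two rates quoted above come from the text. The task keys, the `recommended_lr` helper, and the decision to raise an error for unlisted tasks rather than guess a default are assumptions made for illustration.

```python
from typing import Dict

# Initial learning rates quoted in the text; other tasks are deliberately left out.
RECOMMENDED_LR: Dict[str, float] = {
    "sentiment_analysis": 2e-5,
    "reading_comprehension": 3e-5,
}


def recommended_lr(task: str) -> float:
    """Hypothetical helper: look up the recommended learning rate for a task."""
    try:
        return RECOMMENDED_LR[task]
    except KeyError:
        raise KeyError(f"no recommendation recorded for task {task!r}") from None


print(recommended_lr("sentiment_analysis"))
```

Failing loudly on an unknown task is a deliberate choice here: silently falling back to a generic rate would hide exactly the kind of deviation the authors warn about.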

Why Choose Chinese-BERT-wwm Over Alternatives?

If your project involves Chinese language understanding, you’re likely choosing between standard BERT, ERNIE, or Chinese-BERT-wwm. Here’s how to decide:

- Choose Chinese-BERT-wwm if:

  - You work with formal, document-level Chinese (news, legal, academic).

  - Your task relies on accurate word-level semantics (e.g., named entity recognition, reading comprehension).

  - You value transparent, reproducible results across standard benchmarks.

  - You want open, well-maintained models with Hugging Face support.

- Consider ERNIE if:

  - Your data comes from informal domains like social media.

  - You can tolerate lower performance on Traditional Chinese.

- Stick with standard BERT only if:

  - You’re constrained by legacy systems or need maximum compatibility without fine-tuning.

Chinese-BERT-wwm bridges the gap between linguistic realism and deep learning efficiency—delivering measurable gains where word boundaries truly matter.

Summary

Chinese-BERT-wwm rethinks pre-training for Chinese by aligning the masking strategy with actual word structure. Its family of models is rigorously evaluated, openly shared, and easy to integrate, and it offers a compelling upgrade over standard Chinese BERT for tasks requiring nuanced language understanding. If your work involves processing formal Chinese text and you need a reliable, high-performance foundation, Chinese-BERT-wwm is a choice you can make with confidence.