Despite massive advances in large language models (LLMs) for coding, a silent crisis persists: debugging remains largely unsolved. Top models such as Claude Opus 4.1 and GPT-4.1 score between 55% and 73% on code generation benchmarks, but fall below 15% on real-world debugging tasks. This stark “debugging gap” reveals a fundamental flaw in current AI tooling: models are trained to write code, not fix it.

Enter Chronos—the world’s first debugging-first language model, purpose-built from the ground up to understand, diagnose, and repair complex bugs across million-line codebases. Developed by Kodezi, Chronos isn’t another code completion engine with a fancy prompt. It’s a specialized AI system trained exclusively on debug workflows, delivering a 67.3% autonomous fix rate and slashing debugging time by 40%. For engineering teams drowning in flaky tests, concurrency bugs, and opaque stack traces, Chronos represents a paradigm shift: AI that finally acts like a skilled debugger, not just a code copier.

Why General-Purpose Models Fail at Debugging

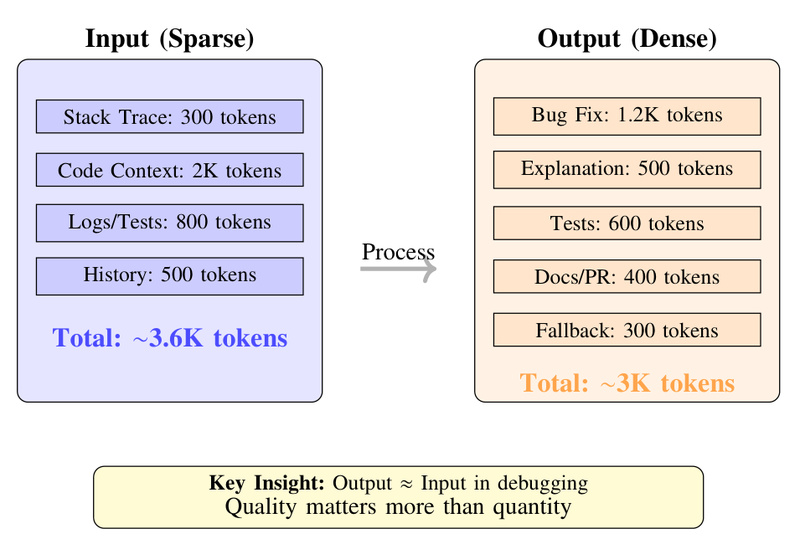

Most coding LLMs excel at generating syntactically correct code from clear prompts. But debugging is a fundamentally different task—one that demands contextual reasoning across files, temporal awareness of code history, and the ability to test, hypothesize, and refine fixes iteratively.

The data speaks clearly:

- Claude Opus 4.1: 72.5% on code generation, 14.2% on debugging

- GPT-4.1: 54.6% on code generation, 13.8% on debugging

This drop of roughly 41 to 58 percentage points isn’t a bug; it’s a feature of architectures trained on completion, not diagnosis. When bugs span multiple files or involve subtle logic errors, generic models lack the structured reasoning and repository-scale awareness needed to trace root causes.
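As a quick check on the size of the gap, the percentage-point drop for each model can be computed directly from the scores listed above. The dictionary simply restates those published figures; nothing else is assumed.

```python
# Published scores from the list above, in percent: (code generation, debugging)
SCORES = {
    "Claude Opus 4.1": (72.5, 14.2),
    "GPT-4.1": (54.6, 13.8),
}

def debugging_gap(code_gen: float, debugging: float) -> float:
    """Percentage-point drop from code generation to debugging, rounded to 0.1."""
    return round(code_gen - debugging, 1)

for model, (gen, dbg) in SCORES.items():
    print(f"{model}: {debugging_gap(gen, dbg)} pp drop")
# Claude Opus 4.1: 58.3 pp drop
# GPT-4.1: 40.8 pp drop
```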

Chronos closes this gap by rejecting the “one model fits all” approach. Instead, it’s engineered for one mission: debugging.

Core Innovations That Make Chronos Work

Chronos isn’t just fine-tuned; it’s rearchitected. Its seven-layer system integrates domain-specific components that general models lack. Four design choices stand out:

Trained on Real Debugging, Not Code Completion

Chronos learns from 42.5 million real debugging sessions, including GitHub issues with verified fixes, CI/CD logs, production error traces, and curated benchmarks like Defects4J. This means it understands not just what code looks like, but how bugs manifest and get resolved in practice.
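The difference between completion data and debugging data is easiest to see in what a single training example contains. The record below is a hypothetical schema, not Kodezi’s actual data format: it pairs a symptom with the fix that resolved it, and a filter keeps only examples whose fix was verified by tests, mirroring the “issues with verified fixes” criterion described above. All field names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class DebugSession:
    """Hypothetical shape of one debugging training example (illustrative only)."""
    source: str                   # e.g. "github_issue", "ci_log", "production_trace"
    symptom: str                  # error message, failing test name, or issue text
    stack_trace: Optional[str]    # may be absent, e.g. for pure logic bugs
    fix_diff: Optional[str]       # patch that resolved the bug, if one exists
    tests_passed_after_fix: bool  # whether the fix was verified by passing tests

def is_usable_for_training(session: DebugSession) -> bool:
    """Keep only sessions that have a fix and whose fix was verified by tests."""
    return session.fix_diff is not None and session.tests_passed_after_fix

sessions = [
    DebugSession("github_issue", "KeyError: 'user_id'", "Traceback ...",
                 "--- a/app.py\n+++ b/app.py", True),
    DebugSession("ci_log", "test_login failed", None, None, False),
]
training_set = [s for s in sessions if is_usable_for_training(s)]
print(len(training_set))  # 1
```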

Adaptive Graph-Guided Retrieval (AGR)

Debugging often requires jumping across dozens of files. Chronos uses multi-hop graph traversal to navigate codebases up to 10 million lines with 92% precision and 85% recall. Unlike flat retrieval or brute-force context stuffing, AGR dynamically expands its search based on code dependencies, reducing retrieval time by 3.8x while maintaining high relevance.
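Dependency-guided, multi-hop expansion can be sketched as a breadth-first walk over a file dependency graph. This is a simplified sketch, not Kodezi’s AGR algorithm: the graph, the seed files, the per-hop `decay` factor and the `min_score` cutoff are all assumptions chosen for illustration. The precision and recall figures quoted above are standard set-based retrieval metrics, computed here against a hypothetical set of files that actually matter for the bug.

```python
from collections import deque

def adaptive_graph_retrieval(graph, seeds, max_hops=3, decay=0.5, min_score=0.2):
    """Hypothetical AGR-style sketch: expand hop by hop from seed files,
    decaying relevance with distance and pruning branches that score too low."""
    scores = {s: 1.0 for s in seeds}
    frontier = deque((s, 0) for s in seeds)
    while frontier:
        node, hops = frontier.popleft()
        if hops == max_hops:
            continue
        next_score = scores[node] * decay
        if next_score < min_score:
            continue  # adaptive cutoff: neighbours would no longer be relevant enough
        for neighbor in graph.get(node, []):
            if neighbor not in scores:
                scores[neighbor] = next_score
                frontier.append((neighbor, hops + 1))
    # Highest-scoring (closest) files first; ties keep discovery order
    return sorted(scores, key=scores.get, reverse=True)

def precision_recall(retrieved, relevant):
    """Set-based precision and recall of retrieved files."""
    hits = len(set(retrieved) & set(relevant))
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

# Hypothetical dependency graph: file -> files it imports or calls into
graph = {
    "views.py": ["models.py", "forms.py"],
    "models.py": ["db.py"],
    "forms.py": ["validators.py"],
    "db.py": ["config.py"],
}
retrieved = adaptive_graph_retrieval(graph, seeds=["views.py"])
print(retrieved)  # config.py is pruned: three hops away, its score would fall below 0.2
print(precision_recall(retrieved, {"views.py", "models.py", "db.py", "config.py"}))
```

The decay-and-cutoff rule is what makes the expansion adaptive in this sketch: nearby dependencies are explored, while distant ones are dropped unless a different scoring rule raises their relevance.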

Persistent Debug Memory (PDM)

Chronos maintains a persistent, repository-specific memory informed by 15M+ past debugging sessions. As it works on a codebase, it learns patterns, such as common anti-patterns in a team’s React components or typical memory leaks in their C++ modules. This “debugging memory” improves success rates from 35% to 65%+ over time and achieves an 87% cache hit rate for recurring bug types.
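The cache-hit behaviour described above can be illustrated with a small keyed memory. This is a hypothetical sketch, not the PDM design: it assumes recurring bugs can be matched by normalizing error messages into a signature (here, by masking quoted values and numbers), and it counts how often a lookup finds a fix that worked before. The class and method names are illustrative.

```python
import re
from collections import defaultdict

class DebugMemory:
    """Hypothetical per-repository memory: maps a normalized bug signature
    to fixes that worked before, and tracks the cache hit rate."""

    def __init__(self):
        self._fixes = defaultdict(list)
        self.hits = 0
        self.lookups = 0

    @staticmethod
    def signature(error: str) -> str:
        # Mask volatile details (quoted values, then numbers) so recurring bugs share a key
        error = re.sub(r"'[^']*'", "'<val>'", error)
        return re.sub(r"\d+", "<n>", error)

    def lookup(self, error: str) -> list:
        """Return previously successful fixes for this bug signature, if any."""
        self.lookups += 1
        fixes = self._fixes.get(self.signature(error), [])  # .get avoids creating keys
        if fixes:
            self.hits += 1
        return list(fixes)

    def remember(self, error: str, fix: str) -> None:
        self._fixes[self.signature(error)].append(fix)

    @property
    def hit_rate(self) -> float:
        return self.hits / self.lookups if self.lookups else 0.0

memory = DebugMemory()
memory.lookup("KeyError: 'user_id' at line 42")  # miss: nothing stored yet
memory.remember("KeyError: 'user_id' at line 42", "use dict.get() with a default")
print(memory.lookup("KeyError: 'order_id' at line 97"))  # hit: same signature
print(memory.hit_rate)  # 0.5
```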

Autonomous Fix-Test-Refine Loop

Instead of guessing once and hoping, Chronos runs iterative debugging cycles: propose a fix → execute in a sandbox → analyze test output → refine. On average, it takes 7.8 iterations to land a verified patch—far more thorough than competitors that typically make 1–2 attempts. This loop enables 67.3% fully autonomous fix accuracy, even on cross-file logic errors.
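The loop itself is simple to express; the hard parts are the quality of each proposal and the analysis of test output. The sketch below is a generic version under stated assumptions: `propose` stands in for the model and `run_tests` for the sandboxed test run (both hypothetical callables), and the iteration cap is arbitrary. The toy usage “repairs” an absolute-value function by trying candidate patches until the tests pass.

```python
from typing import Callable, Optional

def fix_test_refine(
    propose: Callable[[list], str],
    run_tests: Callable[[str], tuple],
    max_iterations: int = 10,
) -> tuple:
    """Generic propose -> test -> analyze -> refine loop.
    Returns (verified patch or None, number of iterations used)."""
    feedback: list = []
    for iteration in range(1, max_iterations + 1):
        patch = propose(feedback)          # propose a fix using all feedback so far
        passed, output = run_tests(patch)  # execute the candidate in a sandbox
        if passed:
            return patch, iteration        # tests pass: patch is verified
        feedback.append(output)            # keep the failure output to refine the next attempt
    return None, max_iterations

# Toy stand-ins (hypothetical): candidate patches for an abs() bug
CANDIDATES = ["lambda x: x", "lambda x: -x", "lambda x: abs(x)"]

def toy_propose(feedback: list) -> str:
    # A real model would read the feedback; this stub just tries the next candidate
    return CANDIDATES[len(feedback)]

def toy_run_tests(patch: str) -> tuple:
    fn = eval(patch)  # toy "sandbox"; never eval untrusted code in practice
    failures = [x for x in (-2, 0, 3) if fn(x) != abs(x)]
    return (not failures, f"failed for inputs {failures}")

patch, iterations = fix_test_refine(toy_propose, toy_run_tests)
print(patch, iterations)  # lambda x: abs(x) 3
```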

Where Chronos Delivers Real-World Value

Chronos shines in scenarios where generic AI fails:

- Multi-file logic bugs: In Django web apps, Chronos achieves 90.4% success by tracing data flow from views to models to templates.

- Concurrency and memory issues: It fixes 58.3% of race conditions and 61.7% of memory leaks—problems that stump most LLMs (<5% success).

- Large legacy codebases: On repos over 1 million lines, Chronos is 15.7x more effective than the best baseline (59.7% vs. 3.8%).

- Onboarding acceleration: The Explainability Layer generates human-readable root cause analyses, helping junior engineers understand why a bug occurred, not just how to patch it.

For teams using monorepos, microservices, or inherited codebases, Chronos transforms debugging from a chaotic hunt into a systematic, AI-guided process.

How to Use Chronos Today—and Tomorrow

While the full Chronos model is proprietary, Kodezi provides immediate access to validation tools:

- Open research repository: The github.com/Kodezi/chronos repo includes the SWE-bench Lite evaluation (80.33% success), the Multi-Random Retrieval (MRR) benchmark (500-sample dataset available), and reference implementations of AGR and PDM algorithms. Teams can run these benchmarks against their own models or codebases to verify claims.

- Integration-ready: Chronos will be embedded in Kodezi OS (beta in Q4 2025, GA in Q1 2026) and accessible via API. It already integrates with Kodezi’s CLI and Web IDE, requiring no custom pipeline engineering—just point it at your repo.

This means engineering leads can check Chronos’s published results against real-world benchmarks before committing, rather than relying on marketing slides.
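Reproducing a headline number like the SWE-bench Lite result comes down to counting resolved instances. SWE-bench Lite has 300 task instances, so, assuming the reported 80.33% covers the full split, it corresponds to 241 resolved instances. The helper below is a generic sketch, not a script from the Kodezi repository; the instance IDs and outcomes are placeholders.

```python
def success_rate(results: dict) -> float:
    """Percentage of benchmark instances whose patch passed that instance's tests."""
    if not results:
        return 0.0
    return 100 * sum(results.values()) / len(results)

# Placeholder outcomes for a 300-instance split: 241 resolved, 59 unresolved
results = {f"instance-{i}": i < 241 for i in range(300)}
print(f"{success_rate(results):.2f}%")  # 80.33%
```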

Known Limitations: Setting Realistic Expectations

Chronos is powerful—but not magic. Its current constraints include:

- Hardware-dependent bugs: Only 23.4% success on issues requiring physical device interaction (e.g., GPU driver quirks).

- Dynamic language errors: 41.2% success on runtime exceptions in highly dynamic languages (e.g., monkey-patched Python).

- Access model: The Chronos model itself is not open-source; only research artifacts (papers, benchmarks, docs) are publicly available, under the MIT License. Enterprise access begins Q4 2025.

These limitations reflect the frontier of AI debugging—not flaws in design. For teams primarily dealing with application-layer logic, concurrency, or API misuse bugs, Chronos already delivers transformative results.
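Part of what makes runtime errors in dynamic languages hard is that the code at the failure site can be correct as written. In the hypothetical Python example below, a method is monkey-patched elsewhere in the program, so the exception surfaces in `checkout()` even though the root cause is the line that reassigns `PaymentClient.charge`. The class and function names are illustrative.

```python
class PaymentClient:
    def charge(self, amount_cents: int) -> dict:
        return {"status": "ok", "amount": amount_cents}

def checkout(client: PaymentClient, total: int) -> str:
    # Read statically, this is safe: charge() is declared to return a dict
    return client.charge(total)["status"]

# Elsewhere (e.g. a plugin or test helper), the method is replaced at runtime
# with a version that returns None instead of a dict.
PaymentClient.charge = lambda self, amount_cents: None

error = None
try:
    checkout(PaymentClient(), 500)
except TypeError as exc:
    error = str(exc)  # raised inside checkout(), far from the real cause
print(error)  # 'NoneType' object is not subscriptable
```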

Summary

Chronos solves a critical, overlooked problem: AI that can debug like a human expert. By combining debugging-specific training, intelligent code retrieval, persistent memory, and autonomous refinement loops, it achieves 4–7x higher accuracy than general-purpose models on real bugs. If your team wastes hours on elusive logic errors, struggles with large codebases, or needs AI that understands failure—not just writes code—Chronos is the first tool purpose-built for that mission. With benchmarks publicly available and enterprise access coming late 2025, it’s time to move beyond code-generation-only AI and embrace debugging as a first-class capability.