In today’s fast-paced software development landscape, the ability to translate natural language instructions into functional code is no longer science fiction—it’s a practical necessity. Enter CodeGen, an open-source family of large language models (LLMs) developed by Salesforce AI Research specifically for program synthesis. Designed to understand both human intent and programming syntax, CodeGen bridges the gap between what developers say and what machines do.

Unlike proprietary code-generation tools that operate as black boxes, CodeGen is open: its models, training framework, and research are publicly available. This lets researchers, engineers, and technical teams inspect, adapt, and deploy code-generation capabilities without vendor lock-in, subject to the license attached to each checkpoint. Versions range from 350M to 16B parameters, including the 7B-parameter CodeGen2.5, which outperforms earlier 16B models, so CodeGen remains accessible to a broad technical audience.

Why CodeGen Stands Out

Unified Architecture and Training Strategy

CodeGen isn’t just another code LLM trained on GitHub dumps. The CodeGen2 series introduced a deliberate unification of four critical components:

- A prefix-LM architecture that blends encoder-decoder strengths into a single causal model

- A unified learning objective combining causal language modeling, span corruption, and infilling

- Intelligent infill sampling that enables models to fill in missing code segments mid-function

- Carefully curated data mixtures of programming and natural languages, trained over multiple epochs

This holistic approach ensures that CodeGen doesn’t just predict the next token—it comprehends context, structure, and intent across both natural and programming languages.
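To make the infilling objective concrete, the sketch below builds an infill prompt from the code before and after a gap, then trims the model's answer. It assumes the sentinel tokens given on the CodeGen2 model cards (<mask_1>, <sep>, <eom>, <|endoftext|>); the helper names are hypothetical, and the token strings should be checked against the model card for the checkpoint in use.

```python
# Sentinel tokens as described on the CodeGen2 model cards (assumption:
# verify them against the card for your checkpoint before relying on this).
MASK = "<mask_1>"
SEP = "<sep>"
END_OF_MASK = "<eom>"
END_OF_TEXT = "<|endoftext|>"


def format_infill_prompt(prefix: str, suffix: str) -> str:
    """Hypothetical helper: mark the gap, then ask the model to fill it."""
    return prefix + MASK + suffix + END_OF_TEXT + SEP + MASK


def extract_infill(generated_tail: str) -> str:
    """Keep only the text the model produced before the end-of-mask token."""
    return generated_tail.split(END_OF_MASK, 1)[0]


prompt = format_infill_prompt("def hello_world():\n    ", "\n    return name")
print(prompt)
```

The prompt string would be tokenized and passed to model.generate as in any other causal generation call; only the text after the prompt, up to <eom>, is the proposed infill.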

Efficiency That Defies Scale

Perhaps the most compelling evidence of CodeGen’s effectiveness is CodeGen2.5: a 7B-parameter model that outperforms earlier 16B models in code-generation benchmarks. This leap wasn’t achieved by brute force, but through smarter data composition, refined training recipes, and architectural insights distilled from extensive experimentation. For teams operating under compute or latency constraints, this means high-quality code generation is now achievable with smaller, faster, and more deployable models.

Open and Ready to Use

All CodeGen models are available on Hugging Face, and the full training pipeline is open-sourced via the Jaxformer library. This openness accelerates experimentation, fine-tuning, and integration into custom toolchains—something closed alternatives simply can’t match.

Ideal Use Cases

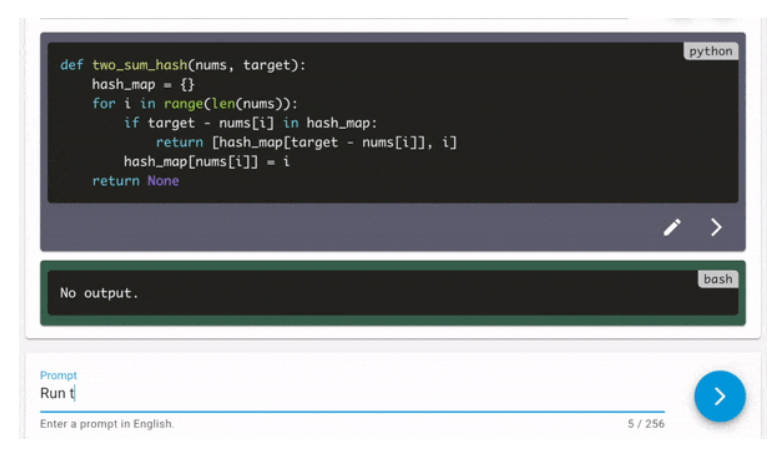

CodeGen shines in scenarios where natural language and code must interact fluidly:

- Intelligent Code Completion: Suggest entire functions from a comment such as "# this function prints hello world".

- Multi-Turn Program Synthesis: Iteratively refine code through conversational prompts, which suits interactive development environments.

- Code Infilling: Fill in missing logic inside existing functions, a critical feature for modern IDE plugins.

- Research & Education: Explore how LLMs learn programming semantics without proprietary barriers.

Whether you’re building an AI pair programmer, automating boilerplate code, or prototyping new programming assistants, CodeGen provides a robust, interpretable foundation.
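Multi-turn synthesis can be pictured as a loop that feeds every earlier instruction and completion back into the next prompt. The following is a minimal sketch of that idea, not the evaluation protocol from the CodeGen papers: multi_turn_synthesis and fake_generate are hypothetical names, and fake_generate is a stand-in for a real model call.

```python
from typing import Callable, List


def multi_turn_synthesis(turns: List[str], generate: Callable[[str], str]) -> str:
    """Run one generation per turn, passing the full history each time."""
    context = ""
    for instruction in turns:
        # Each natural-language turn is appended as a Python comment.
        context += f"# {instruction}\n"
        # The generator sees the whole history and returns only new code.
        completion = generate(context)
        context += completion.rstrip("\n") + "\n\n"
    return context


def fake_generate(prompt: str) -> str:
    """Stand-in for a model: emits one placeholder line per turn seen so far."""
    turn_index = prompt.count("# ")
    return f"step_{turn_index} = None"


print(multi_turn_synthesis(["load data", "sort it"], fake_generate))
```

In practice, generate would wrap tokenizer and model.generate calls and return only the newly generated text.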

Getting Started Is Simple

Using CodeGen requires only basic Python and PyTorch knowledge. Here’s how to generate code with each major version:

CodeGen1 (e.g., codegen-2B-mono)

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("Salesforce/codegen-2B-mono")

model = AutoModelForCausalLM.from_pretrained("Salesforce/codegen-2B-mono")

inputs = tokenizer("# this function prints hello world", return_tensors="pt")

sample = model.generate(**inputs, max_length=128)

print(tokenizer.decode(sample[0], truncate_before_pattern=[r"\n\n^#", "^'''", "\n\n\n"]))
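The truncate_before_pattern argument cuts the decoded text at the earliest match of any of the given regular expressions, so the output ends after the first function instead of running on to max_length. The sketch below is a simplified stand-in for that behavior, assuming multiline regex matching; truncate_before is a hypothetical name, and this is not the tokenizer's actual implementation.

```python
import re
from typing import List


def truncate_before(completion: str, patterns: List[str]) -> str:
    """Cut the text at the earliest position where any pattern matches."""
    compiled = [re.compile(pattern, re.MULTILINE) for pattern in patterns]
    matches = (regex.search(completion) for regex in compiled)
    starts = [match.start() for match in matches if match]
    # No pattern matched: return the completion unchanged.
    return completion[: min(starts)] if starts else completion


PATTERNS = [r"\n\n^#", "^'''", "\n\n\n"]
raw = (
    "# this function prints hello world\n"
    "def hello():\n"
    "    print('hello world')\n"
    "\n\n\n"
    "# next\n"
    "def other(): pass"
)
print(truncate_before(raw, PATTERNS))
```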

CodeGen2 (e.g., codegen2-7B)

tokenizer = AutoTokenizer.from_pretrained("Salesforce/codegen2-7B")

model = AutoModelForCausalLM.from_pretrained("Salesforce/codegen2-7B", trust_remote_code=True)

# ... rest identical to above

CodeGen2.5 (e.g., codegen25-7b-mono)

tokenizer = AutoTokenizer.from_pretrained("Salesforce/codegen25-7b-mono", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("Salesforce/codegen25-7b-mono")

# ... rest identical, with simpler decoding

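Note where trust_remote_code=True appears: on the model for CodeGen2 and on the tokenizer for CodeGen2.5. The flag allows transformers to download and run Python code shipped in the model repository, so review that code (or pin a specific revision) before enabling it.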
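The three loading recipes above can be gathered into one helper. This is a sketch under assumptions: loading_kwargs and load are hypothetical names, the family is guessed from the checkpoint name alone, and the flags simply mirror the snippets in this section.

```python
from typing import Dict, Tuple


def loading_kwargs(checkpoint: str) -> Tuple[Dict[str, bool], Dict[str, bool]]:
    """Return (tokenizer_kwargs, model_kwargs) for a CodeGen checkpoint name."""
    name = checkpoint.split("/")[-1].lower()
    if name.startswith("codegen25-"):
        # CodeGen2.5: the tokenizer is loaded with remote code.
        return {"trust_remote_code": True}, {}
    if name.startswith("codegen2-"):
        # CodeGen2: the model is loaded with remote code.
        return {}, {"trust_remote_code": True}
    if name.startswith("codegen-"):
        # CodeGen1: no extra flags.
        return {}, {}
    raise ValueError(f"not a recognized CodeGen checkpoint: {checkpoint}")


def load(checkpoint: str):
    """Load tokenizer and model; transformers is imported only when called."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer_kwargs, model_kwargs = loading_kwargs(checkpoint)
    tokenizer = AutoTokenizer.from_pretrained(checkpoint, **tokenizer_kwargs)
    model = AutoModelForCausalLM.from_pretrained(checkpoint, **model_kwargs)
    return tokenizer, model
```

Calling load("Salesforce/codegen2-7B") would then return a tokenizer and model ready for the generate call shown for CodeGen1.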

Limitations and Responsible Use

While powerful, CodeGen models come with important caveats:

- Research-Focused: These models are released to support academic work, not as production-ready SaaS solutions.

- Not Safety-Hardened: Generated code may contain bugs, vulnerabilities, or licensing issues. Always review outputs critically.

- Language Coverage: Pretrained variants like -mono are optimized for specific languages (e.g., Python); multilingual support varies.

- Ethical Deployment: Per Salesforce’s disclaimer, users must evaluate fairness, accuracy, and safety, especially in high-stakes environments like healthcare or finance.

In short: CodeGen is a tool for augmentation, not automation. Pair it with human oversight.
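One cheap first gate before human review is to confirm that generated Python at least parses. The sketch below uses the standard-library ast module; is_valid_python is a hypothetical helper, and a passing result says nothing about correctness, security, or licensing.

```python
import ast


def is_valid_python(source: str) -> bool:
    """Return True if the text parses as Python; it is never executed."""
    try:
        ast.parse(source)
    except (SyntaxError, ValueError):
        return False
    return True


print(is_valid_python("def hello():\n    print('hello world')\n"))
print(is_valid_python("def hello(:\n    pass\n"))
```

Completions that fail this check can be discarded or regenerated automatically; those that pass still need tests and review.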

Summary

CodeGen delivers on a critical promise: high-quality, open, and efficient program synthesis grounded in rigorous research. By unifying architecture, training, and data strategies, it reaches performance that rivals or exceeds larger models, all while remaining freely accessible. For developers building next-gen coding tools, researchers probing LLM capabilities, or educators demystifying AI for programmers, CodeGen offers a transparent, capable, and community-driven path forward.

If you’re evaluating code-generation models for integration, experimentation, or learning, CodeGen deserves a serious look—not just for what it does today, but for how openly it invites you to shape what it can do tomorrow.