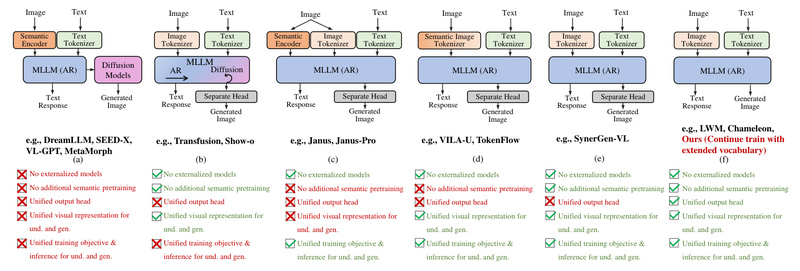

What if a single large language model (LLM) could both understand and generate high-quality images—without relying on external vision encoders…

Multimodal Generation

Emu3.5: A Native Multimodal World Model for Unified Vision-Language Generation and Reasoning

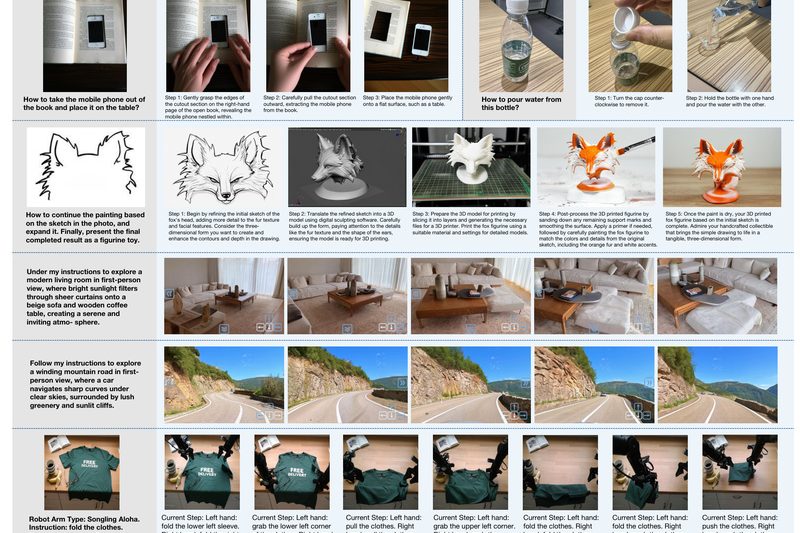

Imagine a single AI model that doesn’t just “see” or “read”—but seamlessly blends images and text in both input and…

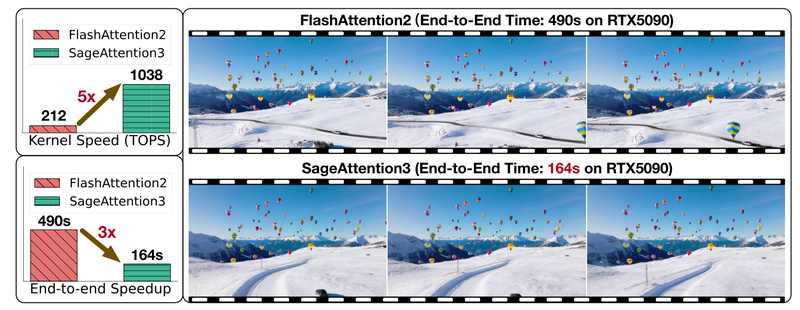

SageAttention3: 5x Faster LLM Inference on Blackwell GPUs with Plug-and-Play FP4 Attention and First-Ever 8-Bit Training Support

Attention mechanisms lie at the heart of modern large language models (LLMs) and multimodal architectures—but their quadratic computational complexity remains…