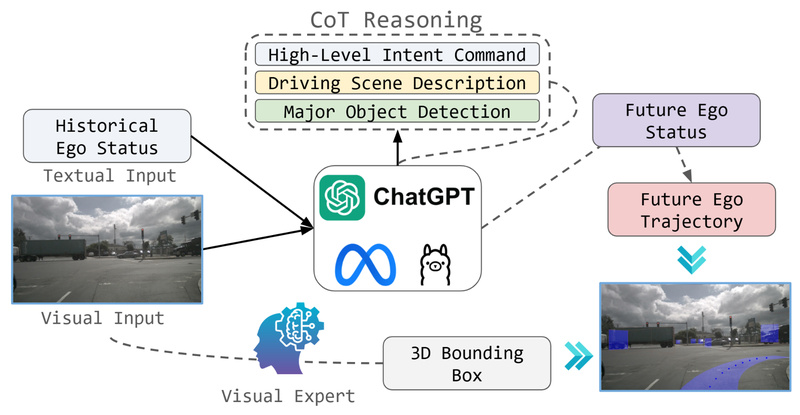

Autonomous driving research has long been bottlenecked by the need for massive datasets, expensive compute infrastructure, and proprietary end-to-end frameworks.…

Multimodal Reasoning

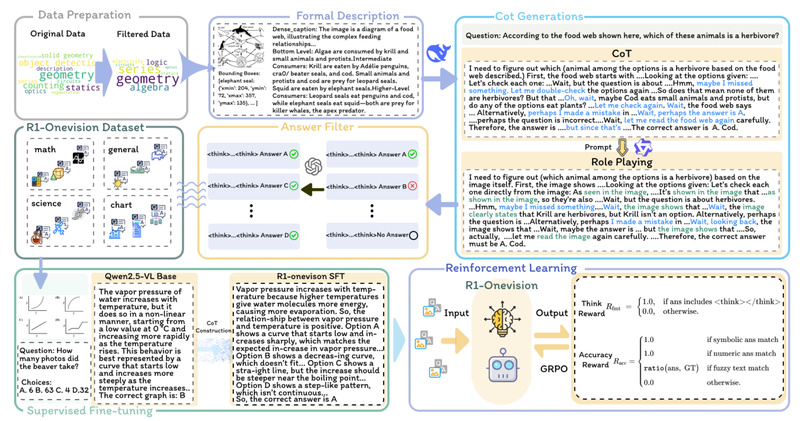

R1-Onevision: Solve Complex Visual Reasoning Problems with Step-by-Step Multimodal AI

In today’s AI landscape, most multimodal models can describe what’s in an image—but few can reason through it. If your…

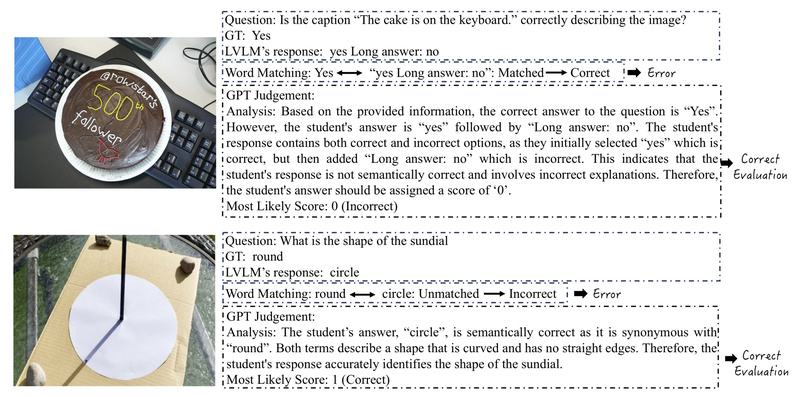

TinyLVLM-eHub: Fast, Lightweight Evaluation for Large Vision-Language Models Without Heavy Compute

As Large Vision-Language Models (LVLMs) grow increasingly capable—and increasingly complex—evaluating their multimodal reasoning, perception, and reliability has become a significant…

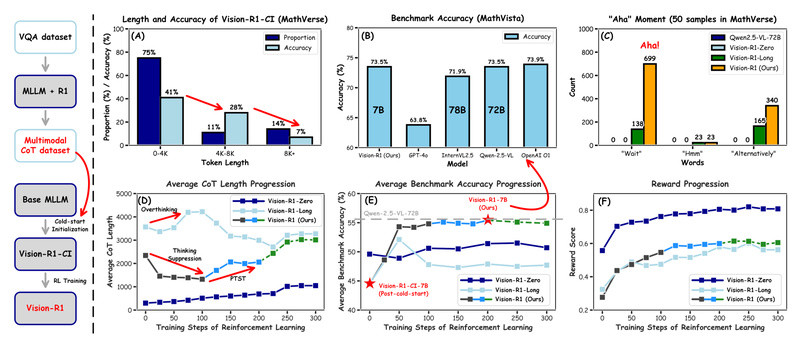

Vision-R1: Boost Multimodal Reasoning in Visual Math and Complex Problem Solving Without Human Annotations

If you’re evaluating multimodal AI systems for tasks that demand deep reasoning—such as solving visual math problems, interpreting charts, or…

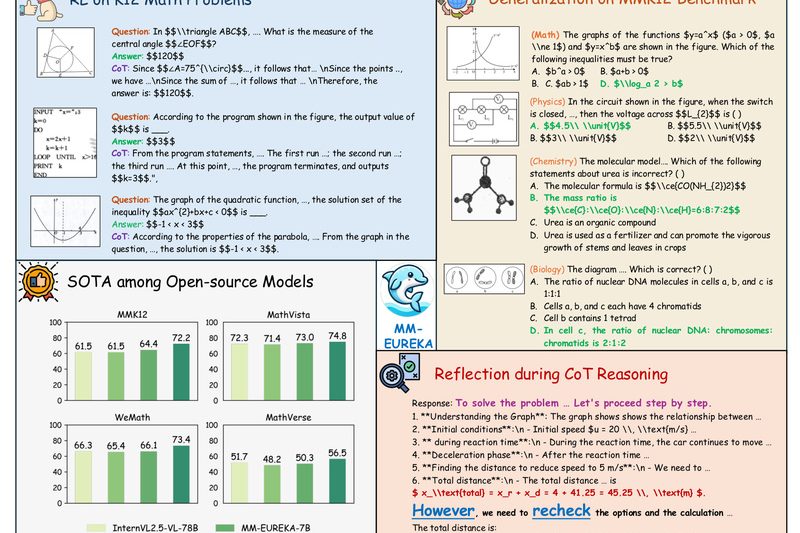

MM-Eureka: High-Accuracy Multimodal Reasoning for STEM Education and Technical QA

In the rapidly evolving field of multimodal AI, most models still struggle to combine visual understanding with precise, step-by-step logical…

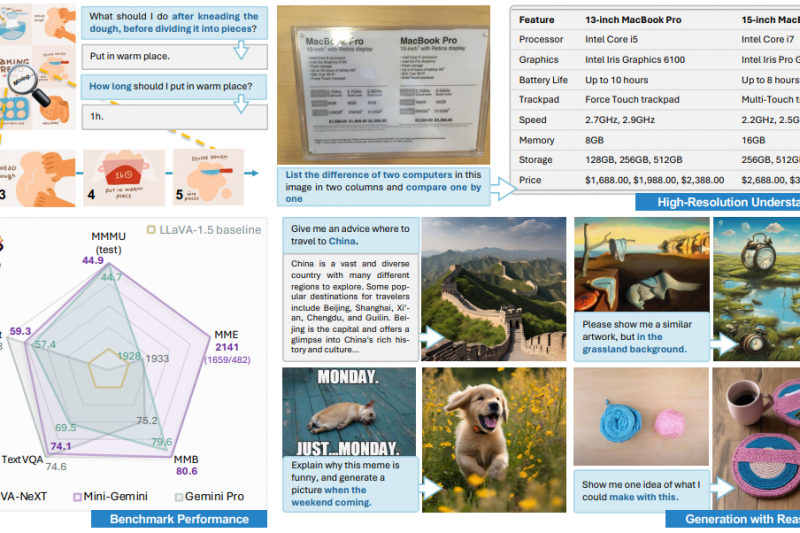

Mini-Gemini: Close the Gap with GPT-4V and Gemini Using Open, High-Performance Vision-Language Models

In today’s AI landscape, multimodal systems that understand both images and language are no longer a luxury—they’re a necessity. Yet,…

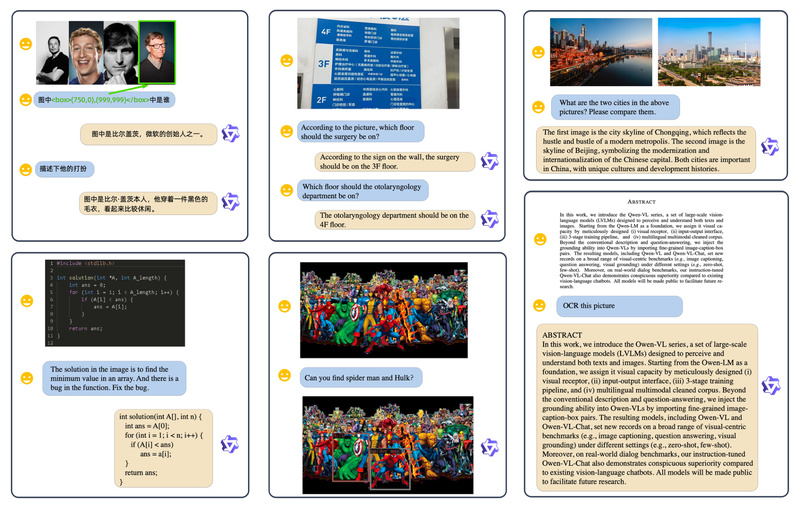

Qwen-VL: Open-Source Vision-Language AI for Text Reading, Object Grounding, and Multimodal Reasoning

In the rapidly evolving landscape of multimodal artificial intelligence, developers and technical decision-makers need models that go beyond basic image…

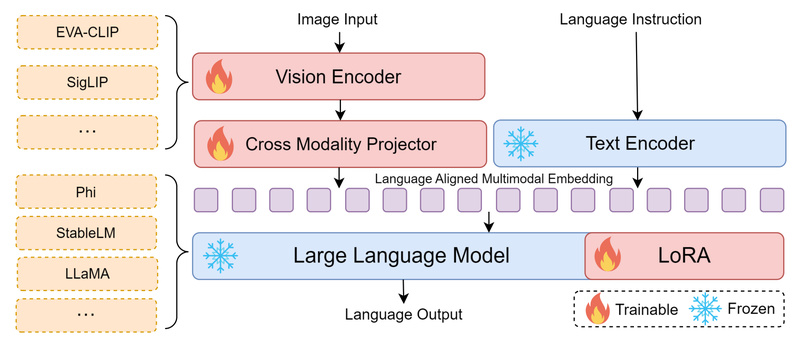

Bunny: High-Performance Multimodal AI Without the Heavy Compute Burden

Multimodal Large Language Models (MLLMs) are transforming how machines understand and reason about visual content. Yet, their adoption remains out…

RPG-DiffusionMaster: Generate Complex, Compositional Images from Text, No Retraining Needed

Text-to-image generation has made remarkable strides, yet even state-of-the-art models like DALL·E 3 or Stable Diffusion XL (SDXL) often stumble…

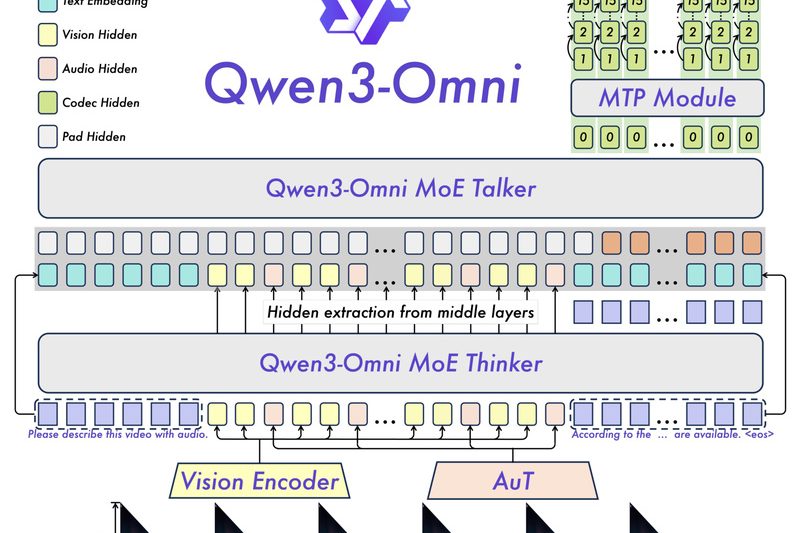

Qwen3-Omni: One Unified Model for Text, Image, Audio, and Video, Without Compromise

Imagine a single AI model that natively understands and generates responses across text, images, audio, and video—all in real time,…