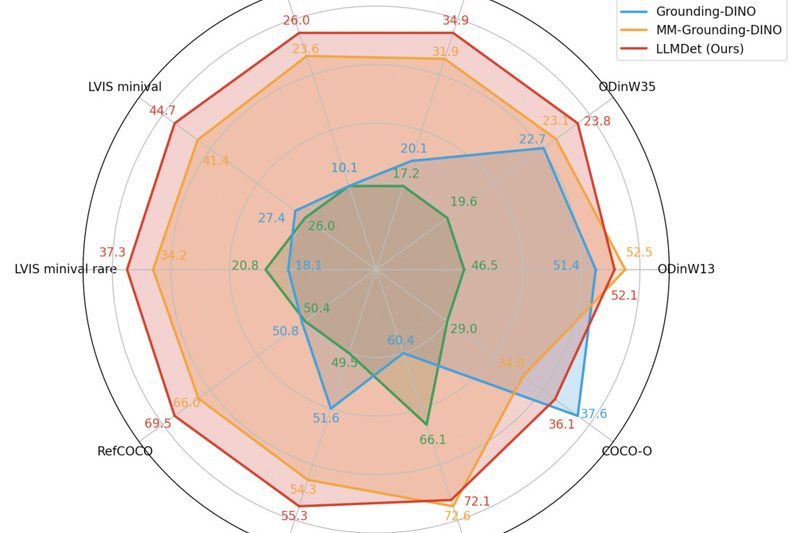

Imagine building a vision system that can detect not just pre-defined classes like “car” or “dog,” but any object described…

vision-language modeling

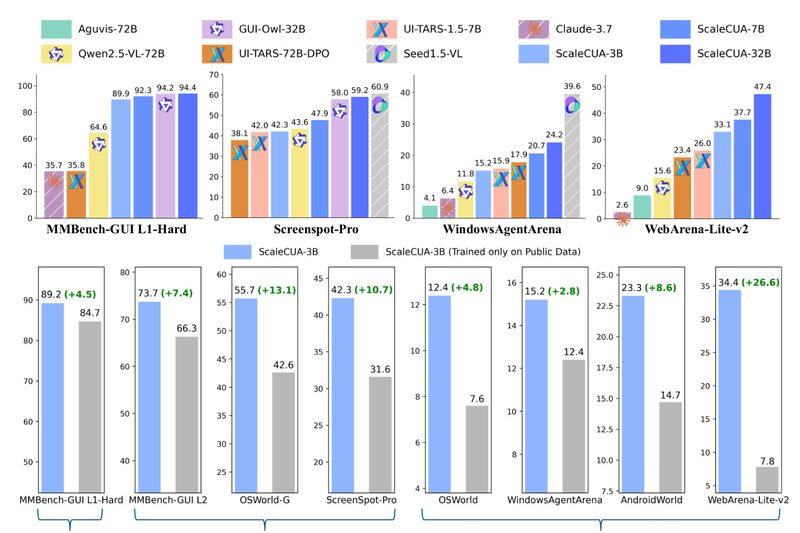

ScaleCUA: Cross-Platform GUI Automation Powered by Large-Scale Open Data

Building reliable computer use agents (CUAs)—systems that can autonomously interact with graphical user interfaces (GUIs)—has long been hindered by a…

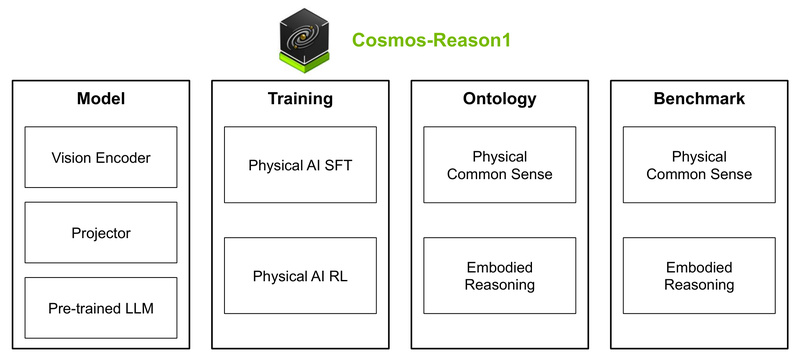

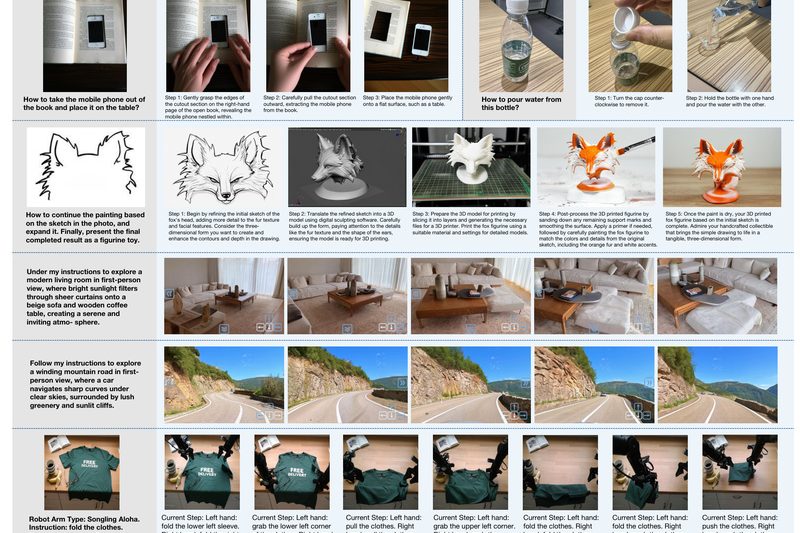

Cosmos-Reason1: Enable Physical AI Agents to Reason Like Humans Using Physics, Space, and Time

Building intelligent systems that can understand and act in the real physical world remains one of the toughest challenges in…

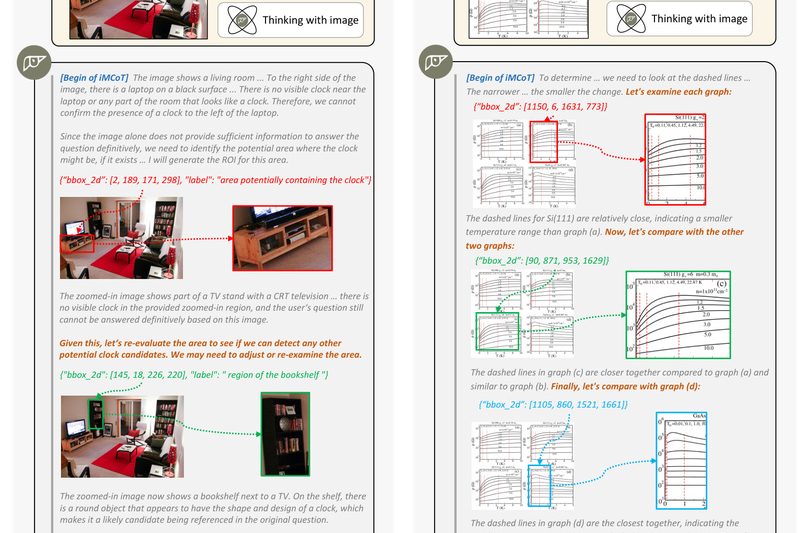

DeepEyes: Enable Vision-Language Models to “Think with Images” and Solve Complex Visual Reasoning Tasks

Most modern Vision-Language Models (VLMs) treat images as static inputs—processed once, then reasoned about using purely text-based logic. But humans…

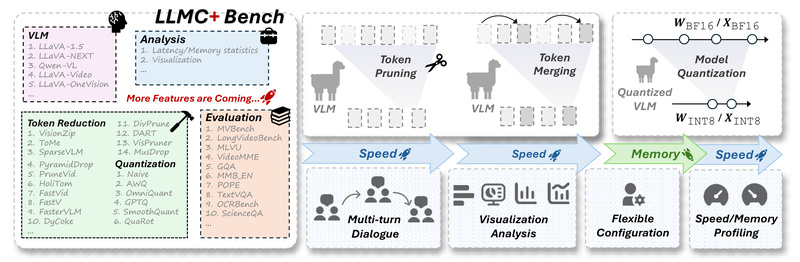

LLMC+: Plug-and-Play Compression for Vision-Language and Large Language Models Without Retraining

Deploying large vision-language models (VLMs) and large language models (LLMs) in real-world applications is often bottlenecked by their massive size,…

Emu3.5: A Native Multimodal World Model for Unified Vision-Language Generation and Reasoning

Imagine a single AI model that doesn’t just “see” or “read”—but seamlessly blends images and text in both input and…

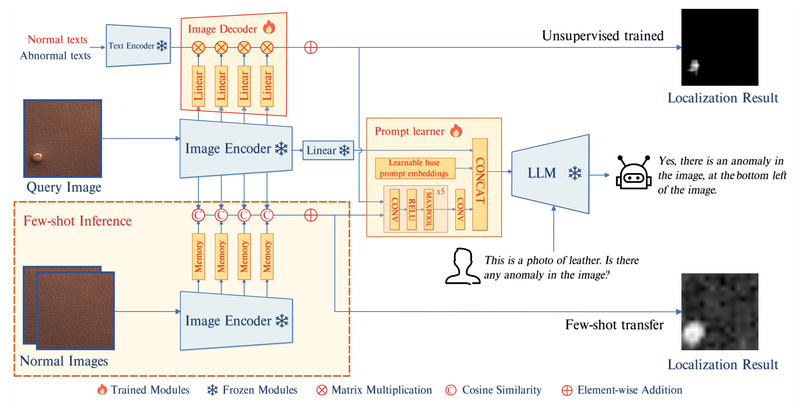

AnomalyGPT: Industrial Anomaly Detection Without Manual Thresholds or Labeled Anomalies

In industrial quality control, detecting defects—like cracks in concrete, scratches on metal, or deformities in packaged goods—is critical. Yet traditional…

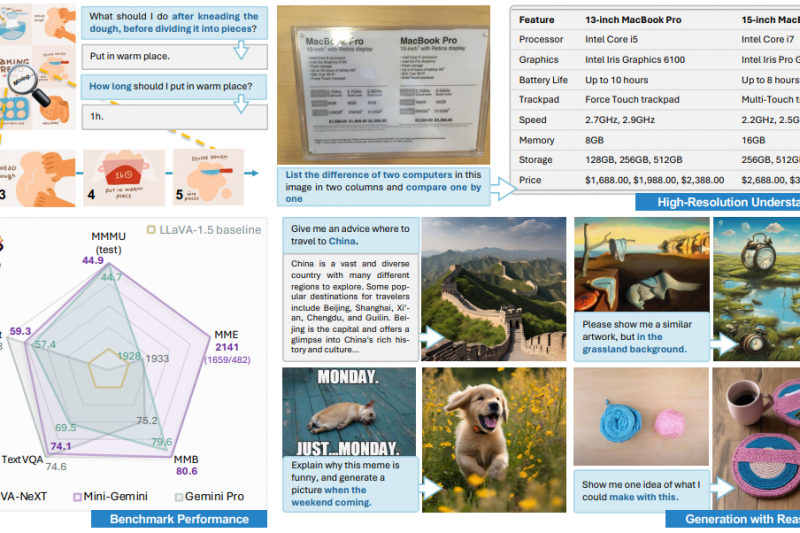

Mini-Gemini: Close the Gap with GPT-4V and Gemini Using Open, High-Performance Vision-Language Models

In today’s AI landscape, multimodal systems that understand both images and language are no longer a luxury—they’re a necessity. Yet,…

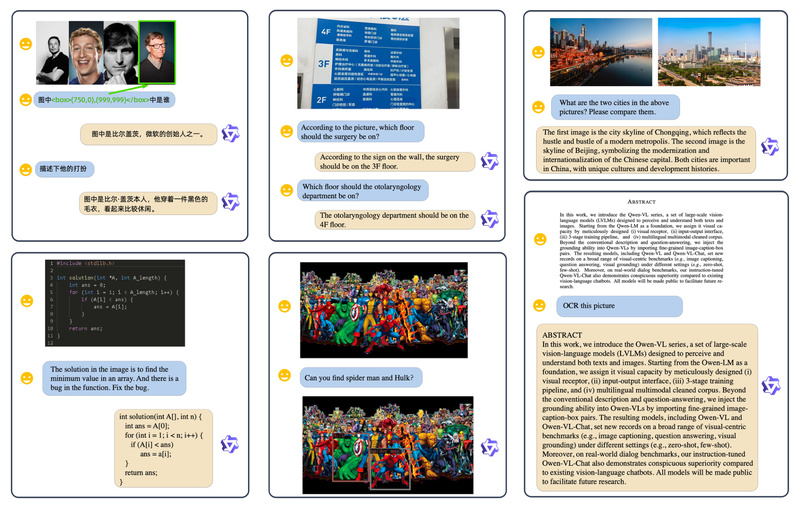

Qwen-VL: Open-Source Vision-Language AI for Text Reading, Object Grounding, and Multimodal Reasoning

In the rapidly evolving landscape of multimodal artificial intelligence, developers and technical decision-makers need models that go beyond basic image…

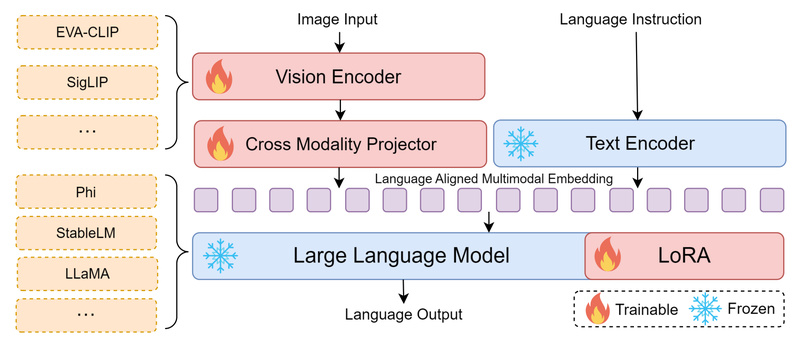

Bunny: High-Performance Multimodal AI Without the Heavy Compute Burden

Multimodal Large Language Models (MLLMs) are transforming how machines understand and reason about visual content. Yet, their adoption remains out…