Imagine you have access to a powerful pre-trained vision-language model like CLIP—capable of understanding both images and text—but you need it to recognize your specific set of classes, such as rare plant species, medical imaging categories, or custom industrial defects. The problem? You only have a handful of labeled examples per class, and retraining the entire model is too costly, time-consuming, or simply infeasible.

This is where CoOp (Context Optimization) comes in. CoOp is a lightweight, prompt-based adaptation method that enables you to customize large vision-language models to your downstream tasks using minimal labeled data—without touching the model’s frozen backbone. By learning continuous, trainable prompt vectors instead of relying on static hand-crafted prompts (e.g., “a photo of a [CLASS]”), CoOp achieves substantial performance gains in few-shot settings while keeping computation and data requirements extremely low.

Developed by Kaiyang Zhou and colleagues, CoOp bridges the gap between the generality of models like CLIP and the specificity needed for real-world applications—making advanced AI accessible even when resources are constrained.

Why CoOp Stands Out

From Static Prompts to Learnable Context

Traditional prompting with CLIP uses fixed templates like “a photo of a dog.” While intuitive, these manual prompts are suboptimal—they don’t adapt to the nuances of your data. CoOp replaces the context words (everything except the class name) with a set of learnable continuous vectors. These vectors are optimized during training using just a few labeled images, effectively “teaching” the model how to interpret your specific classes through better textual context.

Strong Performance with Minimal Data

In experiments across multiple benchmarks, CoOp consistently outperforms manual prompting by large margins—even with only 1–16 examples per class. For instance, on ImageNet, CoOp achieves significant accuracy improvements with as few as 4 shots per class, all while keeping the underlying CLIP model frozen.

Parameter-Efficient and Computationally Light

Unlike full fine-tuning, which updates millions or billions of parameters, CoOp introduces only a tiny number of trainable parameters (e.g., 16 vectors of 512 dimensions for a ViT-based CLIP). This makes it ideal for environments with limited GPU memory or when rapid iteration is needed. The backbone remains untouched, preserving the robust generalization of the original pre-trained model.

Ideal Scenarios for Using CoOp

CoOp excels in practical settings where data is scarce but model customization is essential:

- Few-shot image classification: Deploying classifiers in domains with limited labeled data, such as biodiversity monitoring or niche manufacturing QA.

- Domain-specific adaptation: Quickly adapting CLIP to specialized domains like radiology, satellite imagery, or agricultural drone footage—without collecting thousands of labeled samples.

- Rapid prototyping and evaluation: Testing new classification tasks early in a project lifecycle, when only a small validation set is available.

If your task involves a fixed set of known classes and you can provide at least a few labeled examples per class, CoOp offers a fast, low-overhead path to strong performance.

Getting Started Is Straightforward

The CoOp codebase is built on the Dassl.pytorch framework and integrates seamlessly with CLIP. Here’s how to begin:

- Install the environment: Follow the Dassl installation instructions, then install additional dependencies via

pip install -r requirements.txtin the CoOp directory. - Prepare your dataset: Organize your images into class folders and configure the dataset path as outlined in

DATASETS.md. - Run training: Use the provided scripts to train CoOp on your data. The method supports both ResNet and Vision Transformer (ViT) backbones from CLIP.

- Leverage pre-trained weights: The authors release pre-trained CoOp models on ImageNet (for RN50, RN101, ViT-B/16, and ViT-B/32), which you can use directly for evaluation or as a starting point for transfer.

The repository also includes utilities like draw_curves.py for visualizing few-shot performance—helping you assess your model’s behavior across different shot settings.

Limitations and When to Consider Alternatives

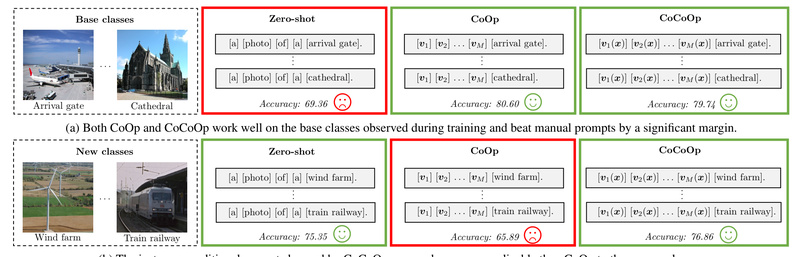

While CoOp is powerful, it has a known limitation: its learned prompts can overfit to the base classes seen during training and may not generalize well to unseen classes within the same dataset. This “class shift” sensitivity means that if your deployment scenario involves expanding to new categories not present in your training set, CoOp’s static prompts may underperform.

In such cases, consider CoCoOp (Conditional Context Optimization), the dynamic extension of CoOp introduced in the same paper. CoCoOp generates instance-specific prompts using a lightweight neural network, improving generalization across both seen and unseen classes—and even across datasets.

However, for fixed-class, few-shot tasks—where all target classes are known at training time—CoOp remains a highly effective, simple, and efficient solution.

Summary

CoOp redefines how we adapt large vision-language models: instead of expensive fine-tuning, it uses learnable prompts to align pre-trained knowledge with your specific task—using just a few labels. It’s fast, lightweight, and remarkably effective for real-world scenarios where data is limited but performance matters. If you’re working with CLIP and need to customize it quickly and cheaply, CoOp is a compelling starting point. And if your needs evolve toward broader generalization, its successor CoCoOp is just a code commit away.