In today’s AI landscape, many organizations rely on large language models (LLMs) to automate complex research tasks—such as competitive analysis, policy briefings, or scientific literature reviews. However, current approaches often hit a wall: either they depend on brittle, hand-crafted prompts that break under real-world ambiguity, or they operate in artificial, closed environments like static Retrieval-Augmented Generation (RAG) systems that can’t cope with the dynamic, noisy nature of the open web.

Enter DeepResearcher—the first end-to-end framework that trains LLM-based research agents using reinforcement learning (RL) directly in real-world web search environments. Unlike conventional methods, DeepResearcher doesn’t assume all answers live in a curated database. Instead, it teaches agents to navigate live websites, extract structured insights from unstructured pages, and develop human-like research behaviors through genuine interaction with the internet.

Why Current AI Research Agents Fall Short

Most existing AI research tools fall into two categories:

- Prompt engineering-based agents: These rely on carefully designed instructions to guide an LLM through research steps. While simple to deploy, they lack robustness—small changes in query phrasing or website structure can derail the entire process.

- RAG-based RL agents: These train within simulated or fixed corpora, assuming all necessary information is pre-indexed. This works in controlled benchmarks but fails in real-world settings where information is scattered, contradictory, or constantly updated.

Both approaches miss a critical truth: real research requires adaptability, skepticism, and the ability to pivot when initial leads dry up. DeepResearcher addresses this gap by grounding agent training in authentic web interactions—complete with broken links, inconsistent formats, and incomplete data.

How DeepResearcher Works

DeepResearcher employs a specialized multi-agent architecture where browsing agents actively explore real websites in response to open-ended research questions. The system is trained end-to-end using reinforcement learning, with rewards tied to factual accuracy, source diversity, and task completion.

Key innovations include:

- Real-world web grounding: Agents interact with live search engines (e.g., via Serper or Bing APIs) and parse actual HTML content—not pre-processed snippets.

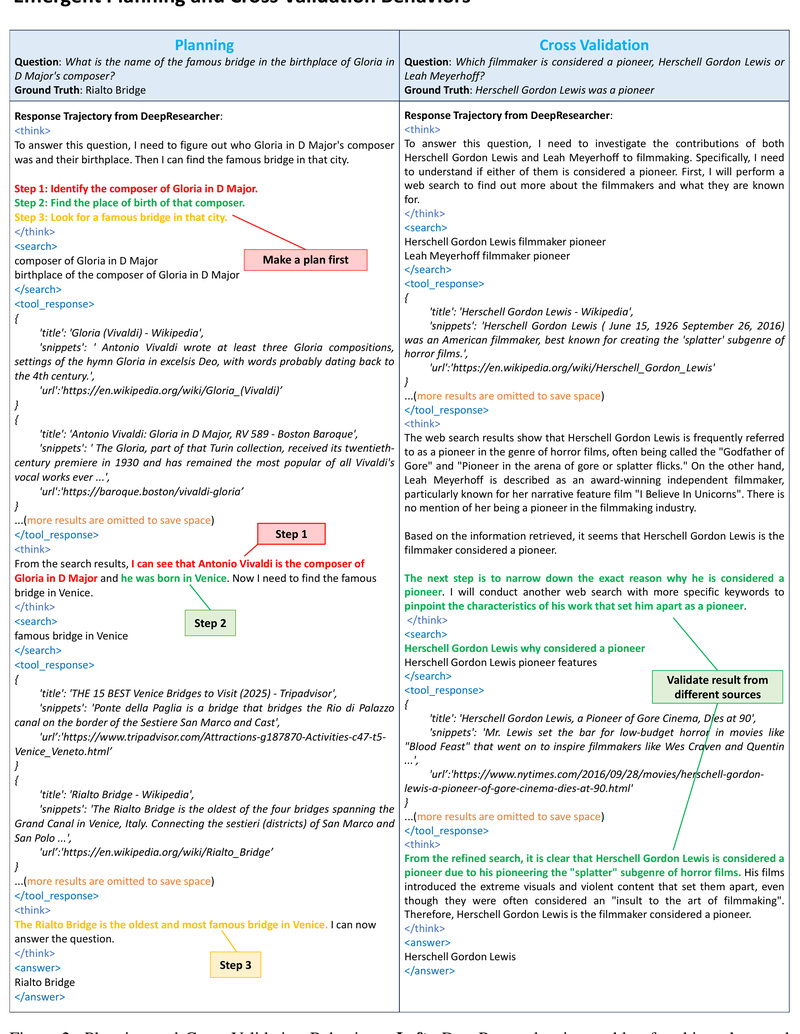

- Emergent cognitive behaviors: Through RL, agents learn to:

- Formulate step-by-step research plans

- Cross-validate claims across multiple independent sources

- Reflect on dead ends and redirect their search strategy

- Honestly admit when definitive answers cannot be found

These behaviors aren’t hard-coded—they emerge naturally from training in environments that mirror real research challenges.

Performance That Matters

On open-domain research benchmarks, DeepResearcher delivers significant gains:

- +28.9 points over prompt engineering baselines

- +7.2 points over RAG-based RL agents

More importantly, qualitative evaluations show agents producing structured, well-sourced reports that reflect genuine reasoning—not just regurgitated snippets. This makes DeepResearcher particularly valuable for high-stakes applications where accuracy and transparency are non-negotiable.

Ideal Use Cases for Technical Decision-Makers

DeepResearcher shines in scenarios requiring autonomous, trustworthy, and adaptive research in open environments. Consider adopting it if your team needs to:

- Automate competitive intelligence (e.g., tracking competitor product launches across news, blogs, and press releases)

- Conduct policy or regulatory analysis using up-to-date government publications and legal databases

- Perform scientific literature synthesis that spans preprint servers, journal sites, and conference proceedings

- Build enterprise AI assistants that answer complex internal queries by retrieving and cross-checking live external data

Unlike chatbots trained on static datasets, DeepResearcher agents actively seek, verify, and synthesize information as a human researcher would—making them far more reliable for mission-critical tasks.

Getting Started: Practical Onboarding Steps

Deploying DeepResearcher requires moderate infrastructure but follows standard MLOps practices:

- Clone the repository and set up a Conda environment with Python 3.10.

- Install dependencies, including PyTorch and FlashAttention.

- Configure API keys for a search engine (e.g., Serper or Azure Bing) and an LLM provider (e.g., Qwen).

- Launch the backend handler, which manages web interactions and LLM calls.

- Train or evaluate using provided scripts (

train_grpo.shfor training,evaluate.shfor rollouts).

The system uses Ray for distributed execution, so even single-node setups require setting PET_NODE_RANK=0 and starting a Ray head node. While not trivial, this architecture ensures scalability for enterprise-grade workloads.

Important Limitations and Considerations

DeepResearcher is powerful but not universally applicable. Keep these constraints in mind:

- API dependencies: Requires active subscriptions to search and LLM APIs (e.g., Serper, Bing, Qwen).

- Infrastructure needs: Multi-component setup involving Ray, web handlers, and model servers.

- Task specificity: Optimized for deep research tasks—not casual Q&A, creative writing, or conversational chat.

- Web variability: Performance may fluctuate with internet reliability, site structure changes, or rate limits.

Teams should assess whether their use case justifies the setup complexity and ongoing API costs. For organizations already investing in autonomous research automation, however, DeepResearcher offers a uniquely robust foundation.

Summary

DeepResearcher redefines what’s possible for AI-powered research by moving training out of artificial sandboxes and into the real web. Its end-to-end RL approach fosters genuine research behaviors—planning, verification, reflection, and intellectual honesty—that static or prompt-driven systems simply can’t replicate. For technical leaders building next-generation research agents, DeepResearcher provides both the framework and the proof that real-world grounding isn’t optional—it’s essential.