Blind image restoration—recovering high-quality images from degraded inputs without knowing the exact type or severity of degradation—is a longstanding challenge in computer vision. Most existing solutions are task-specific: one model for super-resolution, another for denoising, and yet another for face restoration. This fragmentation creates complexity, limits flexibility, and often fails on real-world images with mixed or unknown degradations.

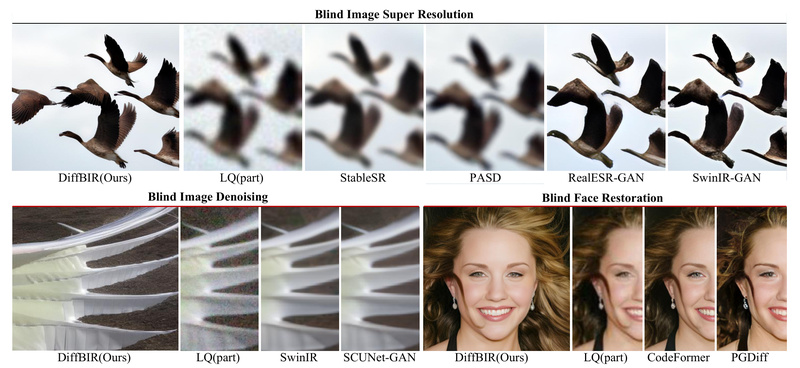

Enter DiffBIR (Diffusion-based Blind Image Restoration), a unified framework that tackles multiple blind restoration tasks—super-resolution, face enhancement, and denoising—within a single, coherent pipeline. Developed by researchers from the Shanghai AI Laboratory and Shenzhen Institute of Advanced Technology, DiffBIR decouples restoration into two intuitive stages: degradation removal and information regeneration. This design not only simplifies deployment but also delivers state-of-the-art visual quality on both synthetic benchmarks and real-world photographs.

What truly sets DiffBIR apart is its ability to generate realistic, plausible details where information was genuinely lost—without hallucinating arbitrarily—by leveraging a generative diffusion prior guided by clean intermediate representations. For engineers, product teams, and researchers seeking a robust, general-purpose restoration tool, DiffBIR offers a compelling balance of quality, versatility, and usability.

Solving Real-World Image Degradation Without Knowing the Degradation

In practice, image degradation rarely follows textbook patterns. A smartphone photo might be both low-resolution and noisy; a vintage portrait could suffer from blur, compression artifacts, and misalignment. Traditional methods often assume a known degradation model (e.g., “blur + bicubic downscaling”), making them brittle in real scenarios.

DiffBIR operates blindly: it doesn’t require you to specify whether the input is blurry, noisy, or low-res—or by how much. Instead, it first uses a pre-trained “cleaner” module (like SwinIR or BSRNet) to strip away degradation and produce a high-fidelity but often over-smoothed base image. This output becomes the foundation for the second stage: IRControlNet, a custom ControlNet that injects realistic textures and fine details using a latent diffusion model (Stable Diffusion v2.1).

Because the generative stage is conditioned on this cleaned image—and optionally, semantic captions from LLaVA—it avoids producing artifacts while recovering plausible structures. This two-stage approach ensures fidelity (faithfulness to the underlying content) and realism (natural-looking textures), two goals that often conflict in image restoration.

Developer-Friendly Design for Practical Deployment

DiffBIR is built with real-world constraints in mind:

- Hardware flexibility: Runs on CUDA, CPU, and Apple Silicon (MPS) with minimal setup changes.

- Low-VRAM support: Tiled sampling enables processing of high-resolution images (e.g., 2396×1596) even on GPUs with limited memory.

- Pre-trained models: Multiple ready-to-use checkpoints (v1, v2, v2.1) trained on diverse datasets (ImageNet, FFHQ, LAION2B, Unsplash) cover general and face-specific restoration.

- Tunable realism vs. fidelity: A region-adaptive guidance scale lets you adjust how strongly the output adheres to the input structure versus how much generative detail is added—no retraining needed.

- Easy experimentation: Launch a local Gradio demo in seconds, or run inference via simple command-line scripts for batch processing.

The codebase is modular, well-documented, and integrates standard components like ControlNet and BasicSR, making it easy to audit, extend, or adapt.

Getting Started in Minutes

You don’t need to train anything to use DiffBIR. Here’s how to restore a blurry image at 4× super-resolution using the latest v2.1 model:

git clone https://github.com/XPixelGroup/DiffBIR.git cd DiffBIR conda create -n diffbir python=3.10 && conda activate diffbir pip install -r requirements.txt python -u inference.py --task sr --upscale 4 --version v2.1 --captioner llava --cfg_scale 8 --input inputs/demo/bsr --output results/restored_sr

Switching to face restoration or denoising requires only changing the --task flag (face, face_background, or denoise). The same IRControlNet model works across all tasks—thanks to the decoupled design—eliminating the need for task-specific models.

For low-memory environments, enable tiled sampling with flags like --cldm_tiled --cldm_tile_size 512. And if you’re restoring unaligned faces in full images, use --task face_background to enhance both face and background simultaneously.

Limitations and Strategic Considerations

While powerful, DiffBIR isn’t a silver bullet:

- No official WebUI yet: Though a Gradio interface exists, a polished web application is still on the roadmap.

- LLaVA dependency for v2.1: The newest model uses LLaVA-generated captions to improve semantic consistency, which adds an extra installation step (though you can fall back to

--captioner nonewith reduced performance). - Speed: DiffBIR prioritizes quality over speed. If latency is critical, consider the team’s newer project HYPIR, which reportedly offers 10× faster inference and even better results.

That said, DiffBIR remains an excellent choice when visual quality, generalization across degradation types, and robustness on real-world data are top priorities.

Summary

DiffBIR redefines blind image restoration by unifying multiple tasks under a two-stage architecture that separates degradation removal from detail regeneration. Its ability to deliver high-fidelity, realistic results—without requiring knowledge of the input degradation—makes it uniquely suited for applications ranging from social media photo enhancement and archival digitization to forensic analysis and creative media workflows. With strong hardware support, pre-trained models, and simple inference scripts, DiffBIR lowers the barrier to deploying high-quality restoration in real projects today.