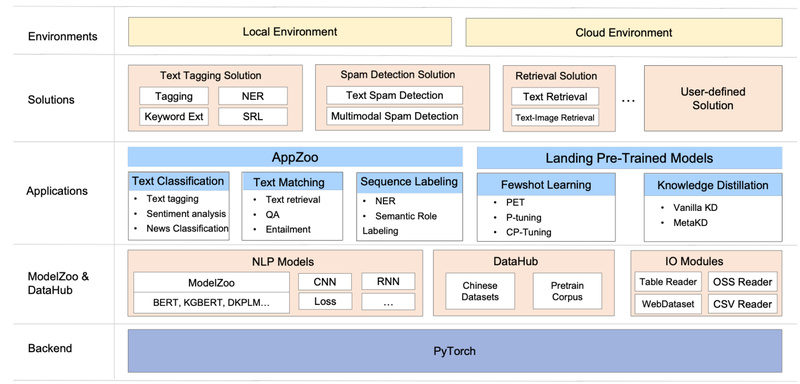

Natural Language Processing (NLP) has been revolutionized by pre-trained language models (PLMs), but turning these powerful models into real-world applications remains a significant engineering challenge—especially for teams without deep research expertise or abundant labeled data. Enter EasyNLP, an open-source, PyTorch-based toolkit developed by Alibaba’s PAI (Platform of Artificial Intelligence) team. Designed specifically for engineers, product teams, and technical decision-makers, EasyNLP bridges the gap between cutting-edge NLP research and industrial deployment by offering a unified, easy-to-use framework for training, evaluating, and serving NLP models at scale.

Since its internal release in 2021, EasyNLP has powered more than 10 business units across Alibaba Group and is natively integrated into Alibaba Cloud’s PAI suite: PAI-DSW for interactive development, PAI-DLC for distributed cloud training, PAI-EAS for model serving, and PAI-Designer for zero-code workflows. Whether you’re building a Chinese text classifier, fine-tuning a vision-language model, or compressing a large BERT model into a lean, servable version, EasyNLP provides the tools to do it quickly and reliably.

Why EasyNLP Solves Real Engineering Problems

One-Command NLP Applications with AppZoo

One of EasyNLP’s standout features is AppZoo, a modular library that abstracts complex NLP pipelines into simple, reusable components. A single command-line (CLI) invocation is enough to train a text classification model on the SST-2 dataset:

easynlp --mode=train --worker_gpu=1 --tables=train.tsv,dev.tsv --input_schema=label:str:1,sent1:str:1 --first_sequence=sent1 --label_name=label --label_enumerate_values=0,1 --checkpoint_dir=./classification_model --epoch_num=1 --sequence_length=128 --app_name=text_classify --user_defined_parameters='pretrain_model_name_or_path=bert-small-uncased'

This eliminates boilerplate code and standardizes workflows across tasks like classification, sequence labeling, text matching, and generation.
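When the same command has to be launched from a script or a scheduler, it can help to assemble it from a dictionary rather than a long string. The following is a minimal sketch under that assumption: `build_easynlp_command` is a hypothetical helper, not part of EasyNLP, and the flag names and values are copied unchanged from the command above.

```python
import shlex

# Flag names and values are copied from the CLI example in the article.
# build_easynlp_command is a hypothetical helper, not an EasyNLP API.
TRAIN_PARAMS = {
    "mode": "train",
    "worker_gpu": 1,
    "tables": "train.tsv,dev.tsv",
    "input_schema": "label:str:1,sent1:str:1",
    "first_sequence": "sent1",
    "label_name": "label",
    "label_enumerate_values": "0,1",
    "checkpoint_dir": "./classification_model",
    "epoch_num": 1,
    "sequence_length": 128,
    "app_name": "text_classify",
    "user_defined_parameters": "pretrain_model_name_or_path=bert-small-uncased",
}


def build_easynlp_command(params):
    """Turn a {flag: value} mapping into an argv list for the `easynlp` CLI."""
    return ["easynlp"] + [f"--{name}={value}" for name, value in params.items()]


if __name__ == "__main__":
    argv = build_easynlp_command(TRAIN_PARAMS)
    # shlex.join quotes an argument only where the shell would need it.
    print(shlex.join(argv))
    # To actually launch training (requires EasyNLP to be installed):
    # import subprocess; subprocess.run(argv, check=True)
```

Keeping the parameters in a mapping makes it easy to change one value, such as `epoch_num`, per experiment while the rest of the command stays fixed.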

Seamless Integration with Hugging Face and Distributed Training

EasyNLP doesn’t reinvent the wheel. It fully supports models from Hugging Face Transformers, allowing you to plug in any compatible checkpoint while benefiting from Alibaba’s TorchAccelerator—a distributed training framework that speeds up large-scale training on multi-GPU or cloud clusters. This means you get the flexibility of open-source models with enterprise-grade scalability.

Few-Shot Learning for Low-Data Scenarios

In real-world settings, labeled data is often scarce. EasyNLP integrates state-of-the-art few-shot learning techniques, including:

- PET (Pattern-Exploiting Training)

- P-Tuning

- CP-Tuning (Contrastive Prompt Tuning)

These methods enable effective fine-tuning of large PLMs with only a handful of examples—critical for niche domains or rapid prototyping.
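To make the prompt-based idea concrete, here is a rough sketch of the mechanism behind PET: a pattern rewrites the input as a cloze question, and a verbalizer maps each label to a word whose masked-LM score stands in for the label score. The pattern, the verbalizer words, and the scores below are illustrative assumptions; this is not EasyNLP's API, and a real setup would obtain the scores from a masked language model.

```python
import math

MASK = "[MASK]"

# Verbalizer: one word per label (an illustrative choice, not from EasyNLP).
VERBALIZER = {"0": "terrible", "1": "great"}


def apply_pattern(text):
    """Rewrite a sentence as a cloze question (an illustrative pattern)."""
    return f"{text} It was {MASK}."


def label_probabilities(mask_token_scores):
    """Softmax over the masked-LM scores of the verbalizer words only."""
    scores = {label: mask_token_scores[word] for label, word in VERBALIZER.items()}
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(score - peak) for label, score in scores.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


if __name__ == "__main__":
    print(apply_pattern("A charming and often affecting journey."))
    # Stand-in scores; a masked language model would produce these
    # for the [MASK] position in practice.
    fake_scores = {"terrible": -1.2, "great": 2.3, "okay": 0.4}
    probs = label_probabilities(fake_scores)
    print(max(probs, key=probs.get))
```

Because the task is rephrased in the form the model saw during pre-training, a handful of labeled examples can be enough to adapt it.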

Knowledge-Enhanced Models for Higher Accuracy

Beyond standard BERT or RoBERTa, EasyNLP includes knowledge-injected pre-trained models developed by Alibaba’s research teams:

- DKPLM: Decomposable Knowledge-enhanced PLM, winner of the CCF Knowledge Pre-training Competition

- KGBERT: BERT infused with knowledge graph embeddings

These models are designed to outperform their vanilla counterparts on tasks requiring factual understanding, such as relation extraction or domain-specific classification.

Model Compression via Knowledge Distillation

Deploying billion-parameter models in production is often impractical. EasyNLP offers built-in knowledge distillation capabilities, including Vanilla KD and Meta-KD, to compress large teacher models into smaller, faster student models with minimal accuracy loss. This makes it feasible to serve high-performance NLP models even in resource-constrained environments.
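As a point of reference, the vanilla distillation objective is commonly written as a weighted sum of a soft-target term (KL divergence between temperature-softened teacher and student distributions) and the usual cross-entropy on the true label. The sketch below follows that common formulation in plain Python so it runs without dependencies. The `temperature` and `alpha` values are illustrative assumptions, and EasyNLP's own implementation may differ; real training code would operate on PyTorch tensors.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over a list of logits, softened by the given temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target KL term and a hard-label cross-entropy."""
    # Soft-target term: KL(teacher || student) on softened distributions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Hard-label term: cross-entropy of the student at temperature 1.
    ce = -math.log(softmax(student_logits)[label])
    # temperature**2 keeps the soft-term gradients on a comparable scale.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


if __name__ == "__main__":
    teacher = [3.0, -1.0]
    student = [1.0, 0.5]
    print(distillation_loss(student, teacher, label=0))
```

When the student already matches the teacher, the KL term vanishes and only the hard-label term remains.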

Practical Use Cases Where EasyNLP Excels

Rapid Chinese NLP Deployment

EasyNLP shines in Chinese-language applications, offering optimized models like:

- PAI-BERT-zh (trained on massive Chinese corpora)

- MacBERT, Chinese RoBERTa, WOBERT, and Mengzi

These are readily available in the ModelZoo and can be fine-tuned or deployed with minimal configuration.

Multimodal Applications Out of the Box

Though rooted in NLP, EasyNLP extends into vision-language tasks:

- Text-image matching (e.g., CLIP-style models for e-commerce search)

- Text-to-image generation (DALL·E-style architectures, marked “in progress”)

- Image captioning

This makes it valuable for teams working on cross-modal products like visual search or AI-generated content.
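CLIP-style text-image matching generally works by embedding the query text and each image into a shared vector space and ranking images by cosine similarity. The following sketch shows only that ranking step. The embeddings are made-up stand-ins and `rank_images` is a hypothetical helper; in a real system the vectors would come from the text and image encoders of a trained model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_images(text_embedding, image_embeddings):
    """Return image ids sorted from best to worst match for the text."""
    scores = {image_id: cosine_similarity(text_embedding, embedding)
              for image_id, embedding in image_embeddings.items()}
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Stand-in embeddings; real ones would come from trained encoders.
    query = [0.9, 0.1, 0.0]
    catalog = {
        "sneaker.jpg": [0.8, 0.2, 0.1],
        "teapot.jpg": [0.0, 0.3, 0.9],
    }
    print(rank_images(query, catalog))
```

For an e-commerce search use case, the image embeddings can be computed once offline so that only the query text needs encoding at request time.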

Cloud-Native Workflows on Alibaba Cloud PAI

When used with Alibaba Cloud’s PAI platform, EasyNLP enables end-to-end workflows—from notebook experimentation (PAI-DSW) to scalable training (PAI-DLC) and low-latency serving (PAI-EAS)—all without leaving the ecosystem. Even in open-source form, EasyNLP’s modular design supports integration into custom MLOps pipelines.

Getting Started in Minutes

You don’t need a PhD to use EasyNLP. Installation is straightforward:

git clone https://github.com/alibaba/EasyNLP.git && cd EasyNLP && python setup.py install

From there, you can either:

- Use the CLI (as shown above) for quick experiments

- Leverage the Python API for custom logic (with Trainer, AppZoo, and ModelZoo abstractions)

Pre-trained models span English and Chinese, including BERT, RoBERTa, MacBERT, and Alibaba’s proprietary variants. The toolkit also supports the CLUE benchmark, making it easy to evaluate model performance on standard Chinese NLP tasks.

Limitations and Considerations

While powerful, EasyNLP has boundaries worth noting:

- Framework dependency: Built exclusively on PyTorch—no TensorFlow support.

- Language focus: Best support for English and Chinese; other languages are less tested.

- Cloud integration: Full end-to-end benefits (e.g., one-click deployment) are optimized for Alibaba Cloud PAI, though the open-source version functions independently.

- Multimodal models: Some advanced features (e.g., DALL·E-style generation) are labeled “in progress” and may not be production-ready.

Summary

EasyNLP is not just another NLP library—it’s a production-first toolkit that democratizes access to advanced NLP techniques for engineering teams. By combining one-command training, few-shot learning, knowledge-enhanced modeling, and model compression into a single, cohesive framework, it dramatically reduces the time and expertise required to move from prototype to production. For technical decision-makers evaluating NLP solutions, EasyNLP offers a rare blend of research-grade innovation and industrial robustness—making it a strategic choice for any team aiming to deploy scalable, maintainable, and efficient NLP systems.