Imagine a single AI model that doesn’t just “see” or “read”—but seamlessly blends images and text in both input and output, predicting the next state of a dynamic world like a human might. That’s the promise of Emu3.5, a native multimodal world model introduced by the BAAI Emu team. Unlike traditional pipelines that stitch together separate vision and language modules, Emu3.5 treats vision and language as a unified sequence from the ground up. Trained on over 10 trillion interleaved vision-language tokens—mostly from internet video frames and transcripts—it natively understands and generates alternating sequences of images and text without modality-specific adapters or task-specific heads.

This end-to-end design isn’t just elegant—it’s practical. For technical decision-makers building next-generation AI applications, Emu3.5 eliminates the complexity of multimodal orchestration while unlocking capabilities that were previously fragmented across multiple models. Whether you’re developing creative tools, interactive agents, or simulation environments, Emu3.5 offers a coherent, scalable foundation rooted in world modeling rather than task-specific tricks.

Core Capabilities That Solve Real Engineering Challenges

Native Interleaved Multimodal I/O

One of the biggest pain points in multimodal AI is integration. Most systems require separate encoders, decoders, alignment layers, or fusion mechanisms to handle vision and language. Emu3.5 sidesteps this entirely: it accepts inputs like “Describe this scene → <|IMAGE|> → Then imagine what happens next →” and responds with “The robot turns left → <|IMAGE|> → and picks up the red block.”

This native support means no adapter layers, no cross-attention hacks, and no brittle interface glue. For engineering teams, this translates to simpler architecture, fewer failure points, and easier maintenance.

High-Quality, Flexible Image Generation

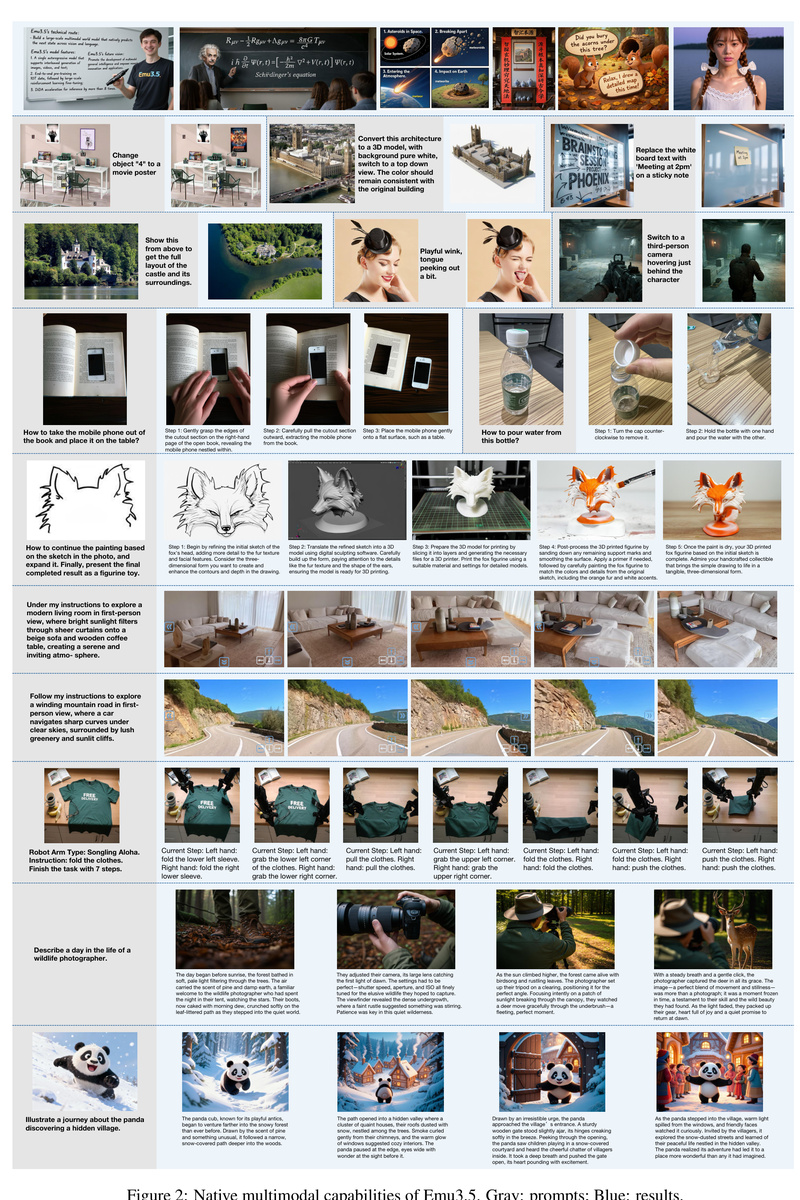

Emu3.5 excels in multiple image synthesis modes:

- Text-to-Image (T2I): Generate images from natural language prompts.

- Any-to-Image (X2I): Transform from other modalities—sketches, partial images, or multimodal contexts—into photorealistic or stylized outputs.

- Text-rich image generation: Produce images with coherent, legible embedded text—historically a weak spot for diffusion models.

Benchmark results show Emu3.5 matches Gemini 2.5 Flash Image (Nano Banana) on standard image generation and editing tasks, while outperforming it on interleaved generation where vision and language alternate over long sequences.

Long-Horizon Multimodal Reasoning

Beyond static generation, Emu3.5 supports long-horizon storytelling and reasoning. It can maintain narrative consistency across dozens of image-text turns—ideal for dynamic content like illustrated children’s books, interactive tutorials, or procedural documentation. This is made possible by its training on sequential video-transcript pairs, which embed spatiotemporal logic directly into the model’s predictions.

Generalizable World Modeling

Perhaps most uniquely, Emu3.5 functions as a world learner. It doesn’t just generate isolated outputs—it models how states evolve over time and space. This enables spatiotemporally consistent world exploration, such as simulating how a room changes as objects are moved, or how a character’s expression shifts during dialogue. For robotics, gaming, or virtual agents, this capability means the model can “imagine” plausible future states, supporting open-world embodied manipulation without explicit environment simulators.

Practical Use Cases for Technical Teams

Emu3.5 isn’t just a research prototype—it’s engineered for real-world deployment:

- AI-Powered Creative Suites: Build tools that let users draft stories with embedded visuals, where each paragraph automatically generates a corresponding illustration, or edit images via natural language instructions in context.

- Embodied AI Agents: Train or simulate agents that perceive environments and plan actions by “imagining” visual outcomes of verbal commands—e.g., “Place the cup on the table” → model generates expected visual state.

- Multimodal Assistants: Create assistants that don’t just answer questions with text, but show answers with generated images, diagrams, or annotated screenshots based on complex, multi-turn prompts.

Because Emu3.5 handles both input and output in interleaved form, these applications avoid the latency and inconsistency of chaining separate T2I and LLM systems.

Getting Started: Accessible for Practitioners

Adopting Emu3.5 doesn’t require a research lab. The team provides clear entry points:

Pre-trained Models for Different Needs

- Use Emu3.5 for interleaved generation (e.g., visual narratives).

- Use Emu3.5-Image for single-image tasks (T2I/X2I) when maximum quality is needed.

Both are available on Hugging Face and open-sourced on GitHub.

Flexible Inference Options

- Quick local testing: Run

inference.pywith a config file—supports T2I, X2I, visual guidance, and storytelling. - High-throughput production: Use the vLLM backend (

inference_vllm.py) for 4–5x faster end-to-end generation, leveraging a custom batch scheduler. - Interactive prototyping: Launch Gradio demos for image generation (

gradio_demo_image.py) or interleaved tasks (gradio_demo_interleave.py) with streaming output.

Ready-to-Use Apps

For non-developers or rapid validation, official web and mobile apps are live at emu.world (global) and zh.emu.world (Mainland China), offering full creation workflows, history, and inspiration feeds.

Note: Outputs are saved in protobuf format (

.pb), which can be visualized into images, text segments, and videos using the providedvis_proto.pyutility.

Current Limitations and Practical Considerations

While powerful, Emu3.5 has constraints that technical teams should factor into planning:

- Inference Speed: The current open weights use token-by-token decoding, which can take several minutes per image on standard hardware.

- DiDA Not Yet Public: The paper introduces Discrete Diffusion Adaptation (DiDA)—a technique that enables 20x faster inference via parallel prediction—but accelerated weights are not yet released.

- Hardware Requirements: For reasonable throughput, ≥2 GPUs are recommended, especially for interleaved or batched tasks.

- Post-Processing Needed: Generated results require a visualization step to convert protobuf files into human-readable images and text sequences.

These are temporary trade-offs for early adopters; the roadmap includes DiDA weights and an advanced image decoder.

Summary

Emu3.5 redefines what’s possible in multimodal AI by unifying vision and language in a single, end-to-end world model. It solves real engineering pain points—eliminating adapter complexity, enabling long-horizon coherence, and supporting diverse generation modes from text, images, or mixed inputs. While current inference speed and hardware demands require planning, the open-source release, vLLM integration, and official apps make it accessible for technical teams ready to build next-generation multimodal applications. For those seeking a native, scalable foundation for vision-language reasoning and generation, Emu3.5 is a compelling choice.