In an increasingly multilingual and interconnected world, spoken language translation (SLT) has moved beyond academic curiosity to become a critical capability for real-world applications—from live interpretation in international meetings to accessible media and intelligent voice assistants. Yet building robust, production-grade SLT systems remains a complex task, often requiring deep expertise in speech processing, machine translation, and model deployment.

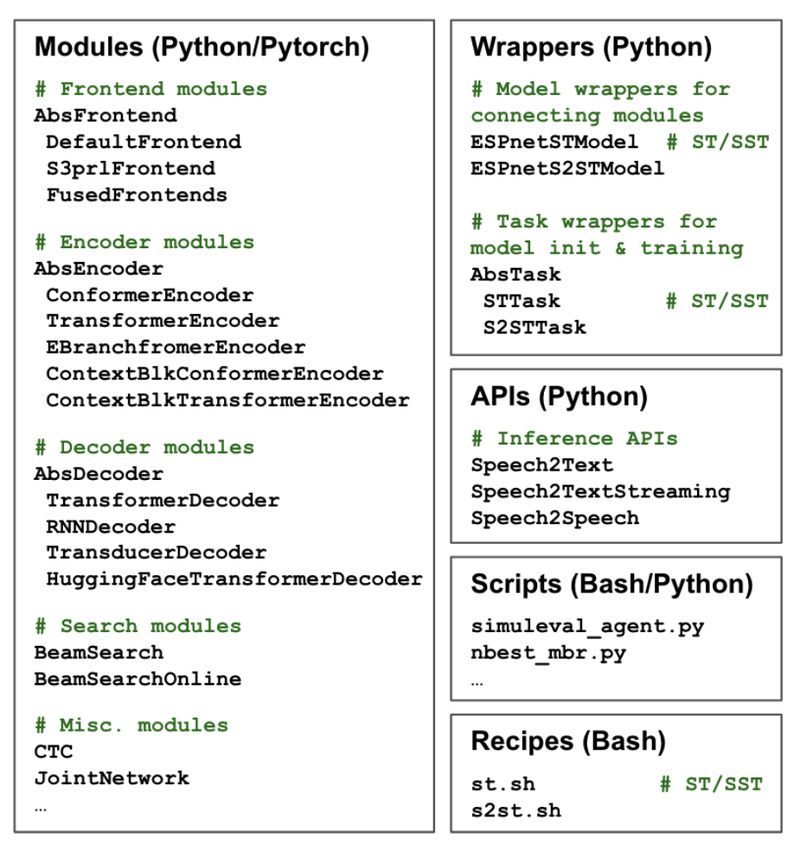

That’s where ESPnet-ST shines. As a core component of the broader ESPnet ecosystem, ESPnet-ST is a fully open-source, multipurpose toolkit specifically engineered for spoken language translation. Its latest iteration—ESPnet-ST-v2—is a major leap forward, unifying support for three distinct but complementary translation paradigms:

- Offline speech-to-text translation (ST)

- Simultaneous speech-to-text translation (SST)

- Offline speech-to-speech translation (S2ST)

Unlike most open-source alternatives that focus on a single mode, ESPnet-ST-v2 provides a comprehensive, modular framework that empowers researchers and engineers to experiment with, benchmark, and deploy state-of-the-art SLT systems—without starting from scratch.

A Unified Toolkit for Diverse Translation Needs

ESPnet-ST-v2 isn’t just another research prototype. It’s designed to address real-world constraints and use cases:

- Accessibility: Automatically translate spoken content for hearing-impaired users or non-native speakers.

- Global collaboration: Enable real-time cross-language communication in business or education.

- Content localization: Generate dubbed audio or transcribed subtitles from source-language videos.

By integrating ST, SST, and S2ST under one roof, ESPnet-ST eliminates the need to stitch together disparate tools or reinvent architectures for each task—saving time, reducing technical debt, and ensuring reproducibility.

Key Technical Capabilities

ESPnet-ST-v2 stands out not just for its breadth of supported tasks, but for the depth and flexibility of its underlying architectures. It offers a rich suite of modern, high-performance models that cater to different trade-offs between latency, quality, and computational efficiency.

State-of-the-Art Model Architectures

The toolkit implements and benchmarks several cutting-edge approaches:

- Hybrid CTC/Attention models: Combine the alignment robustness of CTC with the contextual power of attention mechanisms—ideal for high-accuracy offline ST.

- Transducer-based systems: Enable seamless integration of streaming and offline decoding, particularly useful for SST scenarios.

- Translatotron and direct S2ST models: Bypass intermediate text representations to translate speech directly into speech, preserving prosody and reducing pipeline errors.

- Multi-decoder frameworks with searchable intermediates: Allow flexible decoding strategies, including early exiting for low-latency applications.

- Time-synchronous blockwise CTC/attention: A practical compromise for simultaneous translation, processing speech in blocks while maintaining reasonable latency.

- Discrete unit-based S2ST: Leverage self-supervised speech representations (like HuBERT) to generate fluent target-language speech without text.

These aren’t theoretical constructs—they’re fully implemented, trainable, and benchmarked on standard datasets, with pre-trained models and training recipes readily available.

Built on ESPnet2: Scalable, Reproducible, and Developer-Friendly

ESPnet-ST-v2 leverages the modern ESPnet2 infrastructure, which brings significant engineering advantages:

- Kaldi-style recipe system: Proven, standardized workflows for data preparation, training, and evaluation across dozens of datasets (e.g., Fisher-CallHome Spanish, Must-C, Libri-trans, How2).

- On-the-fly feature extraction: Reduces disk I/O and enables training on massive corpora without memory bottlenecks.

- Multi-GPU and multi-node support: Scale training seamlessly across clusters using PyTorch’s DistributedDataParallel or DeepSpeed.

- Docker and Conda compatibility: Simplifies environment setup across Linux, macOS, and Windows.

- ESPnet Model Zoo integration: Instant access to pre-trained models for inference or transfer learning.

This mature ecosystem means teams can go from “Hello World” to a production-ready ST model faster than with most alternatives.

Practical Use Cases for Teams and Researchers

ESPnet-ST-v2 delivers tangible value across both research and industrial settings:

Real-Time Interpretation with Simultaneous ST (SST)

For developers building live interpreter apps or meeting transcription services, ESPnet-ST’s SST support enables low-latency translation that balances delay and accuracy. The blockwise CTC/attention and transducer decoders allow systems to begin outputting translations before the speaker finishes—critical for user experience.

End-to-End Dubbing with Speech-to-Speech Translation (S2ST)

Media companies or content creators can use ESPnet-ST’s S2ST pipelines (e.g., Translatotron variants) to generate natural-sounding dubbed audio directly from source speech. This avoids error propagation from cascaded ASR + MT + TTS pipelines and better preserves speaker characteristics.

Multilingual Voice Assistants and Accessibility Tools

Offline ST models trained on benchmarks like Must-C deliver high BLEU scores (e.g., 22.91 on En→De) and can be fine-tuned for domain-specific commands or customer support dialogues. Combined with ESPnet’s ASR and TTS capabilities, this enables fully multilingual conversational agents.

Reproducible Research and Rapid Prototyping

Academic labs benefit from ESPnet-ST’s standardized evaluation protocols and extensive recipe coverage. Whether exploring new S2ST architectures or comparing SST strategies, researchers can build on a solid, community-validated foundation—accelerating publication and collaboration.

Getting Started Without a PhD

You don’t need to be a speech processing expert to use ESPnet-ST effectively:

- Choose a recipe: Navigate to

egs2/<dataset>/st1/(e.g.,must_c/st1) for pre-configured training pipelines. - Run with defaults: Launch training using provided scripts that handle data formatting, augmentation, and model configuration.

- Leverage pre-trained models: Use

espnet_model_zooto download and run inference with models likefisher_callhome_spanish.transformer.v1.es-en. - Customize incrementally: Swap encoders (Conformer, Branchformer), decoders, or loss functions using modular config files—no code changes needed.

Even for custom datasets, the Kaldi-style data directory format (wav.scp, text, utt2spk) is intuitive and well-documented.

Limitations and Practical Considerations

While powerful, ESPnet-ST isn’t a magic bullet:

- Computational demands: Training full S2ST systems (especially with discrete units or large Conformers) requires significant GPU resources.

- Learning curve: New users should expect a ramp-up period to understand ESPnet’s config system, though tutorials and Colab demos ease this.

- Streaming limits: Current SST implementations are block-based, not fully character-by-character streaming—so ultra-low-latency (<200ms) scenarios may need custom engineering.

- Deployment overhead: ESPnet-ST excels at training and evaluation, but production deployment (e.g., mobile inference, real-time APIs) typically requires additional tooling like ONNX conversion or custom serving layers.

That said, the toolkit provides all the building blocks—teams can focus on product integration rather than algorithmic reinvention.

Summary

ESPnet-ST-v2 is the most comprehensive open-source toolkit available for spoken language translation today. By unifying offline ST, simultaneous SST, and speech-to-speech S2ST in a single, well-engineered framework—with support for diverse architectures, standardized recipes, and pre-trained models—it dramatically lowers the barrier to building, testing, and deploying multilingual speech translation systems. Whether you’re a researcher pushing the boundaries of S2ST or a product team shipping a real-time interpreter, ESPnet-ST offers the flexibility, performance, and reproducibility you need—without locking you into a proprietary stack.