Evolutionary Computation (EC) has long been a powerful approach for solving complex optimization problems—especially where gradients are unavailable, environments are noisy, or objectives are non-differentiable. But as AI systems grow in scale and complexity, traditional EC tools struggle to keep pace. They’re often slow, hard to scale across devices, and incompatible with modern deep learning workflows.

Enter EvoX: an open-source, distributed, GPU-accelerated framework designed specifically to meet the performance, flexibility, and scalability demands of today’s large-scale optimization challenges. Built with PyTorch compatibility at its core, EvoX enables practitioners and researchers to run 50+ evolutionary algorithms—from classic PSO and CMA-ES to cutting-edge multi-objective methods—across CPUs and GPUs, even in distributed multi-node setups.

Whether you’re tuning hyperparameters, evolving neural networks for reinforcement learning, or optimizing engineering designs, EvoX delivers dramatic speedups (over 100× compared to CPU-only implementations in many cases) while maintaining a clean, intuitive programming interface.

Why Evolutionary Computation Matters—And Why It Needs a Modern Stack

Many real-world problems resist gradient-based solutions. Think of optimizing robot controllers, designing antennas with physical constraints, or searching neural architectures where the objective function is discontinuous or stochastic. In these contexts, evolutionary algorithms shine because they treat problems as black boxes—requiring only evaluations, not derivatives.

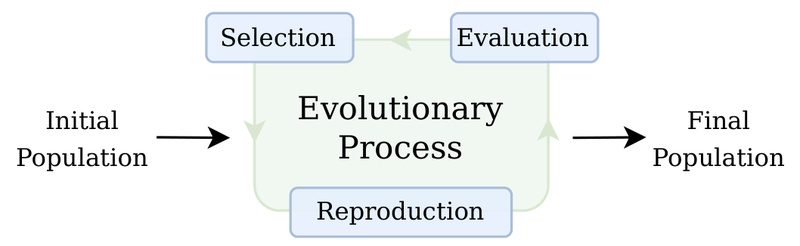
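
To make the black-box idea concrete, here is a minimal sketch in plain Python (no EvoX calls) of a (μ + λ) evolution strategy minimizing a staircase function whose gradient is zero almost everywhere. The function names, population sizes, and mutation scale are illustrative assumptions, not values taken from EvoX.

```python
import random


def step_objective(x):
    # Piecewise-constant "staircase": the gradient is zero almost everywhere,
    # so gradient descent gets no signal, but evaluations still rank candidates.
    return sum(int(abs(v)) for v in x)


def evolve(objective, dim=5, pop_size=20, offspring=40, sigma=1.0,
           generations=60, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-10, 10) for _ in range(dim)]
                  for _ in range(pop_size)]
    history = [min(objective(p) for p in population)]
    for _ in range(generations):
        # Mutation: each child is a randomly chosen parent plus Gaussian noise.
        children = []
        for _ in range(offspring):
            parent = rng.choice(population)
            children.append([v + rng.gauss(0, sigma) for v in parent])
        # (mu + lambda) selection: keep the best pop_size of parents and children.
        population = sorted(population + children, key=objective)[:pop_size]
        history.append(objective(population[0]))
    best = population[0]
    return best, objective(best), history


best, best_fitness, history = evolve(step_objective)
print("best fitness per generation:", history)
```

The loop only ever calls `objective`; nothing about the function's internals is needed. Because parents compete with their children, the best fitness can never get worse from one generation to the next.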

But until recently, harnessing this power meant sacrificing speed and scalability. Most EC libraries run on a single CPU and aren’t designed to leverage modern hardware. EvoX changes that by providing a unified framework that’s:

- GPU-native: Algorithms run on CUDA-enabled devices out of the box.

- Distributed-ready: Scale from one GPU to a cluster without rewriting code.

- PyTorch-integrated: Seamlessly plug into existing deep learning pipelines.

- Feature-complete: Includes 50+ algorithms and 100+ benchmark problems—from DTLZ to Brax RL environments.

This combination makes EvoX uniquely suited for technical decision-makers who need robust, scalable, and fast optimization—but don’t want to build infrastructure from scratch.

Key Strengths That Set EvoX Apart

Performance at Scale

EvoX supports heterogeneous execution across CPUs and GPUs, achieving speedups of over 100× compared to CPU-only implementations. Its distributed execution model lets you spread population-based evaluations across multiple devices or nodes, which is critical when handling thousands of parallel RL episodes or high-dimensional parameter searches.

Broad Algorithmic Coverage

The framework ships with a comprehensive suite of evolutionary algorithms, categorized by use case:

- Single-objective: PSO, Differential Evolution (e.g., SHADE, CoDE), Evolution Strategies (CMA-ES, OpenES).

- Multi-objective: NSGA-II, MOEA/D, RVEA, IBEA, and more.

This means you can experiment with state-of-the-art methods without switching libraries or reimplementing core logic.
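
Multi-objective methods such as NSGA-II rank candidates by Pareto dominance rather than by a single score. The following plain-Python sketch shows that concept on a handful of made-up two-objective points (both objectives minimized). It is a quadratic-time illustration of the idea, not the sorting routine any particular library uses.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def non_dominated(points):
    """Return the points that no other point dominates (the Pareto front)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (cost, error) pairs for five candidate designs.
candidates = [(1.0, 5.0), (2.0, 3.0), (3.0, 4.0), (4.0, 1.0), (2.5, 3.5)]
front = non_dominated(candidates)
print(front)
```

Here `(3.0, 4.0)` and `(2.5, 3.5)` drop out because `(2.0, 3.0)` is better on both objectives; the three remaining points are trade-offs that cannot be ranked against each other without extra preferences.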

Seamless Integration with Modern ML Stacks

Because EvoX is built on PyTorch, it integrates naturally with models, datasets, and training loops you already use. You can evolve a PyTorch neural network just by wrapping it with EvoX’s ParamsAndVector utility—no custom backend required.
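
The reason such a wrapper is needed is that evolutionary algorithms operate on flat vectors, while a model stores its weights as named parameters. The sketch below illustrates that round trip in plain Python with lists standing in for tensors. The helper names `to_vector` and `to_params` and the toy parameter dictionary are hypothetical and are not meant to describe the actual interface of ParamsAndVector.

```python
def to_vector(params):
    """Flatten a {name: list of floats} dict into one flat list plus a layout."""
    layout = [(name, len(values)) for name, values in params.items()]
    vector = [v for values in params.values() for v in values]
    return vector, layout


def to_params(vector, layout):
    """Rebuild the {name: list of floats} dict from a flat list and its layout."""
    params, offset = {}, 0
    for name, size in layout:
        params[name] = vector[offset:offset + size]
        offset += size
    return params


# Toy "model": a weight list and a bias list (stand-ins for tensors).
model_params = {"layer.weight": [0.1, -0.2, 0.3, 0.4], "layer.bias": [0.0, 0.5]}
vector, layout = to_vector(model_params)

# An optimizer would perturb the flat vector; here every entry is shifted by 1.
mutated = to_params([v + 1.0 for v in vector], layout)
print(mutated)
```

The optimizer only sees `vector`; the layout is what lets a candidate solution be turned back into something the model can load.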

Developer-Friendly Workflows

EvoX introduces a streamlined programming model centered around the StdWorkflow and EvalMonitor abstractions. This reduces boilerplate and lets you go from idea to execution in minutes. For example, solving the Ackley function with Particle Swarm Optimization takes fewer than 15 lines of code.

Where EvoX Shines: Real-World Use Cases

Hyperparameter and Architecture Optimization

EvoX excels at searching large, discrete, or mixed spaces where gradient-free methods are essential. Whether you’re tuning learning rates, dropout ratios, or entire neural topologies, EvoX’s population-based parallelism lets you evaluate hundreds of configurations simultaneously on GPU.
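
One common way to search a mixed space with a continuous optimizer (an assumption here, not something EvoX prescribes) is to let the algorithm work in the unit hypercube and decode each candidate into a concrete configuration. The ranges, the activation list, and the `decode` helper below are illustrative choices.

```python
import math

ACTIVATIONS = ["relu", "tanh", "gelu"]  # hypothetical categorical choices


def decode(vector):
    """Map a vector in [0, 1]^4 to a mixed hyperparameter configuration."""
    # Clamp so that slightly out-of-bounds candidates still decode safely.
    lr_u, drop_u, layers_u, act_u = (min(max(v, 0.0), 1.0) for v in vector)
    return {
        # Continuous, log scale: 1e-5 .. 1e-1
        "learning_rate": 10 ** (-5 + 4 * lr_u),
        # Continuous, linear scale: 0.0 .. 0.5
        "dropout": 0.5 * drop_u,
        # Integer: 1 .. 8
        "num_layers": 1 + min(int(layers_u * 8), 7),
        # Categorical: equal-width bins over [0, 1]
        "activation": ACTIVATIONS[min(int(act_u * len(ACTIVATIONS)),
                                      len(ACTIVATIONS) - 1)],
    }


config = decode([0.5, 0.2, 0.99, 0.0])
print(config)
assert math.isclose(config["learning_rate"], 1e-3)
```

Each member of the population decodes to one configuration, so a population of a few hundred vectors corresponds to a few hundred training runs that can be scored in parallel.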

Neuroevolution for Reinforcement Learning

EvoX includes built-in support for Brax environments, so you can evolve policies (such as MLPs) for tasks like HalfCheetah or Ant. With population sizes in the thousands and GPU-accelerated rollouts, you can achieve competitive performance without relying on backpropagation.

Engineering and Scientific Design

From antenna layout to aerodynamic shape optimization, many real-world design problems involve multiple objectives and expensive simulations. EvoX’s multi-objective solvers (e.g., NSGA-III, RVEA) coupled with custom problem definitions allow you to plug in your own simulators and evolve high-performing solutions efficiently.

Benchmarking and Algorithm Research

With 100+ numerical test functions (e.g., CEC’22, DTLZ, ZDT) and standardized workflows, EvoX also serves as a robust platform for comparing evolutionary algorithms—ideal for researchers developing new EC methods.

Getting Started Is Easier Than You Think

Installation is straightforward:

    pip install "evox[default]"

Windows users can even run a one-click install script.

A minimal example—optimizing the Ackley function with PSO—looks like this:

    import torch
    from evox.algorithms import PSO
    from evox.problems.numerical import Ackley
    from evox.workflows import StdWorkflow, EvalMonitor

    # torch.set_default_device("cuda")  # uncomment to run on GPU by default

    # 10-dimensional search space bounded to [-32, 32] in every dimension
    algorithm = PSO(pop_size=100, lb=-32 * torch.ones(10), ub=32 * torch.ones(10))
    problem = Ackley()
    monitor = EvalMonitor()
    workflow = StdWorkflow(algorithm, problem, monitor)

    workflow.init_step()
    for i in range(100):
        workflow.step()

    monitor.plot()

Uncomment the `torch.set_default_device("cuda")` line to run everything on GPU. The same pattern extends to neuroevolution, multi-objective optimization, and custom problems.
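
For intuition about what a PSO step involves, here is a textbook global-best PSO on the Ackley function in plain Python. This is a sketch under stated assumptions, not EvoX's implementation: the inertia and acceleration coefficients (`w`, `c1`, `c2`) are common textbook values, and the global best is updated as soon as a particle improves on it.

```python
import math
import random


def ackley(x):
    """Standard Ackley function; global minimum 0 at the origin."""
    d = len(x)
    mean_sq = sum(v * v for v in x) / d
    mean_cos = sum(math.cos(2 * math.pi * v) for v in x) / d
    return (-20 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20 + math.e)


def pso(objective, dim=10, pop_size=100, lb=-32.0, ub=32.0, steps=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    pos = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop_size)]
    vel = [[0.0] * dim for _ in range(pop_size)]
    pbest = [p[:] for p in pos]                 # each particle's best position
    pbest_fit = [objective(p) for p in pos]
    g = min(range(pop_size), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]  # swarm-wide best
    history = [gbest_fit]
    for _ in range(steps):
        for i in range(pop_size):
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward global best.
                vel[i][j] = (w * vel[i][j]
                             + c1 * r1 * (pbest[i][j] - pos[i][j])
                             + c2 * r2 * (gbest[j] - pos[i][j]))
                # Move, then clip back into the search bounds.
                pos[i][j] = min(max(pos[i][j] + vel[i][j], lb), ub)
            fit = objective(pos[i])
            if fit < pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], fit
                if fit < gbest_fit:
                    gbest, gbest_fit = pos[i][:], fit
        history.append(gbest_fit)
    return gbest, gbest_fit, history


best, best_fit, history = pso(ackley)
print("best fitness after 100 steps:", best_fit)
```

The nested Python loops evaluate one particle at a time. A tensorized framework replaces them with batched operations over the whole population, which is where GPU acceleration pays off.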

What to Keep in Mind: Limitations and Considerations

EvoX is powerful—but it’s not a universal optimizer. Keep these points in mind:

- License: EvoX uses the GPL-3.0 license. While great for research and open collaboration, this may restrict integration into proprietary commercial products unless you’re prepared to comply with GPL terms.

- PyTorch Dependency: Though you don’t need deep PyTorch expertise, basic familiarity helps—especially when defining custom neural policies or problems.

- Best for Population-Based Tasks: EvoX is optimized for problems that benefit from evaluating many candidate solutions in parallel. If your problem involves only a few evaluations or requires high-frequency sequential decisions, other methods may be more appropriate.

How EvoX Fits Into Your Broader AI/ML Stack

EvoX isn’t just a standalone library—it’s the nucleus of a growing ecosystem:

- EvoRL: Specialized for evolutionary reinforcement learning.

- EvoGP: GPU-accelerated genetic programming.

- EvoMO: Focused on multi-objective optimization.

- TensorNEAT and TensorACO: Tensorized implementations of NEAT and Ant Colony Optimization.

This modular expansion means that as your needs evolve—from single-objective tuning to symbolic regression or Pareto-front exploration—you can stay within a consistent, high-performance framework.

Summary

EvoX bridges the gap between the flexibility of evolutionary computation and the performance demands of modern AI workloads. With GPU acceleration, distributed scaling, PyTorch integration, and a rich algorithm library, it empowers engineers and researchers to tackle large-scale, gradient-free optimization problems that were previously too slow or unwieldy. If you’re facing challenges in hyperparameter tuning, neuroevolution, or multi-objective design—and you need speed, scalability, and simplicity—EvoX is worth serious consideration.