In today’s fast-paced computer vision landscape, high-quality image segmentation is no longer a luxury—it’s a necessity. Yet, despite the groundbreaking capabilities of Meta’s Segment Anything Model (SAM), its computational demands have limited its adoption in real-world, resource-constrained environments. Enter FastSAM: a lean, fast, and remarkably effective alternative that delivers near-SAM segmentation quality at 50 times the inference speed, using only 2% of SAM’s original training data.

Built on a YOLOv8-based convolutional architecture instead of a heavyweight Vision Transformer, FastSAM slashes GPU memory usage by more than half while supporting the same flexible prompting mechanisms—everything, points, boxes, and even text. For engineers, researchers, and product teams needing responsive, scalable segmentation in production systems—especially on edge devices, industrial pipelines, or interactive web applications—FastSAM offers a compelling balance of speed, simplicity, and performance.

Why FastSAM Changes the Game

A Practical Alternative to SAM’s Computational Overhead

SAM revolutionized zero-shot segmentation by enabling users to generate masks from arbitrary prompts. However, its reliance on a high-resolution Vision Transformer results in slow inference (from roughly a hundred milliseconds to several seconds per image, depending on the prompt mode) and high GPU memory consumption (over 7 GB on an RTX 3090). These bottlenecks make SAM impractical for real-time video analysis, embedded deployment, or high-throughput server workloads.

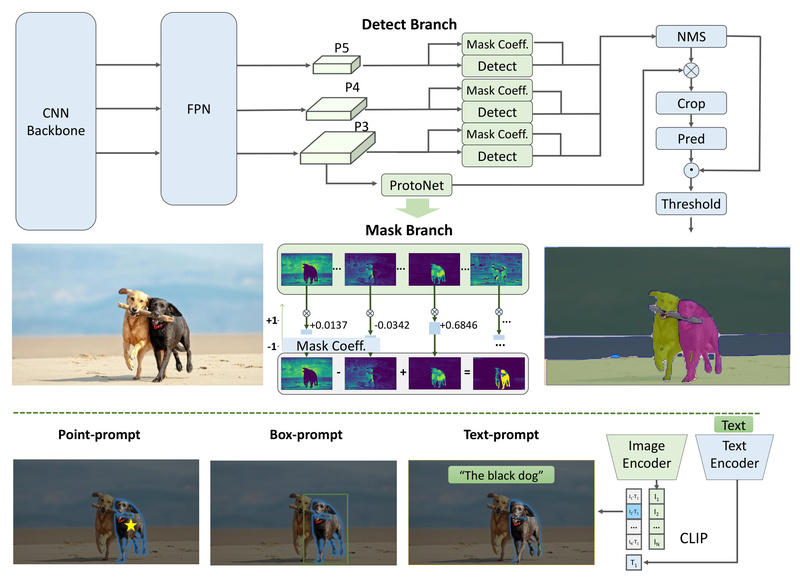

FastSAM rethinks the problem: instead of running a heavyweight Transformer for every prompt, it splits the task into two stages. First, an efficient CNN segments every instance in the image, which is a well-studied problem. Then the prompt selects the matching masks from that set. By leveraging a YOLOv8 backbone with an instance segmentation head (inspired by YOLACT), FastSAM achieves a consistent inference time of about 40 ms regardless of prompt complexity, compared to SAM’s 110–6,972 ms, while using just 2.6 GB of GPU memory on COCO-scale inputs.
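To put those latency figures in perspective, the short calculation below derives the speedup range and a throughput bound from the numbers quoted above. It is a back-of-the-envelope sketch: it assumes the per-image latency is the whole cost (no I/O, pre-processing, or batching), and the constant and function names are illustrative.

```python
# Latency figures quoted in the text (milliseconds per image).
FASTSAM_MS = 40.0
SAM_MIN_MS = 110.0    # SAM's fastest quoted case
SAM_MAX_MS = 6972.0   # SAM's slowest quoted case


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline_ms / candidate_ms


def frames_per_second(latency_ms: float) -> float:
    """Upper bound on throughput when frames are processed one at a time."""
    return 1000.0 / latency_ms


print(f"speedup range: {speedup(SAM_MIN_MS, FASTSAM_MS):.2f}x "
      f"to {speedup(SAM_MAX_MS, FASTSAM_MS):.1f}x")
print(f"FastSAM throughput bound: {frames_per_second(FASTSAM_MS):.0f} FPS")
```

The speedup therefore depends heavily on which SAM prompt mode is the baseline, and the headline 50x figure falls inside this range. A 40 ms latency corresponds to at most 25 frames per second on a single stream.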

Trained on Just 2% of the SA-1B Dataset

Remarkably, FastSAM doesn’t require massive compute or data. It was trained on only 1/50th (2%) of Meta’s SA-1B dataset, yet still achieves competitive zero-shot transfer performance across diverse downstream tasks—from edge detection to object proposal generation. This efficiency makes retraining or fine-tuning far more accessible to teams without access to large-scale infrastructure.

Key Features That Deliver Real-World Value

1. Multi-Modal Prompting, Just Like SAM

FastSAM supports all four prompt types that users expect from modern segmentation systems:

- Everything mode: Generate all segmentable objects in the image.

- Point prompts: Click foreground/background points to guide mask selection.

- Box prompts: Provide bounding boxes to extract specific regions.

- Text prompts: Use natural language (e.g., “the yellow dog”) via CLIP integration.

This flexibility ensures seamless integration into existing workflows that already rely on SAM-style interaction.
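One way to picture how point and box prompts can work on top of everything-mode output is as a selection step over a list of binary masks. The sketch below is a simplified, pure-Python illustration, not FastSAM's actual implementation (which operates on tensors, handles foreground/background labels, and scores text prompts with CLIP). The function names and the toy masks are hypothetical.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]           # H x W grid of 0/1 values
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), x2 and y2 exclusive


def select_by_point(masks: Sequence[Mask], point: Tuple[int, int]) -> List[int]:
    """Indices of all masks that cover the clicked (x, y) pixel."""
    x, y = point
    return [i for i, mask in enumerate(masks) if mask[y][x] == 1]


def box_iou(mask: Mask, box: Box) -> float:
    """IoU between a binary mask and an axis-aligned box inside the image."""
    x1, y1, x2, y2 = box
    mask_area = sum(sum(row) for row in mask)
    box_area = (x2 - x1) * (y2 - y1)
    inter = sum(sum(row[x1:x2]) for row in mask[y1:y2])
    union = mask_area + box_area - inter
    return inter / union if union else 0.0


def select_by_box(masks: Sequence[Mask], box: Box) -> int:
    """Index of the mask with the highest IoU against the box."""
    return max(range(len(masks)), key=lambda i: box_iou(masks[i], box))


# Toy 4x4 "everything" output: a left-half region and a bottom-right square.
left_half = [[1, 1, 0, 0] for _ in range(4)]
corner = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
masks = [left_half, corner]

print(select_by_point(masks, (0, 0)))      # [0]
print(select_by_box(masks, (2, 2, 4, 4)))  # 1
```

Because selection only inspects masks that already exist, its cost is small compared with the segmentation pass itself.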

2. Lightweight CNN Architecture

Unlike SAM’s Vision Transformer, FastSAM uses a YOLOv8x or YOLOv8s backbone—proven, production-ready CNNs optimized for speed and accuracy. This architectural choice enables:

- Deterministic, low-latency inference

- Easier optimization (e.g., TensorRT support is already available)

- Straightforward deployment on edge devices
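The YOLACT-inspired segmentation head mentioned earlier builds each instance mask as a weighted sum of shared prototype maps, passed through a sigmoid and thresholded. The following is a minimal pure-Python sketch of that assembly step under simplifying assumptions: the prototypes are tiny hand-written grids, the cropping to the predicted box is omitted, and `assemble_mask` is an illustrative name rather than a FastSAM or YOLOv8 function.

```python
import math
from typing import List

Grid = List[List[float]]


def sigmoid(value: float) -> float:
    """Numerically stable logistic function."""
    if value >= 0:
        return 1.0 / (1.0 + math.exp(-value))
    exp_v = math.exp(value)
    return exp_v / (1.0 + exp_v)


def assemble_mask(prototypes: List[Grid], coeffs: List[float],
                  threshold: float = 0.5) -> List[List[int]]:
    """Combine prototype maps with per-instance coefficients into a binary mask."""
    height, width = len(prototypes[0]), len(prototypes[0][0])
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Linear combination of all prototypes at this pixel.
            logit = sum(c * proto[y][x] for c, proto in zip(coeffs, prototypes))
            row.append(1 if sigmoid(logit) > threshold else 0)
        mask.append(row)
    return mask


# Two toy 2x2 prototypes: one responds to the left column, one to the bottom row.
proto_left = [[4.0, -4.0], [4.0, -4.0]]
proto_bottom = [[-4.0, -4.0], [4.0, 4.0]]

print(assemble_mask([proto_left, proto_bottom], [1.0, 0.0]))  # [[1, 0], [1, 0]]
print(assemble_mask([proto_left, proto_bottom], [0.0, 1.0]))  # [[0, 0], [1, 1]]
```

The prototypes are computed once per image and only a short coefficient vector is predicted per instance, so producing many masks adds little work. This is part of why a CNN head of this kind stays fast.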

3. Broad Ecosystem Support

FastSAM isn’t just a research prototype. It’s actively integrated into real-world tooling:

- Available in the Ultralytics Model Hub

- Runnable via Hugging Face Spaces, Replicate, and Google Colab

- Comes with a Gradio web UI for rapid prototyping

- Supports export to TensorRT for maximum inference speed
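As an illustration of the Ultralytics route, the sketch below runs everything mode through the `ultralytics` package. It assumes `pip install ultralytics` has been run and that the weights name `FastSAM-s.pt` is resolvable (recent Ultralytics releases download it on first use). The wrapper function `segment_everything` is a hypothetical helper, and argument names may differ between package versions.

```python
def segment_everything(image_path: str, weights: str = "FastSAM-s.pt"):
    """Run FastSAM's everything mode via Ultralytics and return the masks.

    Assumptions: the `ultralytics` package is installed and `weights`
    points to (or can be downloaded as) a FastSAM checkpoint.
    """
    # Imported lazily so this module loads even without the package installed.
    from ultralytics import FastSAM

    model = FastSAM(weights)
    results = model(
        image_path,
        device="cpu",        # switch to "cuda" when a GPU is available
        retina_masks=True,   # return masks at the original image resolution
        imgsz=1024,
        conf=0.4,
        iou=0.9,
    )
    # One result per input image; `.masks` is None when nothing was segmented.
    return results[0].masks
```

Calling `segment_everything("image.jpg")` would return the mask container for that image, which can then be filtered by prompts or passed to downstream code.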

Ideal Use Cases for FastSAM

FastSAM shines in scenarios where latency, memory, or deployment simplicity is critical:

- Industrial visual inspection: Real-time defect detection on production lines where every millisecond counts.

- Video analytics: Segmenting objects frame-by-frame in surveillance or sports analysis without buffering.

- Edge AI applications: Running on drones, robots, or mobile devices with limited compute.

- Interactive web demos: Enabling user-guided segmentation in browsers via lightweight backends.

- Downstream vision tasks: Serving as a fast, generic mask generator for anomaly detection, salient object segmentation, or geospatial building extraction.

In benchmarks, FastSAM even outperforms SAM on LVIS bounding-box proposal recall for small and medium objects, which suggests it holds up well in complex, real-world scenes.

Getting Started in Under 5 Minutes

FastSAM is designed for frictionless adoption. Here’s how to run it locally:

1. Install dependencies:

   ```bash
   git clone https://github.com/CASIA-IVA-Lab/FastSAM.git
   cd FastSAM
   conda create -n FastSAM python=3.9 && conda activate FastSAM
   pip install -r requirements.txt
   pip install git+https://github.com/openai/CLIP.git  # only if using text prompts
   ```

2. Download a checkpoint (e.g., `FastSAM.pt`).

3. Run inference via command line:

   ```bash
   # Everything mode
   python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg

   # Text prompt
   python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --text_prompt "the yellow dog"
   ```

4. Or use the Python API:

   ```python
   from fastsam import FastSAM, FastSAMPrompt

   model = FastSAM('./weights/FastSAM.pt')
   results = model('image.jpg', device='cuda')
   prompt = FastSAMPrompt('image.jpg', results, device='cuda')
   masks = prompt.text_prompt(text='a photo of a dog')
   prompt.plot(annotations=masks, output_path='output.jpg')
   ```
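The same `FastSAMPrompt` object can serve the other prompt modes as well. The sketch below wraps them in one hypothetical helper, `run_prompts`. It assumes the cloned repository is importable and a checkpoint sits at `./weights/FastSAM.pt`. The method and argument names follow the repository's README at the time of writing and may differ in older or newer revisions, and the coordinates are placeholder values.

```python
def run_prompts(image_path: str,
                weights_path: str = "./weights/FastSAM.pt",
                device: str = "cpu"):
    """Run everything, box, and point prompts on a single image.

    Assumptions: the FastSAM repository is on the Python path and
    `weights_path` points to a downloaded checkpoint.
    """
    # Imported lazily so this module loads even without the repository present.
    from fastsam import FastSAM, FastSAMPrompt

    model = FastSAM(weights_path)
    # Segment everything once; each prompt below only selects from this result.
    results = model(image_path, device=device, retina_masks=True,
                    imgsz=1024, conf=0.4, iou=0.9)
    prompt = FastSAMPrompt(image_path, results, device=device)

    return {
        "everything": prompt.everything_prompt(),
        # Box given as [x1, y1, x2, y2] in pixels (placeholder values).
        "box": prompt.box_prompt(bboxes=[[200, 200, 300, 300]]),
        # One click; label 1 marks foreground, 0 marks background.
        "point": prompt.point_prompt(points=[[620, 360]], pointlabel=[1]),
    }
```

Any of the returned annotations can be passed to `prompt.plot(annotations=..., output_path=...)` in the same way as the text-prompt result.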

For zero-setup testing, try the Hugging Face or Replicate demos, which support all prompt modes in the browser.

Limitations and When to Choose SAM Instead

FastSAM is not a drop-in replacement for SAM in every scenario. Key trade-offs include:

- Lower mask quality: On COCO instance segmentation, FastSAM achieves 37.9 AP vs. SAM’s 46.5 AP, an 8.6-point gap, with occasional edge jaggedness (though recent updates have improved this).

- Less fine-grained control: While sufficient for most practical uses, SAM may still be preferable for artistic or medical applications demanding pixel-perfect boundaries.

In essence, choose FastSAM when speed, efficiency, and deployability outweigh SAM’s accuracy advantage. For offline, high-precision tasks, SAM remains the stronger choice; for real-time systems, FastSAM is often the smarter one.

Summary

FastSAM redefines what’s possible in practical image segmentation. By replacing SAM’s compute-heavy Transformer with a lean CNN and training on a fraction of the data, it delivers real-time performance at a moderate cost in mask quality. With full prompt compatibility, a low memory footprint, and strong ecosystem support, it empowers teams to bring state-of-the-art segmentation into production today. If your project demands responsiveness, scalability, or edge deployment, FastSAM is a strong candidate to evaluate first.