Financial analysis is rarely a solo endeavor. In real-world institutions—from investment banks to asset management firms—complex tasks like producing quarterly outlooks, evaluating corporate health, or interpreting regulatory shifts are distributed across specialists: researchers pore over filings, analysts model industry dynamics, accountants verify calculations, and consultants synthesize insights into actionable advice.

Yet most off-the-shelf large language models (LLMs) treat finance as a generic QA domain. They struggle with the numerical rigor, contextual depth, and multi-step reasoning that authentic financial workflows require. Enter FinTeam, a multi-agent collaborative intelligence system explicitly designed to mirror how financial professionals actually work. Built by Fudan University’s Data Intelligence and Social Computing Lab (Fudan-DISC), FinTeam orchestrates four specialized LLM agents to tackle comprehensive financial scenarios, from macroeconomic trends to company-level due diligence. Its generated reports earn a 62% human acceptance rate, outperforming strong baselines like GPT-4o and Xuanyuan.

For practitioners, researchers, and developers seeking a domain-aware, modular, and high-fidelity solution for financial reasoning, FinTeam offers a compelling alternative to general-purpose models that lack financial grounding.

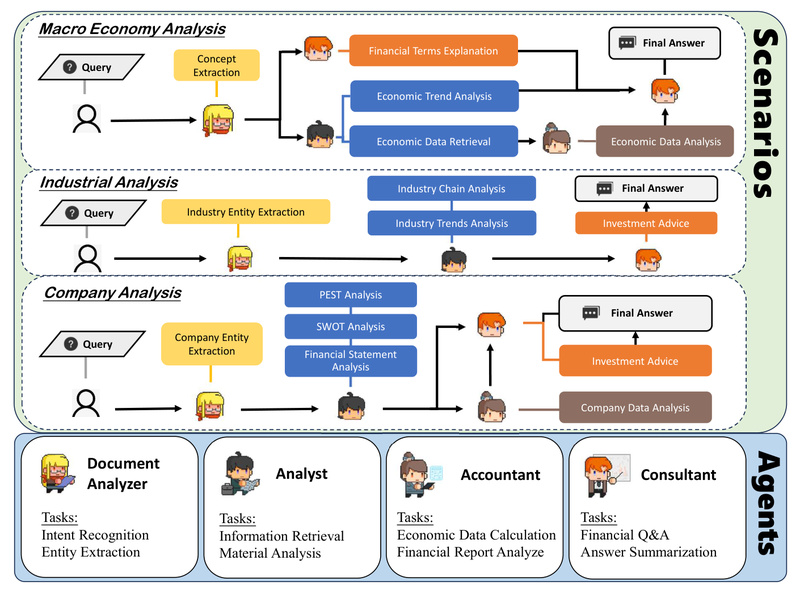

A Multi-Agent Architecture Aligned with Real Financial Workflows

FinTeam isn’t a monolithic model—it’s a coordinated team of four expert agents, each fine-tuned on high-quality Chinese financial instructions and assigned a distinct functional role:

Document Analyzer

This agent handles financial text understanding and processing. Trained on over 110K examples covering sentiment analysis, named entity recognition, event extraction, and document summarization, it can parse earnings reports, news articles, and regulatory filings to extract structured insights.

Analyst

Focused on macroeconomic, industry, and strategic analysis, the Analyst interprets trends, evaluates competitive landscapes, and generates forward-looking assessments. Its training includes retrieval-augmented tasks where it synthesizes insights from real financial news, research reports, and policy documents.

Accountant

Unlike generic LLMs that hallucinate calculations, the Accountant integrates four built-in financial tools: a mathematical expression evaluator, an equation solver, a statistics calculator, and a standard normal distribution table. It can accurately compute growth rates, financial ratios, Black-Scholes option prices, and even expected default frequencies (EDF)—all by generating precise tool-calling commands embedded in its output.
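To make the tool-calling idea concrete, here is a minimal sketch of how the four tools named above might be wired to commands embedded in model output. The bracketed command syntax (`[Calculator(...)]`, `[Solver(...)]`, `[Stats(...)]`, `[NormCdf(...)]`), the tool names, and every function below are assumptions made for illustration; they are not FinTeam's actual command format or implementation.

```python
import ast
import operator
import re
import statistics
from statistics import NormalDist

# Whitelisted arithmetic operators for the expression evaluator.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.Pow: operator.pow, ast.USub: operator.neg,
}

def evaluate(expr, **names):
    """Evaluate an arithmetic expression without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.Name) and node.id in names:
            return names[node.id]
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")
    return walk(ast.parse(expr.strip(), mode="eval").body)

def solve(expr, lo, hi, tol=1e-10):
    """Find x in [lo, hi] where expr(x) = 0 by bisection (needs a sign change)."""
    f_lo = evaluate(expr, x=lo)
    if f_lo * evaluate(expr, x=hi) > 0:
        raise ValueError("no sign change on the interval")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = evaluate(expr, x=mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return (lo + hi) / 2

_STATS = {"mean": statistics.mean, "median": statistics.median,
          "stdev": statistics.stdev}

def _stats(arg):
    # Hypothetical argument layout: "mean, 1, 2, 3"
    name, *values = [part.strip() for part in arg.split(",")]
    return _STATS[name]([float(v) for v in values])

def _solver(arg):
    # Hypothetical argument layout: "x**2 - 2; 0; 2" (expression; lower; upper)
    expr, lo, hi = arg.split(";")
    return solve(expr, float(lo), float(hi))

# Hypothetical tool names, one per tool listed in the text.
TOOLS = {
    "Calculator": evaluate,
    "Solver": _solver,
    "Stats": _stats,
    "NormCdf": lambda arg: NormalDist().cdf(evaluate(arg)),
}

_CALL = re.compile(r"\[(\w+)\((.*?)\)\]")

def run_tool_calls(text):
    """Replace each embedded [Tool(args)] command with its computed value."""
    return _CALL.sub(lambda m: f"{TOOLS[m.group(1)](m.group(2)):.4f}", text)

print(run_tool_calls("Revenue grew by [Calculator((121 - 100) / 100)] year over year."))
```

The point of the design is that the model only has to emit the command; the arithmetic itself is done by deterministic code, which is what keeps the numbers from being hallucinated.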

Consultant

The Consultant delivers personalized investment advice and policy interpretations through multi-turn dialogue. Fine-tuned on financial Q&A datasets and forum-style discussions, it provides context-aware, professionally phrased recommendations aligned with Chinese market norms.

Critically, these agents operate in a collaborative workflow that mimics inter-departmental coordination in real firms. A single user query may trigger sequential or parallel agent interactions, ensuring that final outputs combine factual grounding, numerical accuracy, and strategic insight.
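One way to picture a mixed sequential and parallel workflow is the sketch below. It is an illustrative assumption, not FinTeam's actual orchestration logic: the `run_team` function, the agent keys, and the fixed three-step order are all hypothetical, and each agent is reduced to a plain function from text to text so the example runs without any model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

Agent = Callable[[str], str]  # hypothetical interface: text in, text out

def run_team(query: str, agents: Dict[str, Agent]) -> str:
    # Step 1 (sequential): the Document Analyzer turns raw input into findings.
    findings = agents["document_analyzer"](query)

    # Step 2 (parallel): Analyst and Accountant both work from the findings.
    with ThreadPoolExecutor(max_workers=2) as pool:
        analysis = pool.submit(agents["analyst"], findings)
        figures = pool.submit(agents["accountant"], findings)
        context = "\n".join([findings, analysis.result(), figures.result()])

    # Step 3 (sequential): the Consultant synthesizes the final answer.
    return agents["consultant"](context)

# Stub agents standing in for the four fine-tuned models.
stub_agents = {
    "document_analyzer": lambda q: f"findings({q})",
    "analyst": lambda f: f"analysis[{f}]",
    "accountant": lambda f: f"figures[{f}]",
    "consultant": lambda c: f"advice based on {len(c.splitlines())} inputs",
}

print(run_team("Q3 earnings report", stub_agents))
```

In a real deployment each stub would be replaced by a call into the corresponding model or adapter, and the routing would depend on the query rather than being fixed.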

Domain-Specific Training That Drives Real Performance Gains

FinTeam’s strength stems from its foundation: the DISC-Fin-SFT dataset, comprising 246,000+ high-quality Chinese financial instructions across four categories:

- 63K consulting-style Q&A pairs (e.g., explaining financial terminology)

- 110K NLP tasks (e.g., extracting entities from 10-K equivalents)

- 57K calculation-intensive problems with tool-call annotations

- 20K retrieval-augmented analysis questions tied to real news and reports

This domain-focused training yields measurable improvements:

- +7.43% average gain on FinCUGE (a Chinese financial NLP benchmark)

- +2.06% accuracy boost on FinEval (a multi-subject financial QA test)

- 35% correctness on hand-crafted financial calculation tasks, nearly triple Baichuan-13B-Chat’s 12%

These results confirm that FinTeam doesn’t just “sound” knowledgeable—it delivers verifiable, tool-backed, and contextually anchored financial reasoning.

Modular Deployment: Use Only What You Need

To balance performance and efficiency, FinTeam offers two deployment modes:

Full Fine-Tuned Model

A single Baichuan-13B-Chat model fine-tuned on the entire DISC-Fin-SFT dataset. Ideal for general-purpose financial assistance.

Modular LoRA Adapters

Four lightweight LoRA modules—consulting, computing, retrieval, and task (NLP)—can be dynamically loaded or swapped on the base Baichuan-13B-Chat without reloading the full model. This allows users to:

- Activate only the Accountant for spreadsheet-like calculations

- Load the Consultant + Analyst for investment memo generation

- Switch modules on demand based on task requirements

All models are available on Hugging Face, and deployment requires only standard Python environments with transformers and peft. A Streamlit web demo and CLI tool are also provided for rapid prototyping.
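The adapter-swapping idea can be sketched with `peft` as follows. This is a sketch under stated assumptions rather than the project's own loading code: the agent-to-module mapping is our reading of the role descriptions above, `load_adapters` is a hypothetical helper, and the base model and adapter locations are left as caller-supplied arguments because only one adapter name appears in this article.

```python
# Assumed mapping from agent role to LoRA module name (our reading of the text).
AGENT_TO_MODULE = {
    "consultant": "consulting",
    "accountant": "computing",
    "analyst": "retrieval",
    "document_analyzer": "task",
}

def modules_for(agents):
    """Return the LoRA module names needed for a set of agents, in sorted order."""
    return sorted({AGENT_TO_MODULE[a] for a in agents})

def load_adapters(base_id, adapter_paths):
    """Load the base model once, then register one LoRA adapter per module.

    adapter_paths maps a module name to a Hub id or local path (caller-supplied).
    """
    # Imported lazily so the helpers above work without a GPU stack installed.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(
        base_id, use_fast=False, trust_remote_code=True)
    base = AutoModelForCausalLM.from_pretrained(
        base_id, torch_dtype=torch.float16, device_map="auto",
        trust_remote_code=True)

    names = list(adapter_paths)
    model = PeftModel.from_pretrained(
        base, adapter_paths[names[0]], adapter_name=names[0])
    for name in names[1:]:
        model.load_adapter(adapter_paths[name], adapter_name=name)
    return tokenizer, model

print(modules_for(["consultant", "analyst"]))
```

After loading, `model.set_adapter("computing")` would switch the active module without touching the base weights, which is the behavior the modular mode describes.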

Practical Use Cases Where FinTeam Excels

FinTeam shines in scenarios that demand integration of data, computation, and domain logic—precisely where generic LLMs falter:

- Generating analyst-style investment memos that combine macro outlooks, sector trends, and company fundamentals

- Automating earnings report analysis by extracting KPIs, computing YoY growth, and flagging anomalies

- Interpreting new financial regulations using up-to-date policy documents via retrieval-augmented reasoning

- Teaching financial modeling concepts with accurate, step-by-step calculations (e.g., DCF, WACC)

- Supporting due diligence workflows by cross-referencing news, filings, and market data

It is not designed for simple fact retrieval or casual financial chat—but for structured, multi-step, output-oriented financial intelligence.
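For reference, the two modeling concepts mentioned in the list (WACC and DCF) reduce to short formulas. The sketch below uses the standard textbook definitions with made-up input figures; it shows the kind of step-by-step calculation meant here and is not output from FinTeam.

```python
def wacc(equity, debt, cost_of_equity, cost_of_debt, tax_rate):
    """Weighted average cost of capital: E/V * Re + D/V * Rd * (1 - T)."""
    total = equity + debt
    return (equity / total * cost_of_equity
            + debt / total * cost_of_debt * (1 - tax_rate))

def dcf(cash_flows, rate, terminal_growth=None):
    """Present value of yearly cash flows, with an optional Gordon terminal value."""
    pv = sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows, start=1))
    if terminal_growth is not None:
        if terminal_growth >= rate:
            raise ValueError("terminal growth must be below the discount rate")
        terminal = cash_flows[-1] * (1 + terminal_growth) / (rate - terminal_growth)
        pv += terminal / (1 + rate) ** len(cash_flows)
    return pv

# Illustrative inputs only: 600 equity, 400 debt, 10% and 5% costs, 25% tax.
rate = wacc(equity=600, debt=400, cost_of_equity=0.10,
            cost_of_debt=0.05, tax_rate=0.25)
print(f"WACC: {rate:.2%}")  # 0.6 * 10% + 0.4 * 5% * 0.75 = 7.50%
print(f"Value: {dcf([100, 110, 120], rate, terminal_growth=0.02):.2f}")
```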

Getting Started Without Heavy Infrastructure

Deploying FinTeam is straightforward:

- Install dependencies: `pip install -r requirements.txt`

- Load the base model (`Go4miii/DISC-FinLLM`)

- Optionally attach a LoRA adapter (e.g., `Baichuan-13B-Chat-lora-Computing`)

- Run inference via the Python API, the CLI (`python cli_demo.py`), or Streamlit (`streamlit run web_demo.py`)
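As a rough illustration of the Python API route, the sketch below loads the model with `transformers` and asks a single question. Two things are assumptions: the `build_messages` and `ask` helpers are hypothetical, and the `chat` method is not part of `transformers` itself but is assumed to be supplied by the Baichuan-style remote code that `trust_remote_code=True` pulls in. Check the repository's own demo scripts for the exact call.

```python
def build_messages(question, history=None):
    """Return a chat history list with the new user turn appended."""
    messages = list(history or [])
    messages.append({"role": "user", "content": question})
    return messages

def ask(question, model_id="Go4miii/DISC-FinLLM"):
    """Load the model and return its reply to one question (needs a GPU)."""
    # Imported lazily so build_messages can be used without the model stack.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

    tokenizer = AutoTokenizer.from_pretrained(
        model_id, use_fast=False, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.float16, device_map="auto",
        trust_remote_code=True)
    model.generation_config = GenerationConfig.from_pretrained(model_id)

    # Assumption: `chat` comes from the model's remote code, not transformers.
    return model.chat(tokenizer, build_messages(question))

print(build_messages("What is a non-performing loan?"))
```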

The system supports int8/int4 quantization and CPU fallback via Baichuan-13B’s tooling, though an A10/A800-class GPU is recommended for full performance.

Limitations and Important Considerations

While powerful, FinTeam has clear boundaries:

- Geographic focus: Trained primarily on Chinese financial context, including domestic regulations, market structures, and terminology. Performance may degrade on U.S./EU-specific queries without adaptation.

- Real-time data dependency: The retrieval-augmented agent relies on external documents; it does not natively access live market feeds.

- Not a substitute for human advisors: Outputs should be reviewed by qualified professionals—the model is for reference only.

- Hardware requirements: Full model inference benefits from 80GB+ GPU memory; LoRA adapters reduce but don’t eliminate this need.
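A back-of-the-envelope estimate helps put the hardware point in context. The sketch below counts memory for the weights alone, taking the nominal 13 billion parameters from the model name as an assumption; activations, the KV cache, and framework overhead come on top and are not modeled here.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

# Nominal parameter count taken from the "13B" in the model name.
for label, bits in [("fp16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label}: ~{weight_memory_gb(13e9, bits):.1f} GB")
```

This is why the quantized modes matter for smaller GPUs: halving the bits per weight halves the weight footprint, although real usage will be higher than these figures.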

The project is released under the Apache 2.0 license, with code, models, and evaluation benchmarks publicly available on GitHub.

Summary

FinTeam addresses a critical gap in financial AI: the inability of general-purpose LLMs to replicate the collaborative, multi-expert, and calculation-intensive nature of real financial analysis. By combining domain-specific fine-tuning, built-in financial tooling, and a modular multi-agent design, it delivers human-acceptable reports and measurable performance gains over leading baselines. For teams building financial research assistants, automated reporting systems, or educational tools focused on Chinese markets, FinTeam offers a robust, transparent, and production-ready foundation.