Developing general-purpose robots that can navigate, interact, and manipulate in real-world urban environments remains one of the most demanding challenges in Embodied AI. Real-world data collection is prohibitively expensive, physical testing environments are limited in scale and diversity, and existing simulators often lack the social complexity or scene variety needed to train versatile agents. GRUtopia, since renamed InternUtopia, is a simulation platform designed to overcome these barriers by providing a city-scale, interactive 3D society for embodied agents.

GRUtopia enables researchers and engineers to prototype, train, and benchmark embodied AI systems at unprecedented scale—without needing fleets of physical robots or access to real-world infrastructure. By combining massive scene diversity, socially intelligent NPCs, and standardized evaluation tasks, it directly addresses the data scarcity and environment limitations that have historically slowed progress in the field.

Why GRUtopia Solves Real Pain Points in Embodied AI

Embodied AI practitioners routinely face three core bottlenecks:

- High cost and risk of real-world data collection – Deploying legged or mobile robots in dynamic public spaces is expensive, time-consuming, and often unsafe during early development stages.

- Lack of diverse, interactive environments beyond homes – Most simulators focus on domestic settings, yet real-world service robots will operate in offices, cafés, transit hubs, and retail spaces.

- Absence of social dynamics in simulation – Robots must not only avoid obstacles but also interpret human intentions, respond to verbal cues, and coordinate in shared spaces—capabilities rarely modeled in current benchmarks.

GRUtopia tackles all three by offering a Sim2Real-ready platform where large-scale, socially rich, and physically accurate simulation becomes the foundation for scalable learning.

Core Innovations That Power GRUtopia

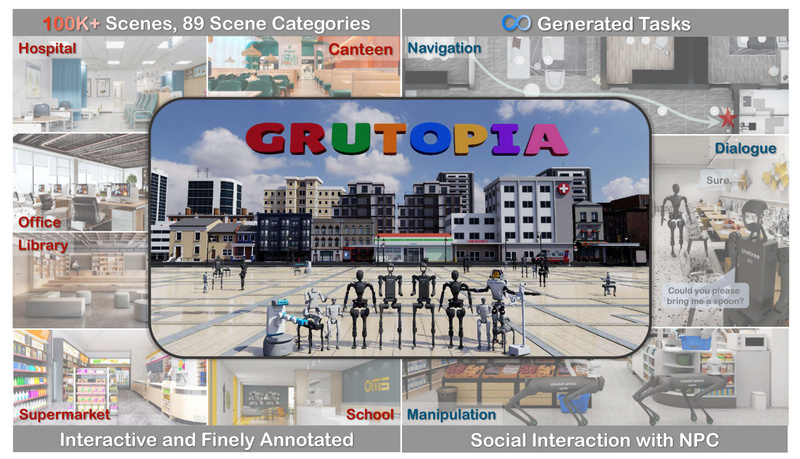

GRScenes: 100K Annotated, Composable Urban Scenes

At the heart of GRUtopia lies GRScenes, a dataset of 100,000 interactive, semantically annotated 3D scenes spanning 89 distinct environment categories—from libraries and gyms to subway stations and grocery stores. Unlike prior datasets limited to residential interiors, GRScenes reflects the service-oriented spaces where general robots are most likely to be deployed first.

Each scene is physically plausible, supporting realistic object interactions (e.g., opening drawers, moving chairs), and can be procedurally combined to construct city-scale virtual environments. This composability allows teams to simulate everything from single-room tasks to multi-building navigation challenges.
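
One simple way to picture this composability is to treat each scene as a tile with a footprint and place the tiles on a grid. The sketch below is purely illustrative: `SceneTile`, `layout_on_grid`, the scene names, and the footprint sizes are all hypothetical, and nothing here reflects how GRScenes actually stores or combines scenes.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class SceneTile:
    """Hypothetical stand-in for one annotated scene (not a GRScenes type)."""
    name: str
    category: str
    size_m: Tuple[float, float]  # footprint (width, depth) in meters


def layout_on_grid(
    tiles: List[SceneTile], columns: int, gap_m: float = 2.0
) -> Dict[str, Tuple[float, float]]:
    """Assign each tile a world-frame origin on a row-major grid.

    Every cell is sized to the largest tile plus a gap, so footprints
    placed at the cell origins cannot overlap.
    """
    if columns < 1:
        raise ValueError("columns must be >= 1")
    if not tiles:
        return {}
    cell_w = max(t.size_m[0] for t in tiles) + gap_m
    cell_d = max(t.size_m[1] for t in tiles) + gap_m
    origins: Dict[str, Tuple[float, float]] = {}
    for index, tile in enumerate(tiles):
        row, col = divmod(index, columns)
        origins[tile.name] = (col * cell_w, row * cell_d)
    return origins


# Illustrative tiles; names and sizes are made up for this example.
tiles = [
    SceneTile("library_01", "library", (20.0, 15.0)),
    SceneTile("gym_01", "gym", (25.0, 18.0)),
    SceneTile("grocery_01", "grocery store", (30.0, 20.0)),
]
city = layout_on_grid(tiles, columns=2)
for name, origin in city.items():
    print(name, origin)
```

A uniform cell size wastes space but keeps the overlap guarantee trivial to check. A real city layout would also need streets, doorways, and navigable connections between scenes.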

GRResidents: LLM-Driven Social NPCs for Realistic Interaction

Robots don’t operate in a vacuum. GRResidents introduces a novel Non-Player Character (NPC) system powered by Large Language Models (LLMs) to simulate human-like social agents. These NPCs can:

- Generate natural-language task instructions (“Take this package to the front desk”)

- React dynamically to robot behavior (“Watch out—you’re blocking the aisle!”)

- Assign context-aware missions based on environment and time of day

This transforms static environments into living, responsive societies—enabling the development of agents that understand not just physics, but also social norms and communication.

GRBench: Standardized Benchmarks for Real-World Skills

GRUtopia ships with GRBench, a benchmark suite focused on three critical capabilities for urban service robots:

- Object Loco-Navigation: Navigate to and interact with specific objects in cluttered scenes.

- Social Loco-Navigation: Move through crowds while respecting personal space and social cues.

- Loco-Manipulation: Combine locomotion with dexterous manipulation (e.g., picking up and delivering items).

The benchmark provides pre-built tasks, evaluation metrics, and baseline policies—ensuring fair, reproducible comparisons across methods. While legged robots are the primary agents, the framework supports a variety of robot morphologies.
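
To make "evaluation metrics" concrete, the sketch below computes two metrics that are widely used in navigation research: success rate and Success weighted by Path Length (SPL). This article does not state which metrics GRBench actually reports, so treat the choice of metrics, the `Episode` record, and the sample numbers as assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Episode:
    """Hypothetical per-episode record; field names are illustrative."""
    success: bool
    shortest_path_m: float  # length of the shortest path to the goal
    agent_path_m: float     # length of the path the agent actually took


def success_rate(episodes: Sequence[Episode]) -> float:
    """Fraction of episodes in which the agent reached its goal."""
    if not episodes:
        raise ValueError("need at least one episode")
    return sum(ep.success for ep in episodes) / len(episodes)


def spl(episodes: Sequence[Episode]) -> float:
    """Success weighted by Path Length.

    Each successful episode contributes shortest / max(agent, shortest);
    failed episodes contribute zero.
    """
    if not episodes:
        raise ValueError("need at least one episode")
    total = 0.0
    for ep in episodes:
        if not ep.success:
            continue
        denom = max(ep.agent_path_m, ep.shortest_path_m)
        # A zero-length task counts as perfectly efficient.
        total += ep.shortest_path_m / denom if denom > 0 else 1.0
    return total / len(episodes)


# Made-up results for four episodes.
episodes = [
    Episode(True, 10.0, 12.5),
    Episode(True, 8.0, 8.0),
    Episode(False, 6.0, 20.0),
    Episode(True, 5.0, 10.0),
]
print(f"success rate: {success_rate(episodes):.3f}")
print(f"SPL: {spl(episodes):.3f}")
```

SPL penalizes agents that succeed only by wandering, which is why it is often reported alongside raw success rate.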

Ideal Use Cases for Teams Building Real-World Robots

GRUtopia is particularly valuable for:

- Service robotics startups prototyping delivery or assistance bots for commercial buildings, hospitals, or campuses—without needing physical access to those sites.

- Research labs developing social navigation algorithms or language-grounded task planners who require diverse, dynamic environments for training and validation.

- Autonomous systems teams testing mobile manipulation policies under realistic object interaction constraints and human presence.

- Sim2Real transfer projects that need high-fidelity, physics-accurate simulation as a staging ground before real-world deployment.

Because all scenes and tasks are simulated, teams can run thousands of parallel episodes, rapidly iterate on perception and control stacks, and stress-test edge cases safely.

Getting Started: Developer-Friendly Setup and Configuration

Despite its scale, GRUtopia prioritizes usability:

- Gym-compatible environment interface allows seamless integration with standard RL and imitation learning pipelines.

- Pythonic configuration system lets users compose sensors (RGB-D, LiDAR), controllers, robots, and tasks with minimal code.

- Pre-built examples demonstrate how to drive various robots—including quadrupeds and wheeled platforms—with provided policy weights.

- Asset management is streamlined via built-in scripts (python -m internutopia.download_assets) that handle downloading GRScenes, robot models, and policy checkpoints.
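
The first two points can be sketched together: a configuration composed from small Python objects, and a rollout loop written against Gymnasium-style `reset`/`step` signatures. Everything below is a self-contained stand-in. `SensorCfg`, `RobotCfg`, `TaskCfg`, and `StubNavEnv` are hypothetical names, not InternUtopia classes, and the toy 1-D environment exists only so that the loop runs without Isaac Sim.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class SensorCfg:
    name: str
    kind: str  # e.g. "rgbd" or "lidar"


@dataclass
class RobotCfg:
    name: str
    controller: str
    sensors: List[SensorCfg] = field(default_factory=list)


@dataclass
class TaskCfg:
    scene: str
    robot: RobotCfg
    max_steps: int = 50


class StubNavEnv:
    """Toy 1-D environment with Gymnasium-style reset/step signatures."""

    def __init__(self, cfg: TaskCfg, goal: float = 5.0):
        self.cfg = cfg
        self.goal = goal
        self.position = 0.0
        self.steps = 0

    def reset(self, seed=None):
        # Returns (observation, info), as in the Gymnasium convention.
        self.position = 0.0
        self.steps = 0
        return self.position, {}

    def step(self, action: float):
        # Returns (observation, reward, terminated, truncated, info).
        self.position += action
        self.steps += 1
        terminated = abs(self.goal - self.position) < 0.5
        truncated = self.steps >= self.cfg.max_steps
        reward = 1.0 if terminated else 0.0
        return self.position, reward, terminated, truncated, {}


def run_episode(env: StubNavEnv, policy: Callable[[float], float]) -> Tuple[float, int]:
    """Roll out one episode and return (total reward, steps taken)."""
    obs, _info = env.reset()
    total = 0.0
    while True:
        obs, reward, terminated, truncated, _info = env.step(policy(obs))
        total += reward
        if terminated or truncated:
            return total, env.steps


# Compose a task from parts; all names and values are illustrative.
cfg = TaskCfg(
    scene="office_demo",
    robot=RobotCfg(
        name="quadruped_demo",
        controller="move_to_point",
        sensors=[SensorCfg("front_cam", "rgbd"), SensorCfg("top_lidar", "lidar")],
    ),
)
env = StubNavEnv(cfg)
total, steps = run_episode(env, policy=lambda obs: 1.0 if obs < env.goal else -1.0)
print(f"return={total}, steps={steps}")
```

Because the loop depends only on the `reset`/`step` contract, the same `run_episode` function would work with any environment that honors that contract, which is the practical benefit of a Gym-compatible interface.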

System Requirements

- Ubuntu 20.04 or 22.04

- NVIDIA GPU (RTX 2070 or higher)

- NVIDIA Omniverse Isaac Sim 4.5.0

- Python 3.10 (via Conda)

- Optional: Docker + NVIDIA Container Toolkit for containerized deployment
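
A quick preflight script can catch mismatches with this list before installation. The sketch below uses only the Python standard library. The helper names are hypothetical, and finding `nvidia-smi` on the PATH only suggests that an NVIDIA driver is installed. It does not confirm the GPU model or the Isaac Sim version.

```python
import shutil
import sys
from typing import List, Optional, Tuple


def read_ubuntu_release(path: str = "/etc/os-release") -> Optional[str]:
    """Return the Ubuntu VERSION_ID (e.g. "22.04"), or None if not Ubuntu."""
    try:
        with open(path, encoding="utf-8") as fh:
            fields = dict(
                line.strip().split("=", 1) for line in fh if "=" in line
            )
    except OSError:
        return None
    if fields.get("ID", "").strip('"') != "ubuntu":
        return None
    return fields.get("VERSION_ID", "").strip('"') or None


def check_requirements(
    python_version: Tuple[int, int],
    ubuntu_release: Optional[str],
    has_nvidia_smi: bool,
) -> List[str]:
    """Compare detected values against the requirements listed above."""
    problems = []
    if python_version != (3, 10):
        problems.append(
            f"Python 3.10 expected, found {python_version[0]}.{python_version[1]}"
        )
    if ubuntu_release not in ("20.04", "22.04"):
        problems.append(
            f"Ubuntu 20.04 or 22.04 expected, found {ubuntu_release or 'other OS'}"
        )
    if not has_nvidia_smi:
        problems.append("nvidia-smi not found on PATH; NVIDIA driver may be missing")
    return problems


problems = check_requirements(
    python_version=tuple(sys.version_info[:2]),
    ubuntu_release=read_ubuntu_release(),
    has_nvidia_smi=shutil.which("nvidia-smi") is not None,
)
for problem in problems:
    print("WARNING:", problem)
if not problems:
    print("Basic requirements look satisfied.")
```

Keeping detection (`read_ubuntu_release`, `shutil.which`) separate from the comparison logic makes `check_requirements` easy to test on any machine.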

The platform also supports teleoperation via Apple Vision Pro and motion capture, enabling human-in-the-loop data collection for behavior cloning.

Limitations and Strategic Considerations

While GRUtopia is a powerful tool, adopters should be aware of key constraints:

- Platform dependency: Requires NVIDIA Isaac Sim, which rules out machines without an NVIDIA GPU, macOS hosts, and virtual machines that lack GPU passthrough.

- Licensing: GRScenes is released under a non-commercial Creative Commons license (CC BY-NC-SA 4.0), restricting use in proprietary product development without special arrangements.

- No built-in training framework: As noted in the official TODO list, users must bring their own learning infrastructure (e.g., RLlib, Stable Baselines3, or custom trainers).

- Sim2Real gap remains: As with any simulator, real-world performance depends on effective domain adaptation. GRUtopia enables scalable training but doesn’t eliminate the need for real-world fine-tuning.

Teams should evaluate whether these constraints align with their deployment goals, especially if commercialization is planned.

Summary

GRUtopia (now InternUtopia) represents a significant leap forward in large-scale embodied AI simulation. By delivering a city-scale virtual society with rich scene diversity, socially intelligent NPCs, and standardized benchmarks, it directly addresses the data scarcity, environmental narrowness, and social simplicity that have hindered progress in general-purpose robotics. For project leaders and technical decision-makers seeking a scalable, realistic, and developer-friendly platform to accelerate robot development—particularly for urban service applications—GRUtopia offers a compelling foundation to prototype, evaluate, and iterate before stepping into the real world.