Hunyuan3D 2.0 is an open-source system developed by Tencent for generating high-resolution, textured 3D assets from images or text prompts. Its pipeline decouples geometry creation from texture synthesis, so that both professional 3D artists and non-experts can produce detailed, production-oriented 3D models without modeling each asset by hand.

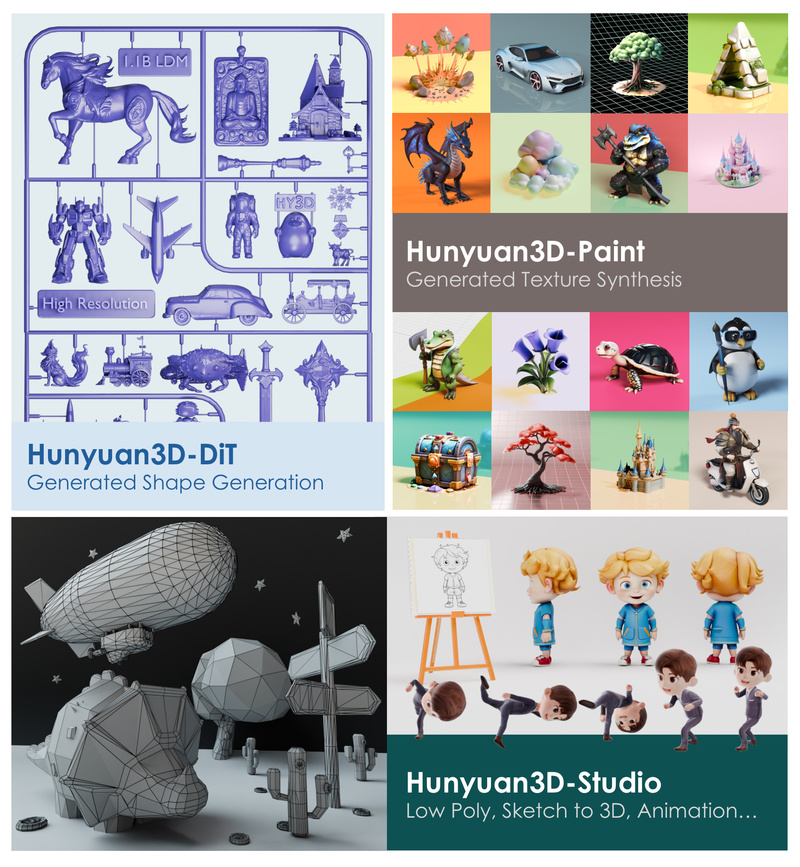

At its core, Hunyuan3D 2.0 consists of two foundation models: Hunyuan3D-DiT, a large-scale shape generation model built on a scalable flow-based diffusion transformer, and Hunyuan3D-Paint, a high-fidelity texture synthesis model that uses strong geometric priors to produce vibrant, detailed texture maps. Together, they form a two-stage generation framework: the first stage produces an untextured mesh, and the second stage textures it. Because the stages are decoupled, the texture model can be applied to AI-generated meshes and handcrafted ones alike.

Why Hunyuan3D 2.0 Stands Out

State-of-the-Art Performance Across Key Metrics

Hunyuan3D 2.0 has been evaluated against leading open-source and closed-source 3D generation systems. The project reports that it outperforms these baselines on the following quality metrics:

- CMMD: 3.193 (lower is better)

- FID_CLIP: 49.165 (lower is better)

- FID: 282.429 (lower is better)

- CLIP-score: 0.809 (higher is better)

These results reflect superior alignment between input conditions (e.g., reference images) and generated geometry, as well as richer, more coherent textures.

High-Fidelity Geometry and Texture

Unlike many 3D generative systems that produce coarse or misaligned shapes, Hunyuan3D-DiT generates detailed meshes that respect the structure and semantics of the input image. Hunyuan3D-Paint then applies 4K-capable textures that are both visually rich and consistent with the underlying geometry, which reduces common artifacts such as seam mismatches and lighting inconsistencies.

This combination ensures assets are suitable for demanding applications such as film, gaming, and immersive VR/AR environments.

Who Can Benefit—and How

For Game Developers and VFX Artists

Rapid asset creation is critical in game development and visual effects. Hunyuan3D 2.0 allows artists to generate base meshes from concept art or screenshots and instantly apply realistic materials, dramatically shortening iteration cycles. The support for handcrafted meshes also means existing models can be retextured automatically for style transfer or quality enhancement.

For E-Commerce and Product Design

Online retailers can use Hunyuan3D 2.0 to convert 2D product photos into interactive 3D displays—enhancing customer engagement without the need for expensive photogrammetry setups or manual modeling.

For Educators and Indie Creators

Thanks to its user-friendly interfaces (including a web platform and Blender plugin), even users with no prior 3D modeling experience can generate and manipulate 3D content. This democratizes access to high-quality 3D creation tools, empowering students, hobbyists, and indie developers.

Flexible and Accessible Usage Options

Hunyuan3D 2.0 is designed for seamless integration into diverse workflows:

- Code (Python): Uses a diffusers-style API for programmatic control. Shape and texture pipelines can be called with just a few lines of code.

- Gradio App: Run a local web interface for interactive image-to-3D generation with options for low-VRAM modes and Turbo variants.

- API Server: Deploy an HTTP endpoint to support scalable, remote 3D generation from any application.

- Blender Addon: Generate or texture models directly inside Blender, a widely used open-source 3D suite, so that results can be edited and animated immediately.

- Hunyuan3D Studio: A no-install web platform for quick experimentation and asset creation.

All these options ensure that users can choose the entry point that best matches their technical comfort and project needs.
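The Python route can be sketched roughly as follows. This is a minimal sketch, not the project's reference code: the `hy3dgen` import paths, the class names `Hunyuan3DDiTFlowMatchingPipeline` and `Hunyuan3DPaintPipeline`, and the `tencent/Hunyuan3D-2` model id follow the project's README at the time of writing and should be checked against the installed version. The wrapper `image_to_textured_mesh` and its parameters are illustrative names, not part of the library.

```python
MODEL_ID = "tencent/Hunyuan3D-2"  # assumed Hugging Face repo id; check the model card


def load_pipelines(model_id=MODEL_ID):
    """Load the shape and texture pipelines (needs the hy3dgen package and a GPU)."""
    # Imported lazily so the rest of this sketch runs without the package installed.
    # Import paths and class names are assumptions taken from the project README.
    from hy3dgen.shapegen import Hunyuan3DDiTFlowMatchingPipeline
    from hy3dgen.texgen import Hunyuan3DPaintPipeline

    shape_pipeline = Hunyuan3DDiTFlowMatchingPipeline.from_pretrained(model_id)
    paint_pipeline = Hunyuan3DPaintPipeline.from_pretrained(model_id)
    return shape_pipeline, paint_pipeline


def image_to_textured_mesh(image, shape_pipeline, paint_pipeline, mesh=None):
    """Run the two stages in order and return the textured mesh.

    Stage 1 (shape): image -> untextured mesh.
    Stage 2 (texture): mesh + image -> textured mesh.
    Pass an existing `mesh` (for example a handcrafted one) to skip stage 1.
    """
    if mesh is None:
        # The shape pipeline is assumed to return a list of meshes; take the first.
        mesh = shape_pipeline(image=image)[0]
    return paint_pipeline(mesh, image=image)


# Typical use, assuming the package and model weights are available:
#   shape, paint = load_pipelines()
#   textured = image_to_textured_mesh("assets/demo.png", shape, paint)
#   textured.export("demo.glb")  # trimesh-style export; assumed
```

Passing the pipelines in as arguments keeps the two stages independent, which mirrors the decoupled design described earlier: the texture stage can be called on its own for a mesh that was modeled by hand.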

Hardware and Model Variants for Every Setup

Hunyuan3D 2.0 acknowledges the diversity of user hardware and offers multiple model variants:

- Full pipeline (shape + texture): Requires ~16 GB VRAM

- Shape-only generation: Runs on ~6 GB VRAM

To further optimize for speed or resource constraints, Tencent provides:

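- Turbo models (step distillation): Fewer sampling steps, giving faster inference with minimal quality loss

- Fast models (guidance distillation): Guidance is distilled into the model, removing the extra classifier-free guidance pass and lowering the cost of each sampling step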

- Mini models (e.g., 0.6B parameters): Ideal for consumer-grade GPUs

All variants are available on Hugging Face and support Windows, macOS, and Linux—ensuring broad accessibility.
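As a rough illustration of the memory figures above, a launcher script might decide what to run from the free GPU memory. The thresholds below simply restate the approximate 16 GB and 6 GB figures; the function names and mode labels are hypothetical, and the optional probe assumes PyTorch with a CUDA device.

```python
from typing import Optional

FULL_PIPELINE_GB = 16.0  # approximate requirement: shape + texture
SHAPE_ONLY_GB = 6.0      # approximate requirement: shape generation only


def choose_mode(free_vram_gb: float) -> str:
    """Map free VRAM (in GB) to a hypothetical run-mode label."""
    if free_vram_gb >= FULL_PIPELINE_GB:
        return "shape+texture"
    if free_vram_gb >= SHAPE_ONLY_GB:
        return "shape-only"
    # Below the shape-only figure, a Mini model or a low-VRAM mode may still fit.
    return "try-mini-or-low-vram"


def free_vram_gb() -> Optional[float]:
    """Return free memory on the current CUDA device in GiB, or None if unavailable."""
    try:
        import torch  # optional dependency
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    free_bytes, _total_bytes = torch.cuda.mem_get_info()
    return free_bytes / 1024**3


vram = free_vram_gb()
print(choose_mode(vram) if vram is not None else "no CUDA device detected")
```

The published figures are approximate, so a real launcher would likely leave some headroom rather than compare against them exactly.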

A Truly Open and Evolving Ecosystem

Unlike many commercial 3D generation tools, Hunyuan3D 2.0 is fully open-sourced, including:

- Inference code

- Pretrained model weights

- Training code (released in Hunyuan3D-2.1)

- Technical reports

The project also benefits from an active community that has built extensions for ComfyUI, Windows deployment, and Kaggle notebooks. With regular updates—including PBR material support, multiview generation, and texture enhancement modules—Hunyuan3D 2.0 is not just a tool but a growing platform for 3D AI innovation.

Summary

Hunyuan3D 2.0 addresses a persistent pain point in digital content creation: the high cost and complexity of producing high-quality 3D assets. By combining diffusion-based geometry and texture generation with open code, open weights, and several user interfaces, it lets a wide range of creators, from studios to solo developers, turn ideas into 3D assets faster and at lower cost than manual modeling. Its reported benchmark results, open release, and active ecosystem make it a compelling choice for anyone exploring AI-driven 3D generation.