Imagine you need to generate a cohesive set of images—say, a film storyboard, a series of product design mockups, or a visual identity kit—where each image must align not only with a text prompt but also with the others in style, composition, and narrative. Traditional text-to-image models often fall short here, producing inconsistent results even when given the same prompt.

Enter In-Context LoRA (IC-LoRA)—a lightweight, highly adaptable method for enhancing diffusion transformer (DiT) models like FLUX to perform in-context, multi-image generation without altering their architecture. By simply concatenating condition and target images into a single composite and fine-tuning with a small dataset (as few as 20–100 examples) using Low-Rank Adaptation (LoRA), IC-LoRA unlocks high-fidelity, prompt-aligned image-set generation. This approach sidesteps the cost and complexity of full-model retraining while delivering results that better respect both visual context and textual instructions.

For technical decision-makers evaluating generative AI tools for real-world applications, IC-LoRA offers a rare balance: architectural simplicity, low data overhead, and strong performance across diverse visual tasks—all built on top of existing, widely used DiT backbones.

How It Works: Simplicity by Design

At its core, IC-LoRA rests on a key insight: modern text-to-image DiTs already possess in-context learning capabilities—they just need the right input structure and minimal tuning to activate them.

The IC-LoRA pipeline consists of three straightforward steps:

- Image Concatenation: Instead of manipulating internal attention tokens (as earlier methods did), IC-LoRA simply stitches condition and target images side-by-side or in a grid to form a single composite input image.

- Joint Natural-Language Captioning: A single caption describes the entire composite, explicitly linking the condition images to the desired outputs using structured markers like

[SCENE-1],[LEFT], or[TOP-RIGHT]. This unified description teaches the model the relationships between images. - Task-Specific LoRA Tuning: Using a small, curated dataset of such image-caption pairs, only lightweight LoRA adapters are trained—leaving the base DiT model untouched.

This design means IC-LoRA requires no modifications to the underlying diffusion transformer, making it plug-and-play compatible with models like FLUX. Training completes in just a few hours on a single 24GB GPU, drastically lowering the barrier to customization.

Key Advantages for Technical Teams

1. Task-Agnostic Architecture, Task-Specific Adaptation

IC-LoRA’s pipeline is universally applicable—whether you’re generating font variants, visual effects, or PPT templates. The same workflow applies; only the training data changes. This reduces engineering overhead and accelerates iteration across use cases.

2. High-Fidelity Multi-Image Consistency

Unlike standard text-to-image models that treat each generation independently, IC-LoRA learns to produce cohesive image sets where visual elements, styles, and semantics remain consistent across panels. This solves a major pain point in design, marketing, and entertainment workflows.

3. Minimal Data, Maximum Impact

Full fine-tuning of diffusion models often demands thousands of examples. IC-LoRA achieves strong results with 20–100 samples, making it feasible to build custom models even for niche applications where labeled data is scarce.

4. Seamless Integration with Existing Tools

Pretrained IC-LoRA models and ComfyUI workflows are already available for tasks like virtual try-on, character storyboarding, and visual identity transfer. Teams can start from these and adapt them—or train new ones using the provided AI-Toolkit configuration.

Real-World Applications That Benefit

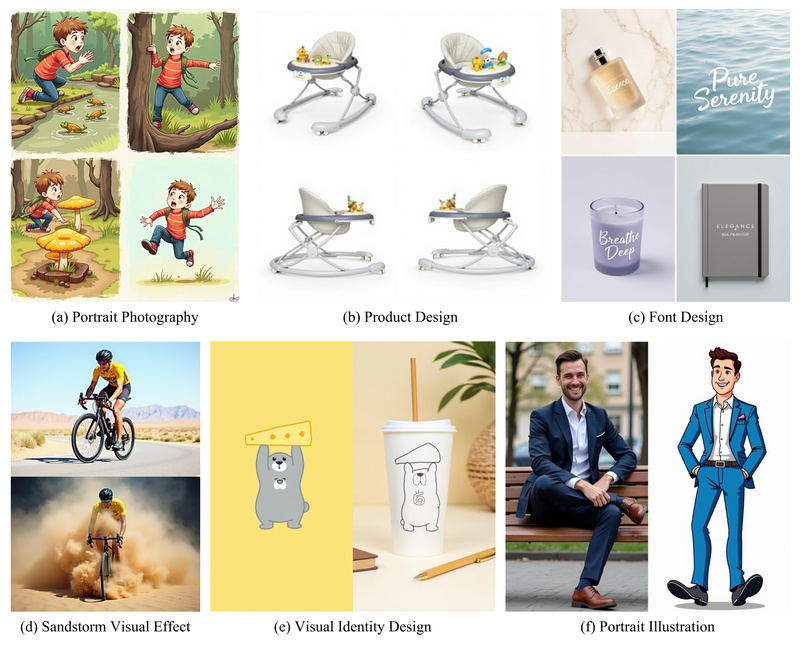

IC-LoRA shines in scenarios requiring structured, multi-panel visual outputs with strong prompt adherence. Official and community models demonstrate success in:

- Film Storyboard Generation: Sequences of scenes depicting character arcs, shot continuity, and narrative flow—all from a single prompt.

- Product & Brand Design: Generating consistent packaging, logo placements, and marketing mockups across different contexts (e.g., tote bags, storefronts).

- Virtual Try-On & Object Migration: Transferring clothing or accessories from one figure to another while preserving texture, lighting, and pose.

- Visual Effects: Applying dynamic overlays like sparklers or sandstorms consistently across before/after image pairs.

- Font & Template Design: Creating multi-panel displays of typographic styles or presentation layouts with contextual annotations.

In each case, IC-LoRA ensures that the generated set respects both the global intent (e.g., “rustic culinary workshop”) and local details (e.g., “ingredient list in bottom-left panel”), something standard models often miss.

Getting Started: From Zero to Custom Model

Adopting IC-LoRA is designed to be frictionless:

- Prepare your data: Collect 20–100 examples of input-output image pairs relevant to your task.

- Create joint captions: Use a consistent template (e.g., the movie-shots example provided) to describe the composite image as a unified scene.

- Train with AI-Toolkit: Use the sample config (

movie-shots.yml) as a base, adjust resolution for your GPU, and runpython run.py config/your-task.yml. - Deploy via ComfyUI: Load your

.safetensorsLoRA file into ComfyUI using the provided workflow templates for immediate inference.

The project provides 10 pretrained models covering diverse domains, offering both ready-to-use solutions and training blueprints.

Important Considerations

While IC-LoRA is architecturally task-agnostic, it still requires task-specific fine-tuning—you cannot use a font-design LoRA for portrait photography without retraining. Additionally:

- Hardware: Default settings assume a GPU with ≥24GB VRAM; lower resolutions can accommodate smaller cards.

- Licensing: IC-LoRA builds on FLUX, so users must comply with FLUX’s license terms.

- Data Rights: The sample datasets are for educational reference only. Commercial use requires verifying copyright status and securing necessary permissions.

Summary

In-Context LoRA redefines what’s possible with minimal-effort customization of diffusion transformers. By leveraging the latent in-context learning abilities of DiTs and combining them with LoRA’s efficiency, it delivers a practical, scalable solution for generating consistent, multi-image outputs across design, media, and creative applications. For teams seeking to move beyond one-off image generation toward structured visual storytelling or product customization—with limited data and engineering resources—IC-LoRA offers a compelling, production-ready path forward.