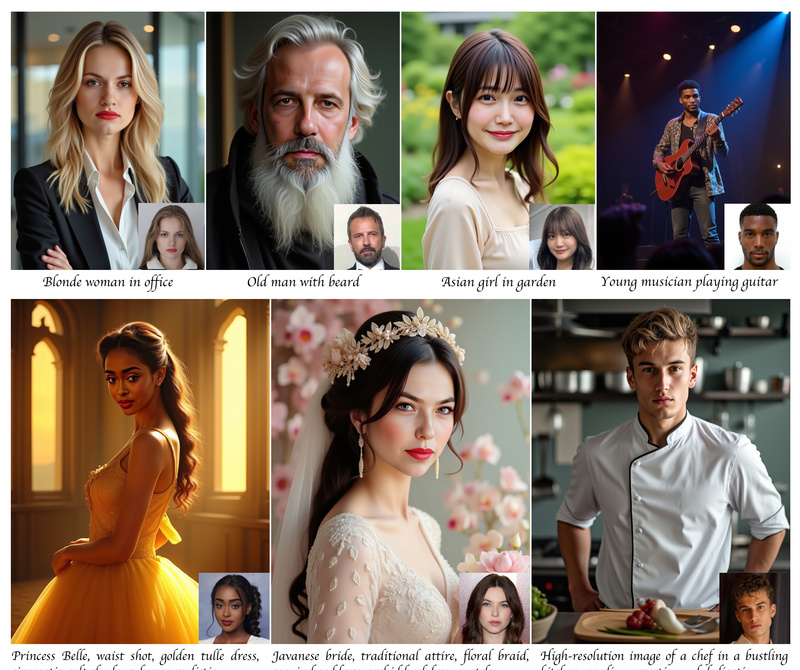

Personalized image generation has long struggled with a fundamental trade-off: how to maintain strong identity fidelity while enabling flexible, high-quality synthesis driven by natural language prompts. Existing approaches—such as IP-Adapter or PuLID-FLUX—often fall short, producing outputs with weak identity resemblance, poor alignment between text and image, or visual artifacts like “face copy-pasting.” Enter InfiniteYou (InfU), a breakthrough framework introduced by ByteDance that leverages advanced Diffusion Transformers (DiTs), specifically FLUX, to deliver state-of-the-art performance in identity-preserving photo recrafting.

Built around a novel component called InfuseNet, InfiniteYou injects identity features directly into the DiT architecture via residual connections—preserving facial identity without compromising the model’s generative power. Combined with a multi-stage training strategy using synthetic single-person-multiple-sample (SPMS) data, InfiniteYou achieves unprecedented balance across three critical dimensions: identity similarity, text-image alignment, and visual aesthetics.

Importantly, InfiniteYou is designed as a plug-and-play solution. It integrates seamlessly with popular tools like ControlNet, LoRAs, and even IP-Adapter for stylization, making it highly adaptable for real-world creative and technical workflows. Whether you’re a developer building avatar systems or a designer crafting personalized marketing visuals, InfiniteYou offers both robustness and flexibility out of the box.

Why InfiniteYou Stands Out: Key Features and Advantages

Strong Identity Preservation Without Artifacts

Unlike many prior methods that either overfit to the reference face or paste it rigidly onto generated bodies, InfiniteYou avoids the “copy-paste” effect entirely. Through InfuseNet’s residual feature injection, identity cues are woven naturally into the generative process, ensuring consistent facial structure, expression, and skin tone—even under dramatic pose, lighting, or style changes.
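To make the idea of residual feature injection more concrete, here is a minimal conceptual sketch in plain Python. It is not InfuseNet's actual implementation: the function names, the list-of-floats feature representation, and the step-gating rule are all assumptions chosen for illustration. It only shows the general pattern of adding a scaled identity residual to a block's hidden features, and of enabling that addition once a chosen fraction of the denoising steps has passed.

```python
from typing import List


def inject_identity(hidden: List[float], residual: List[float],
                    scale: float = 1.0) -> List[float]:
    """Hypothetical helper: add a scaled identity residual to hidden features."""
    if len(hidden) != len(residual):
        raise ValueError("hidden and residual must have the same length")
    # Residual connection: the base features are kept and the identity
    # signal is added on top, weighted by `scale`.
    return [h + scale * r for h, r in zip(hidden, residual)]


def injection_active(step: int, total_steps: int,
                     guidance_start: float = 0.0) -> bool:
    """Assumed gating rule: inject once step/total_steps reaches guidance_start."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    return step / total_steps >= guidance_start


if __name__ == "__main__":
    hidden = [1.0, 2.0]
    identity_residual = [0.5, -1.0]
    for step in range(4):
        if injection_active(step, total_steps=4, guidance_start=0.25):
            print(step, inject_identity(hidden, identity_residual, scale=0.5))
        else:
            print(step, hidden)  # identity injection not yet active
```

In this toy version, a smaller `scale` weakens the identity signal and a later `guidance_start` leaves the early steps free to settle composition before identity features are added.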

High Text-to-Image Alignment

One of the most common frustrations with personalized generation is when the output ignores key elements of the prompt (e.g., “cyberpunk outfit,” “sunset beach background”). InfiniteYou’s supervised fine-tuning (SFT) stage on SPMS data explicitly optimizes for prompt adherence. As a result, users get images that not only look like the subject but also accurately reflect the requested scene, clothing, or artistic style.

Dual Model Variants for Customizable Trade-offs

InfiniteYou-FLUX v1.0 ships with two pre-trained variants:

- aes_stage2: Optimized for aesthetics and prompt alignment (default).

- sim_stage1: Prioritizes maximum identity similarity, ideal when visual fidelity to the input face is paramount.

This gives users direct control over the identity–creativity balance without retraining.

Plug-and-Play Compatibility

InfiniteYou doesn’t require you to overhaul your pipeline. It works natively with:

- FLUX.1-dev and its faster sibling FLUX.1-schnell (enabling 4-step generation).

- ControlNet for pose or facial landmark guidance (via --control_image).

- LoRAs, including optional Realism and Anti-blur modules provided by the team.

- IP-Adapter, allowing simultaneous identity preservation and style transfer from reference images.

This modularity makes InfiniteYou a powerful backbone for complex, multi-concept personalization tasks.

Real-World Use Cases: Where InfiniteYou Shines

Personalized Avatars for Social and Gaming Platforms

Generate hundreds of consistent, high-quality avatars from a single selfie—ideal for virtual worlds, dating apps, or professional headshots that adapt to different themes (e.g., “astronaut,” “vintage photographer”).

Creative Photo Recrafting for Marketing and Media

Brands can recraft user-submitted photos into campaign-ready visuals (“wearing our summer collection on a yacht”) while guaranteeing the person remains recognizable—critical for authenticity in influencer collaborations or user-generated content.

Fashion and Style Try-On

Designers and retailers can visualize how real customers would look in new outfits under various conditions (e.g., “casual streetwear in Tokyo rain”), maintaining facial identity across diverse contexts without manual retouching.

AI-Assisted Portrait Editing

Photographers and editors can use InfiniteYou to reimagine portraits—changing backgrounds, lighting, or artistic styles (e.g., oil painting, anime)—while keeping the subject’s identity intact, streamlining post-production.

Problems Solved: Addressing Pain Points in Personalized Image Generation

Before InfiniteYou, practitioners faced recurring challenges:

- Inconsistent identity: Generated faces drift significantly from the input, especially under strong prompt conditioning.

- Weak prompt adherence: The model ignores descriptive text, defaulting to generic poses or outfits.

- Low visual quality: Artifacts like distorted hands, blurry faces, or unnatural textures degrade usability.

- Rigid integration: Most identity-preserving methods are closed ecosystems that resist combination with other control mechanisms.

InfiniteYou directly targets each of these issues. Its InfuseNet architecture ensures identity features are dynamically integrated, not statically overlaid. The SFT phase enforces strict text-image consistency. And its open, modular design invites interoperability—making it not just a model, but a platform.

Getting Started with InfiniteYou: Simple and Flexible Usage

InfiniteYou offers multiple access points tailored to different user preferences:

Local Inference via Command Line

A single command generates a new image:

python test.py --id_image ./assets/examples/man.jpg --prompt "A man, portrait, cinematic" --out_results_dir ./results

Key adjustable parameters include:

- --model_version: Switch between aes_stage2 (default) and sim_stage1.

- --infusenet_guidance_start: Delay identity injection (e.g., 0.1) to improve composition, especially useful with sim_stage1.

- --infusenet_conditioning_scale: Reduce slightly (e.g., 0.9) if identity feels too dominant.

Optional LoRAs (--enable_realism_lora, --enable_anti_blur_lora) offer extra refinement but aren’t required.
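When several of these options are combined, it can help to assemble the command programmatically. The sketch below builds an argument list for test.py using only the flags named in this article. The helper name `build_infu_command` and its keyword arguments are hypothetical, and treating the two LoRA flags as value-less switches is an assumption; check the repository's own help output before relying on it.

```python
import shlex
from typing import List, Optional

KNOWN_VERSIONS = ("aes_stage2", "sim_stage1")


def build_infu_command(id_image: str, prompt: str,
                       out_results_dir: str = "./results",
                       model_version: Optional[str] = None,
                       guidance_start: Optional[float] = None,
                       conditioning_scale: Optional[float] = None,
                       control_image: Optional[str] = None,
                       realism_lora: bool = False,
                       anti_blur_lora: bool = False) -> List[str]:
    """Hypothetical helper: assemble a test.py invocation as an argument list."""
    cmd = ["python", "test.py", "--id_image", id_image,
           "--prompt", prompt, "--out_results_dir", out_results_dir]
    if model_version is not None:
        if model_version not in KNOWN_VERSIONS:
            raise ValueError(f"unknown model version: {model_version}")
        cmd += ["--model_version", model_version]
    if guidance_start is not None:
        cmd += ["--infusenet_guidance_start", str(guidance_start)]
    if conditioning_scale is not None:
        cmd += ["--infusenet_conditioning_scale", str(conditioning_scale)]
    if control_image is not None:
        cmd += ["--control_image", control_image]
    # Assumption: the LoRA options are boolean switches that take no value.
    if realism_lora:
        cmd.append("--enable_realism_lora")
    if anti_blur_lora:
        cmd.append("--enable_anti_blur_lora")
    return cmd


if __name__ == "__main__":
    cmd = build_infu_command("./assets/examples/man.jpg",
                             "A man, portrait, cinematic",
                             model_version="sim_stage1",
                             guidance_start=0.1)
    # shlex.join quotes the prompt so the line can be pasted into a shell.
    print(shlex.join(cmd))
```

Returning a list rather than a single string keeps the prompt intact as one argument, so the same list can be passed to `subprocess.run(cmd, check=True)` without extra quoting.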

Interactive Demos

- Run a local Gradio UI with python app.py.

- Try the official Hugging Face demo online (GPU provided by Hugging Face).

- Use official or community ComfyUI nodes for node-based workflows (e.g., bytedance/ComfyUI_InfiniteYou).

Memory constraints? InfiniteYou supports 8-bit quantization and CPU offloading, reducing VRAM usage from 43GB down to as low as 16GB with minimal quality loss.
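As a rough planning aid, the two VRAM figures quoted above can be turned into a simple check. This is only a sketch: the function name and the three labels are hypothetical, the thresholds are the approximate numbers from this article rather than measured values, and it ignores any intermediate configurations (for example, quantization or offloading used on its own).

```python
FULL_VRAM_GB = 43      # approximate peak VRAM without memory savings (from the article)
REDUCED_VRAM_GB = 16   # approximate peak with 8-bit quantization plus CPU offloading


def memory_plan(available_gb: float) -> str:
    """Hypothetical helper: pick a coarse memory strategy from available VRAM."""
    if available_gb >= FULL_VRAM_GB:
        return "full"          # run without quantization or offloading
    if available_gb >= REDUCED_VRAM_GB:
        return "reduced"       # enable 8-bit quantization and CPU offloading
    return "insufficient"      # below the lowest figure quoted in the article


if __name__ == "__main__":
    for gb in (48, 24, 8):
        print(f"{gb} GB -> {memory_plan(gb)}")
    saving = 1 - REDUCED_VRAM_GB / FULL_VRAM_GB
    print(f"approximate VRAM reduction: {saving:.0%}")
```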

Practical Limitations and Considerations

While powerful, InfiniteYou has important boundaries to consider:

- Hardware Requirements: Full-performance bf16 inference needs a peak of ~43GB VRAM, though 8-bit quantization and CPU offloading make it accessible on 16GB+ GPUs.

- Licensing: The model is released under CC BY-NC 4.0—for non-commercial academic research only. Commercial deployment requires separate permission.

- Prompt Sensitivity: For reliable gender or age representation, include explicit terms in the prompt (e.g., “a woman in her 30s”). The model responds best to clear, descriptive language.

- Base Model Dependency: Currently built on FLUX.1-dev; migration to other DiTs would require re-engineering InfuseNet integration.

Users should also adhere to ethical guidelines—avoid generating misleading, harmful, or non-consensual imagery.

Summary

InfiniteYou redefines what’s possible in identity-preserving image generation. By combining a novel InfuseNet architecture, multi-stage training, and true plug-and-play flexibility, it solves long-standing issues of identity drift, prompt misalignment, and visual degradation. Whether you’re building next-gen avatar systems, creative editing tools, or personalized content engines, InfiniteYou offers a robust, high-fidelity foundation that respects both identity and imagination. With accessible inference options and strong community support (including ComfyUI integrations), it’s positioned to become a go-to solution for responsible, controllable, and visually stunning personalized generation.