Time series forecasting is a foundational task across finance, energy, logistics, and digital platforms—yet traditional Transformer-based models often struggle with real-world complexity. They suffer from performance degradation as lookback windows grow, produce ambiguous attention maps across mixed-variable tokens, and lack flexibility when the set of variables changes. Enter iTransformer: a refreshingly simple yet powerful architectural inversion of the standard Transformer that delivers state-of-the-art results on large-scale multivariate forecasting without modifying any core components.

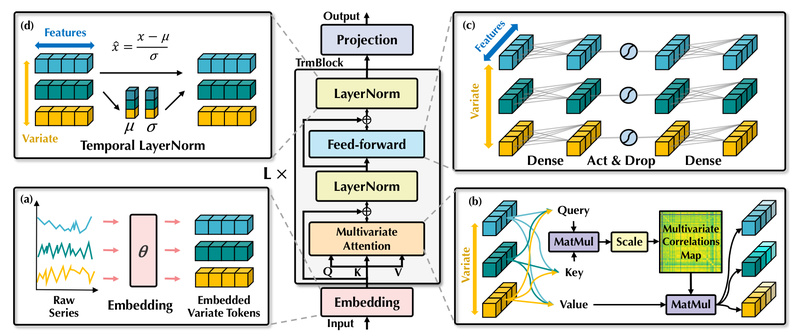

Unlike conventional approaches that treat each time step as a token (fusing all variables at that moment), iTransformer flips the perspective: it treats each variable as a token and embeds its entire time history. This subtle but critical shift enables the model to learn richer, variable-specific temporal representations while using attention to explicitly capture inter-variable relationships. The result? A forecasting backbone that scales efficiently, generalizes across variable sets, and integrates seamlessly into modern ML workflows—all while retaining the familiar Transformer structure.
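Here is a minimal plain-Python sketch of the inversion, using a toy random window rather than the reference implementation. It contrasts the two tokenizations of a (time × variables) lookback matrix and then embeds each variate token with one shared linear map from length T to width D. The function names, and the single linear layer standing in for the embedding network, are illustrative assumptions.

```python
import random


def temporal_tokens(x):
    """Conventional tokenization: one token per time step holding all N variables."""
    return [list(row) for row in x]  # T tokens, each of length N


def variate_tokens(x):
    """Inverted tokenization: one token per variable holding its whole lookback window."""
    return [list(col) for col in zip(*x)]  # N tokens, each of length T


def embed(tokens, weight, bias):
    """Apply one shared linear map R^T -> R^D to every variate token."""
    return [[sum(w * v for w, v in zip(w_row, tok)) + b for w_row, b in zip(weight, bias)]
            for tok in tokens]


T, N, D = 6, 3, 4  # lookback length, number of variables, model width
rng = random.Random(0)
x = [[rng.gauss(0, 1) for _ in range(N)] for _ in range(T)]         # toy T x N window
weight = [[rng.gauss(0, 0.1) for _ in range(T)] for _ in range(D)]  # D x T, shared by all variables
bias = [0.0] * D

h = embed(variate_tokens(x), weight, bias)
print(len(temporal_tokens(x)), len(h))  # 6 3: six time-step tokens vs three variate tokens
```

Because the embedding weights have shape D × T, they are reused for every variable; nothing in them depends on how many variables there are.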

Why the Inversion Matters

Traditional Transformers in time series modeling embed all variables observed at a single timestamp (e.g., temperature, humidity, wind speed) into one token. While intuitive, this design conflates distinct physical signals and forces the attention mechanism to disentangle them, a task it wasn't optimized for. As the number of variables or lookback steps increases, performance plateaus or declines, and because every time step becomes a token, the cost of self-attention grows quadratically with the lookback length.

iTransformer rethinks this assignment of roles:

- Attention operates over variables, not time steps—allowing it to learn interpretable correlations (e.g., how traffic flow in one zone affects another).

- Feed-forward networks process full time series per variable, capturing nonlinear temporal dynamics independently for each signal.
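The division of labor in the two bullets above can be sketched as one simplified encoder layer. This is a hypothetical plain-Python illustration with random weights: a single attention head and residual connections, with layer normalization and the multi-head split omitted, so it shows the idea rather than the official layer. The attention map it returns is N × N, one row and one column per variable.

```python
import math
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def variate_attention(tokens, wq, wk, wv):
    """Single-head self-attention in which each of the N tokens is a whole variable."""
    d = len(tokens[0])
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    # attn[i][j]: how much variable i attends to variable j (an N x N map)
    attn = [softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]) for qi in q]
    out = [[sum(attn[i][j] * v[j][c] for j in range(len(tokens))) for c in range(d)]
           for i in range(len(tokens))]
    return out, attn


def feed_forward(token, w1, w2):
    """Two-layer ReLU MLP applied to one variate token independently of the others."""
    hidden = [max(0.0, z) for z in matvec(w1, token)]
    return matvec(w2, hidden)


def inverted_layer(tokens, params):
    """Attention mixes information across variables; the FFN then refines each one separately."""
    mixed, attn = variate_attention(tokens, params["wq"], params["wk"], params["wv"])
    tokens = [[a + b for a, b in zip(t, m)] for t, m in zip(tokens, mixed)]  # residual
    tokens = [[a + b for a, b in zip(t, feed_forward(t, params["w1"], params["w2"]))]
              for t in tokens]  # residual
    return tokens, attn


def random_params(d, hidden, seed=0):
    """Random weights for the sketch; a real model would learn these."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.gauss(0, 0.2) for _ in range(cols)] for _ in range(rows)]

    return {"wq": mat(d, d), "wk": mat(d, d), "wv": mat(d, d),
            "w1": mat(hidden, d), "w2": mat(d, hidden)}


N, D = 3, 4  # three variables, each already embedded to width 4
rng = random.Random(1)
tokens = [[rng.gauss(0, 1) for _ in range(D)] for _ in range(N)]
out, attn = inverted_layer(tokens, random_params(D, hidden=8))
print(len(out), len(attn), len(attn[0]))  # 3 3 3
```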

This inversion aligns the Transformer’s strengths with the structure of multivariate time series data, solving key pain points without adding new components or hyperparameters.

Key Strengths That Set iTransformer Apart

1. State-of-the-Art Performance on Real-World Benchmarks

iTransformer consistently outperforms prior models on challenging datasets with hundreds of variables, such as traffic flow, electricity consumption, and Alipay's transaction load prediction. It achieves lower MSE and MAE than the compared baselines on these benchmarks, evidence of its robustness in complex, noisy environments.
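For reference, MSE and MAE on a multivariate forecast are usually averaged over every (time step, variable) cell. The sketch below assumes that convention and uses made-up toy numbers; benchmark suites may also compute the metrics on normalized data, so check the evaluation code before comparing figures across papers.

```python
def mse_mae(y_true, y_pred):
    """Return (MSE, MAE) averaged over every (time step, variable) cell of a forecast."""
    errors = [p - t for row_t, row_p in zip(y_true, y_pred) for t, p in zip(row_t, row_p)]
    mse = sum(e * e for e in errors) / len(errors)
    mae = sum(abs(e) for e in errors) / len(errors)
    return mse, mae


y_true = [[1.0, 2.0], [3.0, 4.0]]  # toy horizon: 2 steps x 2 variables
y_pred = [[1.5, 2.0], [2.0, 4.0]]
print(mse_mae(y_true, y_pred))  # (0.3125, 0.375)
```

MSE squares each error, so the single miss of 1.0 dominates it, while MAE weights all errors linearly; reporting both gives a fuller picture.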

2. Scales Gracefully with Long Lookback Windows

Where standard Transformers stop improving, or even degrade, as the lookback window is extended beyond roughly 96 time steps, iTransformer's accuracy holds steady or improves. This makes it well suited to applications requiring deep historical context, like demand forecasting or anomaly detection in infrastructure monitoring.
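One reason longer windows stay affordable: with variate tokens, the lookback length T only changes the input width of the embedding, not the number of tokens attention sees. The back-of-the-envelope sketch below counts query-key scores per single-head attention layer under both tokenizations; the variable count and model width are illustrative assumptions. It also shows the trade-off: with many variables, N² can exceed T² at short windows, so inverted attention is not always cheaper, only independent of T.

```python
def attention_scores(num_tokens):
    """Query-key dot products one single-head self-attention layer computes."""
    return num_tokens * num_tokens


def embedding_macs(lookback, num_vars, d_model):
    """Multiply-adds for a linear T -> D embedding applied to every variate token."""
    return lookback * d_model * num_vars


NUM_VARS, D_MODEL = 100, 512  # illustrative sizes only
for lookback in (96, 336, 720):
    temporal = attention_scores(lookback)  # temporal tokens: grows as T^2
    inverted = attention_scores(NUM_VARS)  # variate tokens: fixed as T grows
    print(lookback, temporal, inverted, embedding_macs(lookback, NUM_VARS, D_MODEL))
```

In the inverted case, going from 96 to 720 steps leaves the attention count unchanged and scales only the embedding cost linearly.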

3. Zero-Shot Generalization to Unseen Variables

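Train on a subset of variables (e.g., 80 sensors in a network), and iTransformer can forecast new, previously unseen variables at inference time without retraining. This works because the embedding, feed-forward, and projection weights are shared across all variate tokens and attention accepts any number of tokens, so nothing in the model is tied to a fixed variable count. This flexibility is invaluable in dynamic systems where sensors or metrics change over time.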
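The shape argument behind variate generalization can be checked with an untrained toy forecaster. In this hypothetical sketch, attention uses the embeddings directly as queries, keys, and values (a simplification of learned projections), and every weight matrix is sized by T, D, or the horizon S, never by N, so the same parameters run on 3 or 5 variables. It demonstrates shape compatibility only; how accurate forecasts for unseen variables are depends on training.

```python
import math
import random


def linear(weight, vec):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weight]


def softmax(scores):
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forecast(x, params):
    """Toy inverted forecaster: maps a T x N window to an S x N forecast.

    Every weight acts on one variate token at a time, so no shape depends on N.
    """
    tokens = [linear(params["embed"], list(col)) for col in zip(*x)]  # N tokens of width D
    d = len(tokens[0])
    mixed = []
    for q in tokens:  # parameter-free dot-product attention across variables
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens])
        mixed.append([sum(w[j] * tokens[j][c] for j in range(len(tokens))) for c in range(d)])
    per_var = [linear(params["head"], h) for h in mixed]  # N forecasts of length S
    return [list(step) for step in zip(*per_var)]         # transpose back to S x N


T, D, S = 8, 4, 2  # lookback, width, horizon
rng = random.Random(0)
params = {
    "embed": [[rng.gauss(0, 0.3) for _ in range(T)] for _ in range(D)],  # D x T
    "head": [[rng.gauss(0, 0.3) for _ in range(D)] for _ in range(S)],   # S x D
}
seen = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(T)]    # window with 3 variables
unseen = [[rng.gauss(0, 1) for _ in range(5)] for _ in range(T)]  # same weights, 5 variables
print(len(forecast(seen, params)[0]), len(forecast(unseen, params)[0]))  # 3 5
```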

4. Plug-and-Play Compatibility with Modern Tooling

The model integrates with GluonTS (including probabilistic heads and static covariates), NeuralForecast, and Time-Series-Library. A lightweight standalone implementation by lucidrains can be installed with `pip install iTransformer` for immediate use in new projects. Support for FlashAttention further accelerates training and inference.

Ideal Use Cases

iTransformer excels in scenarios involving:

- High-dimensional multivariate signals: e.g., smart grid monitoring (100+ sensors), financial market dashboards (dozens of correlated assets), or e-commerce platform metrics (user activity, inventory, payment flows).

- Dynamic variable sets: When your system adds or removes data streams (e.g., new IoT devices), iTransformer’s variate-token design accommodates these changes without architecture overhaul.

- Long-history forecasting: Applications needing context from weeks or months of data—such as energy load planning or supply chain risk modeling—benefit from its stable scaling.

- Interpretable relationships: The inverted attention maps show how strongly each variable attends to every other variable, which can hint at cross-variable dependencies and aid diagnostics and stakeholder trust.
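Reading an attention map for diagnostics can be as simple as finding, for each variable, the other variable it attends to most. The matrix below is a made-up example rather than model output, and the helper name is hypothetical. In practice attention differs by layer, head, and input window, so averaging maps over many windows is a sensible precaution, and high attention indicates association, not causation.

```python
def strongest_links(attn, names, exclude_self=True):
    """For each variable (row of the map), return the variable it attends to most."""
    links = {}
    for i, row in enumerate(attn):
        candidates = [(w, j) for j, w in enumerate(row) if not (exclude_self and i == j)]
        weight, j = max(candidates)
        links[names[i]] = (names[j], weight)
    return links


names = ["zone_a", "zone_b", "zone_c"]  # hypothetical traffic sensors
attn = [                                # made-up, row-normalized attention map
    [0.50, 0.40, 0.10],
    [0.15, 0.60, 0.25],
    [0.05, 0.70, 0.25],
]
for src, (dst, w) in strongest_links(attn, names).items():
    print(f"{src} attends most to {dst} ({w:.2f})")
```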

Notably, iTransformer is not designed for univariate forecasting. Its power emerges when multiple interdependent time series must be modeled jointly.

How to Get Started

Getting hands-on with iTransformer is straightforward:

- Install: `pip install iTransformer`

- Use the pre-built scripts in the official repository for common tasks:

  - Multivariate forecasting (e.g., `./scripts/multivariate_forecasting/Traffic/iTransformer.sh`)

  - Testing generalization to unseen variables (`./scripts/variate_generalization/ECL/iTransformer.sh`)

  - Benchmarking with extended lookback windows (`./scripts/increasing_lookback/Traffic/iTransformer.sh`)

- Leverage ecosystem integrations:

  - Use the GluonTS wrapper for probabilistic forecasting.

  - Deploy via NeuralForecast for production-ready pipelines.

The official repository includes all experiment scripts from the original paper, so the reported results can be reproduced.

Limitations and Considerations

While powerful, iTransformer has clear boundaries:

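- Multivariate-only: It assumes the input consists of multiple aligned time series. With a single variable, attention has only one token to attend to, so the model effectively reduces to a per-series MLP and univariate tasks gain little from the inverted design.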

- Structured input required: Data must be formatted as a (time × variables) matrix—standard for most forecasting libraries, but preprocessing is still needed.

- PyTorch dependency: The implementation relies on PyTorch; TensorFlow users would need to port it.

- Not a silver bullet: In highly regulated or physics-constrained domains (e.g., clinical forecasting), domain-specific models may still outperform general architectures.
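The (time × variables) requirement usually means pivoting long-format records into a matrix and then slicing it into lookback/horizon pairs. A minimal standard-library sketch follows, assuming regularly sampled, sortable timestamps; the helper names and toy records are hypothetical, and gaps are left as None so they must be filled or dropped explicitly before training.

```python
def to_matrix(records):
    """Pivot (timestamp, variable, value) records into a time x variables matrix.

    Missing cells stay None; a repeated (timestamp, variable) pair keeps the last value.
    """
    times = sorted({t for t, _, _ in records})
    names = sorted({v for _, v, _ in records})
    row_of = {t: i for i, t in enumerate(times)}
    col_of = {v: j for j, v in enumerate(names)}
    matrix = [[None] * len(names) for _ in times]
    for t, v, value in records:
        matrix[row_of[t]][col_of[v]] = value
    return times, names, matrix


def windows(matrix, lookback, horizon):
    """Yield (input, target) pairs: lookback rows in, the next horizon rows out."""
    for start in range(len(matrix) - lookback - horizon + 1):
        yield (matrix[start:start + lookback],
               matrix[start + lookback:start + lookback + horizon])


records = [  # toy long-format data: (timestamp, variable, value)
    (0, "load", 1.0), (0, "temp", 20.0),
    (1, "load", 1.2), (1, "temp", 21.0),
    (2, "load", 0.9), (2, "temp", 19.5),
    (3, "load", 1.1), (3, "temp", 20.5),
]
times, names, matrix = to_matrix(records)
pairs = list(windows(matrix, lookback=2, horizon=1))
print(names, len(pairs))  # ['load', 'temp'] 2
```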

Summary

iTransformer reframes a well-known architecture to solve real, persistent challenges in time series forecasting: scalability with long histories, brittleness to variable changes, and opaque decision-making. By inverting how time and variables are processed, without altering Transformer internals, it achieves better performance, generalization, and interpretability. For engineers and technical decision-makers building forecasting systems in dynamic, multivariate environments, iTransformer offers a robust, maintainable backbone that is ready to deploy today.