If you’re evaluating tools for building intelligent agents that combine planning and learning—whether for games, robotics, scientific discovery, or general sequential decision-making—you’ve likely encountered the power of Monte Carlo Tree Search (MCTS) fused with deep reinforcement learning (RL). Systems like AlphaZero and MuZero demonstrated breakthrough performance, but their implementations are often fragmented, heavyweight, or hard to adapt.

Enter LightZero: an open-source, PyTorch-based algorithm toolkit that unifies MCTS and deep RL in a lightweight, efficient, and highly readable codebase. Developed by OpenDILab, LightZero isn’t just another research repo—it’s a standardized framework designed to accelerate both academic exploration and practical prototyping. By integrating a wide family of MCTS+RL algorithms under one roof—from AlphaZero to the latest ScaleZero—LightZero enables fair benchmarking, rapid iteration, and cross-domain deployment with minimal overhead.

For technical decision-makers, researchers, and engineers, LightZero solves a critical pain point: the lack of a cohesive, well-documented, and extensible platform for experimenting with planning-based RL across diverse tasks and action spaces.

Why LightZero Stands Out

Lightweight, Yet Comprehensive

LightZero packs support for nearly a dozen major MCTS-based algorithms—including MuZero, EfficientZero, Gumbel MuZero, Stochastic MuZero, UniZero, ReZero, and the newly introduced ScaleZero—into a single, lean codebase. Unlike monolithic frameworks, it avoids unnecessary abstraction layers. Each algorithm is implemented with clarity, making it easy to inspect, modify, or extend.

This design is ideal for teams that need to prototype multiple approaches without maintaining separate codebases or deciphering inconsistent architectures.

Engineered for Efficiency

MCTS can be computationally expensive, especially during tree expansion and simulation. LightZero addresses this with mixed heterogeneous computing: the performance-critical tree search is implemented in C++ (ctree), and a Python counterpart (ptree) sits alongside it for flexibility and easier inspection. This hybrid approach delivers speed where it matters most while preserving developer ergonomics.
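To make the cost of tree traversal concrete, here is a minimal sketch of the selection and backup steps that an AlphaZero-style search repeats on every simulation. It is a generic PUCT illustration, not LightZero's ctree or ptree code; the `Node` fields, the `c_puct` default, and the single-player, undiscounted backup are all assumptions chosen for brevity.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """One search-tree node; field names are illustrative, not LightZero's."""
    prior: float                                   # policy prior P(s, a)
    visit_count: int = 0                           # N(s, a)
    value_sum: float = 0.0                         # sum of backed-up values
    children: dict = field(default_factory=dict)   # action -> Node

    def value(self) -> float:
        # Mean backed-up value Q(s, a); unvisited nodes default to 0.
        return self.value_sum / self.visit_count if self.visit_count else 0.0


def puct_score(parent: Node, child: Node, c_puct: float = 1.25) -> float:
    """Q + U, where U favours high-prior, rarely visited children."""
    exploration = (
        c_puct * child.prior * math.sqrt(parent.visit_count) / (1 + child.visit_count)
    )
    return child.value() + exploration


def select_child(parent: Node, c_puct: float = 1.25):
    """Return the (action, child) pair with the highest PUCT score."""
    return max(
        parent.children.items(),
        key=lambda item: puct_score(parent, item[1], c_puct),
    )


def backup(path, leaf_value: float) -> None:
    """Add a leaf value to every node on the visited path (no discount, one player)."""
    for node in path:
        node.visit_count += 1
        node.value_sum += leaf_value
```

Every simulation walks from the root to a leaf with `select_child` and then calls `backup`, so these small functions run a very large number of times per move. That per-call overhead is the kind of hot loop a compiled implementation is meant to speed up.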

Built for Understanding and Customization

Documentation is often an afterthought in research code. Not here. LightZero provides:

- Detailed algorithm overviews with principle diagrams

- Function call graphs and network architecture visuals

- Step-by-step guides for customizing environments and algorithms

- Clear configuration templates and logging systems

This lowers the barrier to entry for students, new team members, or researchers transitioning from pure RL to planning-augmented methods.

Breadth of Supported Algorithms and Environments

LightZero doesn’t just support a narrow set of toy problems. It spans a remarkable range of domains:

- Classic board games: TicTacToe, Connect4, Gomoku, Chess, Go

- Discrete control: Atari games (Pong, Ms. Pac-Man, etc.)

- Classic and continuous control: CartPole, Pendulum, LunarLander, BipedalWalker, and MuJoCo benchmarks (Hopper, Walker2d)

- Structured reasoning: 2048, MiniGrid, BSuite, Jericho (text-based games)

- Emerging domains: MetaDrive (autonomous driving simulation)

Supported algorithm–environment pairs are benchmarked under a common setup, enabling apples-to-apples comparisons. For instance, you can run MuZero, EfficientZero, and UniZero on the same Atari task using identical evaluation protocols, something rarely possible across disparate GitHub repos.

Real-World Applications and Use Cases

LightZero shines in scenarios where planning, sample efficiency, and generalization matter:

- Academic research: Test hypotheses about MCTS exploration, world model design, or multi-task transfer without rebuilding infrastructure.

- Algorithm prototyping: Quickly validate a new variant of MuZero by subclassing existing policies or models.

- Education and teaching: Use LightZero’s visual documentation to illustrate how MCTS interacts with learned dynamics and reward models.

- Scientific discovery: Extend the framework to domains like molecule design or symbolic reasoning, areas where search-guided RL has already shown promise (e.g., AlphaTensor’s AlphaZero-style search for faster matrix-multiplication algorithms).

Crucially, LightZero handles both discrete and continuous action spaces, making it applicable beyond games to robotics, industrial control, and more.
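The interaction between search and learned dynamics and reward models mentioned in the list above can be sketched as a MuZero-style latent rollout. This is a toy illustration under stated assumptions: `represent`, `dynamics`, and `predict` stand in for the three learned networks, the toy functions below are hand-written rather than trained, and none of the names come from LightZero's API.

```python
from typing import Callable, List, Tuple

Latent = Tuple[float, ...]


def unroll(
    observation: Latent,
    actions: List[int],
    represent: Callable[[Latent], Latent],
    dynamics: Callable[[Latent, int], Tuple[Latent, float]],
    predict: Callable[[Latent], Tuple[List[float], float]],
    discount: float = 0.997,
) -> float:
    """Discounted sum of predicted rewards plus the bootstrapped value at the end."""
    latent = represent(observation)        # encode the real observation once
    total, scale = 0.0, 1.0
    for action in actions:
        # Step the learned model instead of the real environment.
        latent, reward = dynamics(latent, action)
        total += scale * reward
        scale *= discount
    _, value = predict(latent)             # policy logits are unused in this sketch
    return total + scale * value


# Hand-written stand-ins for the learned networks (hypothetical, for illustration).
def toy_represent(obs: Latent) -> Latent:
    return obs


def toy_dynamics(latent: Latent, action: int) -> Tuple[Latent, float]:
    return (latent[0] + action,), 1.0      # every step earns reward 1.0


def toy_predict(latent: Latent) -> Tuple[List[float], float]:
    return [0.5, 0.5], 10.0                # uniform policy, constant value


estimate = unroll((0.0,), [1, 1], toy_represent, toy_dynamics, toy_predict, discount=0.5)
```

In a full search, a rollout like this happens one edge at a time as the tree is expanded, and the resulting estimate is what gets backed up along the path. The key point is that no environment step is taken during planning.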

Getting Started Is Straightforward

Installation is simple for Linux and macOS users:

    git clone https://github.com/opendilab/LightZero.git
    cd LightZero
    pip3 install -e .

Launch a classic experiment with a single command:

    # Train MuZero on CartPole
    python3 -u zoo/classic_control/cartpole/config/cartpole_muzero_config.py

    # Train UniZero on Pong
    python3 -u zoo/atari/config/atari_unizero_segment_config.py

    # Train on TicTacToe
    python3 -u zoo/board_games/tictactoe/config/tictactoe_muzero_bot_mode_config.py

Docker support is also provided for reproducible environments. Note: Windows isn’t currently supported, but Linux/macOS cover most research and development workflows.

Pushing the Frontier: Multi-Task Efficiency with ScaleZero

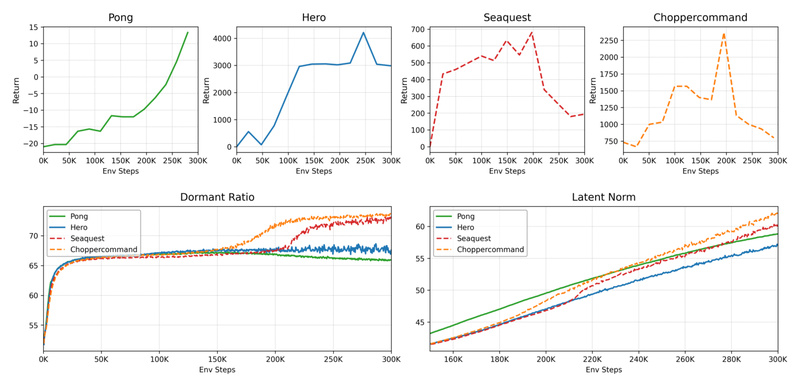

One of LightZero’s most compelling recent advances is ScaleZero, introduced in the paper “One Model for All Tasks: Leveraging Efficient World Models in Multi-Task Planning.”

Traditional multi-task world models suffer from gradient conflicts and loss of plasticity when trained across heterogeneous environments (e.g., Atari + DeepMind Control + text games). ScaleZero tackles this with two innovations:

- Mixture-of-Experts (MoE) architecture: Routes task-specific inputs to specialized subnetworks, reducing interference.

- Dynamic Parameter Scaling (DPS): Progressively adds LoRA adapters based on task learning progress, enabling adaptive capacity allocation.

In evaluations across Atari, DMC, and Jericho, ScaleZero matches specialized single-task agents, and with DPS enabled it does so using only 71.5% of the environment interactions. This makes it a strong candidate for teams seeking a single, unified model that generalizes across diverse decision-making contexts.
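The two mechanisms named above can be sketched in a few lines of dependency-free Python. This is a rough illustration, not ScaleZero's implementation: the top-1 gate, the `LoRALinear` class, and the manual `add_adapter` call are assumptions, and the rule that decides when to add an adapter based on learning progress is not modelled here.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(logits: Vector) -> Vector:
    peak = max(logits)                       # subtract the max for stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top1(gate: Matrix, x: Vector) -> int:
    """Mixture-of-Experts gate: pick the expert with the largest gate probability."""
    probs = softmax(matvec(gate, x))
    return max(range(len(probs)), key=probs.__getitem__)


class LoRALinear:
    """Frozen base weight W plus a growable list of low-rank (B, A) adapters."""

    def __init__(self, weight: Matrix) -> None:
        self.weight = weight
        self.adapters: List[Tuple[Matrix, Matrix]] = []

    def add_adapter(self, b: Matrix, a: Matrix) -> None:
        # Each new stage adds capacity without touching W or earlier adapters.
        self.adapters.append((b, a))

    def forward(self, x: Vector) -> Vector:
        out = matvec(self.weight, x)         # W x
        for b, a in self.adapters:
            delta = matvec(b, matvec(a, x))  # B (A x), a low-rank update
            out = [o + d for o, d in zip(out, delta)]
        return out


layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]])
before = layer.forward([1.0, 2.0])
layer.add_adapter(b=[[1.0], [0.0]], a=[[0.0, 1.0]])   # one rank-1 adapter
after = layer.forward([1.0, 2.0])
expert = route_top1([[1.0, 0.0], [0.0, 1.0]], [0.2, 0.9])
```

Because the base weight stays fixed and each adapter is low rank, capacity grows in small increments. Production MoE layers typically combine several experts with weighted top-k routing rather than the hard top-1 choice shown here.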

Limitations to Consider

While LightZero is well suited to research and prototyping, be aware of:

- No official Windows support (Linux/macOS only)

- Build and runtime dependencies: Requires a C++ toolchain (to compile the ctree components) and PyTorch

- Work-in-progress integrations: Some algorithm–environment pairs are still under development (marked in the official table)

- Not a turnkey deployment system: Operationalizing agents for real-time inference may require additional engineering

That said, for its target audience—researchers, technical evaluators, and R&D teams—these are manageable trade-offs for the flexibility and insight LightZero provides.

Summary

LightZero delivers what the MCTS+RL community has long needed: a standardized, efficient, and transparent framework that bridges theory and practice. By unifying foundational and cutting-edge algorithms, from AlphaZero to ScaleZero, across a wide range of environments, it lets technical decision-makers explore, compare, and prototype planning-based agents on a common footing. Whether you’re benchmarking new methods, teaching advanced RL concepts, or building agents for complex sequential tasks, LightZero offers a solid foundation.