Imagine an AI agent that doesn’t just talk about using a computer—it actually uses one. That’s the promise of LiteCUA, a lightweight computer-use agent built on the AIOS (AI Agent Operating System) platform. Unlike traditional agents that struggle to map natural language commands to complex, low-level operating system actions, LiteCUA reimagines the computer itself as a semantic environment that large language models (LLMs) can intuitively understand.

At its core, LiteCUA addresses a fundamental bottleneck in agentic AI: the semantic disconnect between how LLMs conceptualize tasks and how real-world digital interfaces are structured. By transforming the computer into an MCP (Model Context Protocol) server, LiteCUA abstracts raw OS states and actions into high-level, context-rich representations that align with an LLM’s reasoning capabilities. The result? A lean, efficient agent that achieves a 14.66% success rate on the OSWorld benchmark—outperforming several heavier, more specialized frameworks despite its minimalist design.

For developers, researchers, and product teams exploring automation, desktop agents, or OS-level AI interaction, LiteCUA offers a pragmatic path forward: safe, contextualized, and surprisingly effective.

Why the Semantic Disconnect Matters

Most computer-use agents today rely on brittle mappings between text prompts and GUI or command-line operations. An LLM might “know” how to draft an email, but it doesn’t inherently understand what clicking a “Send” button means in terms of pixel coordinates, DOM elements, or system calls. This mismatch forces agents to either:

- Depend on fragile screen-scraping or scripting tools, or

- Offload complex decision-making to the model, overwhelming it with low-level noise.

LiteCUA flips this paradigm. Instead of expecting the LLM to decode raw interface mechanics, it contextualizes the computing environment so the LLM reasons at the intent level—e.g., “open Chrome and navigate to GitHub”—while the MCP server handles the translation into concrete OS actions. This decoupling of reasoning complexity from interface complexity is what enables LiteCUA’s surprising performance with minimal architecture.
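To make the decoupling concrete, here is a minimal sketch of how an intent-level tool call might be expanded into low-level steps on the server side. Everything in it is an illustrative assumption rather than LiteCUA's actual code: the `LowLevelAction` type, the `open_url_in_browser` tool, the `translate` function, and the specific keystrokes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LowLevelAction:
    """One concrete OS-level step (hypothetical shape, for illustration only)."""
    kind: str      # e.g. "launch", "hotkey", "type", "key"
    payload: str


def open_url_in_browser(browser: str, url: str) -> list[LowLevelAction]:
    """Expand one intent-level tool call into a fixed sequence of low-level steps."""
    return [
        LowLevelAction("launch", browser),
        LowLevelAction("hotkey", "ctrl+l"),  # assumed shortcut to focus the address bar
        LowLevelAction("type", url),
        LowLevelAction("key", "enter"),
    ]


# Registry of intent-level tools the model may call by name.
TOOLS = {"open_url_in_browser": open_url_in_browser}


def translate(tool_name: str, **arguments: str) -> list[LowLevelAction]:
    """Look up the named tool and return the low-level plan it expands to."""
    if tool_name not in TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    return TOOLS[tool_name](**arguments)


# The model only has to emit the tool name and its arguments.
plan = translate("open_url_in_browser", browser="chrome", url="https://github.com")
for step in plan:
    print(step.kind, step.payload)
```

The point of the design is that the model's output stays small and semantic (a tool name plus arguments), while interface details such as shortcuts and keystrokes live in one place that can change without retraining or re-prompting the model.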

Key Strengths of LiteCUA

1. Lightweight Yet High-Performing

LiteCUA proves that simplicity doesn’t mean weakness. Its streamlined design avoids over-engineering while delivering competitive results on real-world benchmarks like OSWorld—a dataset that tests agents on actual desktop tasks across browsers, terminals, and applications.

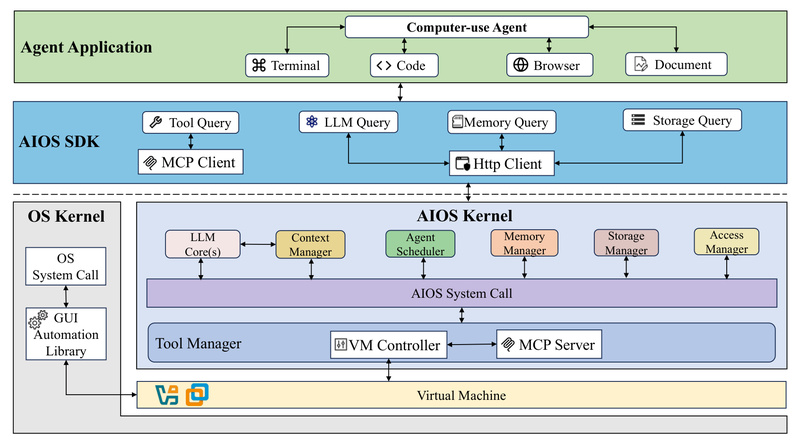

2. Built on AIOS’s Contextualized Environment

As part of the AIOS 1.0 platform, LiteCUA inherits a robust foundation for agent development, including memory management, tool orchestration, and LLM integration. But its real edge lies in the part of AIOS’s architecture that is specialized for computer use, which embeds an MCP server directly into the system stack.

3. Safe, Sandboxed Execution

Through integration with a virtual machine (VM) controller, LiteCUA operates in a secure, isolated environment. This sandbox prevents unintended system changes during experimentation—critical for both research and development workflows.
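The containment idea can be illustrated with a toy snapshot-and-restore wrapper. This is a sketch under stated assumptions, not the AIOS VM controller: `ToyVM` is an in-memory stand-in whose "disk" is a dictionary, and the `sandboxed` helper and its always-roll-back policy are hypothetical choices made for the example.

```python
import copy
from contextlib import contextmanager


class ToyVM:
    """In-memory stand-in for a VM: its 'disk' is a dict of path -> content."""

    def __init__(self):
        self.files = {"/home/user/notes.txt": "keep me"}
        self._snapshots = {}

    def snapshot(self, name):
        # Deep-copy so later mutations cannot leak into the saved state.
        self._snapshots[name] = copy.deepcopy(self.files)

    def restore(self, name):
        self.files = copy.deepcopy(self._snapshots[name])


@contextmanager
def sandboxed(vm, name="pre-task"):
    """Snapshot before the task and roll back afterwards, even if the task raises."""
    vm.snapshot(name)
    try:
        yield vm
    finally:
        vm.restore(name)


vm = ToyVM()
try:
    with sandboxed(vm) as box:
        del box.files["/home/user/notes.txt"]  # a destructive agent action
        raise RuntimeError("task failed midway")
except RuntimeError:
    pass

print(vm.files)  # the deleted file is back after the rollback
```

A real controller would snapshot a hypervisor image rather than a dictionary, but the contract is the same: whatever the agent does inside the block, the environment returns to a known state afterwards.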

4. Framework-Agnostic Compatibility

LiteCUA isn’t locked into a single agent paradigm. It seamlessly integrates with popular frameworks like AutoGen, Open Interpreter, MetaGPT, and ReAct, making adoption straightforward for teams already invested in agentic workflows.

Ideal Use Cases

LiteCUA shines in scenarios where natural language must reliably translate into real computer actions. Consider these applications:

- Automating repetitive desktop workflows: e.g., data entry across spreadsheets, form filling, or report generation.

- Building GUI-navigating agents: for tasks involving web browsers, office suites, IDEs, or design tools—environments where command-line automation falls short.

- Prototyping computer-use capabilities: without deploying heavy infrastructure or writing custom OS wrappers.

- Enhancing agent ecosystems: by providing a standardized, context-aware layer for OS interaction within larger multi-agent systems.

For researchers, LiteCUA offers a clean testbed to study how environmental contextualization improves agent reasoning. For developers, it’s a deployable solution that avoids reinventing the wheel.

How LiteCUA Solves Real Pain Points

Traditional approaches to computer-use automation suffer from three core issues:

- Semantic misalignment: LLMs reason in goals and intentions; OS interfaces expose pixels, clicks, and syscalls.

- Fragility: Small UI changes break hardcoded scripts or vision-based agents.

- Complexity: Heavy frameworks introduce debugging overhead and slow iteration.

LiteCUA tackles these by elevating the environment, not just the agent. The MCP server continuously maps the computer’s state into a structured, language-model-friendly context—like a real-time semantic layer over the OS. This reduces the cognitive load on the LLM, allowing it to focus on what to do rather than how to click. Meanwhile, the VM sandbox ensures failures stay contained, enabling safe experimentation.
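As a rough picture of what a "semantic layer" over the OS could look like, the sketch below flattens a small UI tree into indented text and tags actionable elements with numeric ids the model can refer to. The `UINode` structure, the `describe` function, and the id scheme are assumptions for illustration; the source does not specify how LiteCUA serializes state.

```python
from dataclasses import dataclass, field


@dataclass
class UINode:
    """A simplified accessibility-tree node (hypothetical shape)."""
    role: str
    name: str
    children: list["UINode"] = field(default_factory=list)
    actionable: bool = False


def describe(node, depth=0, counter=None):
    """Flatten a UI tree into indented lines, numbering actionable nodes in order."""
    if counter is None:
        counter = [0]  # shared mutable counter across the recursion
    label = f"{node.role} '{node.name}'"
    if node.actionable:
        counter[0] += 1
        label = f"[{counter[0]}] {label}"
    lines = ["  " * depth + label]
    for child in node.children:
        lines.extend(describe(child, depth + 1, counter))
    return lines


window = UINode("window", "Compose", [
    UINode("textbox", "To", actionable=True),
    UINode("textbox", "Body", actionable=True),
    UINode("button", "Send", actionable=True),
])

context = "\n".join(describe(window))
print(context)
# window 'Compose'
#   [1] textbox 'To'
#   [2] textbox 'Body'
#   [3] button 'Send'
```

With a context like this, the model can answer "click [3]" instead of guessing pixel coordinates, and the server resolves the id back to the underlying element.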

Getting Started with LiteCUA

Deploying LiteCUA is straightforward for those familiar with Python-based AI tooling:

1. Clone the repositories:

   - AIOS Kernel: https://github.com/agiresearch/AIOS

   - AIOS SDK (Cerebrum): https://github.com/agiresearch/Cerebrum

2. Set up your environment:

   - Use Python 3.10 or 3.11.

   - Install dependencies via uv or pip (separate requirements for GPU/CPU).

3. Configure your LLM:

   - Supports OpenAI, Anthropic, Deepseek, Gemini, Groq, Hugging Face, Ollama, and vLLM.

   - Define models and API keys in aios/config/config.yaml.

4. Prepare a virtualized GUI environment:

   - Required for full computer-use functionality (instructions provided in documentation).

5. Launch the AIOS kernel and run LiteCUA through the Cerebrum SDK.

Once running, you can issue natural language commands like “Download the latest report from Gmail and save it to Documents”—and watch LiteCUA execute the full sequence across browser and file system.
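Conceptually, executing such a command is an observe-decide-act loop that runs until the agent reports completion or exhausts a step budget. The sketch below shows that loop with stubbed components. It is not the Cerebrum SDK API: `run_agent`, the `DONE` sentinel, the action names, and the rule-based `decide` stub standing in for the LLM are all hypothetical.

```python
from typing import Callable


def run_agent(task: str,
              observe: Callable[[], str],
              decide: Callable[[str, str], str],
              act: Callable[[str], None],
              max_steps: int = 10) -> bool:
    """Observe-decide-act loop; returns True if the policy reports DONE in time."""
    for _ in range(max_steps):
        context = observe()             # contextualized view of the environment
        action = decide(task, context)  # in a real agent, this is the LLM call
        if action == "DONE":
            return True
        act(action)                     # executed inside the sandbox
    return False


# Stub environment: two flags stand in for browser and file-system state.
state = {"downloaded": False, "saved": False}


def observe() -> str:
    return f"downloaded={state['downloaded']} saved={state['saved']}"


def decide(task: str, context: str) -> str:
    # Rule-based stand-in for the model's reasoning.
    if "downloaded=False" in context:
        return "download_report"
    if "saved=False" in context:
        return "save_to_documents"
    return "DONE"


def act(action: str) -> None:
    if action == "download_report":
        state["downloaded"] = True
    elif action == "save_to_documents":
        state["saved"] = True


finished = run_agent("Download the latest report and save it to Documents",
                     observe, decide, act)
print(finished, state)
```

The step budget matters in practice: an agent that never reaches `DONE` should stop and hand control back rather than loop indefinitely.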

Limitations and Practical Considerations

While promising, LiteCUA isn’t a magic bullet:

- Requires a GUI-enabled VM: Full computer-use capabilities depend on a sandboxed desktop environment, which adds setup overhead.

- Part of an evolving ecosystem: AIOS is at v1.0; some features (e.g., cross-device sync, persistent user kernels) are still in development.

- Benchmark performance reflects real-world challenges: A 14.66% success rate on OSWorld is competitive for a lightweight agent, but it also means most benchmark tasks still fail, so LiteCUA is not ready for mission-critical tasks without human-in-the-loop oversight.

These constraints make LiteCUA best suited for prototyping, research, and semi-automated assistance rather than fully autonomous enterprise deployment—though its trajectory suggests rapid improvement.

Summary

LiteCUA redefines what’s possible for computer-use agents by shifting the burden of understanding from the model to the environment. Through AIOS’s MCP server architecture, it creates a contextual bridge between LLM reasoning and OS mechanics—enabling safer, simpler, and more effective automation.

If you’re building agents that need to truly interact with computers, not just simulate interaction, LiteCUA offers a lean foundation. With benchmark results that are competitive for a lightweight design, framework flexibility, and a clear architectural vision, it’s a compelling choice for anyone exploring the frontier of agentic computing.