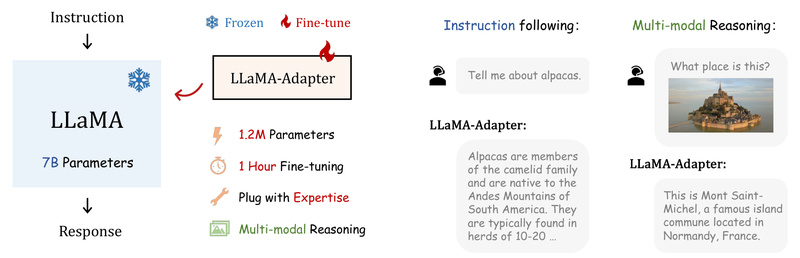

If you’re working on a project that requires a capable language model—but lack the GPU budget, time, or infrastructure for full fine-tuning—LLaMA-Adapter offers a compelling alternative. This lightweight adaptation method enables you to convert the frozen LLaMA model into a high-performing instruction-following or multimodal AI system by training only 1.2 million additional parameters, compared to LLaMA’s original 7 billion. Developed by researchers at OpenGVLab and accepted at ICLR 2024, LLaMA-Adapter delivers performance comparable to fully fine-tuned models like Alpaca while slashing training time to under one hour on 8×A100 GPUs and reducing storage needs to just ~4.7 MB.

At its core, LLaMA-Adapter introduces a simple yet powerful mechanism: zero-initialized attention with zero gating. This design ensures that during early training, the adapter injects no disruptive signals into the base model, preserving LLaMA’s rich pre-trained knowledge while gradually learning how to respond to new instructions. Whether you’re building a domain-specific assistant, a visual question-answering tool, or integrating LLMs into existing workflows via LangChain, LLaMA-Adapter provides a fast, low-cost, and reproducible path forward.

Why LLaMA-Adapter Stands Out

Ultra-Efficient Resource Usage

Traditional full fine-tuning of LLaMA-7B requires updating all 7 billion parameters—demanding significant memory, time, and computational power. In contrast, LLaMA-Adapter modifies only 1.2M trainable parameters, making it accessible even to teams with modest hardware. Training completes in less than one hour on 8 A100 GPUs, and the resulting adapter weights occupy just 4.7 MB on disk. This efficiency dramatically lowers the barrier to customization without sacrificing output quality.

Performance on Par with Full Fine-Tuning

Despite its minimal footprint, LLaMA-Adapter consistently matches or approaches the performance of models like Stanford Alpaca and Alpaca-LoRA across diverse tasks:

- Answering factual questions (e.g., “Who was Mexico’s president in 2019?”)

- Generating coherent advice (“How to develop critical thinking skills?”)

- Writing correct code (e.g., FizzBuzz, Fibonacci sequences)

These results demonstrate that strategic, sparse adaptation can rival brute-force parameter updates when guided by thoughtful architectural design.

Multimodal Capabilities in Later Versions

While LLaMA-Adapter V1 focuses on text-only instruction tuning, V2 and V2.1 extend the approach to multimodal settings. By integrating visual encoders like CLIP-ViT or ImageBind, these versions enable LLaMA to reason over images, generating accurate captions or answering science-related visual questions (e.g., on ScienceQA). Support for audio, video, depth, thermal, and even 3D point clouds is available in advanced variants like ImageBind-LLM—making LLaMA-Adapter a versatile foundation for cross-modal AI applications.

Ideal Use Cases for Practitioners

LLaMA-Adapter shines in scenarios where speed, cost, and simplicity are critical:

- Rapid Prototyping: Test instruction-following behavior in hours, not days.

- Domain-Specific Assistants: Fine-tune on internal documentation or customer support logs without retraining the entire model.

- Multimodal Applications: Build image captioning, visual QA, or document-understanding systems using LLaMA-Adapter V2 with minimal additional components.

- Integration with Existing Tooling: The project includes LangChain integration, enabling seamless deployment in agent-based or retrieval-augmented workflows.

For teams already using LLaMA-compatible checkpoints (including unofficial Hugging Face versions), adopting LLaMA-Adapter requires only a few lines of code to load adapter weights alongside the base model.

Getting Started: Simple and Reproducible

Setting up LLaMA-Adapter is straightforward:

- Create a Conda environment and install PyTorch.

- Install dependencies via

pip install -r requirements.txt. - Download the official LLaMA weights (subject to Meta’s license) and the pre-trained adapter from the project’s repository.

- Run inference with

torchrunand specify paths to both the base model and adapter.

For fine-tuning, the repository provides a minimal script that uses the 52K self-instruct examples from Stanford Alpaca. Key hyperparameters include:

adapter_layer: Number of transformer layers to adapt (e.g., 30)adapter_len: Length of learnable prefix tokens (e.g., 10)max_seq_len,batch_size, and learning rate tuned for stability

The training code is intentionally lean—designed for easy reproduction without complex dependencies.

Limitations and Practical Considerations

While powerful, LLaMA-Adapter comes with important constraints:

- Base Model Dependency: You must have access to LLaMA model weights, which are licensed by Meta and not freely redistributable.

- Architecture-Specific Design: The method is optimized for LLaMA’s architecture. Though the zero-init attention concept has shown promise on ViT and RoBERTa, the official implementation targets LLaMA-family models.

- Version Differences: V1 is text-only. Multimodal features (images, audio, etc.) require V2 or later, which depend on external encoders like CLIP or ImageBind.

- Not a Standalone Model: LLaMA-Adapter is an add-on—it cannot function without the original LLaMA backbone.

These considerations make it essential to verify compatibility with your target use case before committing development resources.

How It Compares to Alternatives

Compared to full fine-tuning (Alpaca) or LoRA-based methods (Alpaca-LoRA), LLaMA-Adapter offers a unique trade-off: near-equivalent output quality with drastically reduced resource demands.

For example, when asked to write a FizzBuzz program, LLaMA-Adapter produces a correct, clean loop—on par with Alpaca and Alpaca-LoRA, and far superior to naive prompting of base LLaMA. Similarly, in factual QA and creative writing tasks, its responses are structured, relevant, and coherent.

Where LoRA inserts low-rank matrices into attention layers, LLaMA-Adapter uses learnable prefix tokens combined with zero-gated attention—a design that inherently limits interference with pre-trained knowledge during early training. This leads to more stable convergence, especially with small datasets.

Thus, if your priority is fast iteration, low GPU cost, and minimal storage, LLaMA-Adapter is a top-tier choice among parameter-efficient tuning methods.

Summary

LLaMA-Adapter redefines what’s possible with lightweight model adaptation. By introducing just 1.2M trainable parameters and leveraging zero-initialized attention, it enables efficient, high-quality instruction tuning of LLaMA without full retraining. Its evolution into multimodal domains (V2+) further expands its utility for modern AI projects. For engineers, researchers, and technical decision-makers seeking a balance of performance, speed, and simplicity, LLaMA-Adapter offers a proven, open-source solution that delivers real-world value with minimal overhead.