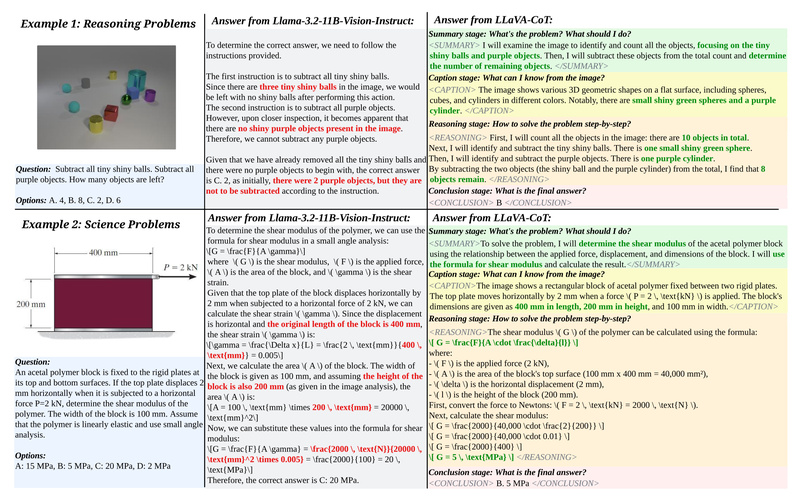

Most vision-language models (VLMs) today can describe what’s in an image—but they often falter when asked to reason about it. Faced with complex questions that require multi-step deduction—like “Subtract all tiny shiny balls and purple objects; how many remain?”—standard models frequently produce plausible-sounding but incorrect answers. This reasoning gap limits their reliability in real-world applications where accuracy and explainability matter.

Enter LLaVA-CoT: a vision-language model explicitly engineered to perform structured, autonomous reasoning. Unlike conventional approaches that rely on chain-of-thought prompting (which still depends on the model’s internal coherence), LLaVA-CoT decomposes reasoning into four explicit, sequential stages: summarization, visual interpretation, logical reasoning, and conclusion generation. The result? A model that doesn’t just guess—it thinks, step by step, and shows its work.

Remarkably, this 11-billion-parameter model—trained on just 100,000 annotated examples—outperforms much larger closed-source systems like GPT-4o-mini, Gemini-1.5-pro, and even Llama-3.2-90B-Vision-Instruct across six challenging multimodal reasoning benchmarks. For technical decision-makers who need transparent, accurate, and reproducible visual reasoning, LLaVA-CoT offers a compelling solution.

Why Structured Reasoning Matters in Vision-Language AI

Traditional VLMs treat visual question answering as a direct input-to-output mapping. While effective for simple queries (“What color is the car?”), this approach breaks down under complexity. Real-world tasks—whether in education, robotics, or industrial automation—often demand inference that combines visual observation with logical operations, domain knowledge, and sequential deduction.

LLaVA-CoT tackles this by mimicking human problem-solving: it first clarifies the task, then extracts relevant visual facts, applies logical steps, and finally formulates a justified answer. This structure not only improves accuracy but also provides explainability—a critical feature for debugging, auditing, and user trust.

For example, when solving a physics problem involving shear modulus from a diagram, LLaVA-CoT correctly identifies dimensions, applies formulas with proper unit conversions, and arrives at the right answer—while clearly documenting each step. In contrast, baseline models may misuse geometric assumptions or miscalculate areas, leading to confident but wrong conclusions.

Core Innovations That Drive Performance

Four-Stage Autonomous Reasoning Pipeline

LLaVA-CoT operates through a fixed, interpretable workflow:

- Summary Stage: Clarifies the problem and outlines the solution strategy.

- Caption (Visual Interpretation) Stage: Extracts relevant visual elements from the image.

- Reasoning Stage: Executes logical or mathematical steps based on the summarized task and observed data.

- Conclusion Stage: Delivers a final answer, often with option selection or short justification.

This pipeline is built into the model’s training objective, ensuring consistent reasoning behavior without requiring special prompting at inference time.

SWIRES: Efficient Test-Time Scaling

To further boost performance without retraining, LLaVA-CoT introduces Stage-Wise Inference with Retracing Search (SWIRES). This method explores alternative reasoning paths during inference and selects the most coherent one, effectively enabling “test-time compute scaling.” It improves accuracy while remaining computationally feasible—ideal for deployment scenarios where quality matters more than raw speed.

Strong Results with Modest Resources

Trained on only 100k samples from a curated dataset (LLaVA-CoT-100k), the model achieves state-of-the-art results on benchmarks like AI2D, ScienceQA, and complex visual reasoning suites. Its ability to surpass 90B-parameter models demonstrates that structured reasoning architecture can outweigh sheer scale—a key insight for teams working with limited data or compute.

Practical Applications Where LLaVA-CoT Excels

LLaVA-CoT is particularly valuable in domains that demand both visual understanding and logical rigor:

- STEM Education Tools: Tutors that solve physics, chemistry, or geometry problems from diagrams while showing step-by-step reasoning.

- Industrial Visual Inspection: Systems that diagnose defects by combining image analysis with rule-based logic (e.g., “If component A is misaligned and temperature exceeds X, flag as failure”).

- Explainable Robotics: Autonomous agents that justify navigation or manipulation decisions based on visual scenes.

- AI Assistants for Technical Work: Tools that help engineers or analysts interpret schematics, charts, or experimental setups with traceable logic.

In all these cases, the ability to show the reasoning path—not just the final answer—enables users to verify, learn from, or correct the model’s output.

Getting Started: Integration and Customization

LLaVA-CoT is designed for practical adoption:

- Pretrained Weights: Available on Hugging Face as

Xkev/Llama-3.2V-11B-cot. - Inference: Compatible with standard

Llama-3.2-11B-Vision-Instructpipelines—no major code changes needed. - Fine-Tuning: Supports training via

llama-recipesor any framework that handles the base Llama-3.2 Vision model. A sample command and dataset loader are provided in the repository. - Dataset: The full

LLaVA-CoT-100kdataset is publicly available for research or domain adaptation.

This compatibility lowers the barrier to entry, allowing teams to integrate LLaVA-CoT into existing multimodal systems with minimal friction.

Limitations and Strategic Considerations

While powerful, LLaVA-CoT has realistic constraints:

- It inherits the hardware and licensing requirements of the Llama-3.2-11B-Vision-Instruct base model, including Meta’s usage terms.

- Reasoning quality depends on clear visual input and well-formulated questions; ambiguous images or poorly phrased prompts may degrade performance.

- The four-stage pipeline introduces slightly higher latency than direct-answer models—acceptable for quality-critical applications but a trade-off for real-time use.

- The 100k training set, while diverse, may not cover highly specialized domains (e.g., medical radiology or satellite analytics) without additional fine-tuning.

Teams should evaluate LLaVA-CoT on their specific use cases—but for general-purpose, reasoning-intensive multimodal tasks, it sets a new bar for open, transparent, and accurate visual AI.

Summary

LLaVA-CoT redefines what open-source vision-language models can do by embedding structured, step-by-step reasoning directly into the model architecture. It delivers superior accuracy, full explainability, and strong performance with modest resources—making it an ideal choice for technical leaders who need trustworthy, auditable, and high-performing multimodal reasoning in education, industry, and research. With public code, weights, and data, adoption is straightforward, and the potential for customization is high.