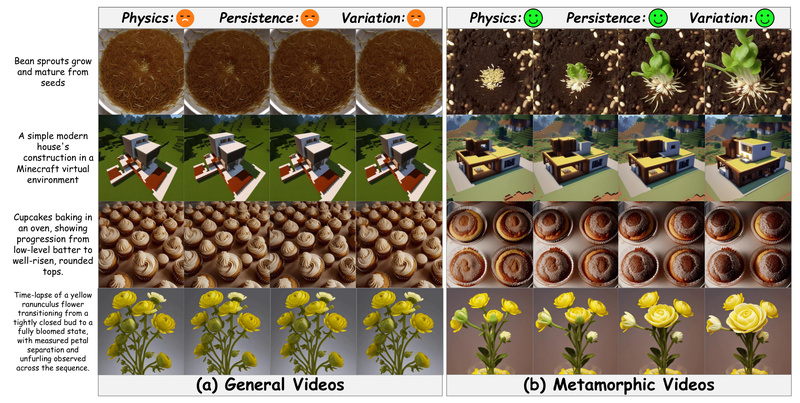

Most text-to-video (T2V) models today excel at generating short clips of people walking, cars driving, or birds flying—but they struggle to depict slow, continuous, physics-driven transformations like a seed sprouting into a plant, an ice cube melting, or a house being built from scratch. These “metamorphic” processes involve dramatic visual changes over time and encode rich physical knowledge about how the real world evolves.

MagicTime directly addresses this gap. Developed by PKU-YuanGroup and accepted by IEEE TPAMI, MagicTime is a specialized T2V framework designed not just to generate videos, but to act as a metamorphic simulator—accurately rendering long-term, dynamic, and physically plausible transformations from textual prompts. By leveraging time-lapse videos as a learning signal, MagicTime teaches models to understand and reproduce the essence of real-world change.

Why General Video Generators Fall Short

Standard T2V models are trained on general video datasets that prioritize short-duration, low-variation motion—think of people waving or vehicles moving. As a result, they often produce videos where objects barely move or change shape, even when the prompt describes a profound transformation. This limitation stems from a lack of explicit modeling of physical dynamics and temporal persistence.

Time-lapse videos, by contrast, compress hours, days, or even years of real-world change into seconds. They inherently contain strong visual cues about causality, material properties, and object evolution—making them ideal for teaching models about physical realism. MagicTime taps into this signal with purpose-built methods and a curated dataset to unlock this capability in generative models.

Core Innovations Behind MagicTime

MagicTime introduces three key technical components that collectively enable high-quality metamorphic video generation:

1. MagicAdapter: Decoupling Spatial and Temporal Learning

Rather than treating video generation as a unified spatiotemporal task, MagicTime uses MagicAdapter to separate spatial (appearance) and temporal (motion/evolution) learning. This decoupling allows the model to better absorb physical dynamics from time-lapse data without corrupting object appearance. It effectively “retrofits” pre-trained T2V backbones like Animatediff or Open-Sora-Plan to handle metamorphic content.

2. Dynamic Frames Extraction

Traditional video sampling strategies assume relatively uniform motion. But in time-lapse sequences—like a flower blooming or clouds forming—the rate and magnitude of change vary dramatically. MagicTime’s Dynamic Frames Extraction strategy adaptively selects frames that best capture these non-uniform transformations, ensuring the training process focuses on the most informative moments of change.

3. Magic Text-Encoder: Understanding Metamorphic Prompts

Prompts for metamorphic videos often describe slow, multi-stage processes (“a tightly closed bud transitions to a fully bloomed flower over several days”). Standard text encoders may not fully grasp the temporal scope or physical implications of such descriptions. MagicTime enhances the text encoder with domain-specific pretraining on time-lapse captions, improving alignment between linguistic intent and visual output.

Purpose-Built Data: The ChronoMagic Dataset

MagicTime is trained on ChronoMagic, a high-quality dataset of 2,265 time-lapse video-text pairs, with captions generated by GPT-4V. Each sample depicts a real-world metamorphic process—ranging from biology (germination) to construction (Minecraft builds) to thermodynamics (melting ice). This dataset is publicly available on Hugging Face, enabling reproducibility and further research.

Additionally, the team released ChronoMagic-Bench, a standardized evaluation set with 1,649 samples, and larger variants (ChronoMagic-Pro and ProH) with hundreds of thousands of examples for scaling.

Practical Use Cases

MagicTime excels in scenarios where realistic, long-term transformation is the goal:

- Education & Science Communication: Visualize plant growth, chemical reactions, or geological changes without filming real experiments.

- Creative Content: Generate compelling time-lapse-style sequences for storytelling, advertising, or artistic projects.

- Simulation & Gaming: Simulate in-game construction, terrain evolution, or character aging in virtual environments like Minecraft.

- Prototyping & Design: Preview how materials weather, structures assemble, or products evolve over time.

Importantly, MagicTime is not intended for general-purpose video generation (e.g., “a dog running in a park”). Its strength lies in prompts that explicitly describe metamorphic processes.

Getting Started: Easy Inference with Flexible Styles

MagicTime is open-source and designed for accessibility:

- Clone the lightweight repo (under 1 MB) using

git clone --depth=1. - Set up the environment with Python 3.10 and install dependencies.

- Download pre-trained weights from Hugging Face or WiseModel.

- Run inference via CLI or the included Gradio web UI (

python app.py).

MagicTime supports multiple visual styles through configuration files:

RealisticVision.yaml: Photorealistic outputsToonYou.yaml: Stylized, anime-like resultsRcnzCartoon.yaml: Cartoon aesthetics

Users can also run batch inference from a text file of custom prompts, making it ideal for content creators or researchers testing multiple scenarios.

Note: Due to floating-point non-determinism across hardware, identical seeds may produce slightly different results on different machines—a known behavior in generative models, not a bug.

Limitations and Future Work

While MagicTime sets a new standard for metamorphic video generation, keep these considerations in mind:

- It is specialized: Don’t expect it to outperform general T2V models on standard action-based prompts.

- Training code is still upcoming (as of mid-2025), so fine-tuning on custom time-lapse data requires patience or adaptation.

- Output quality depends heavily on prompt specificity—vague prompts yield less coherent transformations.

Summary

MagicTime solves a critical but overlooked problem in generative AI: the inability of most T2V models to simulate real-world physical change over time. By combining a novel architecture (MagicAdapter), adaptive data processing (Dynamic Frames Extraction), enhanced language understanding (Magic Text-Encoder), and a purpose-built dataset (ChronoMagic), it enables the generation of videos that are not just visually appealing—but physically meaningful.

For researchers, educators, and creators who need to visualize slow, continuous transformations from text, MagicTime offers a practical, open-source, and state-of-the-art solution. If your work involves simulating how things truly change in the real world, MagicTime is likely the right tool for the job.