As machine learning models grow increasingly complex, from deep convolutional networks to attention-based architectures, the ability to clearly communicate how they work becomes just as important as building them. Yet many practitioners lack the time or expertise to create compelling visual explanations using traditional animation tools. Enter ManimML, an open-source Python library that bridges this gap by letting ML engineers, researchers, and educators generate high-quality animations of neural networks directly from code, using syntax that feels familiar to anyone who has used PyTorch or TensorFlow.

ManimML eliminates the steep learning curve of animation software by treating visualization as a natural extension of model definition. Instead of hand-crafting visuals frame by frame, you describe your architecture in code—and ManimML automatically composes and animates it.

Why Visual Explanations Matter in Machine Learning

Machine learning is no longer confined to research labs; it powers real-world applications in healthcare, finance, robotics, and more. But with wider adoption comes a communication challenge: stakeholders—from students to executives—often struggle to grasp how models process data, especially when layers transform inputs through convolutions, pooling, or nonlinear activations.

Static diagrams fall short when illustrating dynamic processes like forward passes or dropout regularization. Video animations, on the other hand, excel at showing change over time—making them ideal for demonstrating how signals flow through a network. However, creating such animations traditionally required either bespoke programming or mastery of complex design tools like Blender or Adobe After Effects.

ManimML solves this by letting you stay in your programming environment, using the same mental model you already apply when coding models.

A Code-First Approach That Feels Familiar

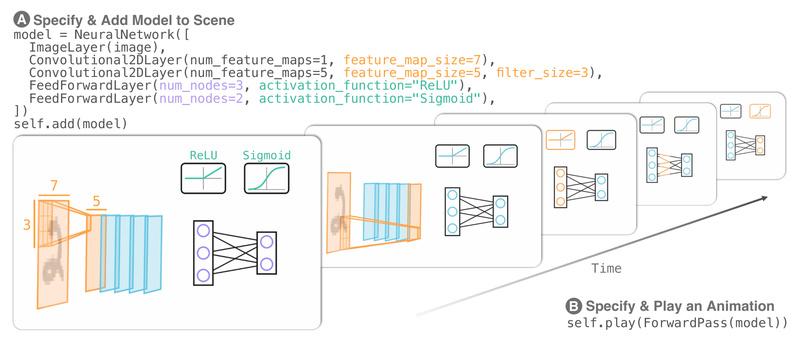

ManimML’s core innovation is its programmatic, code-native interface. You don’t draw boxes or arrows—you declare layers the way you would in a deep learning framework:

    nn = NeuralNetwork([
        Convolutional2DLayer(1, 7, 3),
        Convolutional2DLayer(3, 5, 3),
        FeedForwardLayer(3),
    ])

This syntax mirrors the list-of-layers style of a PyTorch nn.Sequential model. Once declared, ManimML automatically renders a visual representation of the network, and inside a Manim scene's construct method you can animate its forward pass with a single method call:

    forward_pass = nn.make_forward_pass_animation()
    self.play(forward_pass)
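The integers in that declaration follow ordinary convolution arithmetic. Assuming the positional arguments of Convolutional2DLayer are (number of feature maps, feature-map size, filter size), which is how the ManimML README examples appear to use them, a 7x7 map convolved with a 3x3 filter at stride 1 and no padding shrinks to 5x5, matching the second layer. The plain-Python helper below is a sketch for sanity-checking a declaration before rendering; conv_output_size is a hypothetical name, not part of ManimML, and ManimML may or may not validate these sizes itself.

```python
def conv_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one spatial axis of a convolution or pooling window.

    Standard formula: floor((size + 2 * padding - kernel) / stride) + 1.
    (Hypothetical helper for illustration; not a ManimML function.)
    """
    padded = size + 2 * padding
    if kernel > padded:
        raise ValueError(f"kernel {kernel} does not fit padded input {padded}")
    return (padded - kernel) // stride + 1


# Convolutional2DLayer(1, 7, 3) -> Convolutional2DLayer(3, 5, 3): a 7x7 map
# convolved with a 3x3 filter (stride 1, no padding) yields a 5x5 map.
print(conv_output_size(7, kernel=3))  # 5

# The same formula covers pooling, e.g. a 2x2 window with stride 2 on a 6x6 map.
print(conv_output_size(6, kernel=2, stride=2))  # 3
```

If a declared feature-map size disagrees with this arithmetic, the animation may still render, but it will depict shapes that no real network would produce.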

This design philosophy, leveraging existing programming knowledge instead of requiring new design skills, makes ManimML especially accessible to ML practitioners who want to explain their work without becoming animators.

Key Features: From Feedforward to Dropout

ManimML supports a growing set of neural network components, each designed to be composable and animated out of the box:

- Feedforward Networks: Define fully connected layers with FeedForwardLayer(num_nodes), and optionally attach activation functions like ReLU or Sigmoid.

- Convolutional Neural Networks (CNNs): Specify feature maps, kernel sizes, and spatial dimensions using Convolutional2DLayer.

- Image Inputs: Prepend an ImageLayer to show how raw pixels enter the network, which is ideal for vision demos.

- Max Pooling: Visualize downsampling with MaxPooling2DLayer, showing how spatial resolution shrinks.

- Activation Functions: Render activation curves over layers to illustrate non-linear transformations.

- Dropout: Simulate stochastic neuron deactivation during training with a dedicated dropout animation.
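What the dropout animation depicts can be sketched in plain Python. The snippet below is the standard inverted-dropout formulation, not ManimML code: each neuron is dropped independently with probability rate, and survivors are scaled by 1 / (1 - rate) so the expected activation is unchanged. The function names are hypothetical and chosen for illustration.

```python
import random


def dropout_mask(num_nodes: int, rate: float, rng: random.Random) -> list[bool]:
    """True marks a neuron that stays active; each is dropped with probability `rate`."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    return [rng.random() >= rate for _ in range(num_nodes)]


def apply_dropout(activations: list[float], rate: float, rng: random.Random) -> list[float]:
    """Inverted dropout: zero the dropped units, scale survivors by 1 / (1 - rate)."""
    mask = dropout_mask(len(activations), rate, rng)
    scale = 1.0 / (1.0 - rate)
    return [a * scale if keep else 0.0 for a, keep in zip(activations, mask)]


# Seeded generator so the same neurons are dropped on every run.
rng = random.Random(0)
print(dropout_mask(5, rate=0.25, rng=rng))
print(apply_dropout([1.0, 2.0, 3.0, 4.0, 5.0], rate=0.25, rng=rng))
```

Because a new mask is drawn on every call, each training step deactivates a different subset of neurons, which is the "stochastic" behavior a dropout animation is meant to convey.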

These components compose freely. For example, you can build a CNN that takes an image, applies ReLU activations, performs max pooling, and ends with a feedforward classifier, then animate the entire pipeline in one go.
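To make concrete what the ReLU and max-pooling stages of such a pipeline do to the data, here is a plain-Python sketch that is independent of ManimML. It applies ReLU and then 2x2 max pooling with stride 2 to a hypothetical 4x4 feature map: ReLU leaves the shape unchanged, while pooling halves each spatial dimension, which is the shrinking resolution a MaxPooling2DLayer animation shows.

```python
Grid = list[list[float]]


def relu(grid: Grid) -> Grid:
    """Element-wise ReLU: negative values become 0, shape is unchanged."""
    return [[max(0.0, x) for x in row] for row in grid]


def max_pool2d(grid: Grid, kernel: int = 2, stride: int = 2) -> Grid:
    """Max over each kernel x kernel window; no padding, leftover edge cells are dropped."""
    rows, cols = len(grid), len(grid[0])
    return [
        [
            max(grid[r + i][c + j] for i in range(kernel) for j in range(kernel))
            for c in range(0, cols - kernel + 1, stride)
        ]
        for r in range(0, rows - kernel + 1, stride)
    ]


# Hypothetical 4x4 feature map produced by some convolution.
feature_map = [
    [1.0, -2.0, 3.0, 0.5],
    [-1.0, 4.0, -3.0, 2.0],
    [0.0, -0.5, 1.5, -1.0],
    [2.5, 1.0, -2.0, 0.0],
]
pooled = max_pool2d(relu(feature_map))
print(pooled)  # [[4.0, 3.0], [2.5, 1.5]]
```

Each output cell keeps only the strongest response in its 2x2 window, so the 4x4 map becomes 2x2 while the most prominent activations survive.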

Who Should Use ManimML?

ManimML is especially valuable for:

- Educators creating lecture videos or interactive tutorials to help students see how neural networks operate.

- Researchers preparing conference talks or supplementary materials to clarify novel architectures.

- Students learning deep learning concepts by visualizing textbook models in action.

- Engineers building internal demos to explain model behavior to cross-functional teams.

If you’ve ever wished you could “show, not tell” how your model works—without spending weeks learning animation software—ManimML is built for you.

Getting Started Is Straightforward

Installation requires only two steps:

- Install Manim Community Edition (not the original 3Blue1Brown version).

- Run pip install manim_ml (or install from source for the latest features).
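Because ManimML needs Manim Community Edition rather than the legacy 3Blue1Brown package, it can help to confirm which one your Python environment actually sees. The sketch below only checks whether the relevant top-level modules are importable; it assumes the usual module names (manim for Manim Community, manimlib for the legacy version published on PyPI as manimgl, manim_ml for ManimML) and does not check versions.

```python
import importlib.util


def check_manim_install() -> dict[str, bool]:
    """Report which Manim-related top-level modules are importable.

    Assumed module names:
      - "manim":    Manim Community Edition (pip install manim)
      - "manimlib": the legacy 3Blue1Brown version (pip install manimgl)
      - "manim_ml": ManimML (pip install manim_ml)
    """
    names = ("manim", "manimlib", "manim_ml")
    # find_spec returns None for a missing top-level module without importing it.
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    status = check_manim_install()
    for name, found in status.items():
        print(f"{name:10s} {'found' if found else 'missing'}")
    if not status["manim"]:
        print("Manim Community Edition not found; install it before manim_ml.")
```

Having manimlib installed alongside manim is not necessarily a problem, but if only manimlib is present, ManimML will not be able to import the library it depends on.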

Then, define a scene:

    from manim import *
    from manim_ml.neural_network import NeuralNetwork, FeedForwardLayer

    class BasicScene(Scene):
        def construct(self):
            # A small fully connected network with 3, 5, and 3 nodes
            nn = NeuralNetwork([
                FeedForwardLayer(3),
                FeedForwardLayer(5),
                FeedForwardLayer(3),
            ])
            # Draw the network, then animate a forward pass through it
            self.add(nn)
            self.play(nn.make_forward_pass_animation())

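Save the file as example.py and render it with manim -pql example.py for a quick low-resolution preview (-p opens the video when rendering finishes, -ql selects low quality), or with manim -pqh example.py for a high-resolution render. That's it: your first animated neural network is ready.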

What to Keep in Mind

While powerful, ManimML is still under active development. A few considerations:

- It depends on Manim Community, so ensure you’re not using the legacy version.

- Some advanced features may change as the API evolves.

- Documentation is improving but may lag behind new capabilities—checking the GitHub repo and examples is recommended.

These caveats are typical for early-stage open-source tools, but the core functionality (feedforward layers, CNNs, and forward-pass animation) is already usable for educational and presentation work.

How ManimML Fits Into Your Workflow

ManimML shines when integrated into existing practices:

- Reuse architecture definitions from your research code to generate explanatory visuals.

- Rapidly prototype animations for blog posts, grant proposals, or class materials.

- Combine primitive visualizations (e.g., convolution + ReLU + pooling) into unified narratives.

Because it’s code-based, your animations are reproducible, version-controllable, and shareable—just like your models.

Summary

ManimML addresses a critical gap in the machine learning ecosystem: the ability to communicate complex architectures clearly and dynamically, without requiring design expertise. By enabling animations to be generated directly from intuitive, PyTorch-style code, it empowers practitioners to focus on explanation rather than engineering visuals from scratch. Whether you’re teaching, researching, or building, ManimML lowers the barrier to creating professional, engaging visualizations that bring machine learning to life.